🏋️♂️🤸♀️Edge#61: Understanding AutoML and its Different Disciplines

TheSequence is the best way to build and reinforce your knowledge about machine learning and AI

In this issue:

we discuss the concept of AutoML and its different disciplines;

we review the original AutoML paper;

we explore Amazon AutoGluon which brings deep learning to AutoML.

Enjoy the learning!

💡 ML Concept of the Day: Understanding AutoML and its Different Disciplines

With this issue of TheSequence Edge, we are starting a new series focused on automated machine learning, better known as AutoML. Interestingly enough, we covered AutoML in one of the first issues of this newsletter. However, given the fast evolution of the AutoML space and the growing interest it is attracting within the data science community, we thought it worth dedicating a few editions of TheSequence to the concepts, technologies, and research relevant to the space.

The fascination with AutoML is rooted in the idea of using machine learning to create better machine learning models. Conceptually, AutoML is a collection of methods focused on the selection and optimization of machine learning models. Based on that definition, you might think the term is rather broad and intersects with several areas of machine learning. Indeed, there are many “loose definitions” of AutoML that can cause confusion. It is important to realize that AutoML is not one method but a large collection of techniques spanning several machine learning schools. In general, most AutoML methods fall into one or more of the following categories (all of which have been discussed in previous issues of TheSequence):

Hyperparameter Optimization: Methods that find the best combination of hyperparameters for a machine learning architecture.

Meta-Learning: Methods that learn how to learn, using prior experience with other tasks to master new tasks more quickly.

Neural Architecture Search: Methods that focus on finding the best machine learning architecture for a given problem.
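As a concrete reference point for the first category, here is a minimal random-search sketch in Python. Random search is only one of the simplest hyperparameter optimization strategies, and `toy_validation_loss` is a hypothetical stand-in for actually training and validating a model; the two hyperparameters and their ranges are illustrative assumptions.

```python
import math
import random


def toy_validation_loss(learning_rate: float, num_layers: int) -> float:
    """Hypothetical stand-in for training a model and measuring its validation loss.

    The minimum (0.0) sits at learning_rate=1e-2 and num_layers=4.
    """
    return (math.log10(learning_rate) + 2) ** 2 + 0.1 * (num_layers - 4) ** 2


def random_search(num_trials: int, seed: int = 0):
    """Sample hyperparameter configurations at random and keep the best one."""
    rng = random.Random(seed)
    best_config, best_loss = None, math.inf
    for _ in range(num_trials):
        config = {
            # Learning rates are commonly sampled on a log scale.
            "learning_rate": 10 ** rng.uniform(-5, 0),
            "num_layers": rng.randint(1, 8),
        }
        loss = toy_validation_loss(**config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss


if __name__ == "__main__":
    config, loss = random_search(num_trials=200)
    print(config, round(loss, 4))
```

Smarter HPO strategies, such as Bayesian optimization, replace the random sampler, but the loop of propose, evaluate, and keep the best stays the same.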

Other machine learning areas, such as transfer learning, can also be seen as forms of AutoML. There is significant overlap between these methods, which is often the cause of confusion. In general, hyperparameter optimization methods take one or more predefined architectures and search for the hyperparameter configuration that performs best on a given problem. Neural architecture search approaches typically rely on reinforcement learning or evolutionary algorithms to generate new architectures for a given problem. Finally, meta-learning methods start with a series of machine learning models and observe how they learn in order to master a new task. While the overlap can cause confusion, it is also what makes AutoML one of the most fascinating areas of modern machine learning, and one whose techniques are becoming ubiquitous in deep learning platforms.
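The evolutionary flavor of neural architecture search can be sketched in a few lines. The example below is loosely modeled on aging (regularized) evolution: repeatedly mutate the best architecture from a random sample of the population and retire the oldest member. Architectures are represented as hypothetical tuples of layer widths, and `toy_fitness` is an invented proxy for training and evaluating each candidate.

```python
import random
from collections import deque

WIDTHS = (16, 32, 64, 128)  # hypothetical per-layer width options


def toy_fitness(arch):
    """Hypothetical proxy for "train this architecture and return its accuracy".

    Fitness peaks at 0 for three layers of width 64.
    """
    depth_penalty = abs(len(arch) - 3)
    width_penalty = sum(abs(w - 64) for w in arch) / 64
    return -(depth_penalty + width_penalty)


def mutate(arch, rng):
    """Return a copy of arch with one layer changed, added, or removed."""
    layers = list(arch)
    op = rng.choice(("change", "add", "remove"))
    if op == "add":
        layers.insert(rng.randrange(len(layers) + 1), rng.choice(WIDTHS))
    elif op == "remove" and len(layers) > 1:
        layers.pop(rng.randrange(len(layers)))
    else:  # "change", or "remove" on a single-layer network
        layers[rng.randrange(len(layers))] = rng.choice(WIDTHS)
    return tuple(layers)


def evolve(cycles=500, population_size=20, sample_size=5, seed=0):
    """Aging evolution: mutate the best of a random sample, then drop the oldest member."""
    rng = random.Random(seed)
    population = deque()
    history = []
    for _ in range(population_size):
        arch = tuple(rng.choice(WIDTHS) for _ in range(rng.randint(1, 6)))
        population.append((arch, toy_fitness(arch)))
        history.append(population[-1])
    for _ in range(cycles):
        parent = max(rng.sample(list(population), sample_size), key=lambda item: item[1])
        child = mutate(parent[0], rng)
        population.append((child, toy_fitness(child)))
        history.append(population[-1])
        population.popleft()  # the oldest architecture leaves the population
    return max(history, key=lambda item: item[1])


if __name__ == "__main__":
    best_arch, best_fitness = evolve()
    print(best_arch, best_fitness)
```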

🔎 ML Research You Should Know: The Original AutoML Paper

In Neural Architecture Search with Reinforcement Learning, researchers from Google outlined the principles of what today we consider the modern AutoML approach.

The objective: Use reinforcement learning to automate the generation of machine learning architectures.

Why is it so important: Many of the ideas behind modern AutoML stacks are rooted in this paper.

Diving deeper: Most researchers point to this Google paper as the original AutoML paper, but it should really be considered the original neural architecture search (NAS) paper. In it, Google researchers describe using a recurrent neural network (RNN) to generate the model descriptions of neural networks for a given problem, and then training that RNN with reinforcement learning to maximize the expected validation accuracy of the architectures it generates. They called the approach neural architecture search (NAS), but the paper has also come to be associated with the beginning of the AutoML school. So don’t feel bad for being confused 😉.
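The paper describes the controller emitting, for each convolutional layer, a sequence of tokens for filter height, filter width, stride height, stride width, and number of filters. The sketch below shows how such a token sequence could be decoded into layer specifications; the option values in `CHOICES` and the `decode`/`ConvLayer` names are illustrative assumptions, not code from the paper.

```python
from dataclasses import dataclass

# Per-layer decisions in the order the controller emits them.
# The option values are illustrative assumptions.
CHOICES = {
    "filter_height": (1, 3, 5, 7),
    "filter_width": (1, 3, 5, 7),
    "stride_height": (1, 2, 3),
    "stride_width": (1, 2, 3),
    "num_filters": (24, 36, 48, 64),
}
DECISIONS = tuple(CHOICES)


@dataclass(frozen=True)
class ConvLayer:
    filter_height: int
    filter_width: int
    stride_height: int
    stride_width: int
    num_filters: int


def decode(token_ids):
    """Turn a flat sequence of choice indices into a list of layer specs."""
    if len(token_ids) % len(DECISIONS) != 0:
        raise ValueError("sequence length must be a multiple of the decisions per layer")
    layers = []
    for start in range(0, len(token_ids), len(DECISIONS)):
        chunk = token_ids[start:start + len(DECISIONS)]
        values = {name: CHOICES[name][idx] for name, idx in zip(DECISIONS, chunk)}
        layers.append(ConvLayer(**values))
    return layers


if __name__ == "__main__":
    # Ten tokens describe a two-layer network.
    for layer in decode([1, 1, 0, 0, 3, 2, 2, 1, 1, 1]):
        print(layer)
```

Framing an architecture as a token sequence is what lets a sequence model such as an RNN act as the architecture generator.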

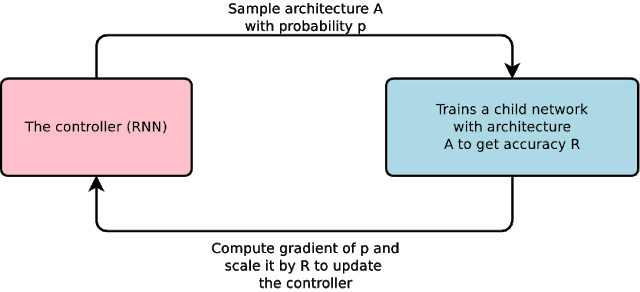

Looking more closely at the method, Google proposes a two-network setup. A “controller” network proposes an architecture, and a “child” network with that architecture is trained and evaluated on a specific task. The child’s validation accuracy is fed back to the controller as a reward, and the controller uses reinforcement learning to improve its architecture proposals for the next round. The process is repeated many times, until the controller learns to assign higher probabilities to the regions of the architecture space that score well on the original task.

Image credit: the original paper
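The controller/child loop can be sketched as a toy policy-gradient search. The paper trains its controller with REINFORCE, using an exponential moving average of past accuracies as a baseline; this sketch keeps that update rule but, as a simplification, replaces the RNN controller with one independent softmax per layer and replaces child training with a hypothetical `train_child` scoring function.

```python
import math
import random

OPTIONS = (1, 3, 5, 7)  # hypothetical filter sizes the controller can pick per layer
NUM_LAYERS = 3


def softmax(logits):
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_child(architecture):
    """Hypothetical stand-in for training a child network and returning its
    validation accuracy; here the score is highest when every layer uses size 5."""
    return 1.0 / (1.0 + sum(abs(k - 5) for k in architecture))


def search(steps=2000, lr=0.2, baseline_decay=0.9, seed=0):
    rng = random.Random(seed)
    # Simplification: the paper's controller is an RNN; here each layer's
    # decision has its own independent softmax over OPTIONS.
    logits = [[0.0] * len(OPTIONS) for _ in range(NUM_LAYERS)]
    baseline = None
    for _ in range(steps):
        probs = [softmax(row) for row in logits]
        # 1) The controller samples an architecture.
        choices = [rng.choices(range(len(OPTIONS)), weights=p)[0] for p in probs]
        # 2) The child is "trained"; its accuracy becomes the reward.
        reward = train_child([OPTIONS[c] for c in choices])
        if baseline is None:
            baseline = reward
        advantage = reward - baseline
        # Exponential moving average of past rewards, used to reduce variance.
        baseline = baseline_decay * baseline + (1 - baseline_decay) * reward
        # 3) REINFORCE update: d log p(choice) / d logit_j = 1[j == choice] - p_j.
        for row, c, p in zip(logits, choices, probs):
            for j in range(len(OPTIONS)):
                row[j] += lr * advantage * ((1.0 if j == c else 0.0) - p[j])
    # Return the most probable architecture under the learned policy.
    return [OPTIONS[max(range(len(OPTIONS)), key=row.__getitem__)] for row in logits]


if __name__ == "__main__":
    print(search())
```

Each iteration mirrors the figure: sample an architecture, score the child, and nudge the controller toward choices that beat the baseline. In the real system, every call to `train_child` means training a full network, which is what makes this style of search expensive.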

Google applied this reinforcement learning NAS approach to the CIFAR-10 image classification benchmark and to language modeling on the Penn Treebank dataset, and the resulting models rivaled state-of-the-art neural networks designed by humans. Since then, this approach has become a cornerstone of Google’s work in the AutoML space and has sparked many new research ideas for automating the creation of machine learning models.

🤖 ML Technology to Follow: Amazon AutoGluon Brings Deep Learning to AutoML

Why should I know about this: AutoGluon is one of the most robust AutoML stacks on the market for generating deep learning models.

What is it: AutoML stacks are a great addition to your arsenal of deep learning toolkits, but be prepared for a frustrating journey if you try to apply them to real-world scenarios, especially if you like deep learning. Almost paradoxically, one of the main limitations of AutoML platforms in real-world applications is their limited support for deep neural networks. I call it paradoxical because many AutoML methods are themselves deep learning-based, yet most of the platforms on the market operate using simpler machine learning models. A group of researchers from Amazon Web Services (AWS) AI Labs set out to address this challenge. The result was AutoGluon, an open-source framework that automates the creation of deep learning models with a few lines of code.

Functionally, AutoGluon is an open-source library for developers who build machine learning applications on image, text, or tabular datasets. It aims to make AutoML easy to use and easy to extend, with a focus on deep learning and real-world applications. The framework is intended for both machine learning beginners and advanced experts. The first version of AutoGluon includes the following capabilities, among others:

Quickly prototype deep learning solutions for your data with a few lines of code.

Leverage automatic hyperparameter tuning, model selection/architecture search, and data processing.

Automatically utilize state-of-the-art deep learning techniques without expert knowledge.

Easily improve existing bespoke models and data pipelines, or customize AutoGluon for your use case.
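Automatic model selection, one of the capabilities listed above, boils down to fitting several candidate models and keeping the one that scores best on held-out data. The sketch below illustrates that idea with three deliberately simple, hypothetical candidates on one-dimensional regression; it is not AutoGluon's API, which is documented in the project repository.

```python
import random
import statistics


def fit_mean(xs, ys):
    """Baseline candidate: always predict the training mean."""
    mean = statistics.fmean(ys)
    return lambda x: mean


def fit_linear(xs, ys):
    """Ordinary least squares for y = a * x + b."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = my - a * mx
    return lambda x: a * x + b


def fit_nearest_neighbor(xs, ys):
    """Predict the target of the closest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


# Hypothetical candidate pool; a real AutoML system searches far richer models.
CANDIDATES = {"mean": fit_mean, "linear": fit_linear, "1-nn": fit_nearest_neighbor}


def select_model(xs, ys, holdout_fraction=0.25, seed=0):
    """Fit every candidate on a training split and pick the lowest holdout MSE."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    cut = int(len(idx) * (1 - holdout_fraction))
    train, valid = idx[:cut], idx[cut:]
    scores = {}
    for name, fit in CANDIDATES.items():
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        scores[name] = statistics.fmean((model(xs[i]) - ys[i]) ** 2 for i in valid)
    return min(scores, key=scores.get), scores


if __name__ == "__main__":
    xs = [float(x) for x in range(20)]
    ys = [2 * x + 1 for x in xs]
    print(select_model(xs, ys))
```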

Across the broad spectrum of AutoML methods, AutoGluon excels at hyperparameter optimization. The framework provides state-of-the-art hyperparameter tuning methods for deep learning models across diverse areas such as image classification, object detection, text classification, and supervised learning on tabular datasets. The developer experience allows data scientists to generate fairly sophisticated deep learning models with a handful of lines of code. However, hyperparameter optimization is not AutoGluon's only focus: the framework also supports novel neural architecture search capabilities based on reinforcement learning.
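Hyperparameter tuning for deep learning is expensive because every trial means training a network, so many HPO tools stop unpromising trials early. Successive halving, the building block of Hyperband, is a well-known example of that idea. The sketch below is a generic illustration with a made-up learning curve (`simulated_loss`); it is not AutoGluon's scheduler or API.

```python
import math
import random


def simulated_loss(config, epochs):
    """Hypothetical learning curve: loss shrinks with more epochs, and the best
    learning rate is 1e-2. Stands in for partially training a real model."""
    return (math.log10(config["learning_rate"]) + 2) ** 2 + 1.0 / epochs


def successive_halving(configs, evaluate, min_budget=1, eta=3):
    """Evaluate all configs on a small budget, keep the best 1/eta, multiply the
    budget by eta, and repeat until one configuration remains."""
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        ranked = sorted(survivors, key=lambda c: evaluate(c, budget))  # lower is better
        survivors = ranked[: max(1, len(ranked) // eta)]
        budget *= eta
    return survivors[0]


if __name__ == "__main__":
    rng = random.Random(0)
    candidates = [{"learning_rate": 10 ** rng.uniform(-5, 0)} for _ in range(27)]
    print(successive_halving(candidates, simulated_loss))
```

With 27 candidates and `eta=3`, the rounds shrink the pool from 27 to 9 to 3 to 1, so most of the compute goes to the few configurations that looked best early on.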

The AWS AI Labs team has benchmarked AutoGluon in several Kaggle competitions and open deep learning challenges, achieving state-of-the-art performance on several tasks. Although relatively new, AutoGluon is already one of the most promising and exciting projects in the AutoML space.

How can I use it: AutoGluon is open-source and available at https://github.com/awslabs/autogluon

🧠 The Quiz

Now, to our regular quiz. After ten quizzes, we will reward the winners. The questions are the following:

In its original paper, Google based its approach to AutoML on a dual-network model. What was the role of the controller network in that architecture?

What is the main use case to consider using Amazon’s AutoGluon framework?

That was fun! Thank you. See you on Thursday 😉