🟢 ⚪️Edge#160: A Deep Dive Into Aporia, the ML Observability Platform

This is an example of TheSequence Edge, a Premium newsletter that our subscribers receive every Tuesday and Thursday. On Thursdays, we take a deep dive into one of the freshest research papers or technology frameworks worth your attention.

🤿 Deep Dive: Aporia: The Full-Stack ML Observability Platform

To wrap up our MLOps series, we'd like to circle back to the importance of ML observability and give an overview of Aporia, a full-stack ML observability platform. Aporia offers a fully customizable solution that covers visibility, monitoring and automation, advanced investigation tools, and explainability. The platform is designed to let data science and ML engineering teams build their own in-house observability platform, tailored to their specific models and use cases.

The Importance of Customized ML Observability

Every model is unique. Even when organizations share a use case, such as fraud detection, the training data and the model itself are tailor-made to fit each organization's needs.

A data scientist's job is to build models that achieve the organization's goals and to ensure they work effectively. Data scientists train these models in a controlled environment. Once deployed to production, however, the models enter an uncontrolled environment, where real-world data and events affect their performance.

To maintain model performance in production, it's critical to detect the real-world changes that affect models, such as drift, performance degradation, data integrity issues, and unexpected bias. Because each model is unique, it's essential to have a customizable ML observability solution that the experts behind the models can tailor to the specific use case and business needs.

Customized Observability with Aporia

Aporia's customizable ML observability enables data science and ML teams to visualize their models in production, analyze the behavior of different data segments, and compare model behavior against the training data or over time. This can be done with common evaluation metrics as well as custom performance metrics that data scientists define for their specific models.
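To make segment analysis and custom metrics concrete, here is a minimal, tool-agnostic sketch in Python. It is not Aporia's API: the record layout (`prediction`, `label`, and a segment field such as `country`) and the helper names `metric_by_segment` and `underperforming_segments` are hypothetical, and it assumes a metric where higher is better.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Sequence

# A metric is any function (predictions, labels) -> float; higher is assumed better.
Metric = Callable[[Sequence[int], Sequence[int]], float]

def accuracy(preds: Sequence[int], labels: Sequence[int]) -> float:
    """Default metric; any custom (preds, labels) -> float function can be swapped in."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)

def metric_by_segment(records: List[dict], segment_key: str,
                      metric: Metric = accuracy) -> Dict[str, float]:
    """Group records by segment and compute the metric within each group."""
    preds, labels = defaultdict(list), defaultdict(list)
    for r in records:
        preds[r[segment_key]].append(r["prediction"])
        labels[r[segment_key]].append(r["label"])
    return {seg: metric(preds[seg], labels[seg]) for seg in preds}

def underperforming_segments(records: List[dict], segment_key: str,
                             metric: Metric = accuracy,
                             tolerance: float = 0.05) -> Dict[str, float]:
    """Segments whose metric trails the overall value by more than `tolerance`."""
    overall = metric([r["prediction"] for r in records], [r["label"] for r in records])
    per_segment = metric_by_segment(records, segment_key, metric)
    return {seg: v for seg, v in per_segment.items() if v < overall - tolerance}

# Hypothetical production records with ground-truth labels.
records = [
    {"country": "US", "prediction": 1, "label": 1},
    {"country": "US", "prediction": 0, "label": 0},
    {"country": "US", "prediction": 1, "label": 1},
    {"country": "DE", "prediction": 1, "label": 0},
    {"country": "DE", "prediction": 0, "label": 0},
]
```

Here the overall accuracy is 0.8, while the `DE` segment sits at 0.5, so it is flagged as underperforming.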

Aporia's monitor builder lets teams create monitors that detect a wide variety of behaviors, including drift, bias, data integrity issues, and performance degradation. Each monitor can run against a different baseline: the training set, a comparison with a specific data segment, or anomaly detection over time. Across these combinations, data science teams have access to over 50 customizable monitors, including a Python code-based monitor.
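As an illustration of a drift monitor that uses the training set as its baseline, the sketch below computes the Population Stability Index (PSI), one common drift statistic, and raises an alert above a threshold. It is a generic sketch rather than how Aporia implements its monitors; the bin edges, the `eps` smoothing term, and the 0.2 alert threshold (a widely used rule of thumb) are assumptions.

```python
import bisect
import math
from typing import Dict, List, Sequence

def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values per bin; k sorted interior edges define k + 1 open-ended bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]

def psi(baseline: Sequence[float], production: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index of production data against the baseline."""
    expected = bin_fractions(baseline, edges)
    actual = bin_fractions(production, edges)
    score = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        score += (a - e) * math.log(a / e)
    return score

def drift_monitor(baseline: Sequence[float], production: Sequence[float],
                  edges: Sequence[float], threshold: float = 0.2) -> Dict[str, object]:
    """Hypothetical monitor: alert when PSI against the training baseline exceeds threshold."""
    score = psi(baseline, production, edges)
    return {"psi": score, "alert": score > threshold}

# Hypothetical training distribution for one numeric feature, split into quartile bins.
training = [i / 100 for i in range(100)]
edges = [0.25, 0.5, 0.75]
```

Swapping `training` for a segment's data or for a trailing window of production data turns the same check into a segment-comparison or over-time monitor.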

Visibility & Investigation for Root Cause Analysis

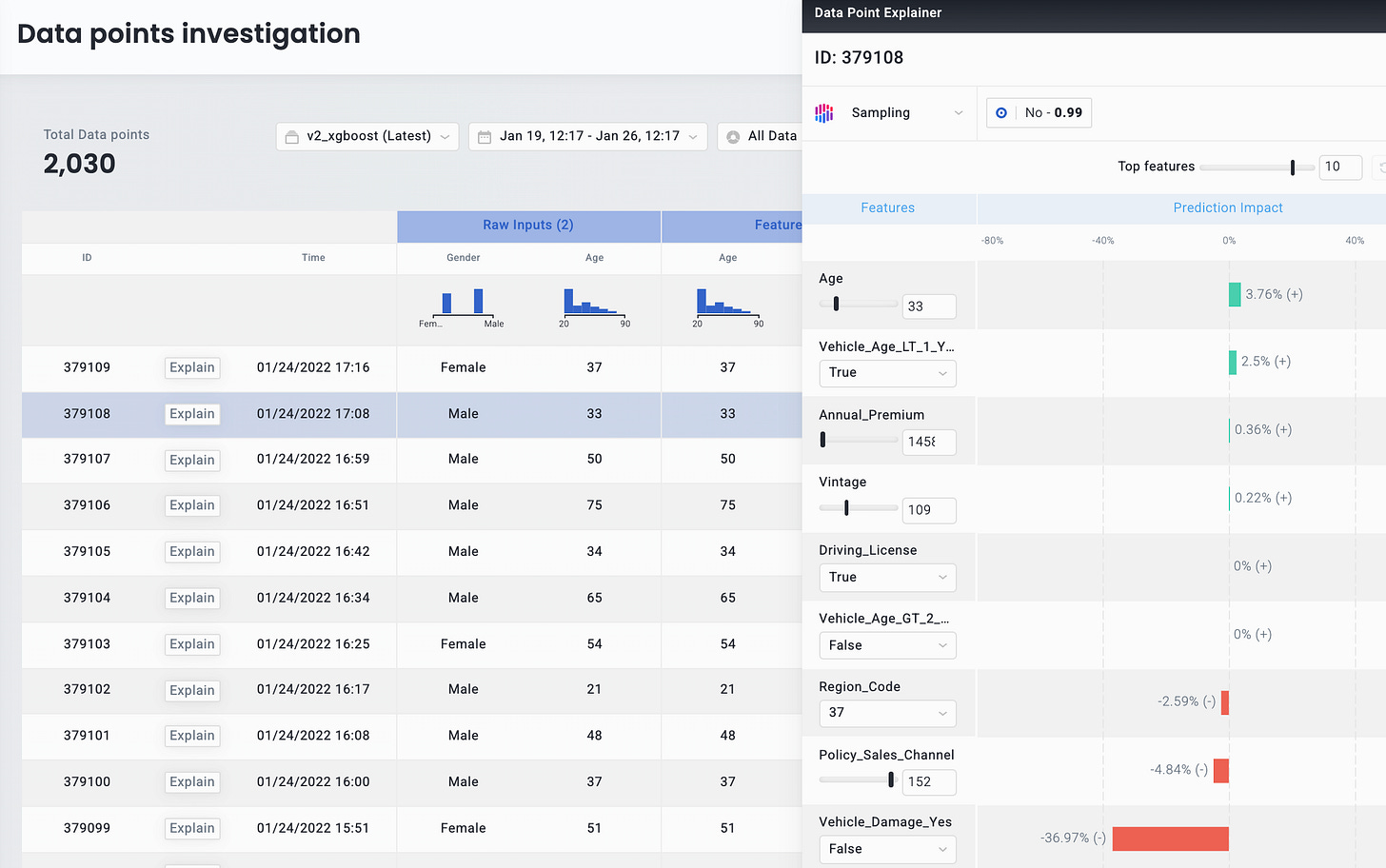

With Aporia, data science and ML teams can see all their models in one place, in real time. Using the platform's Time-Series and Datapoints Investigation tools, teams can also find the root cause of an issue, determine when it actually started (including whether it began before it crossed the alert threshold), see what it affected, compare the prediction distribution with training, review feature importance, and more.
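One simple way to estimate when an issue actually started is to locate the first point where a monitored metric crosses the alert threshold and then walk backward while it stays above a lower early-warning level. The sketch below assumes a time-ordered list of daily drift scores; `find_issue_onset` and both thresholds are hypothetical and not part of Aporia's tooling.

```python
from typing import Optional, Sequence, Tuple

def find_issue_onset(values: Sequence[float], alert_threshold: float,
                     early_threshold: float) -> Optional[Tuple[int, int]]:
    """Return (onset_index, alert_index), or None if the alert never fires."""
    # First point where the metric crosses the alert threshold.
    alert_idx = next((i for i, v in enumerate(values) if v > alert_threshold), None)
    if alert_idx is None:
        return None
    # Walk back through the contiguous run of values above the early-warning level.
    onset = alert_idx
    while onset > 0 and values[onset - 1] > early_threshold:
        onset -= 1
    return onset, alert_idx

# Hypothetical daily drift scores for one feature.
daily_drift = [0.02, 0.03, 0.02, 0.08, 0.12, 0.18, 0.25, 0.30]
onset, alert = find_issue_onset(daily_drift, alert_threshold=0.2, early_threshold=0.05)
```

In this example the alert fires at index 6, but the drift had already started at index 3, three days before the threshold was crossed.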

Furthermore, data scientists can use advanced explainability tools to see which features influenced predictions the most. Together, these tools help a data scientist answer the question: How do I resolve this particular issue and improve my model's performance and business results?

Improving Models in Production & Monitoring for Drift

Aporia’s focus on customization makes it easy for data scientists to go beyond drift detection and improve their models in production in a number of ways.

Improving the data pipeline: Data in production can change dramatically and without warning. For example, a feature encoded as True/False can suddenly arrive as 0/1, significantly hurting model performance; early detection enables ML engineers to fix the issue before it affects the business.

Retraining models: When drift is detected in critical features, Aporia facilitates automatic retraining of the model on relevant production data, which helps keep the drift from affecting the business.

Optimizing models: When drift affects features of low importance, data scientists can remove those features and retrain the model, improving its results in production.

Critical segments: By detecting underperforming data segments early, data scientists can tailor their model to improve performance on those segments.
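The pipeline, retraining, and optimization cases above can be sketched as two small checks. This is a hypothetical illustration, not Aporia's retraining logic: `unseen_values` flags production values (such as 0/1) never seen in training (such as True/False), and `triage_drifted_features` suggests retraining when an important feature drifts and removal when a low-importance one does. The drift scores, importance values, and thresholds are assumed inputs.

```python
from typing import Dict, Iterable, Set

def unseen_values(train_values: Iterable, prod_values: Iterable) -> Set:
    """Production values that never appeared in the training data."""
    # Key on (type, value): in Python True == 1 and hash(True) == hash(1),
    # so a plain set would silently treat 0/1 as if it were False/True.
    seen = {(type(v), v) for v in train_values}
    return {v for v in prod_values if (type(v), v) not in seen}

def triage_drifted_features(drift_scores: Dict[str, float],
                            importance: Dict[str, float],
                            drift_threshold: float = 0.2,
                            importance_threshold: float = 0.1) -> Dict[str, str]:
    """Suggest an action for each feature whose drift score exceeds the threshold."""
    actions = {}
    for feature, score in drift_scores.items():
        if score <= drift_threshold:
            continue  # no significant drift
        if importance.get(feature, 0.0) >= importance_threshold:
            actions[feature] = "retrain"  # drift in a feature the model relies on
        else:
            actions[feature] = "consider_removing"  # drift in a feature that barely matters
    return actions

# Hypothetical inputs: a boolean feature re-encoded upstream, plus per-feature scores.
flags = unseen_values([True, False, True], [0, 1, 1])
actions = triage_drifted_features(
    drift_scores={"amount": 0.35, "device": 0.40, "age": 0.05},
    importance={"amount": 0.50, "device": 0.02, "age": 0.30},
)
```

Keying on the value's type is the design choice that matters here: without it, the True/False to 0/1 change from the example above would pass unnoticed.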

Explainability in Aporia

Aporia's explainability tools help data scientists understand which features affect model predictions the most and explain model results to business owners. Explainability can also be used to simulate "what if" scenarios: quickly debugging a prediction by changing feature values and re-explaining the result. Together, these capabilities allow data scientists to better understand their model's results in production and communicate them to stakeholders.
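For a linear model, a what-if analysis is easy to sketch: each feature's contribution is its weight times the difference between its value and a baseline mean (which matches SHAP values when features are assumed independent), so changing one feature and re-explaining shows how the prediction shifts. The weights, means, and feature names below are made up for illustration, and this is not Aporia's explainability method.

```python
from typing import Dict, Tuple

def explain_linear(weights: Dict[str, float], bias: float,
                   baseline_means: Dict[str, float],
                   x: Dict[str, float]) -> Tuple[float, Dict[str, float]]:
    """Return (prediction, per-feature contributions) for a linear model."""
    # Contribution of each feature relative to the baseline (average) input.
    contributions = {f: w * (x[f] - baseline_means[f]) for f, w in weights.items()}
    # Prediction for the baseline input; contributions sum to the gap from it.
    base_prediction = bias + sum(w * baseline_means[f] for f, w in weights.items())
    return base_prediction + sum(contributions.values()), contributions

def what_if(weights: Dict[str, float], bias: float, baseline_means: Dict[str, float],
            x: Dict[str, float], feature: str,
            new_value: float) -> Tuple[float, Dict[str, float]]:
    """Re-explain the prediction after overriding a single feature value."""
    modified = dict(x)
    modified[feature] = new_value
    return explain_linear(weights, bias, baseline_means, modified)

# Hypothetical fraud-score model and one transaction to explain.
weights = {"amount": 0.5, "num_prev_txn": -0.2}
means = {"amount": 2.0, "num_prev_txn": 5.0}
x = {"amount": 6.0, "num_prev_txn": 1.0}
pred, contrib = explain_linear(weights, 1.0, means, x)
new_pred, new_contrib = what_if(weights, 1.0, means, x, "amount", 2.0)
```

Resetting `amount` to its average removes its contribution entirely, so the drop in the prediction shows how much that feature was driving the score. Non-linear models need approximate methods such as sampling-based SHAP instead.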

Customized Monitoring in Less Than 5 Minutes & Self-Hosted Deployment

With Aporia's self-hosted deployment, the organization's data remains secure within its own infrastructure. Integrating a new model takes less than 3 minutes (see documentation). To try it, you can sign up for Aporia's free Community Edition and start with the provided demo model, or integrate your own production models to begin monitoring and improving them.

Conclusion

ML systems play a central role in real-world production settings, from biomedical applications to space exploration. Ensuring that ML models remain consistently reliable is therefore of utmost importance to the industry.

ML observability is thus an essential part of the production process, allowing data science teams to monitor, validate, and update the models deployed to users. Robust and diverse observability tools like Aporia can help identify gaps in the deployed model or the production data, visualize performance at every stage of development, and recommend solutions to the problems they detect.

You can go over our full MLOps series here. Next week we will start a new fascinating series about Generative Models. Stay tuned!