TheSequence Scope: The Transformer Race

Initially published as 'This Week in AI', a newsletter about AI news and the latest developments in ML research and technology

From the Editor: The Transformer Race

Transformers represent the biggest breakthrough in deep learning of the last 4-5 years. Many experts believe that Transformer methods are on their way to replacing recurrent neural networks (RNNs) as the method of choice for language and other sequence tasks. In just a couple of years, Transformers have been at the center of some of the most important deep learning models, such as OpenAI’s GPT, Microsoft’s Turing-NLG and, of course, Google’s BERT.

The evolution of Transformers has also turned the artificial intelligence (AI) space into a race to build the biggest possible models. Empirical evidence suggests that larger models consistently outperform smaller alternatives across many tasks. Earlier this year, Microsoft set a new record with the release of Turing-NLG, a model with 17 billion parameters. That record did not last long: OpenAI soon announced GPT-3, a massive new version of its GPT model that uses an astonishing 175 billion parameters. Building models of this size is out of reach for most organizations but, when it comes to Transformers, bigger has consistently been better.

Now let’s take a look at the core developments in AI research and technology this week.

AI Research:

GPT-3

OpenAI published the research paper describing GPT-3, the massive new version of its natural language processing model. With 175 billion parameters, GPT-3 is the biggest deep learning model built to date.

>Read more in the GPT-3 research paper

BERT for Evaluating Natural Language Generation

Google Research proposed BLEURT, a BERT-based metric for evaluating natural language generation models.

>Read more in this blog post from Google Research

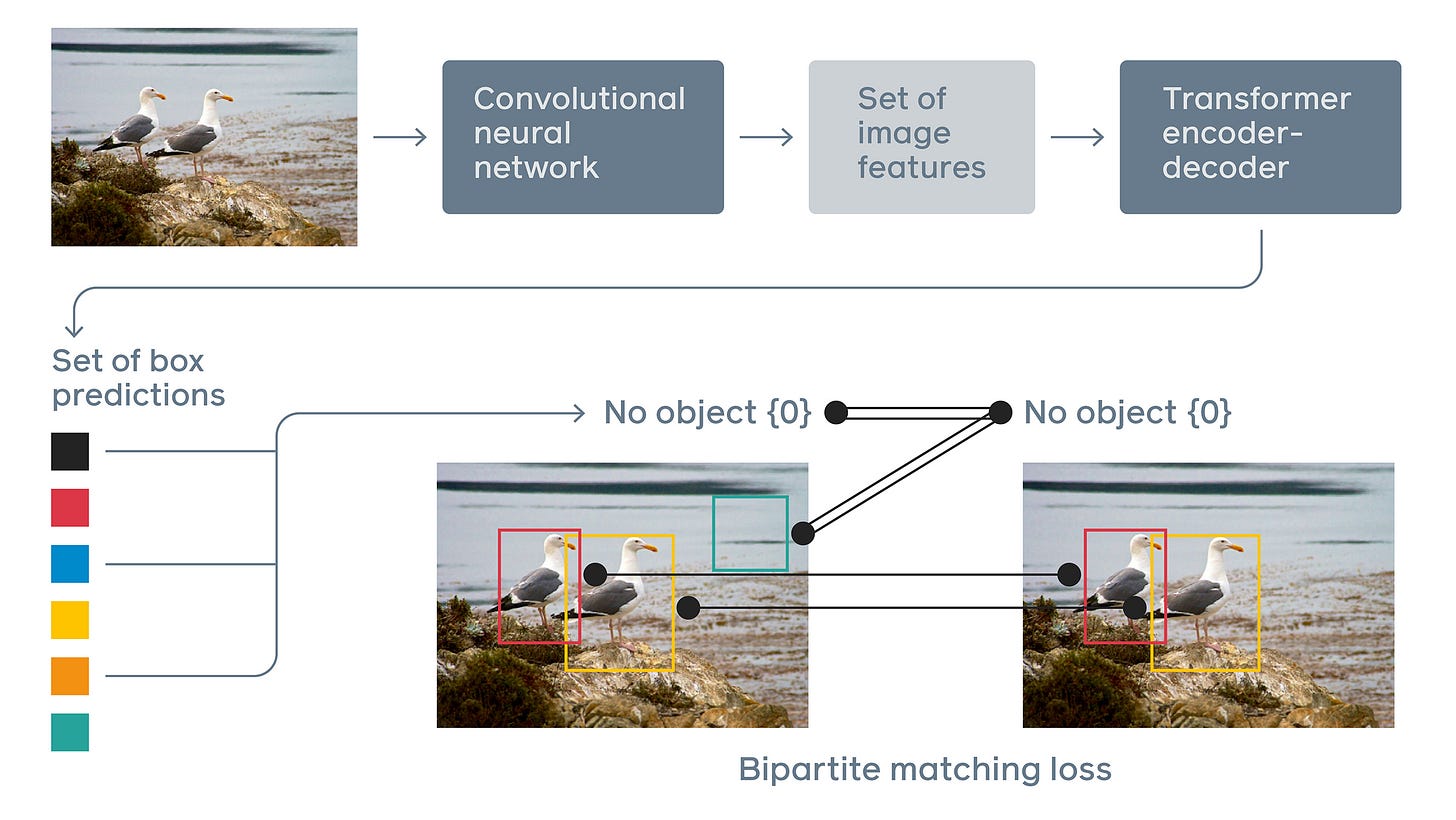

Transformers for Computer Vision

Transformers have typically been applied to language tasks. Facebook AI Research published a paper proposing a Transformer-based model for computer vision tasks.

Image credit: Facebook AI

>Read more in this blog post from Facebook AI Research

Cool AI Tech Releases:

Paddle Quantum

Baidu released Paddle Quantum, a toolkit built on its PaddlePaddle deep learning framework for quantum machine learning tasks.

>Read more in this blog post from Baidu Research

Best Practices for Notebooks

Microsoft unveiled Wrex, a new toolkit that includes several best practices to improve data science notebooks.

>Read more in this blog post from Microsoft Research

AI in the Real World:

Eye-Catching AI vs. Real-World AI

Science magazine published a thoughtful piece detailing why many recent advances in AI research are impractical in the real world.

>Read more in this article from Science Magazine

DefinedCrowd

DefinedCrowd raised $50 million for its AI training data platform.

>Read more in this coverage from TechCrunch

Replacing Journalists with AI

In a bold move, Microsoft is replacing dozens of journalists from MSN with AI models.

>Read more in this coverage from The Guardian

“This Week in AI” is a newsletter curated by industry insiders and the Invector Labs team. Every week, it brings you the latest developments in AI research and technology.

Starting July 14th, the newsletter will change its name and format to focus on systematic AI education.
