The Sequence Radar #779: The Inference Wars and China’s AI IPO Race

Nvidia's large deal with Groq and new releases from Z.ai and MiniMax.

Next Week in The Sequence:

Our knowledge series continues diving into synthetic data generation, this time focusing on synthetic image generation. In the AI of the Week section, we are going to cover the newly released GLM 4.7. In the opinion section, we will discuss three non-trivial AI areas that I am excited about for 2026.


📝 Editorial: The Inference Wars and China’s AI IPO Race

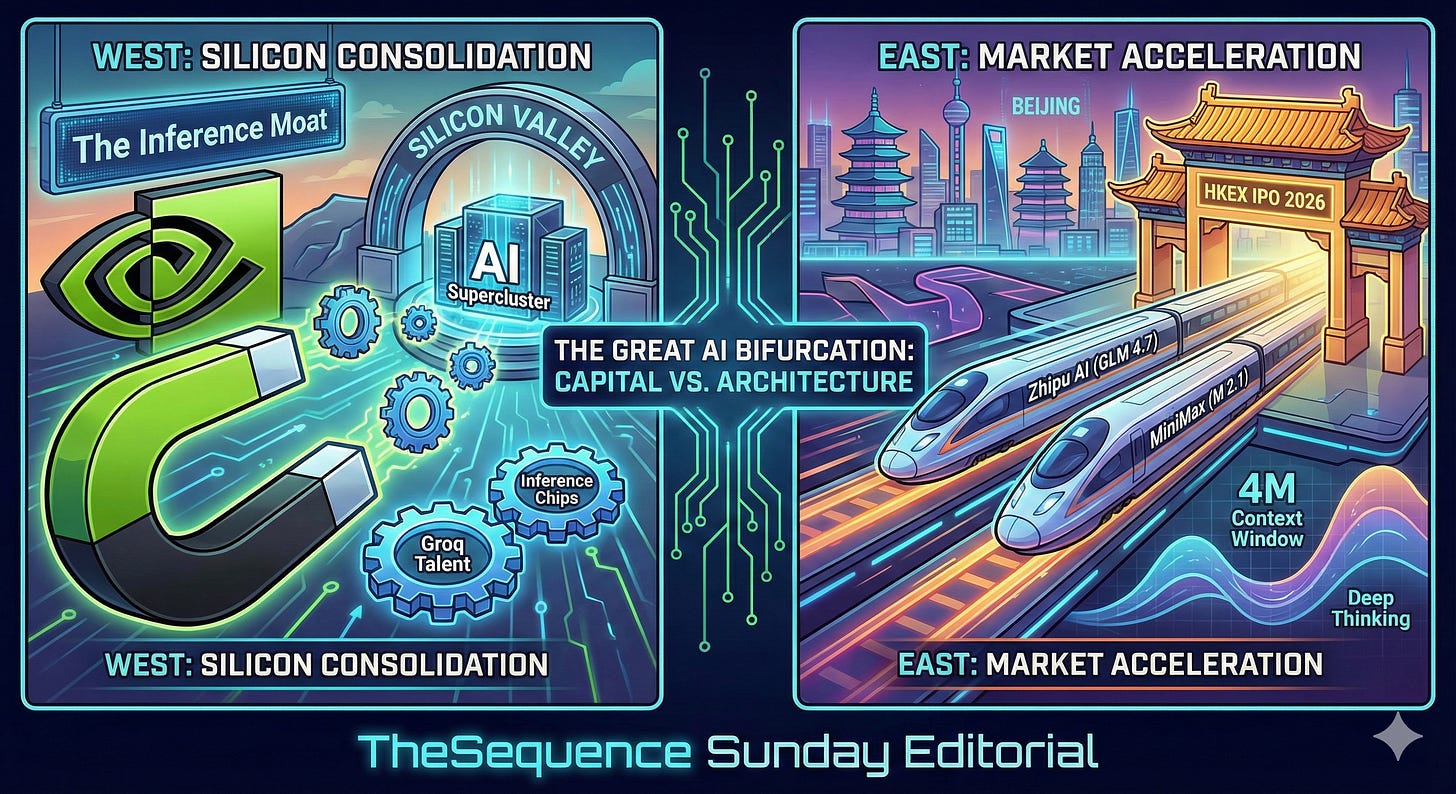

This week, the AI industry witnessed a fascinating divergence in strategy between East and West, marked by a massive talent consolidation in Silicon Valley and a maturing public market push in Beijing. While Nvidia solidified its iron grip on inference hardware through a strategic “acqui-hire” of Groq’s leadership, China’s leading model labs—Zhipu AI and MiniMax—simultaneously released frontier-class models and cleared major regulatory hurdles for Hong Kong IPOs.

The holiday headlines were dominated by Nvidia’s decisive move to license Groq’s LPU (Language Processing Unit) technology and hire its core leadership, including founder Jonathan Ross. While rumors initially pegged this as a $20 billion acquisition, the reality is more nuanced and perhaps more ruthless: a non-exclusive licensing deal that decapitates a key competitor by absorbing its visionary talent while leaving the entity independent. This follows the “Microsoft-Inflection” playbook—avoiding antitrust gridlock while securing critical IP. For Nvidia, this isn’t just about removing a rival; it’s about acknowledging that the next phase of the AI cycle is inference. By integrating Groq’s ultra-low-latency architecture, Nvidia is future-proofing its dominance against a rising tide of specialized inference chips.

While US hardware consolidates, Chinese model labs are delivering shocking performance per dollar. Zhipu AI led the charge on December 22 with the release of GLM 4.7, a model that directly challenges the Western “reasoning” class exemplified by OpenAI’s o1 and Google’s Gemini Deep Think. By introducing “Deep Thinking” capabilities, GLM 4.7 demonstrates state-of-the-art performance in complex coding tasks, effectively positioning Zhipu as the “OpenAI of China” with a robust full-stack ecosystem.

Simultaneously, MiniMax took a divergent architectural path with the release of M2.1. Moving away from standard softmax attention, the new model uses a linear attention mechanism combined with a Mixture-of-Experts (MoE) design. The result is a massive 4-million-token context window with extreme computational efficiency, which MiniMax is aggressively marketing as a “RAG Killer” capable of ingesting entire repositories for a fraction of the cost of traditional vector search pipelines.

Perhaps most significantly, both Zhipu AI and MiniMax have reportedly cleared regulatory hurdles to list on the Hong Kong Stock Exchange in early 2026. This signals a new era where foundation model labs move from venture-subsidized research projects to public entities demanding revenue discipline.

The “scaling laws” are bifurcating. In the US, scaling is becoming a function of capital concentration: Nvidia absorbing the best hardware minds to build bigger clusters. In China, scaling is becoming architectural and economic: optimizing for massive context and agentic reasoning to build viable commercial products ahead of public listings. The race is no longer just about who has the most GPUs, but about who can build a sustainable business on top of them.

🔎 AI Research

Google Research 2025: Bolder breakthroughs, bigger impact

AI Lab: Google Research

Summary: This article outlines Google Research’s 2025 achievements, highlighting the acceleration of the “magic cycle” of research that translates breakthroughs in generative models, quantum computing, and scientific discovery into real-world impact. Key advancements include the high-factuality Gemini 3 model with generative UI, the “Quantum Echoes” algorithm for verifiable quantum advantage, and new AI tools for genomics, neuroscience, and climate resilience.

Gemma Scope 2 - Technical Paper

AI Lab: Google

Summary: This paper introduces Gemma Scope 2, a comprehensive suite of open sparse autoencoders (SAEs) and transcoders trained on all layers of the Gemma 3 model family to advance interpretability research. The release includes novel multi-layer architectures, such as crosscoders and cross-layer transcoders, designed to capture complex circuit-level interactions and behaviors that span multiple transformer blocks.
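To make the SAE idea concrete, here is a minimal sketch of a plain ReLU sparse autoencoder forward pass and loss in pure Python. It is a generic textbook formulation, not Gemma Scope 2's training recipe (the release's crosscoders and transcoders are more elaborate), and the names `sae_forward`, `sae_loss`, and `l1_coeff` are illustrative assumptions.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # row-major: matrix[row][col]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a row-major matrix by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sae_forward(x: Vector, w_enc: Matrix, b_enc: Vector,
                w_dec: Matrix, b_dec: Vector) -> Tuple[Vector, Vector]:
    """Encode an activation x into features, then decode a reconstruction."""
    # Encoder: ReLU zeroes out negative pre-activations, which produces sparsity.
    pre = [p + b for p, b in zip(matvec(w_enc, x), b_enc)]
    features = [max(0.0, p) for p in pre]
    # Decoder: rebuild x as a weighted sum of learned feature directions.
    x_hat = [r + b for r, b in zip(matvec(w_dec, features), b_dec)]
    return features, x_hat


def sae_loss(x: Vector, features: Vector, x_hat: Vector, l1_coeff: float) -> float:
    """Squared reconstruction error plus an L1 penalty that rewards few active features."""
    recon = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    sparsity = sum(abs(f) for f in features)
    return recon + l1_coeff * sparsity


# Toy example: a 2-dim activation and an overcomplete dictionary of 4 features.
x = [1.0, -2.0]
w_enc = [[1, 0], [0, 1], [-1, 0], [0, -1]]
w_dec = [[1, 0, -1, 0], [0, 1, 0, -1]]
features, x_hat = sae_forward(x, w_enc, [0.0] * 4, w_dec, [0.0, 0.0])
print(features)  # [1.0, 0.0, 0.0, 2.0]
print(x_hat)     # [1.0, -2.0]
```

Only two of the four features fire, yet the reconstruction is exact, so the loss reduces to the L1 term; interpretability work then asks what each active feature means.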

SAM Audio: Segment Anything in Audio

AI Lab: Meta Superintelligence Labs

Summary: This paper presents SAM AUDIO, a foundation model that unifies audio separation by allowing users to prompt with text descriptions, visual masks, or temporal spans within a single flow-matching diffusion framework. The model achieves state-of-the-art performance across diverse domains like speech, music, and sound effects, and introduces a new benchmark, SAM AUDIO-BENCH, which includes human-labeled multimodal prompts for more realistic evaluation.

INTELLECT-3: Technical Report

AI Lab: Prime Intellect

Summary: This report introduces INTELLECT-3, a 106-billion-parameter Mixture-of-Experts model trained via a new scalable reinforcement learning framework called prime-rl, which supports asynchronous off-policy training and continuous batching. The model outperforms similar-sized open-source models on reasoning, math, and coding benchmarks, and the authors release the full training stack, including the model weights, the RL framework, and the environment library, to democratize large-scale agentic training.

Bolmo: Byteifying the Next Generation of Language Models

AI Lab: Allen Institute for AI (Ai2), University of Cambridge, University of Washington, University of Edinburgh

Summary: This paper introduces Bolmo, a family of open byte-level language models created by efficiently “byteifying” existing subword models to overcome tokenization limitations. The resulting architecture uses non-causal boundary prediction to achieve performance competitive with state-of-the-art subword models while enabling superior character-level understanding.
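For readers new to byte-level models, the sketch below shows what input looks like when text is fed to a model as raw UTF-8 bytes instead of subword IDs, plus a toy rule that groups bytes into larger patches. The boundary rule is a hypothetical stand-in (end a patch wherever the next byte is a space), not Bolmo's learned predictor; it is non-causal only in the simple sense that deciding whether byte `i` ends a patch requires peeking at byte `i + 1`.

```python
from typing import List


def to_byte_ids(text: str) -> List[int]:
    """Encode text as UTF-8 byte IDs in [0, 255], the vocabulary of a byte-level model."""
    return list(text.encode("utf-8"))


def toy_patch_ends(byte_ids: List[int]) -> List[int]:
    """Hypothetical boundary rule: byte i ends a patch if it is the last byte
    or if byte i+1 is a space (0x20). Looking at byte i+1 makes it non-causal."""
    ends = []
    for i in range(len(byte_ids)):
        is_last = i + 1 == len(byte_ids)
        if is_last or byte_ids[i + 1] == 0x20:
            ends.append(i)
    return ends


def group_into_patches(byte_ids: List[int], ends: List[int]) -> List[List[int]]:
    """Split the byte sequence into contiguous patches at the predicted end positions."""
    patches, start = [], 0
    for end in ends:
        patches.append(byte_ids[start:end + 1])
        start = end + 1
    return patches


ids = to_byte_ids("hi there")
patches = group_into_patches(ids, toy_patch_ends(ids))
print([bytes(p).decode("utf-8") for p in patches])  # ['hi', ' there']
print(len(to_byte_ids("café")))  # 5: "é" takes two bytes in UTF-8
```

Because every character is spelled out byte by byte, questions like "how many r's are in strawberry" no longer depend on how a subword tokenizer happened to split the word.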

GenEnv: Difficulty-Aligned Co-Evolution Between LLM Agents and Environment Simulators

AI Lab: Princeton University, ByteDance Seed, Columbia University, University of Michigan, University of Chicago

Summary: The authors propose GenEnv, a framework that trains agents via a co-evolutionary game where a generative simulator creates dynamic tasks aligned with the agent’s current difficulty level. This adaptive curriculum approach significantly improves agent performance across multiple benchmarks while requiring substantially less synthetic data than static augmentation methods.
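As a rough intuition for difficulty alignment, the toy loop below replaces GenEnv's generative simulator with a single hypothetical difficulty knob that is nudged toward a target success rate while the agent's skill keeps growing. The success-rate model, the 0.5 target, the step size, and the skill gain are all illustrative assumptions, not the paper's algorithm or values.

```python
from typing import Tuple


def toy_success_rate(skill: float, difficulty: float) -> float:
    """Hypothetical agent model: 50% success when difficulty equals skill,
    changing linearly with the gap and clamped to [0, 1]."""
    return min(1.0, max(0.0, 0.5 + (skill - difficulty)))


def next_difficulty(difficulty: float, observed_rate: float,
                    target: float = 0.5, step: float = 0.5) -> float:
    """Raise task difficulty when the agent beats the target success rate, lower it otherwise."""
    return difficulty + step * (observed_rate - target)


def run_curriculum(rounds: int, skill: float = 1.0, difficulty: float = 0.0,
                   skill_gain: float = 0.05) -> Tuple[float, float, float]:
    """Co-evolve a toy agent (skill grows each round) and a toy simulator
    (one difficulty knob). Returns (final skill, final difficulty, last success rate)."""
    rate = toy_success_rate(skill, difficulty)
    for _ in range(rounds):
        rate = toy_success_rate(skill, difficulty)
        difficulty = next_difficulty(difficulty, rate)
        skill += skill_gain  # stand-in for the agent improving from training
    return skill, difficulty, rate


skill, difficulty, rate = run_curriculum(50)
print(round(skill - difficulty, 6), round(rate, 6))  # 0.1 0.6
```

The gap settles where the agent succeeds about 60% of the time rather than exactly 50%, because the knob lags a learner that keeps improving. The point is that generated tasks track the agent's ability instead of staying fixed, which is the contrast the summary draws with static augmentation.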

🤖 AI Tech Releases

GLM 4.7

Z.ai released GLM 4.7, a new version of its marquee model with strong coding and agentic capabilities.

MiniMax M2.1

MiniMax released M2.1, a new multimodal model optimized for complex tasks.

Bloom

Anthropic released Bloom, a tool for behavioral evaluations of frontier models.

📡AI Radar

Groq Licensing Deal: Nvidia has entered a non-exclusive licensing agreement to utilize Groq’s inference technology, a deal that will see Groq’s founder and key engineering talent join Nvidia while the startup continues to operate independently.

Waymo In-Car Assistant: Hidden code discovered in the Waymo app reveals the company is testing an integration of Google’s Gemini AI to function as a voice-activated “virtual butler” for passengers, capable of controlling vehicle settings and answering queries.

European Data Center Partnership: The Canada Pension Plan Investment Board (CPP Investments) and Goodman Group have established an A$14 billion partnership to develop major data center projects in Frankfurt, Amsterdam, and Paris to support growing AI and cloud computing needs.

Graphite Acquisition: The AI-powered code review platform Graphite has agreed to be acquired by Cursor to integrate its collaboration tools directly into the Cursor IDE.

Lemon Slice Funding: Generative AI startup Lemon Slice has raised $10.5 million in seed funding from Matrix Partners and Y Combinator to advance its technology for creating expressive, interactive digital avatars.

Dazzle Funding: Marissa Mayer’s new company, Dazzle, has secured $8 million in seed capital led by Forerunner Ventures to build consumer applications that make AI more accessible and useful.

Alphabet Acquisition: Alphabet has entered a definitive agreement to acquire energy developer Intersect Power for approximately $4.75 billion to secure reliable clean energy for its growing data center needs.

Waymo Software Update: Following a massive power outage in San Francisco that caused its vehicles to gridlock traffic, Waymo is deploying a fleet-wide software update to improve how its autonomous driver handles intersections with disabled signals.