The Sequence Radar #501: DeepSeek’s 5 New Open Source Releases

Some of the techniques used in R1 are now open source.

Next Week in The Sequence:

Our series about RAG continues with an exploration of hypothetical document embeddings. We discuss a new agentic framework that was just released in our engineering edition. The research edition dives into DeepMind’s amazing AlphaGeometry2. Our opinion day is going to explore a fascinating topic: do we need new programming languages for AI?

📝 Editorial: DeepSeek’s 5 New Open Source Releases

In a week dominated by OpenAI and Anthropic unveiling new models, let’s shift our focus to something different. Do you really need another newsletter dissecting GPT-4.5?

What flew under the radar this week was DeepSeek’s impressive series of five open-source releases. These contributions focus on optimizations derived from their flagship R1 model, showcasing just how technically formidable this team is when it comes to AI efficiency. Let’s break them down:

Day 1: FlashMLA – An efficient Multi-head Latent Attention (MLA) decoding kernel optimized for NVIDIA’s Hopper GPUs. It supports BF16 and FP16 data types and uses a paged KV cache with a block size of 64, achieving up to 3000 GB/s in memory-bound configurations and 580 TFLOPS in compute-bound configurations on H800 SXM5 GPUs.

Day 2: DeepEP – A communication library designed for Mixture-of-Experts (MoE) models. While technical specifics remain limited, its core objective is to make communication between expert networks in MoE architectures more efficient, which is critical for optimizing large-scale AI models.

Day 3: DeepGEMM – An FP8 GEMM (General Matrix Multiplication) library powering the training and inference pipelines for DeepSeek-V3 and R1 models. Delivering up to 1350+ TFLOPS on H800 GPUs, it supports both dense and MoE layouts, outperforming expert-tuned kernels across most matrix sizes.

Day 4: Optimized Parallelism Strategies – Details remain scarce, but this release likely targets key bottlenecks in parallel processing, improving workload distribution, scalability, and training efficiency for large-scale AI models.

Day 5: Fire-Flyer File System (3FS) – A specialized file system engineered for managing large-scale data in AI applications. This release rounds out DeepSeek’s toolkit for accelerating machine learning workflows, refining deep learning models, and streamlining extensive dataset handling.
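To make the "paged KV cache with a block size of 64" mentioned for FlashMLA more concrete, here is a minimal bookkeeping sketch in plain Python. It only illustrates the general paging idea (token positions map to fixed-size blocks through a block table). The class name `PagedSequence`, its methods, and the free-list handling are hypothetical and are not taken from FlashMLA’s code or API.

```python
from __future__ import annotations

from dataclasses import dataclass, field

BLOCK_SIZE = 64  # tokens per KV cache block, the figure quoted for FlashMLA


@dataclass
class PagedSequence:
    """Hypothetical bookkeeping for one sequence stored in a paged KV cache."""

    # block_table[i] is the physical block id backing logical block i
    block_table: list[int] = field(default_factory=list)
    length: int = 0  # number of tokens cached so far

    def locate(self, position: int) -> tuple[int, int]:
        """Map a token position to (physical block id, offset inside the block)."""
        if not 0 <= position < self.length:
            raise IndexError(f"position {position} is outside the cached range")
        return self.block_table[position // BLOCK_SIZE], position % BLOCK_SIZE

    def append_token(self, free_blocks: list[int]) -> tuple[int, int]:
        """Reserve a slot for the next token, taking a new block when needed."""
        if self.length % BLOCK_SIZE == 0:
            # No block yet, or the last block is full: grab one from the free list.
            self.block_table.append(free_blocks.pop())
        self.length += 1
        return self.locate(self.length - 1)


# Usage: caching 65 tokens needs two blocks, which need not be contiguous.
free = [7, 3, 9]
seq = PagedSequence()
for _ in range(65):
    seq.append_token(free)
print(seq.block_table)  # [9, 3]
print(seq.locate(64))   # (3, 0)
```

The point of the indirection is that a sequence’s cache can grow one block at a time without reserving a contiguous region up front; a real kernel would use the block table to gather keys and values on the GPU.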

These open-source contributions underline DeepSeek’s commitment to fostering an open and collaborative AI ecosystem. The impact has been immediate—FlashMLA, for instance, amassed over 5,000 stars on GitHub within just six hours of its release.

While the industry’s attention was fixed on proprietary advancements, DeepSeek made a powerful statement about the role of open-source innovation in AI’s future.

📶 AI Eval of the Week

A few months ago, I co-founded LayerLens (still in stealth mode, but follow us on X to stay tuned) to streamline the benchmarking and evaluation of foundation models. I am learning a great deal about these models by regularly running evaluations, so I decided to share some of those learnings.

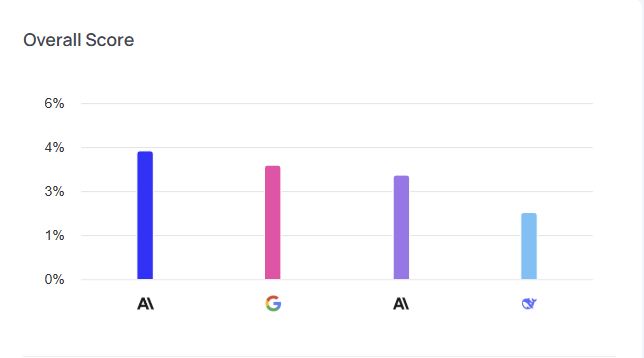

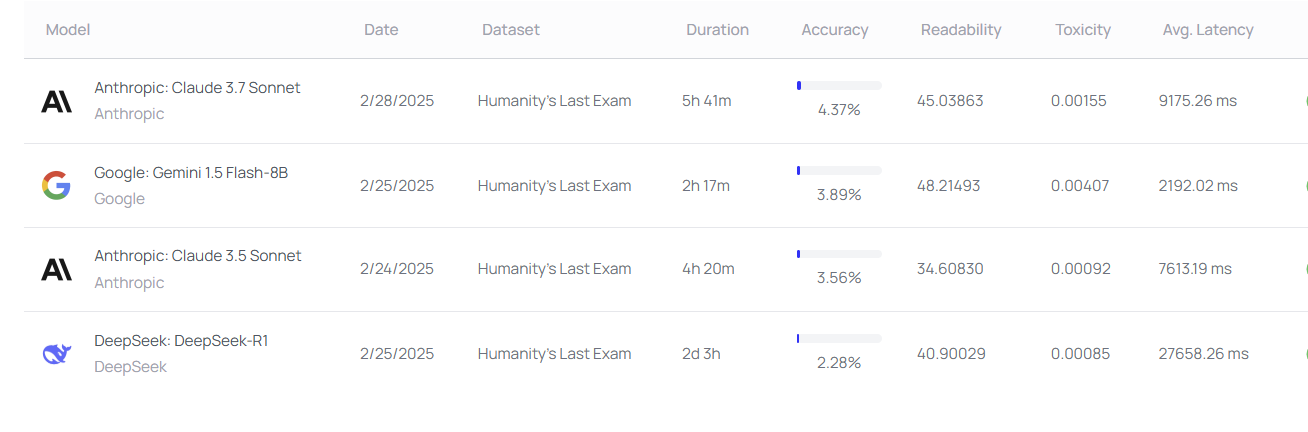

Have you heard about Humanity’s Last Exam? It is one of the toughest benchmarks ever created, with contributions from over 1,000 domain experts. How difficult is it exactly? Well, look at the performance of some DeepSeek, OpenAI, Google, and Anthropic models, all scoring less than 5%.

🔎 AI Research

CodeCriticBench

In the paper CodeCriticBench: A Holistic Code Critique Benchmark for Large Language Models, researchers from Alibaba and other AI labs introduce CodeCriticBench, a benchmark for evaluating the code critique capabilities of Large Language Models (LLMs). It includes code generation and code QA tasks with basic and advanced critique evaluations.

SWE-RL

In the paper SWE-RL: Advancing LLM Reasoning via Reinforcement Learning on Open Software Evolution, researchers from Meta FAIR introduce SWE-RL, a reinforcement learning (RL) method to improve LLMs on software engineering (SE) tasks using software evolution data and rule-based rewards. The resulting model, Llama3-SWE-RL-70B, achieves a 41.0% solve rate on SWE-bench Verified.
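As a rough illustration of what a "rule-based reward" on software evolution data could look like, the sketch below scores a generated patch by its textual similarity to a reference patch and penalizes unusable output. The choice of a sequence-similarity rule, the penalty value, and the function name `patch_reward` are assumptions made for illustration; the summary above does not spell out the exact rule.

```python
import difflib
from typing import Optional


def patch_reward(predicted_patch: Optional[str], reference_patch: str) -> float:
    """Hypothetical rule-based reward for a generated code patch.

    Returns -1.0 when no usable patch was produced, otherwise a similarity
    score in [0, 1] between the predicted and reference patches.
    """
    if predicted_patch is None or not predicted_patch.strip():
        # Assumed penalty for missing or empty output.
        return -1.0
    matcher = difflib.SequenceMatcher(None, predicted_patch, reference_patch)
    return matcher.ratio()


# Usage with toy patches (made up for this example).
reference = "-    return a - b\n+    return a + b\n"
print(patch_reward(reference, reference))  # 1.0
print(patch_reward(None, reference))       # -1.0
```

A reward like this needs no learned judge or test execution, which is what makes it "rule-based": it can be computed cheaply for every sampled patch during RL training.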

BIG-Bench Extra Hard

In the paper BIG-Bench Extra Hard, researchers from Google DeepMind introduce BBEH, a benchmark designed to assess advanced reasoning capabilities of large language models (LLMs). BBEH builds upon the BIG-Bench Hard (BBH) benchmark by replacing each of the 23 tasks with a novel, more difficult counterpart.

Deep Research Tech Report

In the Deep Research System Card, OpenAI introduces deep research, a new agentic capability that conducts multi-step research on the internet for complex tasks. It leverages reasoning to search, interpret, and analyze text, images, and PDFs, and can also read user-provided files and analyze data using Python code.

Phi-4-Mini Technical Report

In the Phi-4-Mini Technical Report, Microsoft introduces Phi-4-Mini and Phi-4-Multimodal, compact yet capable language and multimodal models. Phi-4-Mini is a 3.8-billion-parameter language model, and Phi-4-Multimodal integrates text, vision, and speech/audio input modalities into a single model using a mixture-of-LoRAs technique.

Magma

In the paper Magma: A Foundation Model for Multimodal AI Agents, Microsoft Research presents Magma, a multimodal AI model that understands and acts on inputs to complete tasks in digital and physical environments. Magma uses Set-of-Mark and Trace-of-Mark techniques during pretraining to enhance spatial-temporal reasoning, enabling strong performance in UI navigation and robotic manipulation tasks.

🤖 AI Tech Releases

DeepSeek Open Source Week

DeepSeek published five open-source releases this week.

GPT-4.5

OpenAI released a preview of GPT-4.5 with new capabilities and a fairly high API price.

Claude 3.7 Sonnet

Anthropic released a new version of its Sonnet model.

Granite 3.2

IBM open sourced the new version of its Granite models, which include reasoning, time series forecasting, and vision capabilities.

OctoTools

Stanford University open sourced OctoTools, a new agentic framework optimized for reasoning and tool usage.

Qodo Embed

Qodo-Embed-1-1.5B is a new 1.5 billion parameter code embedding model that matches OpenAI’s performance.

🛠 Real World AI

New Alexa

Amazon shared some details about how they built the new version of Alexa.

📡 AI Radar

Amazon introduced a new Alexa powered by Anthropic and Nova models.

Bridgetown Research raised $19 million for its AI research agent platform.

You.com launched a new research agent.

Prompt AI raised $6 million for its home AI assistant.

AI sales platform Regie.ai raised $30 million in new funding.

Snowflake announced a $200 million accelerator program with a strong focus on AI.

Unique raised $30 million for its agentic AI platform for financial services.

Google Sheets added major generative AI capabilities.

Robotics startup Nomagic raised $44 million in a new round.

App-building AI platform Lovable raised $15 million in new funding.