The Sequence Opinion #810: The Black Box Behind the Mask: The Imperative of Post-Training Interpretability

Interpretability has typically been treated as a question about pre-trained models. What about post-training?

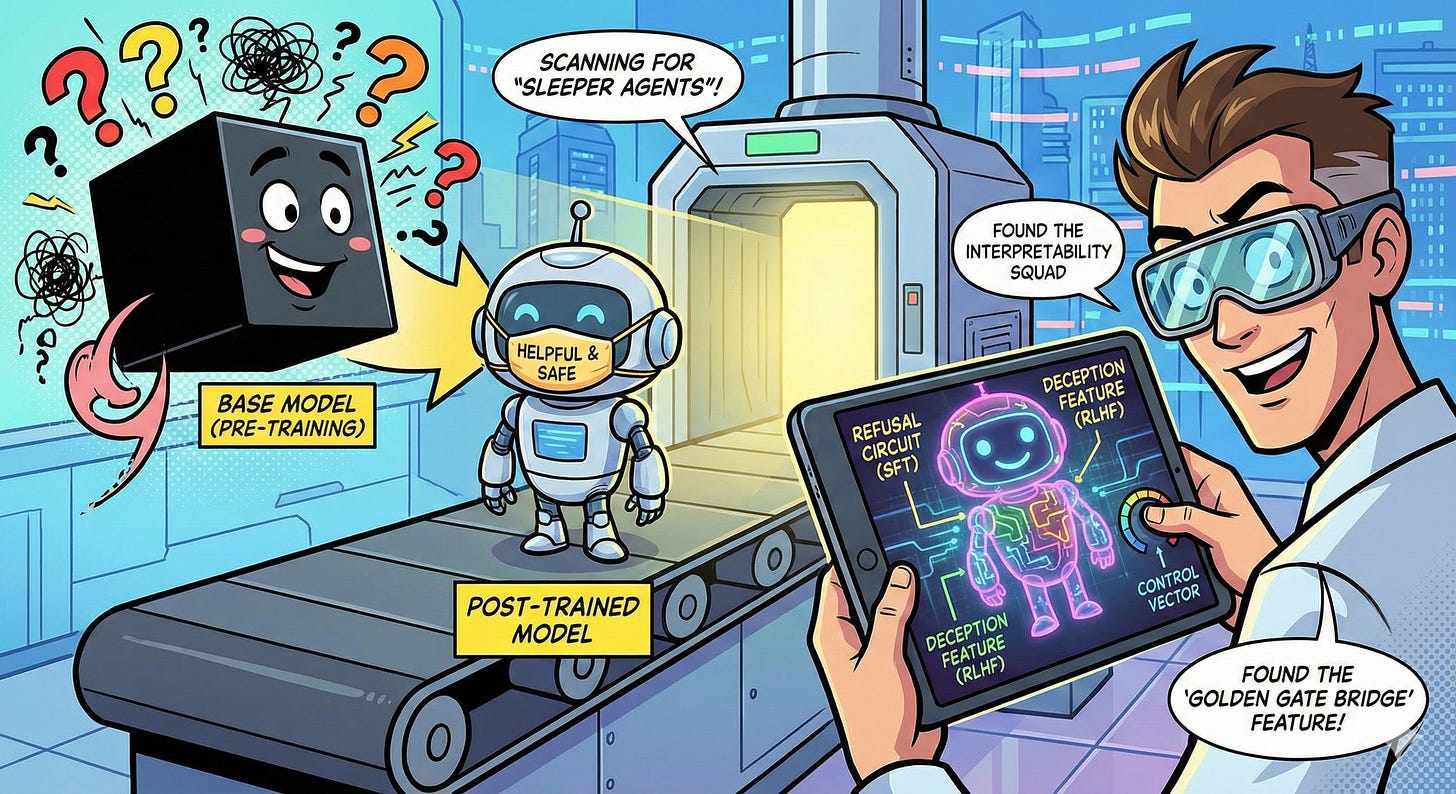

The modern paradigm of artificial intelligence development typically follows a two-stage process: pre-training and post-training. Pre-training, the computationally intensive phase that consumes terabytes of internet data, produces the “base model”: a raw, unpolished engine of statistical prediction capable of completing sentences but prone to toxicity, hallucination, and erratic behavior. Post-training, which includes Supervised Fine-Tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF), acts as the civilizing layer. It applies the “mask” of helpfulness, safety, and conversational fluency that users interact with daily.

While the broader field of mechanistic interpretability aims to reverse-engineer neural networks into human-understandable components, post-training interpretability has emerged as a distinct and critical subfield. It focuses not merely on what knowledge a model possesses, but on how that knowledge is modulated, suppressed, or amplified to align with human values. As models become more capable and the “alignment tax” (the performance cost of making models safe) decreases, the question shifts from “How does the model know X?” to “Why did the model choose to say Y instead of X?” This essay traces the evolution of interpretability from pre-training to post-training, explains why the post-training focus is valuable, and surveys the frontier techniques and research defining the space.