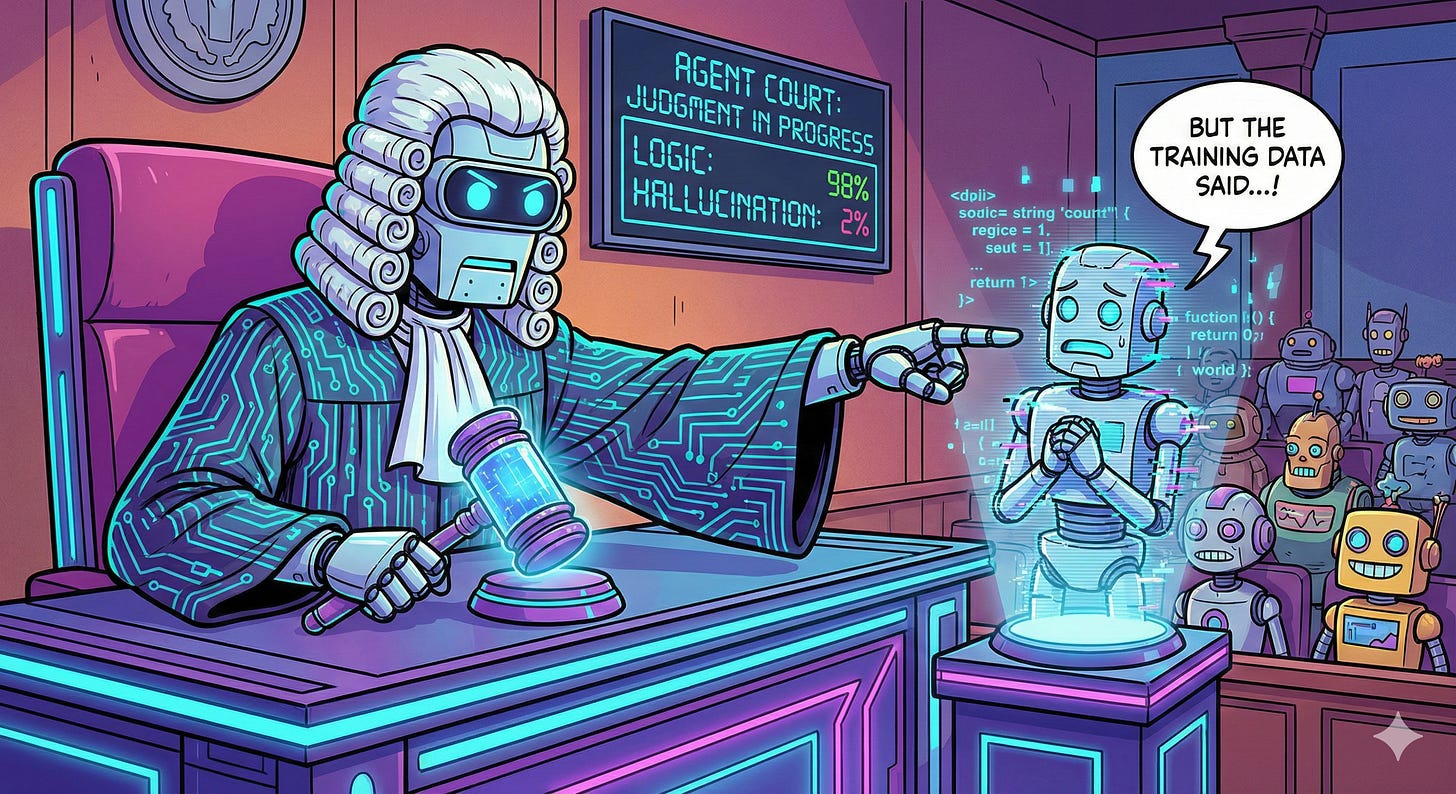

The Sequence Opinion #806: The Emergence of the Agent-as-a-Judge: Why Evals Need a Reasoning Engine

One of the hottest trends in AI evals.

In the early days of the current AI boom—roughly 2023—we had a very “vibe-based” relationship with our models. You’d spend your afternoon poking GPT-4 with various prompts, and if the output looked coherent and followed the instructions, you’d give it a mental thumbs-up and move on. We called this “evaluation,” but in reality, it was just a loose collection of anecdotes.

As we started building more complex systems, the “vibe check” stopped scaling. We needed something more rigorous, more automated, and frankly, more tireless. This led us to the first major transition: LLM-as-a-Judge. The idea was elegant in its simplicity: if these models are so good at generating text, surely they’re good at grading it, too. We started using a “stronger” model (like GPT-4) to grade the outputs of “weaker” models. It worked surprisingly well for a while. It gave us benchmarks like MT-Bench, where the judge model would look at a response and say, “This is an 8/10 because it’s helpful but a bit wordy,” alongside Chatbot Arena, whose crowdsourced human votes became the yardstick for checking those automated grades.

But we are now in 2026, and the ground has shifted again. We’ve realized that a single-pass, “intuitive” judgment from an LLM is no longer enough. We are moving from the era of the Judge as a Critic to the era of the Judge as an Agent.

Recently, I’ve been doing a lot of work on agent-as-a-judge as part of the LayerLens platform, so I wanted to share some ideas about the space.