The Sequence Opinion #798: Inside the Most Important Paper in Agentic Reasoning: Why the Loop is the Logic

Is reasoning a property of the AI model or the loop?

TLDR: Researchers from DeepMind, Amazon, Meta, Yale and other AI labs collaborated on one of the most important publications about agentic reasoning, one that demystifies some of the core assumptions behind current methods.

If you look at the current state of Large Language Models, we are essentially stuck in a “System 1” trap. You feed a prompt into a transformer, the tensors flow through the layers, and out pops a completion. It’s fast, it’s intuitive, but it’s fundamentally “shallow.” It’s what Daniel Kahneman would call fast thinking—pattern matching at scale.

But the real world doesn’t reward pattern matching; it rewards agency.

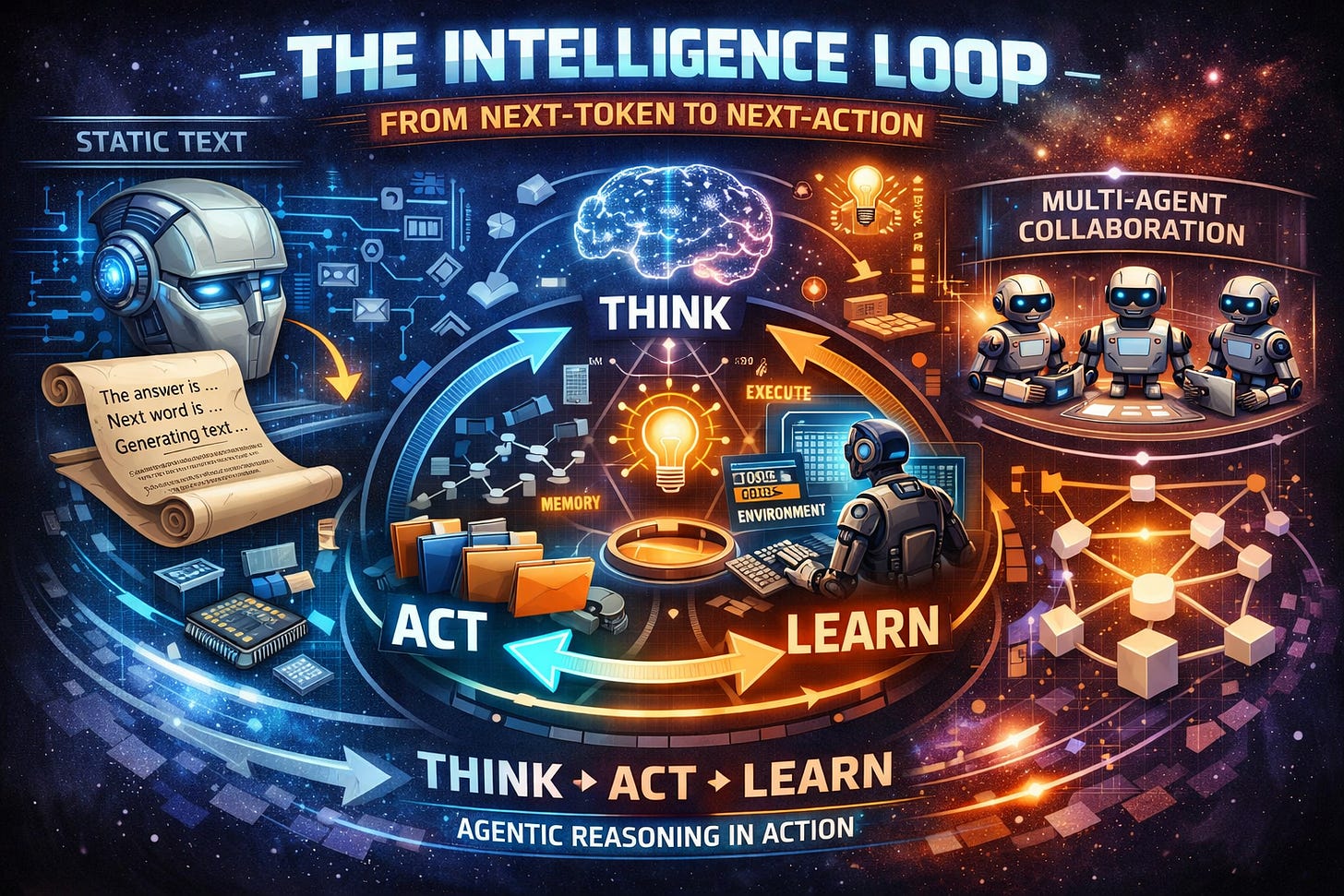

The recent, exhaustive research into agentic reasoning for LLMs provides a rigorous roadmap of where we are. But reading between the lines of the technical frameworks, a more provocative thesis emerges: Reasoning is not a property of the model; it is a property of the loop. We are currently transitioning from the “Model Age,” where we obsessed over parameter counts, to the “Agentic Age,” where we obsess over the architecture of the feedback loop.
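To make the "property of the loop" idea concrete, here is a minimal sketch in Python. It is not taken from the paper: `one_shot`, `agent_loop`, `toy_model`, and `toy_check` are hypothetical names invented for illustration, and the "model" is a deterministic stub rather than a real LLM. The only point is the contrast between a single forward pass and the same model wrapped in a propose, check, revise cycle.

```python
from typing import Callable, Optional, Tuple

# Hypothetical types: a "model" maps a text context to a text answer,
# and a "checker" returns None on success or a feedback string on failure.
Model = Callable[[str], str]
Checker = Callable[[str], Optional[str]]


def one_shot(model: Model, prompt: str) -> str:
    """'System 1' usage: one pass, no feedback."""
    return model(prompt)


def agent_loop(model: Model, check: Checker, prompt: str,
               max_steps: int = 5) -> Tuple[str, int]:
    """Same model, wrapped in a propose -> check -> revise loop."""
    context = prompt
    answer = ""
    for step in range(1, max_steps + 1):
        answer = model(context)        # propose
        feedback = check(answer)       # observe the environment's verdict
        if feedback is None:
            return answer, step        # accepted
        # Revise: fold the failed attempt and the feedback into the context.
        context = f"{context}\nPrevious answer: {answer}\nFeedback: {feedback}"
    return answer, max_steps           # budget exhausted, return last attempt


def toy_model(context: str) -> str:
    """Stub standing in for an LLM: raises its guess once per feedback seen."""
    return str(context.count("Feedback:") * 2)


def toy_check(answer: str) -> Optional[str]:
    """Stub environment: the hidden target is 4 (an arbitrary assumption)."""
    return None if int(answer) == 4 else "too low"


if __name__ == "__main__":
    print(one_shot(toy_model, "Guess the number"))
    print(agent_loop(toy_model, toy_check, "Guess the number"))
```

The stub model is identical in both calls. What changes is whether its output is checked and fed back in, which is the sense in which the loop, and not the parameter count, carries the reasoning in this toy setup.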