The Sequence Opinion #794: The Uncanny Valley of Intent: Why We Need Systems, Not Just Models

Language is too abstract and programming languages are too constrained. We need a new abstraction for building systems.

If you’ve spent any time looking at the raw attention maps of a Transformer or debugging a failed prompt chain at 2 AM, you start to realize something fundamental about the current state of Artificial Intelligence: we are trying to interface with a probabilistic alien intelligence using tools designed for deterministic machines and languages shaped by biological evolution. And frankly, it’s a bit of a mess.

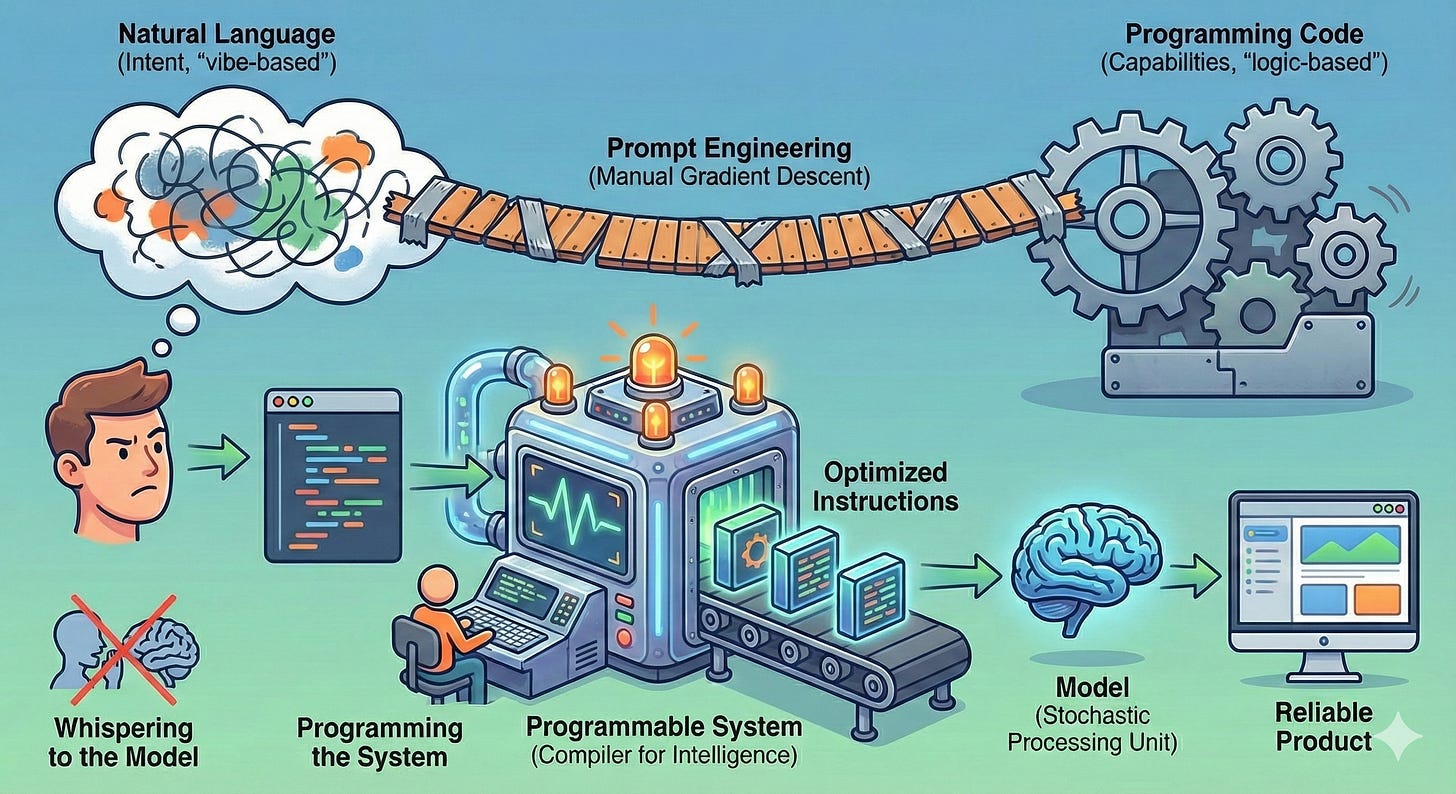

We are currently stuck in a strange local minimum. On one side, we have Natural Language, the interface of biology. It is expressive and high-bandwidth, but incredibly lossy and ambiguous. It’s “vibe-based.” On the other side, we have Programming Languages (Python, C++), the interface of classical silicon. They are precise, constrained, and brittle. They are “logic-based.”

The thesis I want to explore today is that neither of these is the correct abstraction for the future of AI application development. We are missing the middle layer. We have excellent Models (the raw weights), but we are terrible at building Systems (the cognitive architectures) that effectively direct those models.

We need a new kind of substrate. We need to move away from the binary of “talking to the bot” versus “coding the bot” and toward a paradigm of Artificial Programmable Intelligence (API).