The Sequence Opinion #782: The New Gradient: Research Directions That Will Ship in 2026

Research trends that are likely to have an impact on frontier models in 2026.

As the first post of 2026, I wanted to share some of the research trends that I think could be highly influential in this year's frontier-model breakthroughs. These are developments that have already shown considerable promise and seem ready for more ambitious implementations.

If you’ve been watching the loss curves over the last decade, the recipe has been surprisingly simple: take a Transformer, throw a massive pile of internet text at it, crank up the GPU cluster, and wait. And the “bitter lesson” held true—scale was all you needed. We built these incredible “stochastic parrots” that could complete your code, write poetry, and pass the bar exam, all by just really, really wanting to predict the next token.

But if you look at the research papers dropping recently, the vibe has shifted. We are reaching a point where simply "scaling up" the pre-training run yields diminishing returns. We don't just want models that can talk smooth; we want models that can think straight.

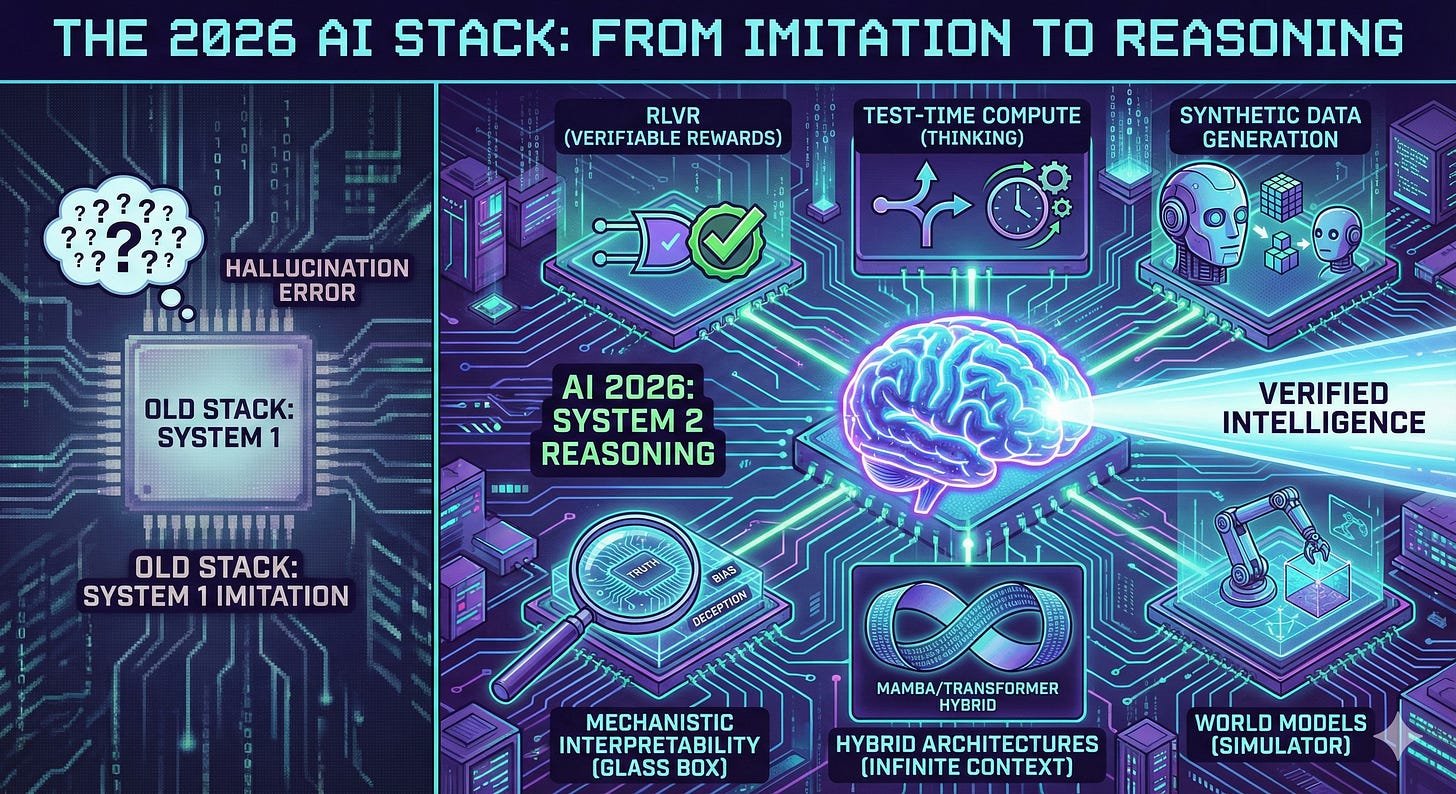

As we look toward 2026, we are moving from the era of Generative AI (making things that look real) to Verifiable AI (making things that are correct). We are effectively trying to give these models a “System 2”—that slow, deliberate, logical part of the brain that humans use when we aren’t just reacting on autopilot.

Here is a look at the new stack that is making this happen.