The Sequence Opinion #710: The Inference Cloud Wars: Speed, Scale, and the Road to Commoditization

Some thoughts about the past, present, and future of AI inference providers.

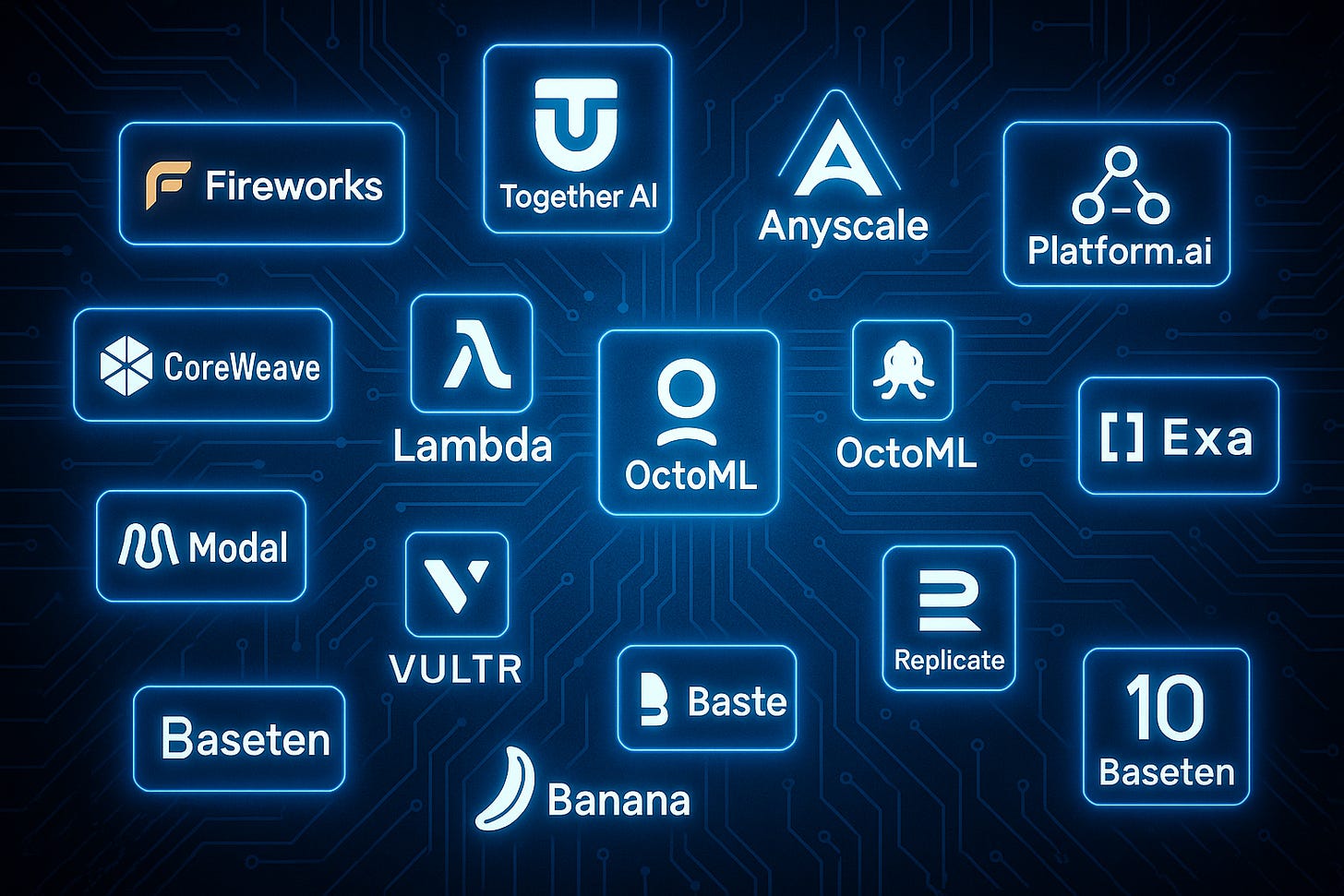

Since the launch of ChatGPT in late 2022, a new class of startups has emerged to meet the exploding demand for running large AI models in real time. These AI inference cloud providers, exemplified by companies like Together AI and Fireworks AI, offer hosted APIs that run generative models (large language models, image generators, and more) on their own optimized GPU infrastructure. Over the past two years they have proliferated rapidly, fueled by surging interest in AI applications and the need for cost-effective, customizable model deployment. This essay explores three things: the main reasons behind their rise; the intense competition they face from hyperscale cloud platforms (AWS, Azure, Google Cloud) and even from GPU vendors like NVIDIA; and a possible future in which only the top players survive by differentiating on performance while the rest struggle to keep up. The race is on to provide the fastest, cheapest, and most flexible AI inference. As the field matures, however, inference may quickly become a commodity, reshaping the landscape of AI infrastructure.