The Most Obvious Secret in AI: Every Tech Giant Will Build Its Own Chips

On Sundays, The Sequence Scope brings you a summary of the most important research papers, technology releases, and VC funding deals in the artificial intelligence space.

Next Week in The Sequence:

Edge 333: Our series about fine-tuning continues with an exploration of the popular parameter-efficient fine-tuning (PEFT) technique. We review the original PEFT paper and the Ray Train framework.

Edge 334: We review 4 new AI compilers released by Microsoft.


📝 Editorial: The Most Obvious Secret in AI: Every Tech Giant Will Build Its Own Chips

NVIDIA reigns as the undisputed king of the AI hardware market, a position that has propelled the company to nearly one trillion dollars in market capitalization. NVIDIA's dominance has created an unwelcome dependency for AI platform providers, often limiting their products. Even tech giants such as Microsoft and Amazon have experienced GPU shortages when pretraining or fine-tuning some of their massive foundation models. This dependency is even more challenging for AI startups, which are forced into multi-year leases of GPU infrastructure as a competitive defensive move.

Reducing reliance on NVIDIA GPUs is a natural step in the evolution of generative AI, and the most obvious path is for tech incumbents to develop their own AI chips. Google is a prime example of this trend. The search giant is ushering in a new generation of its tensor processing unit (TPU) technology, which is widely used in Google Cloud. Just last week, reports surfaced that OpenAI is exploring options to develop its own AI chips. Similarly, Microsoft has been working on its own AI chip for a while, and it is expected to debut next month. Amazon has released its Inferentia AI chip, which is particularly interesting given the company's supply chain expertise. It can even be argued that companies like AMD might become attractive acquisition targets for these tech incumbents seeking a competitive alternative to NVIDIA.

So far, the current generation of foundation models has shown no sign of hitting the limits of scaling laws. This means that, contrary to the beliefs of some skeptics, these models will continue to grow in size for the foreseeable future. Building these larger models will require massive GPU computing power, and NVIDIA is unlikely to remain the only source of it. Within a few months, we can expect every major cloud platform incumbent to start building its own AI chips.

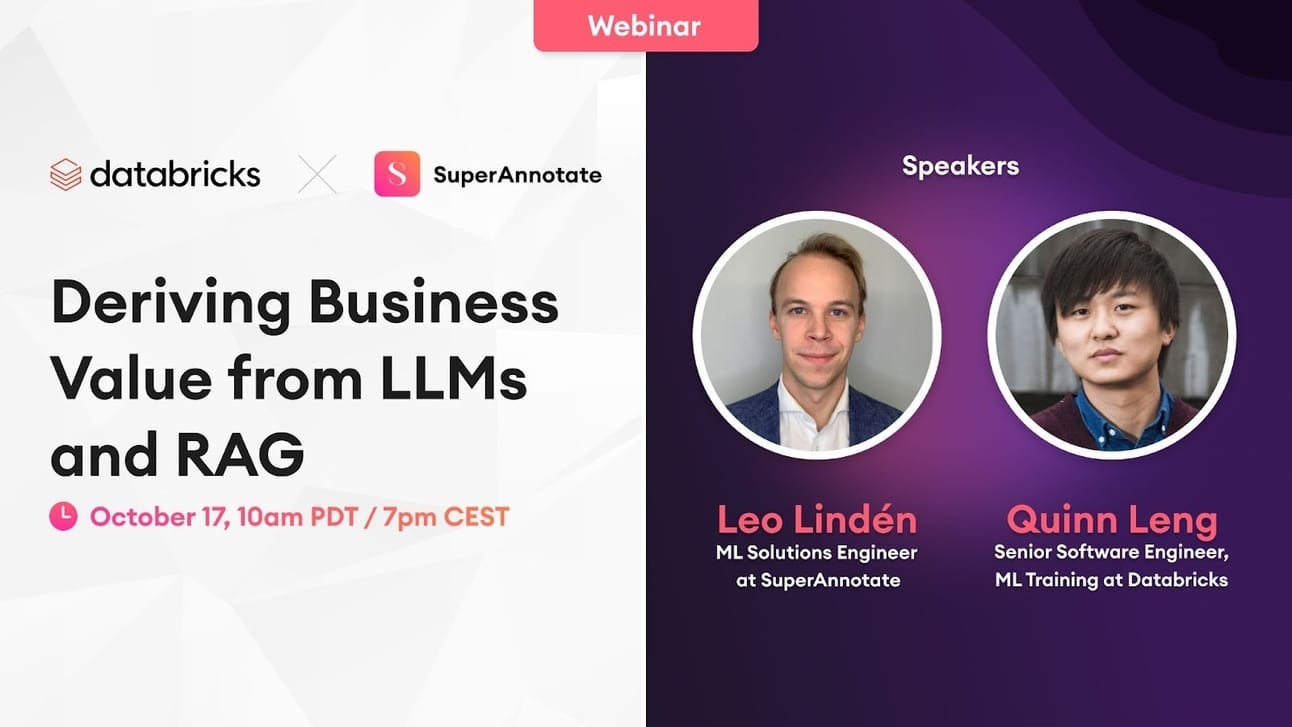

📌 Webinar: Deriving Business Value from LLMs and RAG

Date: October 17th, 10 am PDT / 7 pm CEST

We are excited to support an upcoming webinar with Databricks and SuperAnnotate where we'll learn how to derive business value from Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG). In this webinar, Leo and Quinn will delve into these capabilities and share practical insights on assessing how well these models align with your objectives.

Join us for a knowledge-packed session that offers actionable insights for companies, big or small, looking to leverage the might of LLMs and RAG. Equip yourself with the information to drive strategic AI decisions. Secure your spot today. It’s free (of course).

🔎 ML Research

DALL-E 3

OpenAI published a paper describing some of the technical details behind DALL-E 3. The paper covers elements of the DALL-E 3 readiness process, including expert red teaming, evaluation, and safety —> Read more.

Llama Ecosystem

Meta AI Research published an analysis of the adoption of its Llama 2 model. The write-up also covers future areas of focus for Llama, including multimodality —> Read more.

Scaling Learning for Different Robots

Google DeepMind released Open X-Embodiment, a dataset for robotics training, along with a paper describing it. The paper also discusses a robotics transformer model that can transfer skills across different robot embodiments —> Read more.

Contrastive Learning for Data Representation

Amazon Science published two papers proposing contrastive learning techniques that can improve data representations in ML models. The first paper proposes a training function that creates useful representations while keeping memory and training costs manageable. The second paper proposes geometric constraints on representations that make them more useful for downstream tasks —> Read more.

Text2Reward

Researchers from Microsoft Research, CMU, the University of Hong Kong, and others published a paper discussing Text2Reward, a method that can generate a reinforcement learning reward function from natural language inputs. The method takes a goal described in language as input and generates a dense reward function based on a representation of the environment —> Read more.
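To make the idea of a language-generated dense reward concrete, here is a minimal, hypothetical sketch of the kind of reward function such a method might produce for a goal like "move the gripper to the cube and lift it." It is not taken from the Text2Reward paper: the function name `dense_reward`, the `(x, y, z)` position inputs, and the `target_lift` parameter are all illustrative assumptions. What matters is the shape: a distance term that rewards progress at every step, plus a bounded bonus for lifting.

```python
import math

# Hypothetical example only: the kind of dense reward a language-to-reward
# method might emit for "move the gripper to the cube and lift it".
# Positions are (x, y, z) tuples in meters; all names are illustrative.
def dense_reward(gripper_pos, cube_pos, cube_start_height, target_lift=0.1):
    # Reach term: negative distance, which rises toward 0 as the gripper
    # approaches the cube, so the agent gets signal at every step.
    reach = -math.dist(gripper_pos, cube_pos)
    # Lift term: fraction of the target lift height achieved, clipped to [0, 1].
    lifted = cube_pos[2] - cube_start_height
    lift = max(0.0, min(lifted / target_lift, 1.0))
    return reach + lift
```

Because every step yields a graded signal, the agent gets feedback long before it completes the task, which is what distinguishes a dense reward from a sparse success/failure signal.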

🤖 Cool AI Tech Releases

LMSYS-Chat-1M

LMSYS, the organization behind Vicuna and Chatbot Arena, open-sourced a dataset containing one million real-world conversations with LLMs —> Read more.

PyTorch 2.1

PyTorch released a new version with interesting updates in areas such as tooling, audio generation, and acceleration —> Read more.

🛠 Real World ML

Embeddings at LinkedIn

LinkedIn discusses the embedding architectures used to power its job search capabilities —> Read more.

Meta Contributions to Python’s New Version

Meta outlines some of its recent contributions to Python 3.12 —> Read more.

📡 AI Radar

Anthropic is trying to raise another $2 billion, just days after Amazon agreed to invest $4 billion.

Microsoft is rumored to debut an AI chip in about one month.

Asana launched a new set of generative AI capabilities for productivity.

Meta AI unveiled new generative AI features for advertisers.

VISA launched a $100 million venture vehicle focused on generative AI.

Unitary, a platform for AI-based content moderation, announced a $15 million series A.

AI automation platform Induced AI announced a $2.3 million funding round that includes industry luminaries such as Sam Altman.

AI-powered parking automation platform Metropolis announced that it raised $1.7 billion to acquire SP Plus.

Observe, a data observability platform, announced that it has raised $50 million and unveiled new generative AI capabilities.

AI fine-tuning platform Gradient raised $10 million in new funding.

Yahoo is spinning out Vespa, its big data search engine, as an independent company.

AI hardware company Lemurian Labs announced a $9 million seed round to build more efficient AI computing hardware.

Rabbit, a company trying to design a language-powered operating system, raised $20 million in new funding.