🎙Or Itzary/Superwise About Model Observability and Streamlining Large ML Projects

Getting to know the experience gained by researchers, engineers, and entrepreneurs doing real ML work is an excellent source of insight and inspiration. Share this interview if you like it. No subscription is needed.

👤 Quick bio / Or Itzary

Tell us a bit about yourself. Your background, current role and how did you get started in machine learning?

Or Itzary (OI): I’m Or Itzary, CTO at Superwise, which, as you’ve all probably heard 😊 is one of the leading model observability platforms. I’ve been at Superwise since its founding. I even wrote the majority of our original code for Superwise’s MVP. Today my focus is on streamlining our product and engineering interfaces – in a nutshell, that means helping translate domain knowledge into features and technology.

Data has been an ongoing theme in my life. Already in high school I was working with databases for school projects, so it was always clear to me that engineering was where I’d land. It was really in my master’s (in data science, BTW) that this turned into a proper career. While getting my degree, I started working as a junior data scientist at a professional services company specializing in data science. I’d highly recommend this, as it exposed me to a variety of use cases and models: NLP, recommendation, and time-series anomaly detection in wearables.

Because of where I got my start, I’ve always been a full-cycle data scientist, which means end-to-end conceptualization, development, and deployment of models. One of the things that we saw time and time again was that more and more of our time was being taken up with maintenance and with explaining model behavior. It was a pain point for us, and when we couldn’t find a model monitoring solution to solve our pain, Superwise was born.

🛠 ML Work

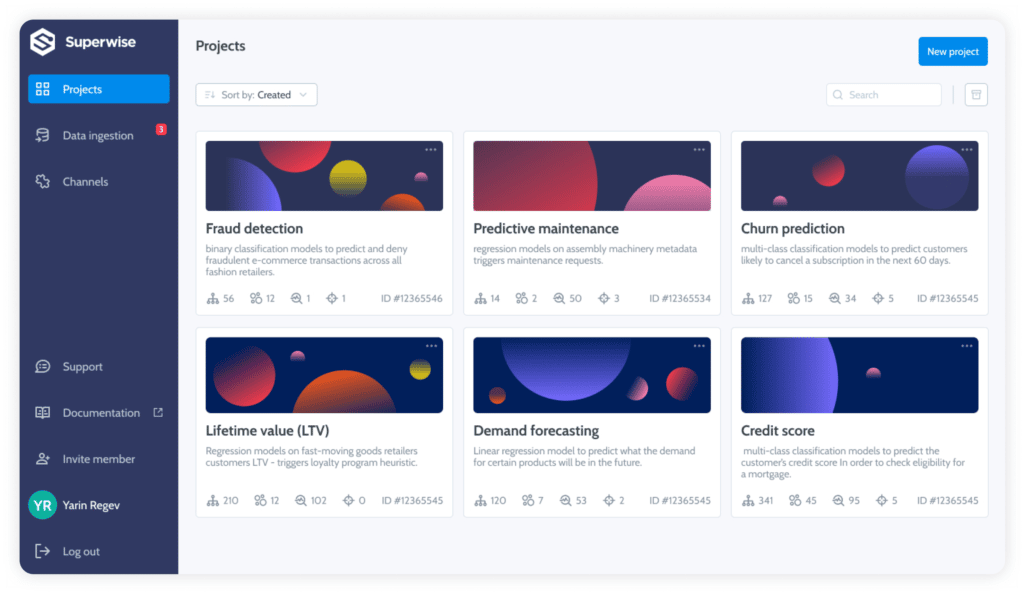

Superwise has rapidly become one of the premier ML observability platforms in the market but you guys recently launched a new effort focused on enabling high-scale model observability. Tell us about the inspiration for Superwise Projects and some of its core capabilities?

OI: If we’ve said it once, we’ve said it a million times: ML scales like nothing else in the engineering world. The more obvious side of this is volume, scaling up vertically in data and, of course, predictions. But on the flip side, you have horizontal scale across a use case: companies are running hundreds or even thousands of fraud detection, churn, or recommendation models, one per customer, which makes model monitoring and observability even more complex. Superwise Projects was inspired by exactly this kind of customer.

Superwise Projects lets you group models based on a common denominator. You can group models around a shared identity, such as a customer or application, to facilitate quick access and visibility into groups, or you can create a functional group around a machine learning use case that will enable cross-model observability and monitoring.

Configurations can be shared and managed collectively to utilize schema, metrics, segments, and policies across the entire project. This will significantly reduce your setup and maintenance time and help you get to value faster. Projects are much more than an accelerated path to efficiency in identifying micro-events such as input drift on the model level. With projects, you can observe and monitor cross-pipeline macro-events like missing values, performance decay for a specific segment across all models, and so forth. It gives ML practitioners the ability to ask and answer questions like never before.

How do I monitor a production pipeline with hundreds of models?

Which anomalies are cross-pipeline issues? Which are bound to a specific model?

How do I integrate, monitor, and manage a group of models all at once?

How do I identify systemic model failures and inefficiencies?
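As a rough illustration of managing a group of models at once, the sketch below shows project-level settings that every model inherits, with optional per-model overrides. It is not the Superwise API: `Policy`, `Project`, `effective_policies`, the metric names, and the thresholds are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    """Hypothetical monitoring rule: alert when `metric` exceeds `threshold`."""
    metric: str
    threshold: float


@dataclass
class Project:
    """Hypothetical container for settings shared by every model in a group."""
    name: str
    policies: dict = field(default_factory=dict)   # policy name -> Policy
    overrides: dict = field(default_factory=dict)  # model id -> {policy name -> Policy}

    def effective_policies(self, model_id):
        """Project-level policies with any per-model overrides applied on top."""
        return {**self.policies, **self.overrides.get(model_id, {})}


# Policies are defined once at the project level (illustrative values).
project = Project(
    name="churn-per-customer",
    policies={
        "input_drift": Policy("input_drift", 0.2),
        "missing_values": Policy("missing_values", 0.05),
    },
)

# One customer's model tolerates more drift; everything else is inherited.
project.overrides["customer_042"] = {"input_drift": Policy("input_drift", 0.35)}

default_policies = project.effective_policies("customer_001")
custom_policies = project.effective_policies("customer_042")
```

The design choice here is that a new model added to the group needs no configuration of its own unless it deviates from the project defaults.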

Most ML projects still operate at a small scale using a handful of models, so it’s fundamentally hard to envision the proper architecture to scale to hundreds of models. What are some of the unique challenges of monitoring ML pipelines at large scale and what are some of the key differences with ML observability practices for single models?

OI: So we actually ran a webinar exactly on the topic of multi-tenancy architectures in ML a few months back, which you’re welcome to view. Something that surprised even us was that when we asked attendees which data science method they use (the options were global model, model per customer, customer segmentation, or transfer learning), 65% answered “model per customer.” That makes you think about how “small” the scale out there really is. From a certain point of view, a company may have a single LTV model because the models share a high degree of schema. But if they’re training and serving the model to 500 different customers, is it low-scale or high-scale? On the pipeline side, regardless of whether they’ve gone with a single, multi, or hybrid tenant approach, the answer is high-scale, and it’s also a high-scale model observability challenge.

In the one model per customer use case, it’s highly important to be able to get observability and alerts on different levels of granularity.

Take model performance: you want to know what the overall performance across all models is. Is there a performance drop on some specific cross-section of models? It helps to think about this in the context of data leakage or concept drift, for example. If you see issues across customers, the potential implications could be much more severe than an issue in a single model.

If you have a data integrity issue in one of your features, you need to know how many models were affected. Understanding the degree of the integrity issue highlights quickly what dependencies were impacted and what went wrong in your pipeline/s.
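To make the "how many models were affected" question concrete, here is a minimal sketch that takes a per-model missing-value rate for one feature and labels the issue as systemic or model-specific. The function names, the 5% limit, and the 50% "systemic" cutoff are assumptions made for illustration, not values from the interview or from any product.

```python
def affected_models(missing_rate_by_model, max_missing_rate=0.05):
    """Return sorted IDs of models whose missing-value rate exceeds the limit."""
    return sorted(
        model_id
        for model_id, rate in missing_rate_by_model.items()
        if rate > max_missing_rate
    )


def classify_scope(missing_rate_by_model, max_missing_rate=0.05, systemic_share=0.5):
    """Label an issue by how large a share of the models it touches."""
    if not missing_rate_by_model:
        return "none", [], 0.0
    affected = affected_models(missing_rate_by_model, max_missing_rate)
    share = len(affected) / len(missing_rate_by_model)
    if not affected:
        scope = "none"
    elif share >= systemic_share:
        scope = "systemic"        # likely an upstream pipeline problem
    else:
        scope = "model-specific"  # likely local to a few models
    return scope, affected, share


# Illustrative missing-value rates for a single feature across four models.
rates = {
    "customer_a": 0.01,
    "customer_b": 0.30,
    "customer_c": 0.28,
    "customer_d": 0.02,
}
scope, affected, share = classify_scope(rates)
```

In this toy input two of four models exceed the limit, so the issue is labeled systemic, which points at a shared dependency rather than at any one model.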

But it’s not just about extending model observability to create a context for a group of models. It’s about centralizing the management of ML monitoring. The potential overhead in configuration and maintenance for 100 models is insane without cross-project functionality. Let’s say it takes a minute to create a segment or a policy – just to set up monitoring across sub-populations for all your models, you’re looking at roughly 3 ½ hours, and that’s assuming that metrics share threshold configurations. Superwise Projects are explicitly built to streamline the high-scale observability and monitoring management of ML models.
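The back-of-the-envelope estimate above can be written out as a small calculation. The interview does not say how many segments or policies per model the figure assumes; the value of two per model below is an illustrative guess that lands in the same range, and `setup_minutes` is a hypothetical helper.

```python
def setup_minutes(n_models, items_per_model, minutes_per_item=1.0):
    """Total configuration time when each item is created separately."""
    return n_models * items_per_model * minutes_per_item


# Assumption: about two segments or policies per model, one minute each.
per_model_total = setup_minutes(n_models=100, items_per_model=2)

# With shared, project-level configuration the items are defined only once.
shared_total = setup_minutes(n_models=1, items_per_model=2)

per_model_hours = per_model_total / 60
```

Under these assumptions, per-model setup costs 200 minutes (a little over three hours), against two minutes when the same items are defined once for the whole group.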

In the last few years, we have seen a proliferation of massively large architectures like transformers that seem incredibly difficult to understand. What are some of the best ML interpretability and observability practices that should be considered when managing large neural networks?

OI: Actually, the advance in our ability to build larger, more complex networks just emphasizes the need for a model observability platform that enables the different stakeholders to understand and monitor ML behavior, given that the box is “blacker.” As such, we see a huge increase in the need to support such use cases and the ability to monitor more complex data types like vectors and embeddings. The challenge is not just a technical challenge regarding the ability to integrate with such networks and measure them, but also how to interpret issues once discovered. Detecting drift or a distribution change in your embedded vector is important but could leave you wondering what’s the real root cause. It’s crucial to be able to understand the context. What has changed in your images that is causing you to see a drift in their embedded vectors? How do you supply such contextual information and detect it automatically? How these insights can be correlated to potential model issues is a challenge we are exploring extensively these days, so stay tuned!
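One simple way to flag a distribution change in embeddings is to compare the centroid of a reference window with the centroid of production data. The sketch below uses cosine distance between centroids; it is a deliberately crude signal picked for illustration, not the method Superwise uses, and all function names are hypothetical. As the answer above notes, a score like this tells you that something moved, not why.

```python
import math


def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def cosine_distance(a, b):
    """1 - cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def embedding_drift(reference, production):
    """Cosine distance between the reference and production centroids."""
    return cosine_distance(centroid(reference), centroid(production))


# Toy 2-D embeddings: the second production batch points in a new direction.
reference = [[1.0, 0.0], [0.9, 0.1]]
production_same = [[0.9, 0.1], [1.0, 0.0]]
production_shifted = [[0.0, 1.0], [0.1, 0.9]]

drift_same = embedding_drift(reference, production_same)
drift_shifted = embedding_drift(reference, production_shifted)
```

A centroid comparison misses changes that keep the mean fixed (for example, a change in spread), so in practice it would be one signal among several.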

ML observability is fundamentally tied to aspects such as bias and fairness. What are some of the key ideas in ML observability methods to ensure fairness in ML solutions?

OI: There are quite a few conflicting takes on fairness and bias. Intuitively, we tend to think about fairness and bias in terms of good and bad, right and wrong. But fairness and bias are business concerns, not absolute truths. Models are, by definition, biased. From a business perspective, there might be ethical, brand, or other concerns you need to measure. One organization could be OK with gender as a contributing attribute to an ML decision-making process, while in a different context, a model will need to be unaware of gender characteristics (including proxy attributes). Also, different fairness metrics could have inherent tradeoffs between them, and the potential for such measures is endless. Given this, there are three key capabilities needed with regard to ensuring fairness.

Out-of-the-box metric observability

Metric customization to the different use cases

Bias and fairness monitoring

This way, companies hit the ground running with predefined common fairness metrics that domain experts can extend and customize by tailoring the fairness metrics to their use cases. Once configured and measured, like any other model-related metric, the challenge is how to monitor it automatically. Specifically, in bias monitoring, you should be able to define sensitive groups and benchmark groups that you wish to compare and detect abnormalities automatically.
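The comparison between a sensitive group and a benchmark group can be sketched with one common fairness metric, the disparate impact ratio (the sensitive group's positive-prediction rate divided by the benchmark group's). The choice of metric, the function names, and the 0.8 alert threshold (a widely used "four-fifths" convention) are assumptions for this example; as discussed above, the right metric and threshold depend on the use case.

```python
def positive_rate(predictions):
    """Share of positive (1) predictions in a list of 0/1 predictions."""
    return sum(predictions) / len(predictions)


def disparate_impact(sensitive_preds, benchmark_preds):
    """Ratio of positive rates: sensitive group over benchmark group."""
    return positive_rate(sensitive_preds) / positive_rate(benchmark_preds)


def fairness_alert(sensitive_preds, benchmark_preds, min_ratio=0.8):
    """Return (alert, ratio); alert is True when the ratio falls below min_ratio."""
    ratio = disparate_impact(sensitive_preds, benchmark_preds)
    return ratio < min_ratio, ratio


# Toy predictions: 2 of 10 positives for the sensitive group, 5 of 10 for the benchmark.
sensitive = [1, 0, 0, 0, 1, 0, 0, 0, 0, 0]
benchmark = [1, 1, 0, 1, 0, 0, 1, 0, 1, 0]

alert, ratio = fairness_alert(sensitive, benchmark)
```

Here the ratio is well below the illustrative threshold, so the check raises an alert. Running the same check on each new batch of predictions turns a one-off fairness audit into ongoing monitoring.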

💥 Miscellaneous – a set of rapid-fire questions

Favorite math paradox?

That’s like choosing a favorite child. You always love your first child, and for me that would be the Birthday Problem, but I equally love Bertrand’s paradox.

What book can you recommend to an aspiring ML engineer?

Is the Turing Test still relevant? Any clever alternatives?

I wouldn’t go as far as to say that the Turing Test is irrelevant. However, a more appropriate question would be how strong an intelligence is as per the Chinese room argument.

Most exciting area of deep learning research at the moment?

I think that I’d have to go with either graph neural networks or online learning.