📝 Guest post: How to set up MLOps at a reasonable scale: tips, tool stacks, and templates from companies that did

In TheSequence Guest Post, our partners explain what ML and AI challenges they help deal with. In this article, neptune.ai discusses how to set up MLOps at a reasonable scale: tips, tool stacks, and templates from companies that did.

We previously wrote about what MLOps at a reasonable scale is and why it matters to you.

But the big question we didn’t talk about there was:

How do reasonable scale companies actually set it up (and how should you do it)?

In this issue, we’ll go over resources to help you build a pragmatic MLOps stack that will work for your use case.

Let’s start with some tips.

MLOps tips

Recently we interviewed a few ML practitioners about setting up MLOps.

Lots of good stuff in there, but there was this one thought we just had to share with you:

“My number 1 tip is that MLOps is not a tool. It is not a product. It describes attempts to automate and simplify the process of building AI-related products and services.

Therefore, spend time defining your process, then find tools and techniques that fit that process.

For example, the process in a bank is wildly different from that of a tech startup. So the resulting MLOps practices and stacks end up being very different too.” – Phil Winder, CEO at Winder Research

So before everything, be pragmatic and think about your use case, your workflow, your needs. Not “industry best practices”.

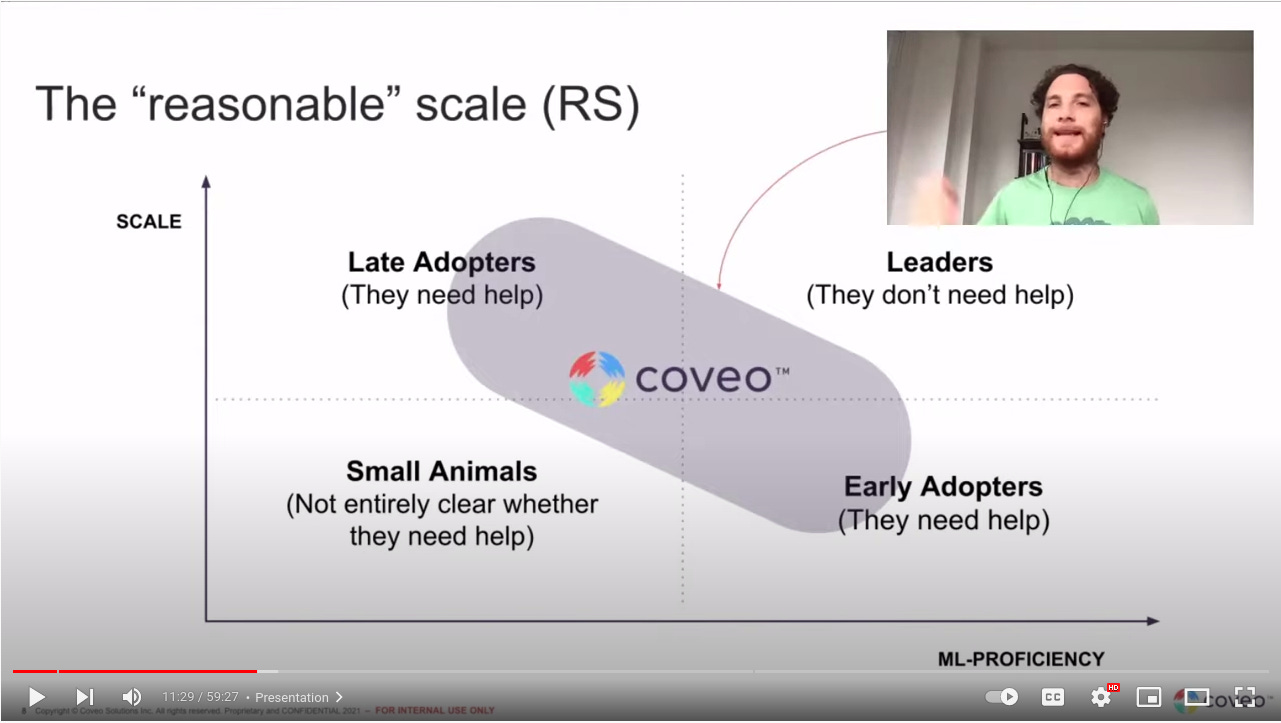

No reasonable scale ML discussion is complete without Jacopo Tagliabue, Head of AI at Coveo, who coined the term. In his pivotal blog post, he suggests a mindset shift that we think is crucial (especially early in your MLOps journey):

“to be ML productive at a reasonable scale, you should invest your time in your core problems (whatever that might be) and buy everything else.”

You can watch him go deep into the subject in this Stanford Sys seminar video.

The third tip we want you to remember comes from Orr Shilon, ML engineering team lead at Lemonade.

In this episode of mlops.community podcast, he talks about platform thinking.

He suggests that his team's focus on automation and on pragmatically leveraging tools wherever possible was key to doing MLOps efficiently.

With this approach, at one point, his team of two ML engineers managed to support the entire data science team of 20+ people. That is some infrastructure leverage.

Now, let’s look at example MLOps stacks!

MLOps tool stacks

There are many tools across many MLOps categories, and it is sometimes hard to understand which tool does what.

From our research into how reasonable scale teams set up their stacks, we found out that:

Pragmatic teams don’t do everything. They focus on what they actually need.

For example, the team over at Continuum Industries needed to get a lot of visibility into testing and evaluation suites of their optimization algorithms.

So they connected Neptune with their GitHub Actions CI/CD pipeline to visualize and compare various test runs.
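To make "compare various test runs" more concrete, here is a minimal sketch of the kind of check a CI job could run after an evaluation suite finishes. It is not Continuum Industries' actual setup and it does not use the Neptune or GitHub Actions APIs; the `EvalRun` class, the `compare_runs` function, the metric names, and the "higher is better" rule are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class EvalRun:
    """One evaluation-suite run, e.g. the output of a single CI job (hypothetical schema)."""
    run_id: str
    metrics: dict[str, float]


def compare_runs(baseline: EvalRun, candidate: EvalRun, tolerance: float = 0.0) -> dict[str, float]:
    """Return deltas (candidate - baseline) for metrics that dropped by more than `tolerance`.

    Assumes higher metric values are better; metrics missing from the
    candidate run are skipped rather than treated as regressions.
    """
    regressions = {}
    for name, base_value in baseline.metrics.items():
        if name not in candidate.metrics:
            continue
        delta = candidate.metrics[name] - base_value
        if delta < -tolerance:
            regressions[name] = delta
    return regressions


# Made-up numbers: a run on the main branch versus a run on a pull request branch.
baseline = EvalRun("main", {"route_score": 0.80, "constraint_pass_rate": 0.95})
candidate = EvalRun("pr-branch", {"route_score": 0.75, "constraint_pass_rate": 0.96})

regressions = compare_runs(baseline, candidate, tolerance=0.01)
for name, delta in regressions.items():
    print(f"{name} regressed by {abs(delta):.3f} compared to {baseline.run_id}")
```

In a real pipeline, the metrics would come from an experiment tracker or from files written by the test job, and a non-empty `regressions` result could be used to fail the build or flag the pull request for review.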

GreenSteam needed something that would work in a hybrid monolith-microservice environment.

Because of their custom deployment needs, they decided to go with Argo pipelines for workflow orchestration and deploy things with FastAPI.

Those teams didn’t solve everything deeply but pinpointed what they needed and did that very well.

If you’d like to see more examples of how teams set up their MLOps, Stephen Oladele, our Developer Advocate, did a great job researching and writing down setups of 8 more companies.

Also, if you want to go deeper, there is a channel in the mlops.community Slack where people share and discuss their MLOps stacks.

So if you’d like to see even more stacks:

Find the #pancake-stacks channel

While you're at it, come say hi in the #neptune-ai channel and ask us about this article, MLOps, or whatever else.

Okay, stacks are great, but you probably want some templates, too.

MLOps templates

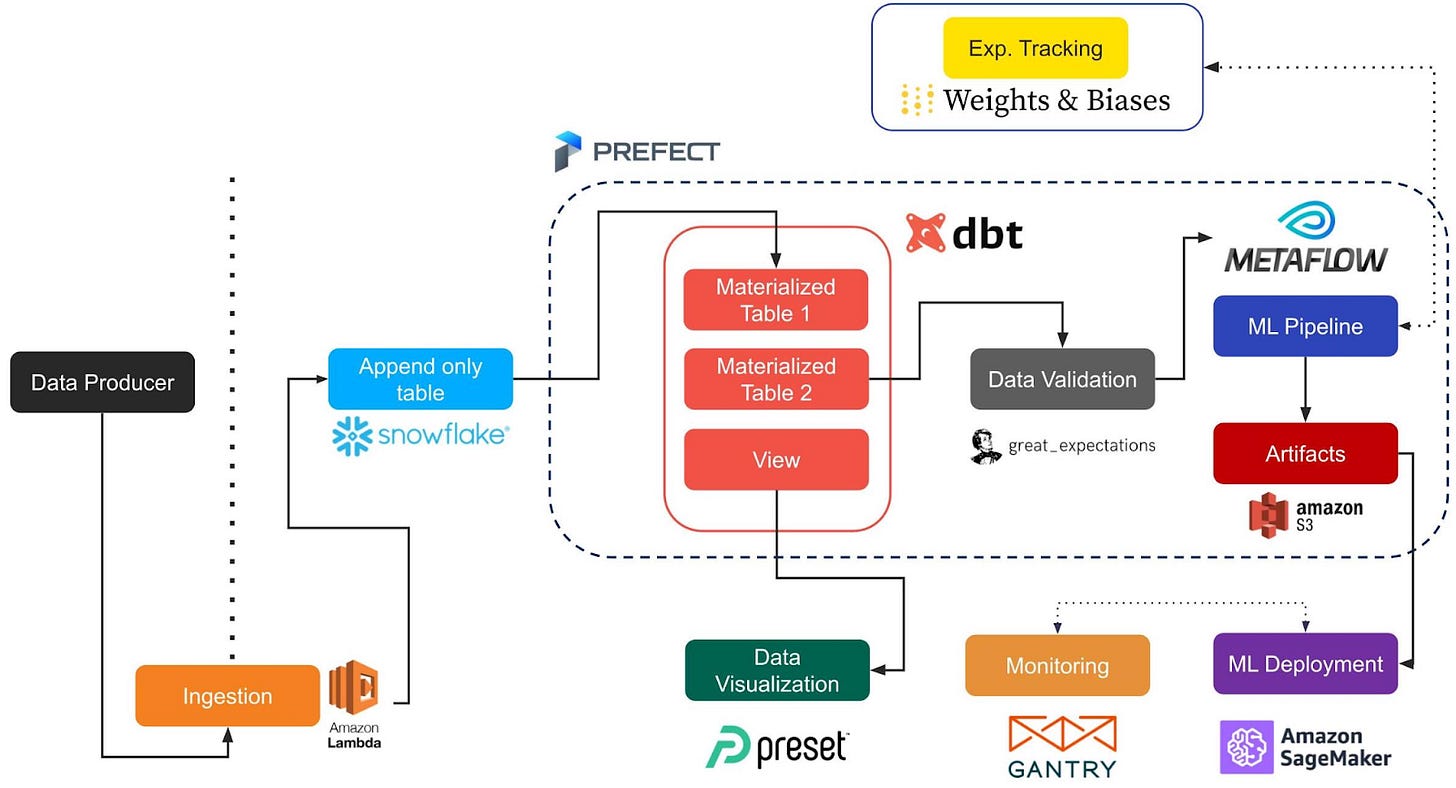

The best reasonable scale MLOps template comes from, you guessed it, Jacopo Tagliabue and collaborators.

In this open-source GitHub repository, they put together an end-to-end (Metaflow-based) implementation of intent prediction and session recommendation.

It shows how to connect the main pillars of MLOps into a working end-to-end system you can build on. It is an excellent starting point that lets you either use the defaults or pick and choose tools for each component.

One more great resource that is worth mentioning is the MLOps Infrastructure Stack article.

In that article, they explain how:

“MLOps must be language-, framework-, platform-, and infrastructure-agnostic practice. MLOps should follow a “convention over configuration” implementation.”

It comes with a nice graphical template from folks over at Valohai.

They explain general considerations, tool categories, and example tool choices for each component. Overall a really good read.
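To illustrate the "convention over configuration" idea from the quote above, here is a minimal sketch in plain Python. It is not taken from the MLOps Infrastructure Stack article or from any Valohai tooling; the `PipelineSettings` class, its fields, and the directory layout are hypothetical. The point is only that sensible defaults follow from a naming convention, and explicit configuration is needed just where a team deviates from it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PipelineSettings:
    """Hypothetical pipeline settings: only `project` is required."""
    project: str
    data_dir: Optional[str] = None    # convention: data/<project>
    model_dir: Optional[str] = None   # convention: models/<project>
    entrypoint: str = "train.py"      # convention: training script name

    def __post_init__(self) -> None:
        # Fill in conventional locations unless the user overrode them.
        if self.data_dir is None:
            self.data_dir = f"data/{self.project}"
        if self.model_dir is None:
            self.model_dir = f"models/{self.project}"


# Following the conventions requires no configuration beyond the project name.
default = PipelineSettings(project="churn")

# Deviating from a convention means configuring only the part that differs.
custom = PipelineSettings(project="churn", model_dir="/mnt/shared/models")

print(default)
print(custom)
```

The same pattern applies regardless of language or framework: the convention lives in one place, and each project states only its exceptions.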

What should you do next?

Okay, now use these resources and go build your MLOps stack!

If you need some help, we’re putting together a resource where we:

Talk about tool choices for each stack component

Share how teams approach thinking about different problems (testing, CI/CD, model deployment, and more)

Show even more MLOps tool stacks and stories from teams setting up MLOps at a reasonable scale

Check it out and let us know what you think in the #neptune-ai channel of the mlops.community Slack.