📝 Guest post: Data Aggregation is Unavoidable! (And Other Big Data Lies)

and the Resulting Ugly Truths!

In TheSequence, we like to experiment with different formats, and today we introduce TheSequence Guest Post. Here we give space to our partners to explain in detail what machine learning (ML) challenges they help deal with.

In this post, Molecula’s team makes three points:

Pre-aggregation strategies are workarounds to deal with data infrastructure limitations.

Pre-aggregating data often creates performance problems and may mask insights that could be found with more granular data.

Eliminating the need for pre-aggregation enables true real-time at scale for analytics, predictions, and more.

Data Aggregation: An Overview

Data has outgrown the legacy technologies we’ve developed to collect, manage, and act on it to create value. Complex and time-consuming workarounds cover for infrastructure limitations, but they are unable to meet the scale and speed required to maintain a competitive business edge.

Data aggregation is the process of gathering raw data and presenting it in a summarized format for statistical analysis. Aggregation, often combined with denormalization, is typically done to improve query response times by limiting the amount of data being processed at one time. Computing these summaries ahead of query time is called ‘pre-aggregation’, and the short-term gains it returns in processing speed come at higher long-term costs.
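To make the trade-off concrete, here is a minimal Python sketch of a pre-aggregation. The event data, field layout, and function names are all hypothetical and chosen only for illustration; they are not taken from any particular product. The rollup answers the one question it was built for quickly, but a question that needs user-level detail has to go back to the raw data.

```python
from collections import defaultdict

# Hypothetical raw events: (day, region, user_id, amount)
RAW_EVENTS = [
    ("2024-01-01", "east", "u1", 20.0),
    ("2024-01-01", "east", "u2", 5.0),
    ("2024-01-01", "west", "u1", 12.5),
    ("2024-01-02", "east", "u3", 7.5),
]


def build_daily_rollup(events):
    """Pre-aggregate: total amount per (day, region), computed before any query runs."""
    rollup = defaultdict(float)
    for day, region, _user, amount in events:
        rollup[(day, region)] += amount
    return dict(rollup)


def revenue_from_rollup(rollup, day, region):
    """Fast lookup against the summary; no scan of the raw events needed."""
    return rollup.get((day, region), 0.0)


def distinct_users_from_raw(events, day):
    """The rollup dropped user_id, so this question needs the granular data."""
    return len({user for d, _region, user, _amount in events if d == day})


rollup = build_daily_rollup(RAW_EVENTS)
print(revenue_from_rollup(rollup, "2024-01-01", "east"))   # 25.0
print(distinct_users_from_raw(RAW_EVENTS, "2024-01-01"))   # 2
```

The summary table is a second copy of the data with the user dimension removed. Any new question about users means either rescanning the raw events or building and maintaining yet another rollup.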

With every pre-aggregation, data is duplicated, and each duplicate is one more output that engineers must build, test, and maintain for every project. Pre-aggregations quickly become a burdensome addition to engineering priorities as data pipelines accumulate, code breaks, and new pre-aggregation requests keep rolling in.

To top it off, pre-aggregated data is stale by definition. It can take minutes, hours, or even days to pre-aggregate raw data. This limits both the relevance of the data and its application to improve business outcomes.
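The staleness problem can be sketched in a few lines of Python. The `RefreshedSummary` class below is a hypothetical stand-in for any summary that is rebuilt on a schedule; it is an illustration under simplified assumptions, not a model of a specific system. Between refreshes, the summary keeps serving the old total while new raw events pile up.

```python
class RefreshedSummary:
    """A total that is only as fresh as its last refresh (hypothetical example)."""

    def __init__(self):
        self.raw = []            # raw event amounts, appended as they arrive
        self.total = 0.0         # pre-aggregated total from the last refresh
        self.rows_included = 0   # how many raw rows that total covers

    def ingest(self, amount):
        # New data lands in the raw store; the summary is not touched.
        self.raw.append(amount)

    def refresh(self):
        # The scheduled batch job: recompute the summary from all raw rows.
        self.total = sum(self.raw)
        self.rows_included = len(self.raw)

    def lag(self):
        # Number of raw rows the summary has not seen yet.
        return len(self.raw) - self.rows_included


summary = RefreshedSummary()
summary.ingest(10.0)
summary.ingest(20.0)
summary.refresh()
summary.ingest(5.0)              # arrives after the refresh

print(summary.total)             # 30.0, the stale pre-aggregated answer
print(sum(summary.raw))          # 35.0, the answer from the raw data
print(summary.lag())             # 1
```

In a real pipeline the refresh itself can take minutes to days, so the gap between the two answers is usually much larger than a single row.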

Examples of pre-aggregated data:

Reporting layers or data marts

Materialized views, cubes, roll-ups, summary tables

Data Science training datasets

For a more in-depth explanation of data pre-aggregation, check out our recent blog.

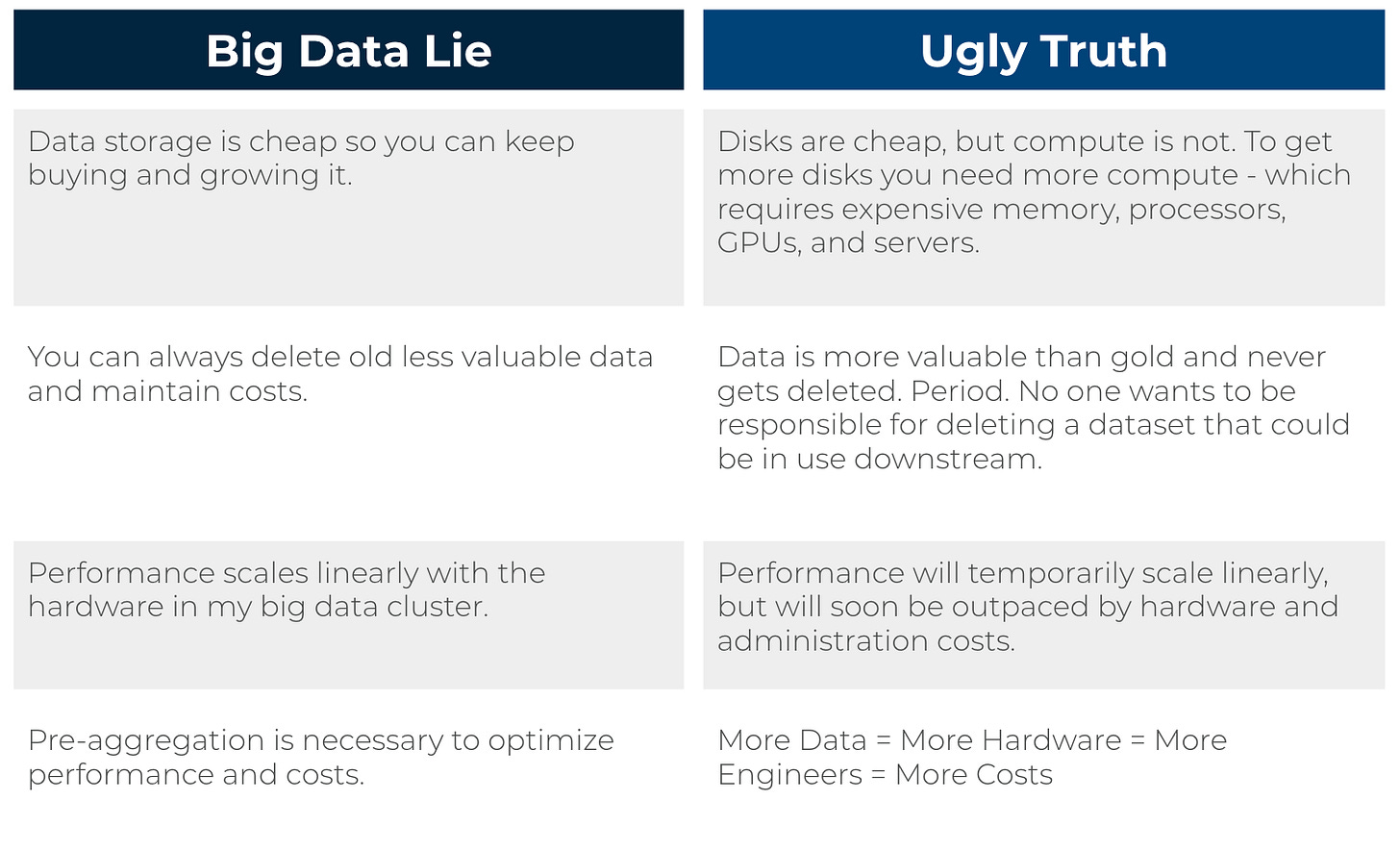

Big Data Lies and the Resulting Ugly Truths

It’s widely accepted that pre-aggregating data to optimize performance is necessary. As we mentioned above, pre-aggregations result in copies. In fact, pre-aggregation is so pervasive today that 88% of data is a copy of the original source. Data storage is cheap, though, so it’s no big deal, right? Wrong. This is the first lie of big data. More data copies mean more disk space, which in turn requires more compute, memory, processors, GPUs, servers, people to manage it all, and so on. Pre-aggregation is a hidden cost that adds complexity and instability to data ecosystems.

Molecula’s FeatureBase eliminates the need for pre-aggregation.

A Better Way with FeatureBase

FeatureBase is purpose-built to deliver secure, fast, continuous access to all your data in a machine-native format. The first and most crucial step in leveraging big data is ensuring all of the data is ready and accessible.

Rather than the conventional approach of moving, copying, and pre-aggregating data, FeatureBase extracts features from each of the underlying data sources and stores them in a centralized access point, making data immediately accessible, actionable, and reusable. FeatureBase maintains up-to-the-millisecond data updates with no time-consuming, costly pre-aggregation necessary.

With FeatureBase, one customer was able to deliver real-time bids on personalized ads by eliminating 36 hours of pre-aggregation and cutting complex query times from 5 minutes to just 41 milliseconds. Check out our on-demand webinar to learn more about how FeatureBase eliminates pre-aggregation and powers true real-time capabilities.