🎙 Mike Del Balso/CEO of Tecton about Operational ML and ML Flywheels

It’s so inspiring to learn from practitioners and thinkers. Getting to know the experience gained by researchers, engineers, and entrepreneurs doing real ML work is an excellent source of insight and inspiration.

👤 Quick bio / Mike Del Balso

Tell us a bit about yourself: your background, your current role, and how you got started in machine learning.

Mike Del Balso (MDB): I am the co-founder and CEO of Tecton, the feature platform for machine learning. Before Tecton, I led product efforts for applied machine learning at Uber and Google.

I spent the last decade building systems to apply ML reliably in the real world at scale. I was the product manager for ML in Google’s ads auction, which requires first-class operational processes (now called MLOps). This same team published the well-known paper Machine Learning: The High Interest Credit Card of Technical Debt. I later helped start the ML team at Uber, where we created Uber’s Michelangelo platform and helped scale ML at Uber to tens of thousands of models in production. This very applied background, solving practical challenges and putting ML into production, motivated the founding of Tecton.

🛠 ML Work

Michelangelo was a huge success at Uber and it’s become the blueprint for teams building machine learning platforms. What problem did it solve and why was it successful?

MDB: Before starting Michelangelo, Uber had very few models in production and most data scientists were focused on analytics. While they were eager to power live applications with machine learning, engineering teams had to build bespoke one-off systems to deploy models into production, which was a huge bottleneck. Operating a production machine learning application is a completely different beast than building prototypes, and it was almost impossible for these projects to be successful with the tools we had then.

Michelangelo introduced an established path to deploying models into production. Across the company, it gave teams the tools to build, deploy and maintain operational ML applications. We were fortunate to recognize that building and managing data pipelines was the most challenging part of the ML workflow, and that became the major focus for the platform.

While we knew the pain was real, we didn’t expect it to blow up in the way it did. By the time I left Uber, Michelangelo was powering thousands of operational ML applications. ML teams from other tech companies started adopting our design for their own ML platforms, and that’s when we knew there was an opportunity to solve this problem outside of Uber.

You’ve spoken about the complexity of building operational machine learning applications. What exactly is operational machine learning?

MDB: There are two different types of applied machine learning projects: analytical and operational. Analytical machine learning is when we use the results of a model to improve human decision-making. It usually powers reports and dashboards and is consumed by internal business users. Some examples of analytical ML are forecasting sales for next quarter, scoring incoming leads, or calculating a customer’s lifetime value.

Operational machine learning is when an application uses ML to automatically make decisions that impact the business or customer experience in real time. These are fully autonomous systems that must run in production. Some of the common use cases are recommendations, detecting fraudulent transactions as they occur, underwriting risk in real time, or predicting ETAs for a delivery service.

All the leading tech companies have invested aggressively in operational machine learning, as it is driving the competitive frontier for many consumer experiences. My co-founder wrote an article on exactly this topic: What is Operational ML.

Talking about leading tech companies, you have seen very sophisticated machine learning teams at Uber and Google. Now, you help many other companies scale their machine learning efforts. What differentiates teams that are successful with ML from those who are not?

MDB: In the FAANGs of the world, applied ML teams are not focused on building individual models. Instead, they’re focused on designing and managing the ML application as a whole, with all the complexity of its related data flows and feedback loops. This is a very powerful paradigm shift, and we call this the ML Flywheel. Great ML applications require great ML flywheels, and the best ML teams have become great at building and managing these flywheels.

An ML flywheel describes the full data flow loop of operational ML applications. It has four main steps:

First, data needs to be transformed into features that allow models to learn something about the world.

Those features are then served to a live ML model to score predictions and decide on an action.

Once we observe the result of an action, we must collect those labels and log the predictions we made.

That data must then be organized and stored in a data warehouse. From there, we can begin extracting better features and repeat the cycle.
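The four steps above can be sketched as a toy loop. This is only an illustration under stated assumptions: the `Warehouse` class, the function names, the fraud-style threshold rule, and the sample transactions are all hypothetical and are not taken from Michelangelo or Tecton.

```python
from dataclasses import dataclass, field


@dataclass
class Warehouse:
    """Hypothetical stand-in for a data warehouse: raw events plus logged outcomes."""
    raw_events: list = field(default_factory=list)
    logged: list = field(default_factory=list)


def build_features(events):
    # Step 1: transform raw data into features (per-user count and mean amount).
    by_user = {}
    for e in events:
        by_user.setdefault(e["user"], []).append(e["amount"])
    return {
        user: {"txn_count": len(amounts), "avg_amount": sum(amounts) / len(amounts)}
        for user, amounts in by_user.items()
    }


def decide(features, amount, threshold=3.0):
    # Step 2: serve features to a "model" (a toy rule here) that scores and picks an action.
    score = amount / features["avg_amount"]
    return score, ("review" if score > threshold else "approve")


def log_outcome(wh, user, amount, score, action, label):
    # Step 3: log the prediction together with the label observed afterwards.
    wh.logged.append(
        {"user": user, "amount": amount, "score": score, "action": action, "label": label}
    )
    # Step 4: store the new event so the next round of feature building can use it.
    wh.raw_events.append({"user": user, "amount": amount})


# Made-up sample data for one pass around the loop.
wh = Warehouse(raw_events=[{"user": "a", "amount": 10.0}, {"user": "a", "amount": 30.0}])
features = build_features(wh.raw_events)
score, action = decide(features["a"], amount=100.0)
log_outcome(wh, "a", 100.0, score, action, label="fraud")
features = build_features(wh.raw_events)  # the cycle repeats with fresher data
```

Even in this toy form, every step reads from or writes to the same store, which is the point of having a connected loop rather than four separate systems.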

The teams that are most successful with ML recognize that the complexity of ML is not in ideation or initial development, but in productionizing and operating these breathing, living systems. They are concerned with the entire ML flywheel. This means having a fully-connected data loop with a unified data model and clear ownership throughout.

Newer-generation ML infrastructure at the FAANGs is focused on making ML flywheel management possible and easy. This is the future of widely used operational ML. At Tecton, we’re helping teams build ML flywheels for their use cases. Built on top of the most advanced feature store in the industry, Tecton has an amazing foundation on which to support the full ML flywheel.

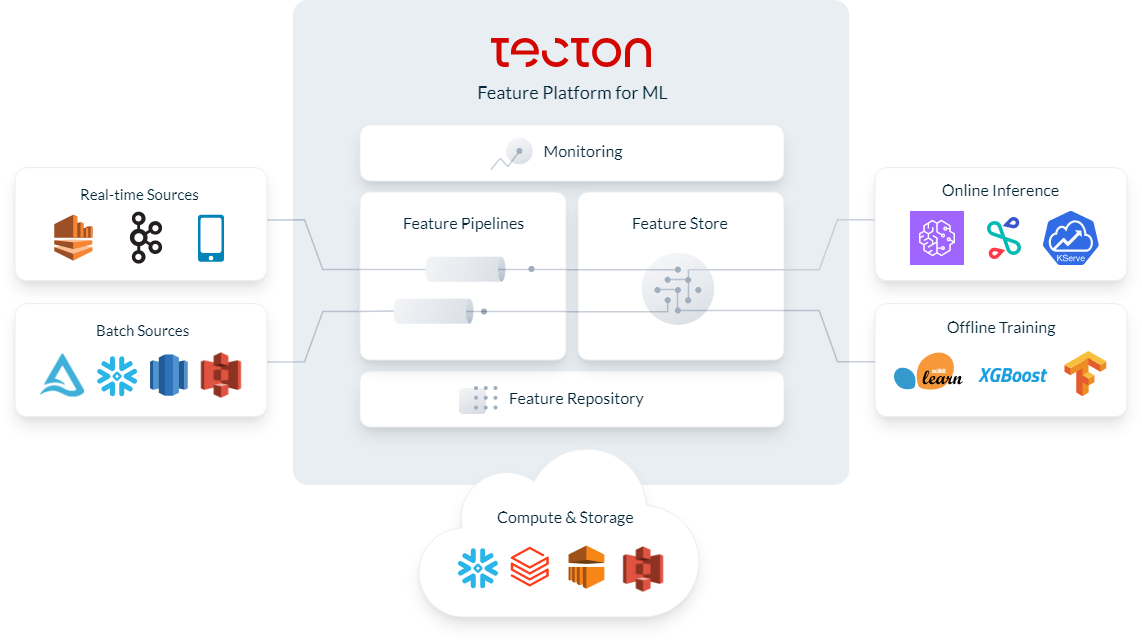

Recently, we covered (Edge#186) how you’re moving from a feature store product to building a complete feature platform. Is that part of this vision?

MDB: Absolutely. At Uber, we originally invented the feature store to manage the data flows in the decide section of the flywheel. Feature stores allow teams to discover features that have already been built, and to serve those features at scale to live models. We needed to expand from there because a lot of work was still needed to continuously process raw data into features.

Tecton began as a feature store but has now become a complete feature platform. It still includes a feature store as part of the product, but it now covers the learn section of the flywheel as well. We allow data scientists to define features in SQL or Python, and Tecton builds and continuously operates data pipelines in the background. Tecton is the only feature platform out there that executes transformations and orchestrates data pipelines to operate and maintain these living, breathing systems. And we’re actively building out the bottom half of the loop to log production events and seamlessly feed them back into the development process that happens at the top half of the loop.
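To make the idea of defining a feature in Python and then discovering and serving it by name more concrete, here is a minimal sketch. It is not Tecton's or Feast's API: the `feature` decorator, `FEATURE_REGISTRY`, `get_features`, and the sample events are all hypothetical, and a real platform would run such transformations as managed pipelines rather than in-process.

```python
from datetime import datetime, timedelta

FEATURE_REGISTRY = {}  # hypothetical in-memory registry: feature name -> transformation


def feature(name):
    """Register a transformation under a discoverable name (illustrative only)."""
    def wrap(fn):
        FEATURE_REGISTRY[name] = fn
        return fn
    return wrap


@feature("txn_count_7d")
def txn_count_7d(events, user, now):
    # Count the user's transactions in the 7 days up to and including `now`.
    start = now - timedelta(days=7)
    return sum(1 for e in events if e["user"] == user and start <= e["ts"] <= now)


def get_features(names, events, user, now):
    # Serve a feature vector by looking up each registered transformation by name.
    return {n: FEATURE_REGISTRY[n](events, user, now) for n in names}


# Made-up sample events.
now = datetime(2022, 6, 1)
events = [
    {"user": "a", "ts": datetime(2022, 5, 30)},
    {"user": "a", "ts": datetime(2022, 5, 1)},  # outside the 7-day window
    {"user": "b", "ts": datetime(2022, 5, 31)},
]
vector = get_features(["txn_count_7d"], events, "a", now)
```

The registry is what makes features discoverable and reusable across teams; the continuous processing of raw data into fresh feature values is the part this sketch leaves out.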

Teams that are serious about building operational machine learning are struggling with these problems and quickly adopting feature platforms. We now work with two of the top three insurance companies in the US, many of the largest banks and fintechs, leading gaming companies, popular e-commerce platforms, etc. We also work with many startups that are building innovative products centered around machine learning. Operational ML is now a topic across many industries and feature platforms like Tecton are accelerating its adoption.

Whether you’re trying to prevent fraud, embed recommendations on your website, or something unique to your business, if you’re powering your product with ML, reach out to us at www.tecton.ai. Our real-time feature infrastructure helps teams avoid months or years of engineering work that’s required to support operational ML applications.

If you just want to get your hands dirty on a feature store to learn the concepts and understand the basics, check out Feast, the leading open source feature store. Tecton is fully compatible with Feast, so any project built with Feast can be upgraded to get the managed experience of Tecton.

💥 Miscellaneous – a set of rapid-fire questions

Is the Turing Test still relevant? Any clever alternatives?

MDB: The Turing test was beaten a long time ago! It just had underwhelming reactions. The next test is if AIs can speed up digital transformation efforts :)

Favorite math paradox?

MDB: It has been said before, but for people using data to guide their product changes, Simpson’s Paradox should be top of mind. It’s extremely practical and a good reminder to think through experiment results in depth. It also underlies some of my favorite interview questions.
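For readers who have not met Simpson’s Paradox, a small worked example may help. The numbers below are invented for illustration (they are not results from any real experiment), and the variant and segment names are assumptions. Variant A converts better within each segment, yet variant B looks better once the segments are pooled, because the two variants received very different mixes of traffic.

```python
# Illustrative (conversions, visitors) per variant and segment; not real data.
results = {
    "A": {"mobile": (81, 87), "desktop": (192, 263)},
    "B": {"mobile": (234, 270), "desktop": (55, 80)},
}


def rate(conversions, visitors):
    return conversions / visitors


def overall(variant):
    # Pool all segments for a variant before computing the conversion rate.
    conversions = sum(c for c, _ in results[variant].values())
    visitors = sum(v for _, v in results[variant].values())
    return conversions / visitors


for segment in ("mobile", "desktop"):
    a = rate(*results["A"][segment])
    b = rate(*results["B"][segment])
    print(f"{segment}: A={a:.3f} B={b:.3f}")

print(f"overall: A={overall('A'):.3f} B={overall('B'):.3f}")
```

The practical takeaway is to check how traffic was split across segments before trusting an aggregate comparison.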

Any book you would recommend to aspiring data scientists?

MDB: All of Statistics is my favorite 101 + reference book. It gives very applied, but rock-solid, first-principles intuition about data and statistics, which are the core of ML. A colleague on my ML team at Google lent me this, and I read every page and did every exercise. It's dense, but you won't regret any ounce of effort you put into it. You can’t be a great ML engineer unless you get the basics!