📝 Guest post: A Guide to Leveraging Active Learning for Data Labeling

In TheSequence Guest Post, our partners explain in detail what machine learning (ML) challenges they help deal with. In this post, Labelbox’s team offers you a complete guide on putting active learning strategies into practice.

What is Active Learning?

In short, active learning is the strategic selection of which data to label so that limited labeling resources produce the largest possible improvement in model performance.

Teams spend up to 80% of their time building and maintaining training data infrastructure, including cleansing, transforming, and labeling data in preparation for model training. Without an active learning strategy in place, each new batch of labeled data tends to contribute less to model performance than the last. Active learning can help ease this bottleneck and increase your training data’s ROI.

First, watch this video to understand why active learning is a valuable concept to help teams save time and resources on their path to production AI.

What will active learning help you accomplish?

Label less data

Better understand model performance and edge cases

Find the right data faster

Label less data

With state-of-the-art neural networks available for free and effective AI computation speed doubling every 3-6 months, training data is what fuels an ML team’s competitive advantage. In this data-centric approach, it’s essential for teams to focus on the right data, not just more data.

ML teams spend most of their time and resources managing and labeling data, not building AI systems. Often, teams continue to label data and grow the size of a dataset despite no additional gains in performance.

In fact, depending on the difficulty of the task and the sampling method used, most teams could label 10% to 50% less data and still achieve similar levels of accuracy.

Active learning is the art and science of identifying which data will most dramatically improve model performance and using that insight to prioritize data for labeling.
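As a minimal sketch of one common prioritization strategy, uncertainty sampling, the snippet below ranks unlabeled items by the entropy of a model’s predicted class probabilities and returns the most uncertain ones under a fixed labeling budget. The `probs` dictionary and the `select_for_labeling` helper are hypothetical; in practice the probabilities would come from your current model’s predictions on the unlabeled pool.

```python
import math

def entropy(probabilities):
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probabilities if p > 0)

def select_for_labeling(probs, budget):
    """Return the IDs of the `budget` items whose predictions are most uncertain.

    probs: dict mapping item ID -> list of predicted class probabilities
    (a hypothetical input format; any model that outputs probabilities works).
    """
    ranked = sorted(probs, key=lambda item_id: entropy(probs[item_id]), reverse=True)
    return ranked[:budget]

# Hypothetical model outputs for four unlabeled images over three classes.
probs = {
    "img_001": [0.98, 0.01, 0.01],  # confident: little to learn from labeling it
    "img_002": [0.40, 0.35, 0.25],  # uncertain: a good labeling candidate
    "img_003": [0.70, 0.20, 0.10],
    "img_004": [0.34, 0.33, 0.33],  # nearly uniform: the most uncertain
}

print(select_for_labeling(probs, budget=2))  # → ['img_004', 'img_002']
```

Entropy is only one choice of uncertainty measure. Least confidence (one minus the top probability) and margin (the gap between the top two probabilities) are common alternatives that can be swapped into the `key` function.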

Better understand your model’s performance and edge cases

To find what data will drive the most meaningful improvements, you need to clearly understand where your model performs well and where it needs more attention to accurately address edge cases. This understanding will fuel your iteration cycle and help ensure each new batch of training data remains valuable.

Most ML teams use a patchwork of tools and techniques to measure performance, such as:

Scattered notebooks measuring loss, recall, or other metrics

Countless confusion matrices

Manual calculations shared in email and chat

Active learning strategies are based on uncovering the type of data that will improve performance most dramatically. To do this, it’s essential to understand why your model performs the way it does and to diagnose errors quickly. Teams need to:

Track performance metrics in one place:

A single system of record for tracking model performance between iterative model runs and across slices of data will let cross-functional teams better monitor progress.

Visualize model predictions alongside ground truth:

Teams can get a more detailed understanding of how their model interacts with data by quickly looking at model predictions alongside human-generated ground truth labels.

Measure performance metrics in one place with Model Diagnostics.
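To make the idea of a single system of record concrete, here is a minimal sketch that computes accuracy per data slice for several model runs from one shared table of predictions and ground truth, then lists the disagreements worth reviewing visually. The record layout (`run`, `asset`, `slice`, `prediction`, `label`) and the sample values are hypothetical; a real setup would also track metrics such as recall or IoU.

```python
from collections import defaultdict

# Hypothetical records: one row per (model run, asset), with the data slice the
# asset belongs to, the model's prediction, and the human-generated ground truth.
records = [
    {"run": "v1", "asset": "a1", "slice": "daytime", "prediction": "bicycle", "label": "bicycle"},
    {"run": "v1", "asset": "a2", "slice": "night", "prediction": "bicycle", "label": "unicycle"},
    {"run": "v1", "asset": "a3", "slice": "night", "prediction": "bicycle", "label": "bicycle"},
    {"run": "v2", "asset": "a1", "slice": "daytime", "prediction": "bicycle", "label": "bicycle"},
    {"run": "v2", "asset": "a2", "slice": "night", "prediction": "unicycle", "label": "unicycle"},
    {"run": "v2", "asset": "a3", "slice": "night", "prediction": "bicycle", "label": "bicycle"},
]

def accuracy_by_run_and_slice(rows):
    """Return {(run, slice): accuracy} computed from prediction/label pairs."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for row in rows:
        key = (row["run"], row["slice"])
        total[key] += 1
        correct[key] += row["prediction"] == row["label"]  # True counts as 1
    return {key: correct[key] / total[key] for key in total}

def disagreements(rows, run):
    """Assets where the given run's prediction differs from the ground truth."""
    return [r["asset"] for r in rows if r["run"] == run and r["prediction"] != r["label"]]

for (run, data_slice), acc in sorted(accuracy_by_run_and_slice(records).items()):
    print(f"{run} {data_slice:8s} accuracy={acc:.2f}")
print("v1 errors to review:", disagreements(records, "v1"))
```

Keeping every run in one table makes it easy to see, for example, that the hypothetical `v2` run fixed the `night` slice without regressing on `daytime`.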

These techniques will help you understand and diagnose model errors and position you to make more impactful improvements to your training data. Once this foundation is in place, you can start identifying and eliminating edge cases. (Read more: Stop labeling data blindly)

In data-centric AI, it’s imperative to train your model to perform well on edge cases. You can train a model on common classes relatively quickly because there’s an abundance of such data available for labeling, and these classes will show up regularly if you use random sampling to gather data. To surface edge cases, teams need to employ more sophisticated techniques for finding and prioritizing data for labeling.
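A quick back-of-the-envelope calculation shows why random sampling struggles with edge cases. If a class makes up a fraction p of the data, a random batch of n items contains n·p examples of it on average, and the chance of seeing at least one is 1 - (1 - p)^n, assuming independent draws. The class frequency below is illustrative, not measured.

```python
def rare_class_stats(p, n):
    """Expected count, and probability of at least one hit, for a class of
    frequency p in a random sample of n items (independent draws assumed)."""
    expected = n * p
    prob_at_least_one = 1 - (1 - p) ** n
    return expected, prob_at_least_one

# Illustrative numbers: an edge case (e.g. unicycles) making up 0.1% of the data.
for n in (100, 1_000, 10_000):
    expected, prob = rare_class_stats(p=0.001, n=n)
    print(f"batch={n:>6}: expected examples={expected:.1f}, P(at least one)={prob:.3f}")
```

Under these assumptions, a random batch of 1,000 items yields only about one example of the edge case on average and misses it entirely roughly 37% of the time.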

Model embeddings are a powerful tool to help understand your data and uncover patterns and outliers. (Learn more about embeddings.) With embeddings, you can:

Visualize your data in 2D space

Cluster similar assets

Identify visually dissimilar outliers
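Embedding projectors typically reduce high-dimensional embeddings to two dimensions with a method such as PCA, t-SNE, or UMAP. The sketch below uses PCA computed with NumPy’s SVD because it needs no extra dependencies; the random 128-dimensional vectors stand in for real model embeddings and are purely hypothetical.

```python
import numpy as np

def project_to_2d(embeddings):
    """Project an (n_items, dim) array onto its top two principal components."""
    centered = embeddings - embeddings.mean(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T  # shape: (n_items, 2)

rng = np.random.default_rng(0)
# Hypothetical embeddings: two well-separated groups of 128-dim vectors.
group_a = rng.normal(loc=0.0, scale=1.0, size=(50, 128))
group_b = rng.normal(loc=5.0, scale=1.0, size=(10, 128))
embeddings = np.vstack([group_a, group_b])

coords = project_to_2d(embeddings)
print(coords.shape)  # (60, 2)
```

The resulting `coords` can be passed to any scatter-plot tool; nonlinear methods such as t-SNE or UMAP often separate clusters more clearly but are slower and less stable across runs.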

When looking at a selection of data in an embedding projector, visually similar examples will be clustered together. The example below illustrates how a collection of unicycles would show up as edge cases in a dataset mostly focused on bicycles. Using the embedding projector view, you can quickly identify edge cases to prioritize for future labeling iterations.

The embedding projector clusters visually similar data to help identify outliers.
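One simple way to flag outliers like the unicycles is to score each item by its average distance to its k nearest neighbors in embedding space: items in dense clusters score low, isolated items score high. This is a minimal sketch of that k-nearest-neighbor heuristic, not necessarily how any particular projector works; the toy 2D points and `k=2` are hypothetical.

```python
import math

def knn_outlier_scores(points, k=2):
    """Mean Euclidean distance from each point to its k nearest other points."""
    scores = []
    for i, p in enumerate(points):
        distances = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(sum(distances[:k]) / k)
    return scores

# Hypothetical 2D embeddings: a tight cluster of "bicycles" plus two "unicycles".
points = [
    (0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (0.1, 0.0), (0.0, 0.1),  # bicycles
    (3.0, 3.0), (3.2, 2.9),                                      # unicycles
]

scores = knn_outlier_scores(points, k=2)
most_isolated = sorted(range(len(points)), key=lambda i: scores[i], reverse=True)[:2]
print(most_isolated)
```

Choose k larger than the size of the outlier groups you expect; otherwise a small group of similar outliers can look like a dense cluster of its own.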

Find the right data faster

Once you’ve diagnosed model errors and identified low-performing edge cases, you can start prioritizing high-value data to label. One of the biggest challenges of active learning at this point is sifting through the broader body of unstructured data available for labeling to actually find the data that’s most important.

Unstructured data is inherently difficult to manage and search efficiently. Teams building ML products often need to access data from multiple locations and struggle to see all their data in one place or to understand its true depth and breadth. This complexity often prevents teams from identifying and prioritizing the most impactful data for labeling, leading to inefficient resource allocation and unnecessary labeling.

(Read more: You’re probably doing data discovery wrong)

To help with data discovery, teams must be able to:

Consolidate distributed unstructured data

ML teams often have to pull together data from across their broader organization to build their ML products. This is a time-intensive process and requires dedicated engineering support as the scope and complexity of projects increase. With data in one place, teams can more quickly understand what’s available and make more impactful decisions.

Search for data without code

Finding the right data across a large organization often requires complex database join operations just to break down silos. This slows down iteration and limits data discovery to the more technical members of the team. With a simple search UI, teams can find data faster without needing to know how to code.

Uncover high-value unstructured data

Once you’ve identified an edge case, you need to find more examples of it to label and train your model, but edge cases are rare by definition. Model embeddings can enable searching for data based on visual similarity rather than a specific attribute or piece of metadata. This way, teams can break down data silos, find data visually similar to edge cases, and send it to a project for labeling.
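Similarity search of this kind usually comes down to comparing a query embedding (here, one known edge case) against every candidate embedding and keeping the closest matches. The brute-force cosine-similarity sketch below illustrates the idea; the asset IDs and 3-dimensional vectors are hypothetical, and a large catalog would normally use an approximate nearest-neighbor index instead of a linear scan.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def find_similar(query, catalog, top_k=2):
    """Return the top_k (asset_id, similarity) pairs most similar to query."""
    scored = [(asset_id, cosine_similarity(query, vec)) for asset_id, vec in catalog.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]

# Hypothetical embeddings for unlabeled assets pulled from several sources.
catalog = {
    "s3/img_17": [0.9, 0.1, 0.0],
    "gcs/img_42": [0.1, 0.9, 0.1],
    "s3/img_88": [0.8, 0.2, 0.1],
    "local/img_05": [0.0, 0.2, 0.9],
}
edge_case = [1.0, 0.1, 0.0]  # embedding of a known edge case, e.g. a unicycle

for asset_id, sim in find_similar(edge_case, catalog):
    print(f"{asset_id}: {sim:.3f}")
```

The matched asset IDs are the candidates you would then send to a labeling project.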

Labeling functions use embeddings to automatically label similar data to improve discoverability.
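One plausible form of such an embedding-based labeling function, sketched under stated assumptions rather than as Labelbox’s actual implementation, assigns a human-labeled seed’s class to every unlabeled item whose cosine similarity to that seed clears a threshold, and leaves everything else for human review. The 0.95 threshold, the seed vectors, and the item IDs are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def similarity_labeling_function(seeds, unlabeled, threshold=0.95):
    """Propagate seed labels to sufficiently similar unlabeled items.

    seeds: list of (label, embedding) pairs for human-labeled examples.
    unlabeled: dict of item_id -> embedding.
    Returns (auto_labels, needs_review): items whose best seed similarity
    clears the threshold get that seed's label; the rest go to humans.
    """
    auto_labels, needs_review = {}, []
    for item_id, vec in unlabeled.items():
        best_label, best_sim = max(
            ((label, cosine_similarity(vec, seed_vec)) for label, seed_vec in seeds),
            key=lambda pair: pair[1],
        )
        if best_sim >= threshold:
            auto_labels[item_id] = best_label
        else:
            needs_review.append(item_id)
    return auto_labels, needs_review

seeds = [("unicycle", [1.0, 0.0, 0.1]), ("bicycle", [0.0, 1.0, 0.1])]
unlabeled = {
    "img_101": [0.95, 0.05, 0.1],  # close to the unicycle seed
    "img_102": [0.1, 0.9, 0.2],    # close to the bicycle seed
    "img_103": [0.6, 0.6, 0.5],    # ambiguous: route to a human
}
auto_labels, needs_review = similarity_labeling_function(seeds, unlabeled)
print(auto_labels)
print(needs_review)
```

A higher threshold trades coverage for precision: fewer items are labeled automatically, but those that are labeled are more likely to be correct.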

These best practices will help you better understand what kind of unstructured data is available and help you find high-value data examples to train your model to perform well against edge cases.

Active learning on Labelbox

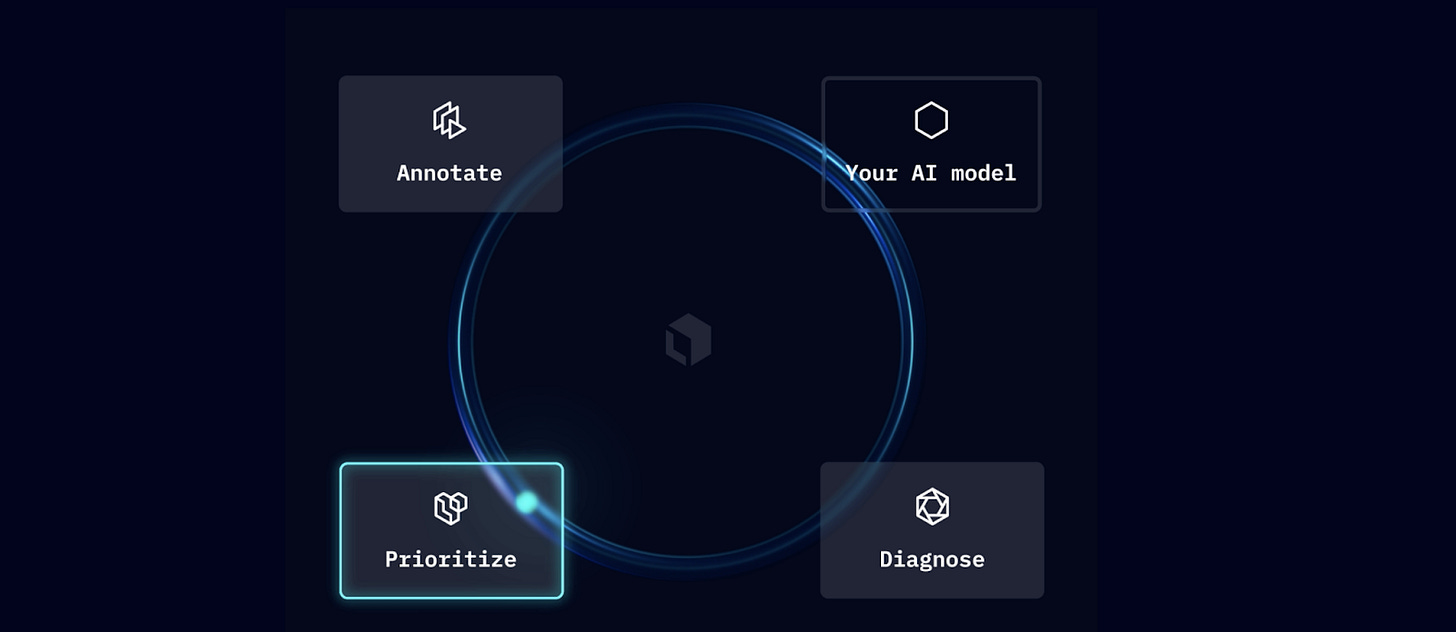

By putting these active learning principles into practice, teams can create a more efficient data engine designed to diagnose model performance and prioritize high-value data for labeling. Labelbox is built around three pillars that help drive your iteration loop: the ability to quickly annotate data, diagnose model performance, and prioritize data based on your results. All the tools you need for an active learning workflow are here in one place:

Annotate: Model-assisted labeling saves you time by pre-labeling data with your own model

Diagnose: Model Diagnostics identifies edge cases and areas of lower performance

Prioritize: Catalog helps you find and prioritize the most impactful data for labeling
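The annotate, diagnose, prioritize loop can be simulated end to end on toy data. The sketch below is a generic pool-based active learning loop, not the Labelbox SDK: a hypothetical 1D threshold classifier is retrained each round, an `oracle` function stands in for human annotators, and each round the items closest to the decision boundary (the least certain predictions) are sent for labeling.

```python
def train_threshold(labeled):
    """'Train' a 1D classifier: put the boundary midway between the largest
    example labeled 0 and the smallest example labeled 1."""
    max_zero = max(x for x, y in labeled if y == 0)
    min_one = min(x for x, y in labeled if y == 1)
    return (max_zero + min_one) / 2

def oracle(x):
    """Stand-in for human annotators; the hidden true boundary is 0.6."""
    return int(x >= 0.6)

def accuracy(threshold, data):
    """Fraction of items whose predicted class matches the oracle's label."""
    return sum(int(x >= threshold) == oracle(x) for x in data) / len(data)

pool = [i / 500 for i in range(500)]                    # hypothetical unlabeled pool
labeled = [(0.05, oracle(0.05)), (0.95, oracle(0.95))]  # one seed per class
unlabeled = list(pool)
BATCH_SIZE = 10

for round_num in range(5):
    threshold = train_threshold(labeled)                 # train on current labels
    print(f"round {round_num}: threshold={threshold:.3f}, "
          f"accuracy={accuracy(threshold, pool):.3f}")   # diagnose
    # Prioritize: items nearest the boundary are the least certain predictions.
    unlabeled.sort(key=lambda x: abs(x - threshold))
    batch, unlabeled = unlabeled[:BATCH_SIZE], unlabeled[BATCH_SIZE:]
    labeled.extend((x, oracle(x)) for x in batch)        # annotate

final_threshold = train_threshold(labeled)
print(f"final: threshold={final_threshold:.3f}, accuracy={accuracy(final_threshold, pool):.3f}")
```

Accuracy need not improve every round, because a batch drawn from one side of the boundary can overshoot, but after a few dozen targeted labels the boundary settles close to the true value, which a random sample of the same size would pin down less precisely.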

The Labelbox SDK powers the full active learning workflow, and you can get started quickly with end-to-end runnable examples of common tasks. Manage data and ontologies, configure projects programmatically, and set up your pipeline for model-assisted labeling and model diagnostics, all through the SDK. Labelbox is designed to be the center of your data engine, enabling a workflow of continuous iteration and improvement of your model.

Learn more about active learning with Labelbox.

P.S. We’re hiring!

Labelbox: Enabling the optimal iteration loop.