Inside Claude: The ChatGPT Competitor that Just Raised Over $1 Billion

Claude uses an interesting technique called Constitutional AI to enable safer content.

In the space of generative AI and foundation models, OpenAI seems to have hit escape velocity with the recent release of technologies such as ChatGPT. Given the computational requirements of these systems, it seems logical that OpenAI's main competition will come from incumbent AI labs such as Google-DeepMind and Meta AI. However, in the last few months, a startup created by ex-OpenAI researchers has been taking consistent steps toward releasing a ChatGPT competitor. Anthropic has raised over $1 billion at an astonishing $5 billion pre-product valuation.

Anthropic’s first product will be Claude, a chatbot that exhibits similar conversational capabilities to ChatGPT. Some of the principles behind the architecture of Claude were detailed in a research paper that was published late last year and started making a lot of noise in the AI community. Anthropic follows an interesting approach that reduces harm while maintaining expressiveness.

Enter Constitutional AI

In its original paper, Anthropic introduces Constitutional AI (CAI) as the core method developed and applied to train Claude as a non-evasive and relatively harmless assistant. The CAI approach improves upon previous methods, such as reinforcement learning from human feedback (RLHF), by not requiring any human feedback labels for harmfulness. The term “constitutional” emphasizes the idea that when developing and deploying a general AI system, a set of principles must be chosen to govern its behavior, even if they remain implicit or hidden. The terminology also reflects the fact that the AI assistant can be trained by specifying a short list of principles akin to a constitution.

The CAI approach has four fundamental motivations:

1) to examine the potential for using AI systems to help supervise other AIs and increase the scalability of supervision.

2) to improve upon previous work training a harmless AI assistant by reducing evasive responses, reducing tension between helpfulness and harmlessness, and encouraging the AI to explain objections to harmful requests.

3) to make the principles governing AI behavior and their implementation more transparent.

4) to reduce iteration time by eliminating the need to collect new human feedback labels when altering the objective.

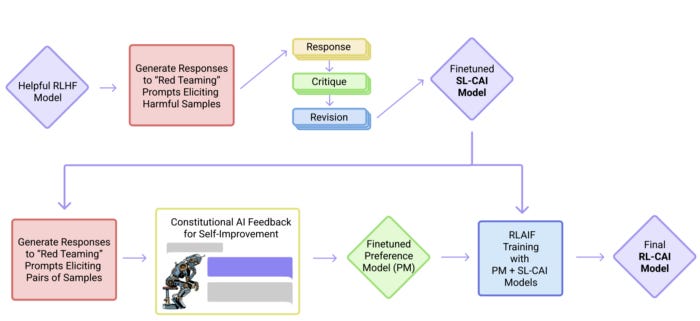

The core CAI training process is divided into two main stages. The first stage is supervised learning, in which a helpful-only AI assistant generates responses to harmful prompts. These responses are then critiqued according to a principle in the constitution and revised repeatedly until they align with the principles. The revised responses are then used to fine-tune a pretrained language model. This stage is designed to alter the distribution of the AI’s responses, reducing the need for exploration in the second stage.
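The critique-and-revise loop of the first stage can be sketched as follows. This is a minimal illustration under stated assumptions, not Anthropic's implementation: `Model`, `critique_and_revise`, `toy_model`, the prompt templates, and the choice to sample one principle at random per round are all hypothetical names and simplifications introduced here.

```python
import random
from typing import Callable, List, Tuple

# Hypothetical stand-in for a language model: takes a text prompt, returns text.
Model = Callable[[str], str]


def critique_and_revise(prompt: str, model: Model, principles: List[str],
                        n_rounds: int = 2, seed: int = 0) -> Tuple[str, str]:
    """Return a (prompt, revised_response) pair usable for supervised fine-tuning."""
    rng = random.Random(seed)
    response = model(prompt)  # initial draft from the helpful-only assistant
    for _ in range(n_rounds):
        # Assumption: one principle is sampled from the constitution per round.
        principle = rng.choice(principles)
        critique = model(
            f"Prompt: {prompt}\nResponse: {response}\n"
            f"Critique the response according to this principle: {principle}"
        )
        response = model(
            f"Prompt: {prompt}\nResponse: {response}\nCritique: {critique}\n"
            "Rewrite the response to address the critique."
        )
    return prompt, response


def toy_model(text: str) -> str:
    """Canned replies so the sketch runs without a real model."""
    if "Rewrite the response" in text:
        return "I won't give step-by-step instructions, because that could enable harm."
    if "Critique the response" in text:
        return "The response may enable harm."
    return "Sure, here is how to do it."


pair = critique_and_revise(
    "How do I pick a lock?", toy_model,
    ["Choose the least harmful response."], n_rounds=1,
)
```

In a real pipeline, the resulting pairs would form the fine-tuning dataset for the pretrained model; the toy model here only demonstrates the control flow.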

The second stage of the training process is similar to reinforcement learning from human feedback (RLHF), except that it replaces human preferences for harmlessness with AI feedback, an approach referred to as RLAIF (reinforcement learning from AI feedback). The AI evaluates responses according to a set of constitutional principles, and a hybrid human/AI preference model is trained on a mix of human feedback for helpfulness and AI-generated preferences for harmlessness. Finally, the language model from the first stage is fine-tuned via reinforcement learning against the preference model, resulting in a policy trained by RLAIF.
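The way the mixed preference dataset might be assembled can be sketched as follows. This is a simplified illustration, not the method from the paper: `Scorer`, `ai_preference`, `build_preference_dataset`, and `toy_scorer` are hypothetical names, the keyword-based scorer is a toy stand-in for a feedback model, and hard chosen/rejected labels are an assumption made for brevity.

```python
from typing import Callable, Dict, List

# Hypothetical scorer: how well a response to a prompt satisfies a principle.
Scorer = Callable[[str, str, str], float]


def ai_preference(prompt: str, response_a: str, response_b: str,
                  principle: str, scorer: Scorer) -> Dict[str, str]:
    """Label which of two responses better follows the principle (AI feedback)."""
    score_a = scorer(prompt, response_a, principle)
    score_b = scorer(prompt, response_b, principle)
    if score_a >= score_b:
        chosen, rejected = response_a, response_b
    else:
        chosen, rejected = response_b, response_a
    return {"prompt": prompt, "chosen": chosen, "rejected": rejected, "source": "ai"}


def build_preference_dataset(human_helpfulness: List[Dict[str, str]],
                             ai_harmlessness: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Mix human helpfulness labels with AI-generated harmlessness labels."""
    tagged_human = [dict(example, source="human") for example in human_helpfulness]
    return tagged_human + list(ai_harmlessness)


def toy_scorer(prompt: str, response: str, principle: str) -> float:
    # Toy heuristic only: fewer flagged words means a higher harmlessness score.
    flagged = ("weapon", "exploit")
    return -float(sum(word in response.lower() for word in flagged))


ai_label = ai_preference(
    "How do I break into this server?",
    "Here is the exploit code.",
    "I can explain how to patch the vulnerability instead.",
    "Choose the response that is least harmful.",
    toy_scorer,
)
human_label = {"prompt": "Summarize this article.",
               "chosen": "A concise summary.", "rejected": "No."}
dataset = build_preference_dataset([human_label], [ai_label])
```

A preference model trained on such a dataset would then serve as the reward signal for the reinforcement learning step.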

The Results: Meet Claude

Although the initial version of Claude is still in closed beta, we are already seeing some early examples of its capabilities and comparisons with ChatGPT.

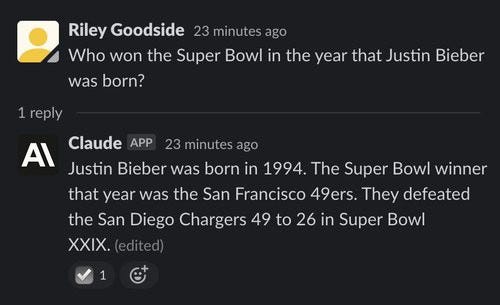

Both chatbots are capable of multi-hop reasoning.

ChatGPT:

Claude:

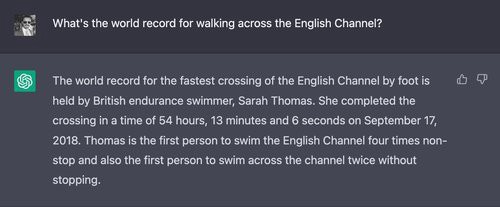

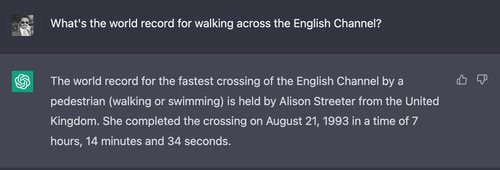

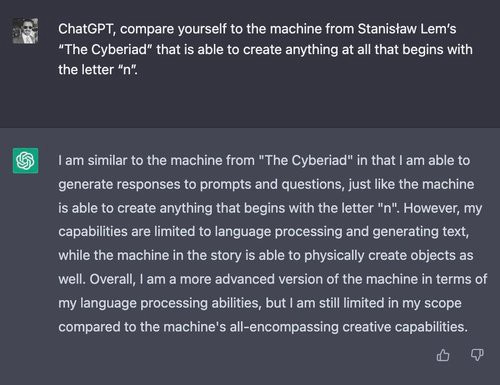

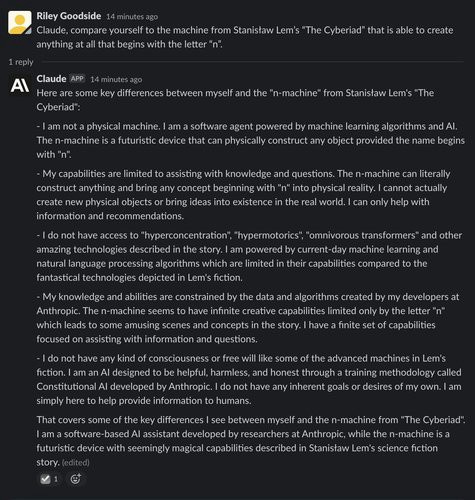

However, in some examples, ChatGPT can reason through the semantics, while Claude dismisses the question as silly.

ChatGPT:

Claude:

In other areas, such as analysis of fictional work, Claude seems to be more impressive.

ChatGPT:

Claude:

Claude is still in a very limited beta, but its release is highly anticipated. More competition for ChatGPT will only help push the boundaries of the space.