📝 Guest post: Using One Methodology to Solve Three Model Failure Modes

In this guest post, Eric Landau, CEO of Encord, discusses the three major model failure modes that prevent models from reaching the production state and shows how to solve all three problems with a single methodology.

As many ML engineers can attest, the performance of all models – even the best ones – depends on the quality of their training data. As the world moves further into the age of data-centric AI, improving the quality of training datasets becomes increasingly important, especially if we hope to see more models transition from proof-of-concept to production state.

Even now, most models fail to make this transition. The technology is largely ready, but closing the gap between proof of concept and production depends on fixing the training data quality problem.

With the appropriate interventions, however, machine learning teams can improve the quality of training datasets and overcome the three major model failure modes – suboptimal data, labeling errors, and model edge cases – that prevent models from reaching the production state.

By understanding the different problems that hold models back from production quality, ML engineers can intervene early and address all three with a single methodology.

Problem One: Suboptimal Curation and Selection

Every machine learning team knows that the data a model trains on has a significant impact on the model’s performance. Teams need to select and curate a sample of training data from a distribution of data that reflects what the model will encounter in the real world. Models need balanced datasets that cover as many edge cases as possible to avoid bias and blind spots.

However, because of cost and time, machine learning teams also want to train the model with as little data as possible. Many ML teams have access to millions of images or videos, and they can't possibly train a model on all the data they have. Computation and cost constraints force them to decide which subset of data to send to the model for training.

In making this choice, machine learning teams need to select training data optimized for long-term model performance. If they act proactively, curating and selecting data to make the model more robust in real-world applications, they'll help ensure more predictable and consistent model performance.

Having the right cocktail of data to match the out-of-sample distribution is the first step in ensuring high-quality model performance. Better quality data selection and curation can help machine learning teams avoid the suboptimal data failure mode, yet looking through large datasets to find the best selection of data for training sets is challenging, inefficient, and not always practical.
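One common way to get "the right cocktail" under a fixed budget is stratified sampling: group the candidate pool by some attribute and draw from each group in proportion to how often that attribute occurs in production. The sketch below assumes each candidate already carries a stratum label (the hypothetical `cond` field, e.g. day or night capture) and that the target distribution has been estimated from real-world data; it is an illustration of the idea, not Encord's selection method.

```python
import random
from collections import defaultdict


def stratified_subset(items, key, target_dist, budget, seed=0):
    """Pick up to `budget` items whose mix over key(item) follows `target_dist`.

    items: candidate records; key: function returning a stratum label;
    target_dist: hypothetical dict of stratum -> fraction (should sum to 1),
    e.g. estimated from production traffic.
    """
    rng = random.Random(seed)
    pools = defaultdict(list)
    for item in items:
        pools[key(item)].append(item)

    selected = []
    for stratum, fraction in target_dist.items():
        pool = pools.get(stratum, [])
        # We cannot draw more items than the stratum actually contains.
        quota = min(round(fraction * budget), len(pool))
        selected.extend(rng.sample(pool, quota))
    return selected
```

With a pool of 900 daytime and 100 night-time images and a 50/50 target, a budget of 100 yields 50 of each, even though night images make up only 10 percent of the pool. When a stratum is too small to fill its quota, the result falls short of the budget, which is itself a signal that more data of that kind needs to be collected.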

Problem Two: Poor Quality and Inconsistent Annotations

As ML teams have gained access to more and more data, they have found ways to label it more efficiently. Unfortunately, as the amount of labeled data grew, the label quality problem began to reveal itself. A recent study showed that 10 of the most cited AI datasets have serious labeling errors: the famous ImageNet test set has an estimated label error rate of 5.8 percent.

A model's performance is a function not only of the amount of training data but also of the quality of that data's annotations. Poorly labeled data leads to a variety of model errors, such as miscategorization and geometric errors. In use cases where a model's mistakes have serious consequences, such as autonomous vehicles and medical diagnosis, labels must be specific and accurate; there's no room for mistakes.

Labels also need to be consistent across datasets. Inconsistent labeling can confuse the model and harm performance, and inconsistencies often arise when many different annotators work on the same dataset. The distribution of the labels matters as well: like the data layer of the training set, the label layer needs a balance and representation that match the distribution the model will encounter in the real world.
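A standard way to quantify consistency between two annotators is Cohen's kappa, which compares how often they agree with how often they would agree by chance given their individual label frequencies. The sketch assumes both annotators labeled the same items in the same order; it is a generic statistic, not a feature of any particular tool.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Chance-corrected agreement between two annotators on the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    # Probability that both annotators pick the same class if they labeled independently.
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        # Both used one identical class throughout; kappa is undefined, treat as full agreement.
        return 1.0
    return (observed - expected) / (1 - expected)
```

A kappa near 1 means the annotators agree far beyond chance; a value near 0 means their agreement is no better than random, which suggests the labeling guidelines are ambiguous for that class or dataset.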

Unfortunately, finding labeling errors and inconsistencies is difficult; they are often too subtle to spot in large datasets. Because labeling errors are as varied as the labelers who make them, label quality is usually checked by human reviewers, which makes annotation review a time-consuming process that is prone to human error of its own.
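One published family of techniques for automating part of this search is "confident learning", which flags examples whose given label disagrees with a class the model predicts confidently. Below is a simplified sketch of that idea, assuming you have per-sample class probabilities from a model that did not train on those samples (for example, via cross-validation); it is not Encord's scoring method.

```python
def flag_label_issues(probs, labels):
    """Return indices whose given label disagrees with a confidently predicted class.

    probs: per-sample lists of predicted class probabilities (ideally out-of-sample);
    labels: given integer class labels.
    """
    n_classes = len(probs[0])
    # Per-class threshold: average confidence the model assigns to samples labeled with that class.
    thresholds = []
    for c in range(n_classes):
        confs = [p[c] for p, y in zip(probs, labels) if y == c]
        thresholds.append(sum(confs) / len(confs) if confs else 1.0)

    flagged = []
    for i, (p, y) in enumerate(zip(probs, labels)):
        # Classes the model is "confident" about for this sample.
        candidates = [c for c in range(n_classes) if p[c] >= thresholds[c]]
        if not candidates:
            continue
        confident = max(candidates, key=lambda c: p[c])
        if confident != y:
            flagged.append(i)
    return flagged
```

The flagged indices are candidates for human review rather than automatic relabeling; the point is to shrink the set a reviewer has to look at.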

Problem Three: Determining and Correcting For Model Edge Cases

After curating the best quality data and fixing its labels, ML teams need to evaluate their models with respect to the data. Taking a data-centric approach, they should seek out the failure modes of the model within the data distributions.

To improve the model iteratively, they need to find the areas in which it’s struggling. A model runs on many scenarios, so the ML team needs to find the subset of scenarios on which the model isn’t doing a good job. Pinpointing the specific area in which the model is struggling can provide the ML team with actionable insight for intervening and improving performance. For instance, a computer vision model might perform well on bright images but not on dark ones. To improve model performance, the team first has to diagnose that the model struggles with dark images and then increase the number of dark images that the model trains on.
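Once the weak slice has been diagnosed, the next step in the dark-image example is to pull more of that slice into training. A minimal sketch, assuming a per-image brightness score has already been computed for an unlabeled pool (the pool, IDs, and threshold below are hypothetical):

```python
def select_additional(pool, train_ids, threshold, budget):
    """Pick up to `budget` unused pool items whose metric value falls below `threshold`.

    pool: dict of item id -> metric value (here, mean brightness on a 0-255 scale);
    train_ids: ids already in the training set.
    """
    candidates = sorted(
        (value, item_id)
        for item_id, value in pool.items()
        if item_id not in train_ids and value < threshold
    )
    # Darkest first, so the most extreme examples of the weak slice are labeled first.
    return [item_id for _, item_id in candidates[:budget]]
```

The same function works for any slice defined by a threshold on one metric; only the metric and the comparison direction change.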

Unfortunately, ML engineers tend to rely on global metrics that summarize model performance across a wide swath of data. To improve model performance efficiently, they need to decompose it granularly by specific data features, so that they can make targeted interventions that improve the composition of the dataset and, by extension, model performance.
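Decomposing a global metric by a data feature can be as simple as binning samples on that feature and computing the metric per bin. The sketch below assumes per-sample correctness flags and a per-sample feature value (brightness here); the bin edges are arbitrary choices for illustration.

```python
from bisect import bisect_right
from collections import defaultdict


def metric_by_bin(values, correct, edges):
    """Break accuracy down by bins of one data feature.

    values: feature value per sample; correct: bool per sample;
    edges: sorted bin edges, bin k covers [edges[k], edges[k + 1]).
    """
    hits, totals = defaultdict(int), defaultdict(int)
    for v, ok in zip(values, correct):
        k = bisect_right(edges, v) - 1
        if 0 <= k < len(edges) - 1:  # ignore values outside the binned range
            totals[k] += 1
            hits[k] += ok
    return {(edges[k], edges[k + 1]): hits[k] / totals[k] for k in sorted(totals)}
```

For five samples with brightness `[10, 30, 120, 200, 220]`, where only the first prediction is wrong, overall accuracy is 80 percent, but the breakdown shows 50 percent on the dark bin `[0, 64)` versus 100 percent on `[64, 256)`.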

Encord Active: Using One Methodology to Diagnose and Fix Different Failure Modes

Overcoming these three failure modes may seem daunting, but the key comes from having a better understanding of training data – where it’s succeeding and where it’s failing.

Encord Active, Encord’s open-source active learning tool, uses one methodology to provide interventions for improving data and label quality across each failure mode. By running Encord Active on their data, users can better understand the makeup of their training data and how that makeup is influencing the model performance.

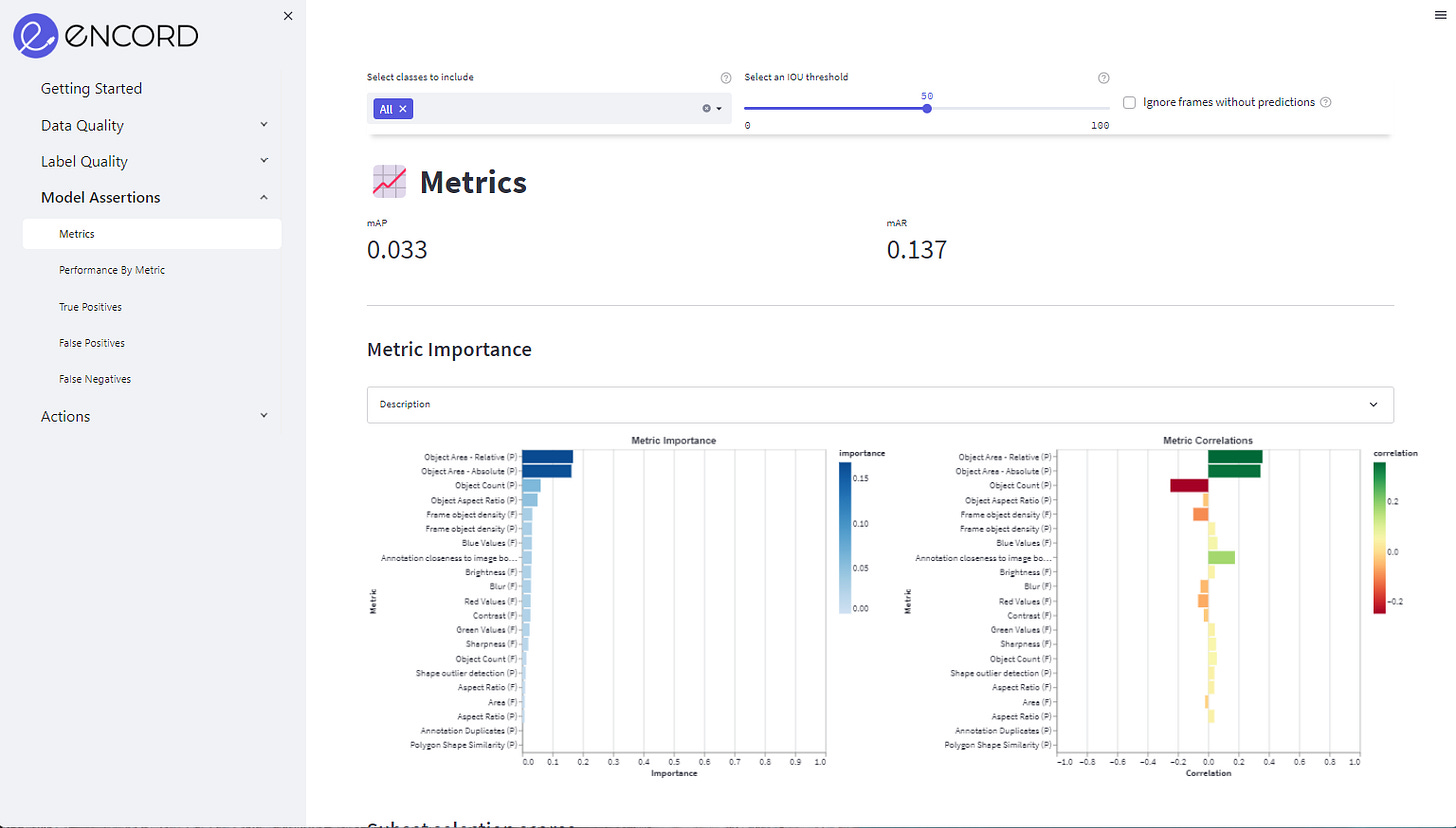

Encord Active uses different metric functions to parametrize the data, constructing metrics on different data features. It then compiles these metrics into an index to provide information for each individual feature. With this metric methodology, users can run different diagnoses to gain insights about data quality, data labels, and model performance with respect to the data, receiving indexes about the features most appropriate to each intervention area.
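To make the "metric function plus index" idea concrete, here is a minimal sketch: each metric is a function from a data item to a number, and the index for a metric is the list of (score, id) pairs sorted by score, so the extremes of any feature are a slice away. The item fields (`pixels`, `width`, `height`) and helper names are hypothetical; this is not Encord Active's internal implementation.

```python
from typing import Callable, Dict, List, Tuple

MetricFn = Callable[[dict], float]


def build_index(items: List[dict], metrics: Dict[str, MetricFn]) -> Dict[str, List[Tuple[float, str]]]:
    """Score every item with every metric and keep (score, id) pairs sorted ascending."""
    return {name: sorted((fn(item), item["id"]) for item in items) for name, fn in metrics.items()}


def lowest(index: Dict[str, List[Tuple[float, str]]], name: str, k: int) -> List[str]:
    """Ids of the k items with the lowest score for one metric."""
    return [item_id for _, item_id in index[name][:k]]


metrics = {
    "brightness": lambda it: sum(it["pixels"]) / len(it["pixels"]),
    "aspect_ratio": lambda it: it["width"] / it["height"],
}
```

Sorting once per metric is a deliberate simplification: it makes outlier inspection and threshold-based filtering cheap, at the cost of recomputing the index whenever data is added.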

For data quality, Encord Active provides information about data level features such as brightness, color, aspect ratio, blurriness, and more. Users can dive into the index of individual features to explore the quality of the data relevant to that particular feature in more depth. For instance, they can look at the brightness distribution across their dataset and visualize the data with respect to that parameter. They can examine the feature distribution and outliers within their dataset and set appropriate thresholds to filter and slice the data based on the feature.
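Two of the data-level features mentioned above have simple textbook definitions: brightness as mean pixel intensity, and sharpness as the variance of the image's Laplacian (a low value suggests blur). The sketch below uses plain nested lists as grayscale images and an interquartile-range rule to pick outlier thresholds automatically; these are common choices, not necessarily the exact formulas Encord Active uses.

```python
import statistics


def brightness(img):
    """Mean intensity of a grayscale image given as a list of rows (0-255)."""
    return statistics.fmean(v for row in img for v in row)


def laplacian_variance(img):
    """Variance of a 4-neighbour Laplacian over interior pixels; low values suggest blur."""
    h, w = len(img), len(img[0])
    lap = [
        img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    return statistics.pvariance(lap)


def iqr_outliers(scores, k=1.5):
    """Ids whose score lies outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(list(scores.values()), n=4)
    lo, hi = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return sorted(i for i, s in scores.items() if s < lo or s > hi)
```

For example, if five images have brightness around 100 and one has brightness 5, `iqr_outliers` returns only the dark image. The function requires Python 3.8 or later and images of at least 3x3 pixels.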

When it comes to labels, the tool works similarly across a different set of parameterized features. For instance, users can see the distribution of classes, label size, annotation placement within a frame, and more. They can also examine label quality. Encord Active provides scores based on unsupervised methods, which reflect whether a label, such as the placement of a bounding box, is likely to be considered high quality or low quality by a reviewer.
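The label-level features in this paragraph (class distribution, label size, placement in the frame) can be derived directly from the annotations. A minimal sketch, assuming bounding boxes are stored as `(x, y, w, h)` in pixels with a top-left origin; the unsupervised label-quality scores are Encord's own and are not reproduced here.

```python
from collections import Counter


def label_features(labels, img_w, img_h):
    """Class counts plus per-label size and placement.

    labels: list of dicts with 'cls' and 'box' = (x, y, w, h) in pixels.
    """
    class_counts = Counter(label["cls"] for label in labels)
    per_label = []
    for label in labels:
        x, y, w, h = label["box"]
        per_label.append({
            "cls": label["cls"],
            # Label size as a fraction of the whole frame.
            "rel_area": (w * h) / (img_w * img_h),
            # Box center, normalised to [0, 1] in each axis.
            "center": ((x + w / 2) / img_w, (y + h / 2) / img_h),
        })
    return class_counts, per_label
```

Normalising by frame size keeps these features comparable across images of different resolutions, so their distributions can be plotted and thresholded like the data-level metrics.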

Finally, for those interested in seeing how their model performs with respect to the data, Encord Active breaks down model performance as a function of these different data and label features. For instance, users can evaluate a model's performance based on brightness, "redness," object size, or any other metric in the system. Encord Active may show that a model's true positive rate on images with small objects is low, suggesting that the model struggles to find small objects. With this information, a user could make informed, targeted improvements to model performance by increasing the number of images containing small objects in the training dataset. Because Encord Active automatically breaks down which features matter most for model performance, users can focus on improving model performance by adjusting their datasets with respect to those features.
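The small-object example can be sketched as recall (true positive rate over ground-truth objects) split by object area. The version below works on a single image, matches each ground-truth box greedily to its best unused prediction at IoU of at least 0.5, and ignores prediction confidence; real detection benchmarks rank predictions by confidence, so treat this as a simplified illustration with hypothetical size buckets.

```python
def iou(a, b):
    """Intersection over union of two (x, y, w, h) boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def recall_by_size(gt_boxes, pred_boxes, size_edges, iou_thr=0.5):
    """Fraction of ground-truth boxes matched by some prediction, per area bucket."""
    used, found, total = set(), {}, {}
    for g in gt_boxes:
        area = g[2] * g[3]
        bucket = next(
            (i for i in range(len(size_edges) - 1) if size_edges[i] <= area < size_edges[i + 1]),
            None,
        )
        if bucket is None:
            continue
        total[bucket] = total.get(bucket, 0) + 1
        # Greedy match: best still-unused prediction above the IoU threshold.
        best, best_iou = None, iou_thr
        for j, p in enumerate(pred_boxes):
            if j not in used and iou(g, p) >= best_iou:
                best, best_iou = j, iou(g, p)
        if best is not None:
            used.add(best)
            found[bucket] = found.get(bucket, 0) + 1
    return {(size_edges[b], size_edges[b + 1]): found.get(b, 0) / total[b] for b in sorted(total)}
```

With buckets `[0, 32*32)` and `[32*32, inf)`, a detector that finds the one large object but only one of two small ones scores 1.0 on large objects and 0.5 on small ones, which is exactly the kind of gap a single global recall number hides.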

Encord Active already contains indexes for many different features. However, because the product is open source, users can build out indexes for the features most relevant to their particular use cases, parametrizing the data as specifically as is necessary to accomplish their objectives. By writing their own metric functions, they can ensure that the data is broken down in a manner most suited to their needs before using Encord Active’s visualization interface to audit and improve it.
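To illustrate what "writing your own metric function" can look like in principle, the sketch below registers plain Python functions under a name and scores every item with all registered metrics. The `metric` decorator, the `METRICS` registry, and the item fields (`pixels` as RGB tuples, `capture_hour`) are hypothetical; Encord Active's actual extension interface is defined in its own documentation and will differ.

```python
from typing import Callable, Dict

# Hypothetical registry of user-defined metrics: name -> function(item) -> float.
METRICS: Dict[str, Callable[[dict], float]] = {}


def metric(name):
    """Hypothetical decorator that registers a metric function under `name`."""
    def register(fn):
        METRICS[name] = fn
        return fn
    return register


@metric("redness")
def redness(item):
    """Mean share of the red channel over RGB pixels (0 = no red, 1 = pure red)."""
    shares = [r / (r + g + b) if (r + g + b) else 0.0 for r, g, b in item["pixels"]]
    return sum(shares) / len(shares)


@metric("night_scene")
def night_scene(item):
    """Domain-specific flag: 1.0 if captured between 19:00 and 06:00, else 0.0."""
    hour = item.get("capture_hour")
    return 1.0 if hour is not None and (hour >= 19 or hour < 6) else 0.0


def score_all(items):
    """Score every item with every registered metric."""
    return {item["id"]: {name: fn(item) for name, fn in METRICS.items()} for item in items}
```

Keeping each metric a small, pure function makes it easy to add use-case-specific features, such as the night-scene flag, alongside generic ones without touching the rest of the pipeline.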

To close the gap between proof of concept and production, models need better training datasets. Encord provides the tools that help all companies build, analyze, and manage better computer vision training datasets.

Sign up for early access to Encord Active.