📝 Guest Post: RAG Evaluation Using Ragas

In this guest post, the teams from Zilliz and Ragas discuss key RAG evaluation metrics, their calculation, and implementation using the Milvus vector database and the Ragas package. Let’s dive in!

Retrieval, a cornerstone of Generative AI systems, is still challenging. Retrieval Augmented Generation, or RAG for short, is an approach to building AI-powered chatbots that answer questions based on data the AI model, an LLM, has not been trained on.

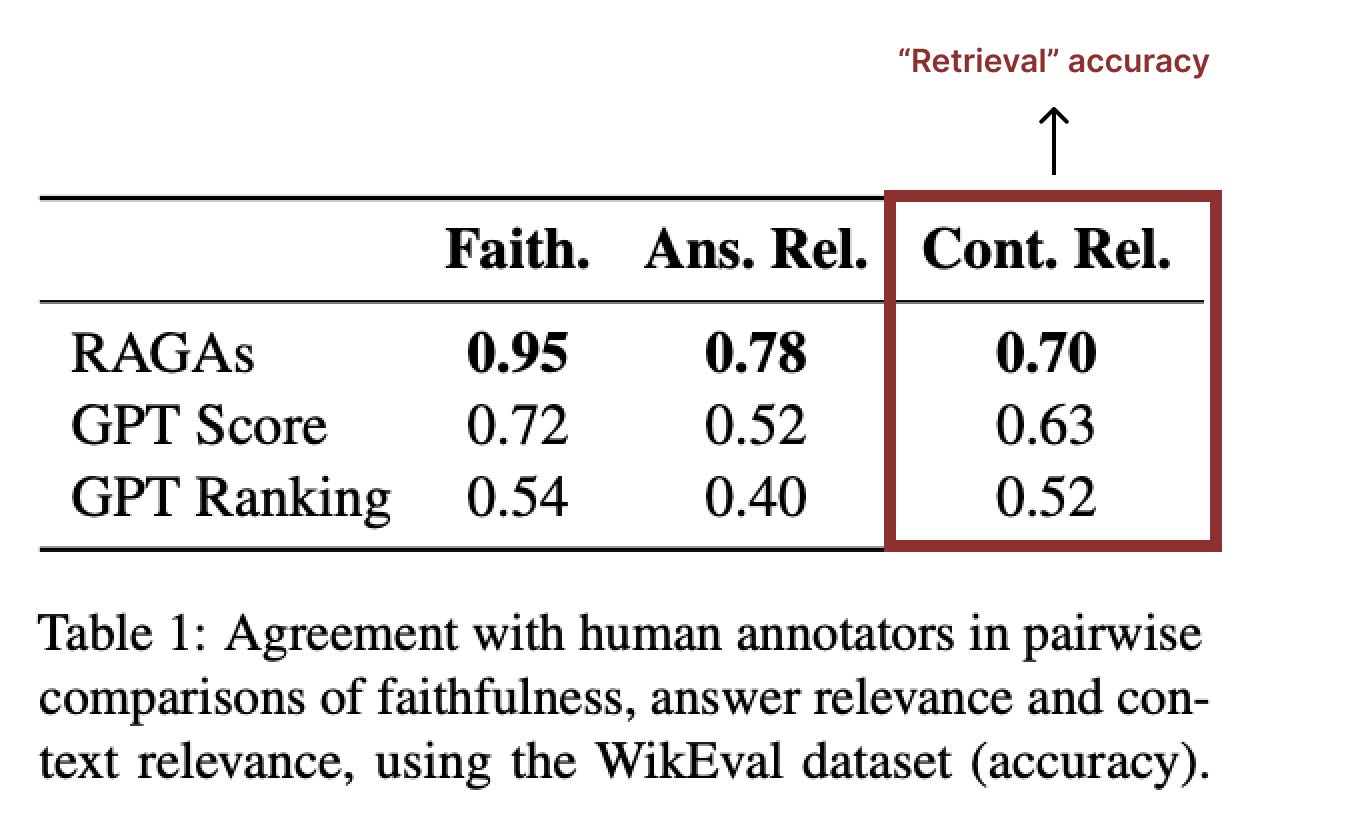

Evaluation data from sources like WikiEval show very low natural language retrieval accuracy. This means you will probably need to conduct experiments to tune RAG parameters for your GenAI system before deploying it. However, before you can do RAG experimentation, you need a way to evaluate which experiments had the best results!

RAG Evaluation

Using Large Language Models (LLMs) as judges has gained prominence in modern RAG evaluation. This approach involves using powerful language models, like OpenAI’s GPT-4, to assess the quality of components in RAG systems. LLMs serve as judges by evaluating the relevance, precision, adherence to instructions, and overall quality of the responses produced by the RAG system.

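It might seem strange to ask one LLM to evaluate another. However, research has found that GPT-4 agrees with human labelers about 80% of the time, which is roughly as often as human labelers agree with each other. Because humans themselves do not agree much more than 80% of the time, that level acts as a practical ceiling for any judge (human-level performance is commonly used as a proxy for the best achievable performance, which machine learning calls the Bayes error rate). The "LLM-as-judge" approach automates and speeds up evaluation, scales easily, and saves the cost and time of manual human labeling.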
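To make the agreement figure concrete: in its simplest form, agreement is the fraction of items on which the judge's verdict matches the human label. The sketch below computes this raw percent agreement on invented pairwise verdicts. It is an illustration only, not the exact protocol used in the research mentioned above, and `agreement_rate` is a hypothetical helper.

```python
def agreement_rate(judge_labels, human_labels):
    """Fraction of items on which the LLM judge and the human labeler chose the same verdict."""
    if len(judge_labels) != len(human_labels):
        raise ValueError("label lists must have the same length")
    if not judge_labels:
        raise ValueError("need at least one labeled item")
    matches = sum(j == h for j, h in zip(judge_labels, human_labels))
    return matches / len(judge_labels)


# Hypothetical pairwise-preference verdicts ("A" or "B") for five items.
judge = ["A", "B", "A", "A", "B"]
human = ["A", "B", "B", "A", "B"]
print(agreement_rate(judge, human))  # 0.8
```

Four of the five verdicts match, so the agreement is 0.8, the same 80% scale as the figure quoted above.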

There are two primary flavors of LLM-as-judge for RAG evaluation:

MT-Bench uses an LLM to judge answers only on question-answer pairs that humans have verified as ground truth. Humans first vet the questions and answers to make sure the questions are complex enough to be worthwhile tests. The LLM judge then uses these 80 Q-A pairs to evaluate different decoders (generative AI components). Paper, Code, Leaderboard.

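Ragas is built on the idea that LLMs can effectively evaluate natural language output when the evaluation is broken into structured steps (for example, extracting the claims in an answer and checking each one against the retrieved context). This reduces the biases of asking an LLM for a direct judgment and yields continuous scores that are explainable and intuitive to understand. Paper, Code, Docs.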

The rest of this blog will showcase Ragas, which emphasizes automation and scalability for RAG evaluations.

Evaluation Data Needed for Ragas

According to the Ragas documentation, your RAG pipeline evaluation will need four key data points.

Question: The question asked.

Contexts: Text chunks from your data that best match the question’s meaning.

Answer: Generated answer from your RAG chatbot to the question.

Ground truth answer: Expected answer to the question.
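Concretely, each row of an evaluation set bundles these four fields. The sketch below shows a single hypothetical row using the column names that the code later in this post passes to Ragas (`question`, `contexts`, `answer`, `ground_truth`). The text values are invented for illustration, and `validate_row` is a hypothetical helper, not part of Ragas. Note that `contexts` is a list, because a retriever usually returns several chunks.

```python
# One hypothetical evaluation row; the values are illustrative only.
sample_row = {
    "question": "What does RAG stand for?",
    "contexts": [
        "Retrieval Augmented Generation, or RAG, combines a retriever with an LLM.",
    ],
    "answer": "RAG stands for Retrieval Augmented Generation.",
    "ground_truth": "Retrieval Augmented Generation.",
}

# Expected Python type for each of the four fields.
REQUIRED_FIELDS = {"question": str, "contexts": list, "answer": str, "ground_truth": str}


def validate_row(row):
    """Check that a row has the four fields with the expected types (hypothetical helper)."""
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in row:
            raise KeyError(f"missing field: {field}")
        if not isinstance(row[field], expected_type):
            raise TypeError(f"{field} should be of type {expected_type.__name__}")
    # Each retrieved context must itself be a string.
    if not all(isinstance(chunk, str) for chunk in row["contexts"]):
        raise TypeError("contexts should be a list of strings")
    return True


print(validate_row(sample_row))  # True
```

A check like this catches the common mistake of passing a single context string where a list of strings is expected.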

Ragas Evaluation Metrics

You can find explanations for each metric, including its underlying formula, in the Ragas documentation (see the faithfulness page, for example). Some metrics are:

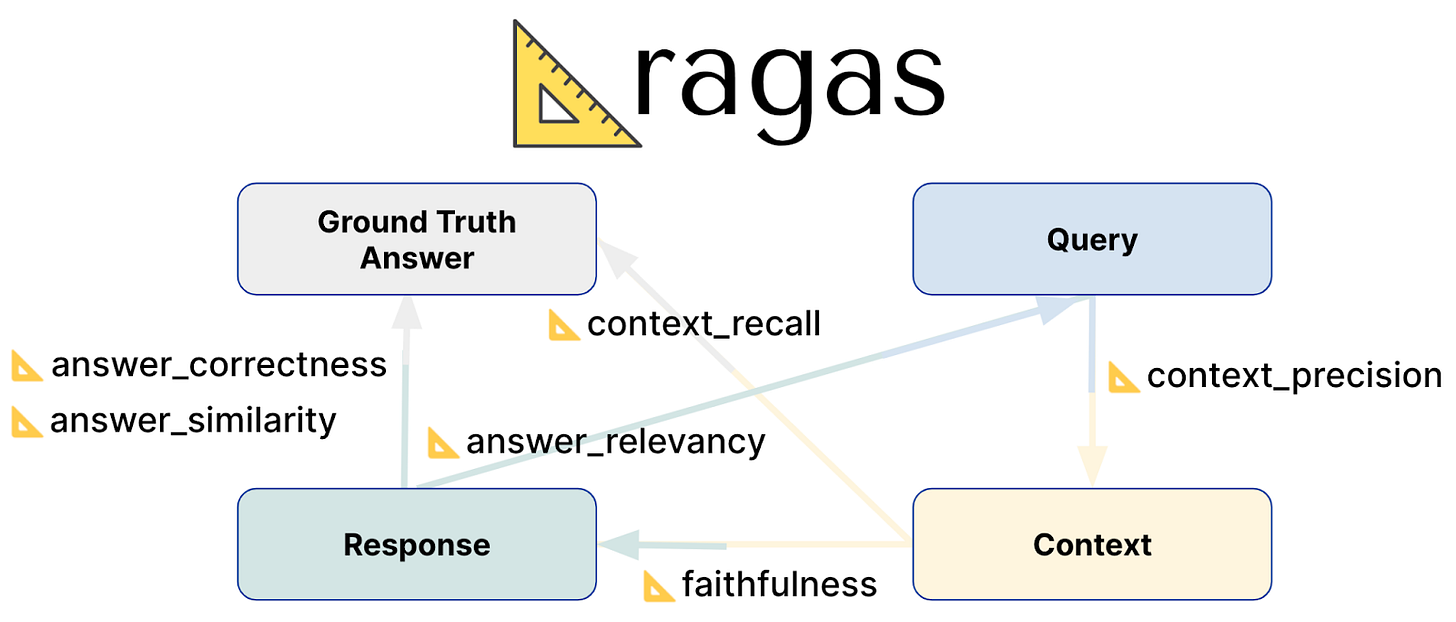

Context Precision: Uses the question and retrieved contexts to measure the signal-to-noise ratio.

Context Recall: Uses the ground truth and retrieved contexts to check if all relevant information for the answer is retrieved.

Faithfulness: Uses the contexts and the bot answer to measure if the claims in the answer can be inferred from the context.

Answer Relevancy: Uses the question and the bot answer to assess whether the answer addresses the question (does not consider factuality but penalizes incomplete or redundant answers).

Answer Correctness: Uses the ground truth answer and the bot answer to assess the correctness of the bot answer.

Details about how these metrics are calculated can be found in the Ragas paper.
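To give a feel for the arithmetic, the sketch below computes three of these scores from verdicts that, in Ragas, an LLM judge would produce. Examples include whether each claim in the answer is supported by the contexts, or whether each retrieved chunk is relevant. Here the verdicts are hard-coded booleans, so the sketch shows only the final scoring step, not how Ragas prompts the judge. It follows the ratio-style formulas described in the Ragas documentation as I understand them; exact details may differ between Ragas versions, and all function names are hypothetical.

```python
def ratio(flags):
    """Fraction of True values; undefined (ValueError) for an empty list."""
    if not flags:
        raise ValueError("need at least one verdict")
    return sum(flags) / len(flags)


def faithfulness_score(claim_supported):
    # Claims in the answer that can be inferred from the contexts / total claims in the answer.
    return ratio(claim_supported)


def context_recall_score(truth_sentence_attributed):
    # Ground-truth sentences attributable to the contexts / total ground-truth sentences.
    return ratio(truth_sentence_attributed)


def context_precision_score(chunk_relevant):
    """Average of precision@k over the ranks k where the retrieved chunk is relevant."""
    relevant_so_far = 0
    total = 0.0
    for k, is_relevant in enumerate(chunk_relevant, start=1):
        if is_relevant:
            relevant_so_far += 1
            total += relevant_so_far / k  # precision@k at a relevant position
    # Returns 0.0 when no retrieved chunk is relevant.
    return total / relevant_so_far if relevant_so_far else 0.0


print(faithfulness_score([True, True, False, True]))  # 0.75
print(context_recall_score([True, False]))            # 0.5
print(context_precision_score([True, False, True]))   # (1/1 + 2/3) / 2, about 0.833
```

Context precision rewards putting relevant chunks near the top: the same two relevant chunks ranked first and second would score 1.0 instead of about 0.833.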

RAG Evaluation Code Example

This evaluation code assumes you already have a RAG demo. For mine, I built a RAG chatbot over the Milvus technical documentation, using the Milvus vector database for retrieval. The full code for my RAG demo and evaluation notebooks is on GitHub.

Using that RAG demo, I asked it questions, retrieved the RAG contexts from Milvus, and generated bot responses with an LLM (the `Custom_RAG_context` and `Custom_RAG_answer` columns below). I also provided "ground truth" answers to the same questions (the `ground_truth_answer` column below).

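You must install openai, (HuggingFace) datasets, ragas, langchain, langchain-openai, and pandas.

```python
# !pip install openai datasets ragas langchain langchain-openai pandas

import pandas as pd
from IPython.display import display  # Built into notebooks; imported here for clarity.

# Load the questions, RAG contexts/answers, and ground-truth answers.
eval_df = pd.read_csv("data/milvus_ground_truth.csv")
display(eval_df.head())
```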

Convert the pandas dataframe to a HuggingFace Dataset.

```python
from datasets import Dataset

def assemble_ragas_dataset(input_df):
    """Convert the evaluation DataFrame into the HuggingFace Dataset format Ragas expects."""
    question_list = input_df.Question.to_list()
    truth_list = input_df.ground_truth_answer.to_list()
    # Ragas expects a list of context strings per question, so wrap each single context.
    context_list = [[context] for context in input_df.Custom_RAG_context.to_list()]
    rag_answer_list = input_df.Custom_RAG_answer.to_list()

    # Create a HuggingFace Dataset from the lists.
    ragas_ds = Dataset.from_dict({
        "question": question_list,
        "contexts": context_list,
        "answer": rag_answer_list,
        "ground_truth": truth_list,
    })
    return ragas_ds

# Create a Ragas HuggingFace Dataset from the pandas df.
ragas_input_ds = assemble_ragas_dataset(eval_df)
display(ragas_input_ds)
```

By default, Ragas uses OpenAI's `gpt-3.5-turbo-16k` as its LLM and `text-embedding-ada-002` as its embedding model. You can change both to whatever models you like.

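I'll change the LLM-as-judge model to `gpt-3.5-turbo` because OpenAI's latest pricing announcement (at the time of writing) listed it as the cheapest option. I also changed the embedding model to `text-embedding-3-small` because the same announcement noted that the new embedding models support shortening embeddings to fewer dimensions (the `dimensions` parameter in the code below).

In the code below, I use the context metrics (context precision and context recall) to focus on measuring retrieval quality, plus faithfulness to check that the answers stay grounded in the retrieved contexts.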
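For intuition about what "shortening" means: OpenAI's embeddings guide describes that a `text-embedding-3` vector can be cut down to its first few dimensions and then rescaled to unit length. The `dimensions=512` argument in the code below asks the API to return shortened vectors directly. The sketch below does the manual equivalent on a toy vector; `shorten_embedding` is a hypothetical helper and the vector is made up.

```python
import math


def shorten_embedding(vector, dims):
    """Keep the first `dims` values, then rescale to unit L2 norm (hypothetical helper)."""
    if dims <= 0 or dims > len(vector):
        raise ValueError("dims must be between 1 and len(vector)")
    head = vector[:dims]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in head]


toy = [3.0, 4.0, 0.5, -0.2]  # a made-up 4-dimensional "embedding"
print(shorten_embedding(toy, 2))  # [0.6, 0.8]
```

Shorter vectors typically reduce storage and similarity-search cost in a vector database such as Milvus, at some cost in retrieval quality. That trade-off is exactly the kind of RAG parameter worth checking with an evaluation like this one.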

```python
import os

# The OpenAI clients read the API key from the OPENAI_API_KEY environment variable;
# reading it here fails early with a KeyError if it is not set.
openai_api_key = os.environ["OPENAI_API_KEY"]

# Choose the metrics you want to see.
from ragas.metrics import context_precision, context_recall, faithfulness

metrics = [context_precision, context_recall, faithfulness]

# Change the LLM-as-judge.
from ragas.llms import llm_factory

LLM_NAME = "gpt-3.5-turbo"
ragas_llm = llm_factory(model=LLM_NAME)

# Also change the embeddings (only embedding-based metrics, such as answer_relevancy, use them).
from langchain_openai.embeddings import OpenAIEmbeddings
from ragas.embeddings import LangchainEmbeddingsWrapper

lc_embeddings = OpenAIEmbeddings(model="text-embedding-3-small", dimensions=512)
ragas_emb = LangchainEmbeddingsWrapper(embeddings=lc_embeddings)

# Change the default models used for each metric.
for metric in metrics:
    metric.llm = ragas_llm
    metric.embeddings = ragas_emb

# Evaluate the dataset.
from ragas import evaluate

ragas_result = evaluate(
    ragas_input_ds,
    metrics=metrics,
    llm=ragas_llm,
)

# View evaluations.
ragas_output_df = ragas_result.to_pandas()
ragas_output_df.head()
```

You can see the full code for my RAG demo and evaluation notebooks on GitHub.
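Per-question scores are most useful once they are summarized per metric, for example to compare two RAG configurations. The following is a minimal sketch in plain Python, with three assumptions. First, the rows have been pulled out of `ragas_output_df` (for instance with pandas' `ragas_output_df.to_dict("records")`). Second, a score the judge could not produce shows up as NaN. Third, the scores below are made up. `mean_scores` is a hypothetical helper.

```python
import math


def mean_scores(rows, metric_names):
    """Average each metric over rows, skipping missing (None or NaN) scores."""
    summary = {}
    for name in metric_names:
        values = [
            row[name]
            for row in rows
            if row.get(name) is not None and not math.isnan(row[name])
        ]
        # A metric with no valid scores stays NaN rather than pretending to be 0.
        summary[name] = sum(values) / len(values) if values else float("nan")
    return summary


# Made-up per-question scores in the shape of ragas_output_df rows.
rows = [
    {"question": "q1", "context_precision": 1.0, "context_recall": 0.5, "faithfulness": 1.0},
    {"question": "q2", "context_precision": 0.5, "context_recall": float("nan"), "faithfulness": 0.75},
]
print(mean_scores(rows, ["context_precision", "context_recall", "faithfulness"]))
# {'context_precision': 0.75, 'context_recall': 0.5, 'faithfulness': 0.875}
```

Skipping NaN rather than counting it as zero keeps one failed judge call from dragging down a metric. It is still worth tracking how many scores were skipped, though.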

Conclusion

This blog explored the ongoing retrieval challenge in Generative AI, focusing on Retrieval Augmented Generation (RAG) for natural language AI. Experimentation is needed to optimize RAG parameters with your data using evaluations. Currently, evaluations can be automated using Large Language Models (LLMs) as judges. I discussed some key RAG evaluation metrics and their calculation, along with an implementation using the Milvus vector database and the Ragas package.