📝 Guest Post: LLMs & humans: The perfect duo for data labeling

How to build a pipeline to achieve superhuman quality

In this guest post, Sergei Tilga, R&D Lead at Toloka AI, offers practical insights on the power of combining Large Language Models (LLMs) and human annotators in data labeling projects to optimize for quality while also reducing costs. Rooted in real-world results and examples, this article provides a valuable guide for anyone looking to maximize the potential of LLMs and human input in data labeling processes.

You may have heard that LLMs are faster, cheaper, and better than humans at text annotation. Does this mean we no longer need human data labeling? Not exactly.

Based on our experience using LLMs on real-world text annotation projects, even the latest state-of-the-art models aren’t meeting quality expectations. What’s more, these models aren’t always cheaper than data labeling with human annotators.

But we’ve found that it is possible to elevate data quality by using an optimal mix of human and LLM labeling.

Comparing the quality of LLMs and human labeling

A growing number of general-purpose LLMs are publicly available, and they fall into one of two camps: open source or closed API. Open-source models are generally much cheaper to run.

You can see comparisons of overall model performance on Meta’s benchmarks table, in this paper, and on the LMSYS leaderboard. However, most evaluation projects are based on open-source datasets. To get a clear picture of LLM performance, we need to compare output on real-world projects as well.

We’ve been testing multiple LLMs on our own data labeling projects and comparing them to human labeling with a crowd of trained annotators. To assess the quality of both humans and models, we compare their labels to ground truth labels prepared by experts.
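As a minimal sketch of this comparison, the snippet below scores crowd labels and model labels against the same expert labels. The function name and the toy labels are invented for illustration; they are not Toloka data or tooling.

```python
def accuracy(labels, ground_truth):
    """Share of items whose label matches the expert (ground truth) label."""
    if len(labels) != len(ground_truth):
        raise ValueError("labels and ground_truth must have the same length")
    if not ground_truth:
        raise ValueError("need at least one item")
    matches = sum(1 for got, want in zip(labels, ground_truth) if got == want)
    return matches / len(ground_truth)


# Toy data, invented for illustration only.
expert = ["spam", "ham", "spam", "ham", "ham"]
crowd = ["spam", "ham", "spam", "spam", "ham"]
model = ["spam", "ham", "ham", "spam", "ham"]

crowd_acc = accuracy(crowd, expert)
model_acc = accuracy(model, expert)
print(f"crowd: {crowd_acc:.2f}, model: {model_acc:.2f}")
```

Scoring both sources against the same expert set keeps the comparison fair, since neither humans nor the model are judged on easier items.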

For most of the projects we run, we see good results from two models in particular:

GPT-4 from OpenAI (closed API)

Llama 2 from Meta (open source)

The dilemma of optimizing costs vs quality

If we’re talking about an open source model (Llama 2) where we only pay for GPU usage, data labeling might be hundreds of times cheaper than human crowd labeling. But what’s the tradeoff? It all depends on the complexity of the task.

Our experiments have achieved near-human accuracy with Llama 2 on sentiment analysis, spam detection, and a few other types of tasks. The inference price is generally low, but there are some other expenses involved. You need to have the infrastructure to run fine-tuning and inference, and you need to collect a human-labeled dataset for fine-tuning at the outset.

GPT-4 provides acceptable results right out of the gate. You just need to write a detailed prompt with task instructions and examples in text format. However, inference is either more expensive than human labeling or only slightly cheaper, and the quality is lower.

The good news is you don’t have to choose between using a model or using human annotation, and you don’t have to sacrifice quality to gain efficiency. We get the best results when we maximize the capabilities of human labeling and LLM labeling at the same time.

By carefully analyzing output, we often find subsets of data where a fine-tuned LLM performs better than humans. We can take advantage of this strength to achieve overall quality that is better than human-only or LLM-only labeling.

Does this mean we have a silver bullet to enhance quality without spending more money? Absolutely.

The silver bullet: intelligent hybrid pipelines

Toloka uses hybrid pipelines, meaning we add an LLM to the data labeling pipeline alongside humans. When done correctly, this approach can optimize costs while achieving unprecedented quality.

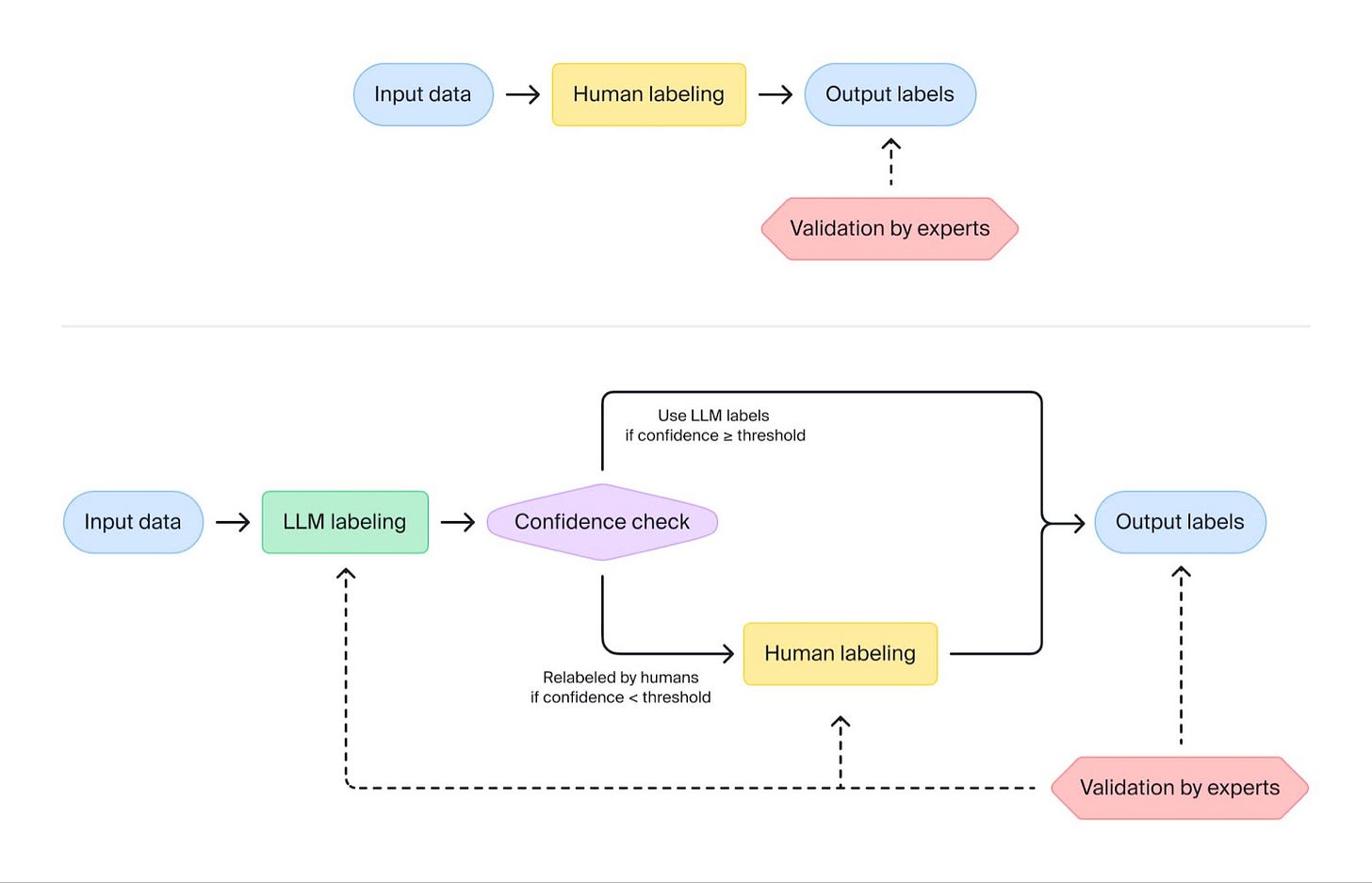

So how do we structure a hybrid pipeline?

When LLMs output a label, they can also output the confidence level. We can use this information in a pipeline that combines LLM and human labeling. The approach is simple: the LLM labels the data, and labels with low confidence are sent to the crowd for relabeling.

We can change the confidence threshold to adjust the amount of data that is relabeled by humans and control the quality of the final labels.
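The routing step described above can be sketched in a few lines. This is an illustration under assumptions, not Toloka's implementation: the `Prediction` class, the `route` function, and the 0.8 threshold are hypothetical, and the confidence is assumed to be a number between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model confidence, assumed to lie in [0, 1]


def route(predictions, threshold):
    """Split LLM predictions into auto-accepted labels and items for the crowd."""
    accepted, to_crowd = [], []
    for p in predictions:
        if p.confidence >= threshold:
            accepted.append(p)   # keep the LLM label as final
        else:
            to_crowd.append(p)   # low confidence: send for human relabeling
    return accepted, to_crowd


# Toy predictions, invented for illustration.
preds = [
    Prediction("a", "spam", 0.97),
    Prediction("b", "ham", 0.55),
    Prediction("c", "spam", 0.80),
]
accepted, to_crowd = route(preds, threshold=0.8)
print([p.item_id for p in accepted], [p.item_id for p in to_crowd])
```

Raising the threshold moves more items into the crowd queue; lowering it lets the model label more of the data on its own.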

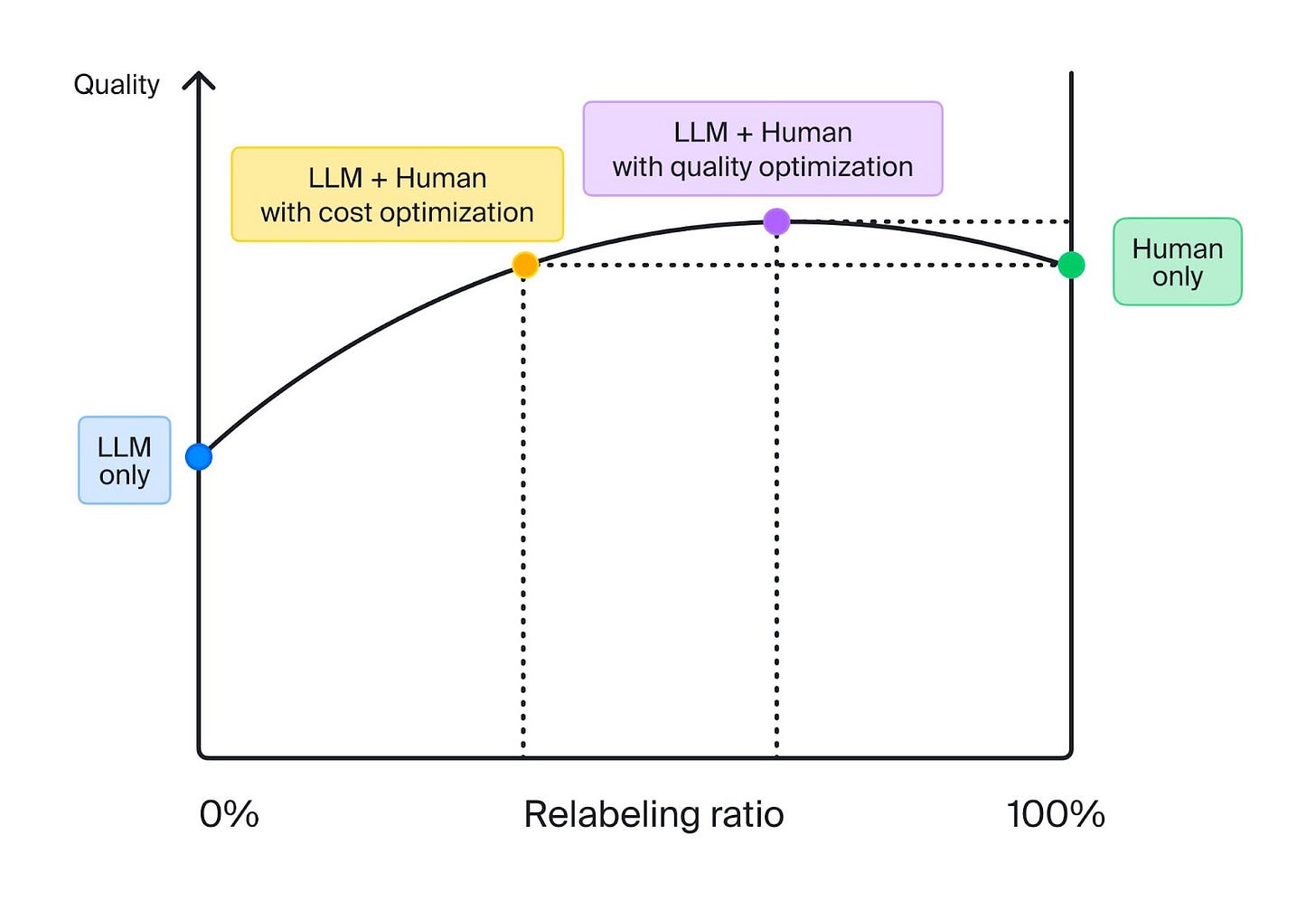

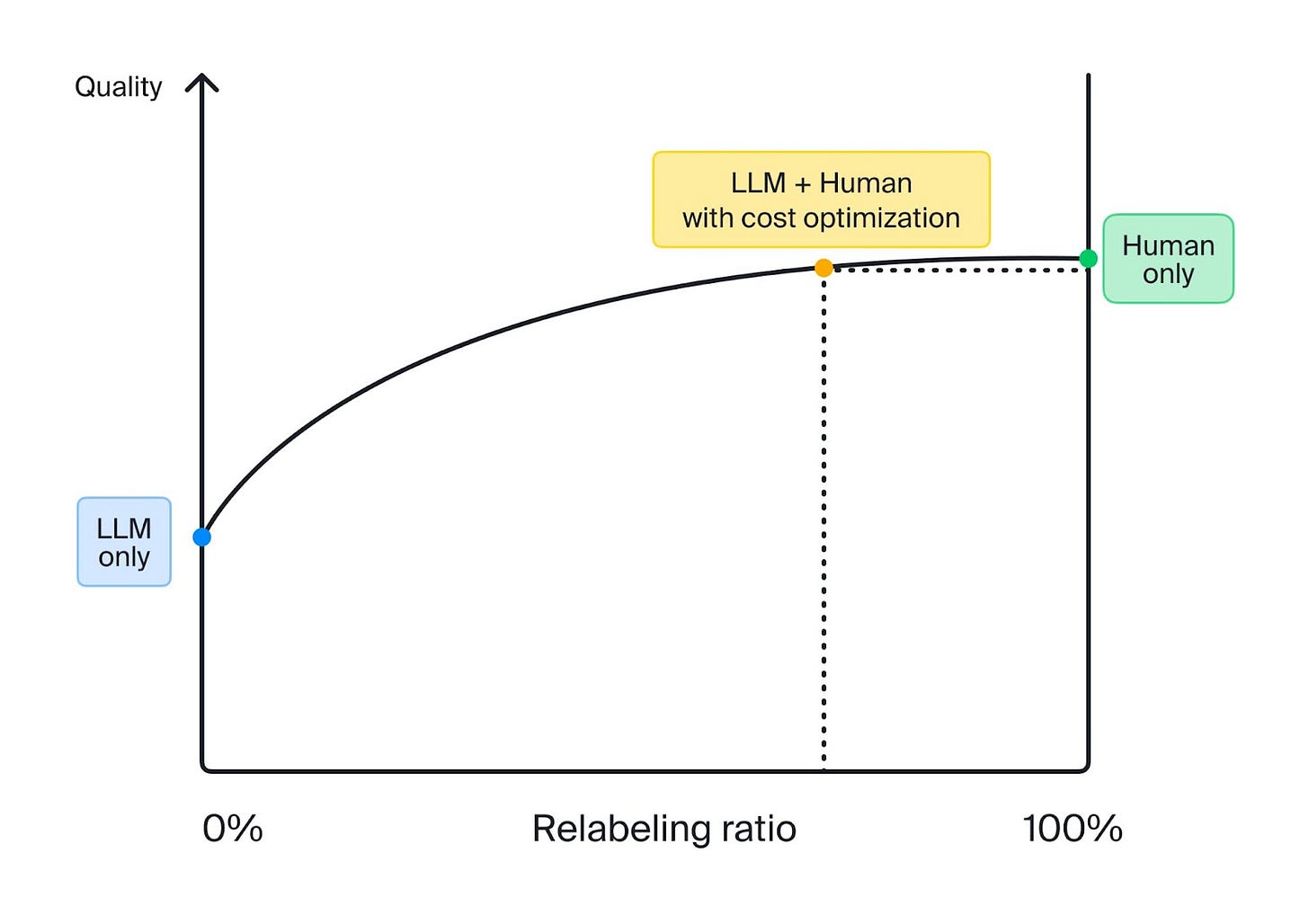

For typical tasks, the more we use human labeling, the higher the cost. The trick is to find the right threshold for model confidence to optimize for quality or cost, depending on our goal. We can choose any point on this curve and achieve the cost-quality trade-off that is needed for a specific task.
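One way to trace such a curve is to sweep the threshold over a validation set that has LLM labels, human labels, and expert labels for every item. The sketch below does that; the per-label costs and the toy records are hypothetical placeholders, and it assumes the LLM runs on every item while humans only see low-confidence ones.

```python
def sweep(records, thresholds, llm_cost=0.001, human_cost=0.05):
    """Compute cost and accuracy of the hybrid pipeline at each threshold.

    records: list of (confidence, llm_label, human_label, expert_label).
    llm_cost and human_cost are hypothetical per-item prices.
    """
    points = []
    total = len(records)
    for t in thresholds:
        correct = 0
        n_human = 0
        for conf, llm, human, expert in records:
            if conf >= t:
                final = llm          # confident enough: keep the LLM label
            else:
                final = human        # otherwise use the human label
                n_human += 1
            correct += final == expert
        points.append({
            "threshold": t,
            "human_share": n_human / total,
            "accuracy": correct / total,
            "cost": total * llm_cost + n_human * human_cost,
        })
    return points


# Toy validation set, invented for illustration.
records = [
    (0.95, "pos", "pos", "pos"),
    (0.90, "neg", "pos", "neg"),  # LLM right, human wrong
    (0.60, "pos", "neg", "neg"),  # LLM wrong, human right
    (0.40, "neg", "pos", "pos"),  # LLM wrong, human right
]
curve = sweep(records, thresholds=[0.0, 0.5, 0.8, 1.01])
for point in curve:
    print(point)
```

In this toy set the middle threshold beats both extremes: LLM-only (threshold 0.0) and human-only (threshold above 1) each make mistakes that the other source corrects.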

In some cases, quality optimization with LLMs just doesn’t work. This usually happens on complex tasks where the model’s quality lags far behind human quality. We can still use the model for cost optimization and get results that are very close to human accuracy, as shown below.

Examples of cost-optimized pipelines

Here are some real-life results of using cost-optimized hybrid pipelines, compared to human-only and LLM-only workflows.

The potential for optimization strongly depends on the complexity of the task. Naturally, the further the model’s output lags behind human quality, the less we can automate.

Examples of quality-optimized pipelines

This table shows results on quality-optimized pipelines, where we leveraged the LLM to achieve better-than-human quality.

On these tasks, the optimized hybrid pipeline performed impressively well compared to humans alone, while also reducing costs.

Here’s how we achieve superhuman accuracy:

Fine-tune models using high-quality datasets labeled by domain experts.

Strategically choose the confidence threshold for labeling with LLMs, and use human labeling to close any gaps.

Identify the subset of data where LLM output is more accurate than human labeling — the bigger the subset, the more cost savings are possible while meeting high quality standards.
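The last step can be approximated by comparing LLM and human accuracy within confidence bins on an expert-labeled set. The sketch below is one possible way to do it, not necessarily how Toloka does it; the bin edges and the records are made up, and the edges are assumed to include 0.0 so every confidence value falls into a bin.

```python
def accuracy_by_bin(records, edges):
    """Compare LLM and human accuracy per confidence bin.

    records: list of (confidence, llm_label, human_label, expert_label).
    edges: lower bounds of the bins; must include 0.0.
    """
    bins = {}
    for conf, llm, human, expert in records:
        lower = max(e for e in edges if e <= conf)  # bin this item falls into
        stats = bins.setdefault(lower, {"n": 0, "llm": 0, "human": 0})
        stats["n"] += 1
        stats["llm"] += llm == expert
        stats["human"] += human == expert
    return {
        lower: {
            "n": s["n"],
            "llm_acc": s["llm"] / s["n"],
            "human_acc": s["human"] / s["n"],
        }
        for lower, s in sorted(bins.items())
    }


# Toy expert-labeled set, invented for illustration.
records = [
    (0.95, "pos", "pos", "pos"),
    (0.92, "neg", "pos", "neg"),
    (0.85, "pos", "pos", "pos"),
    (0.65, "neg", "neg", "neg"),
    (0.55, "pos", "neg", "neg"),
    (0.30, "neg", "pos", "pos"),
]
report = accuracy_by_bin(records, edges=[0.0, 0.5, 0.8])
llm_wins = [lower for lower, s in report.items() if s["llm_acc"] > s["human_acc"]]
print(report)
print("bins where the LLM beats humans:", llm_wins)
```

With real data each bin needs enough expert-labeled items for the comparison to be meaningful; a handful of items per bin, as in this toy set, is only enough to show the mechanics.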

Human + LLM pipelines in production

Reliable quality is our number one priority, and we build quality control steps into every data labeling pipeline that goes into production. We check up to 5% of the final labels — both LLM output and human output — using validation by experts. We then use the results to build metrics that help us continually monitor the overall quality of the pipeline.
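A spot check like this can be sketched as a random sample plus a simple accuracy estimate. The helper names below are hypothetical, the 5% rate is taken as an upper bound from the text, and the interval uses a plain normal approximation, which is an assumption of this example rather than the metric Toloka reports.

```python
import math
import random


def draw_audit_sample(item_ids, rate=0.05, seed=0):
    """Pick a random subset of final labels (at most `rate` of them) for expert review."""
    k = max(1, int(len(item_ids) * rate))
    rng = random.Random(seed)  # fixed seed so the sample is reproducible
    return rng.sample(item_ids, k)


def estimate_accuracy(n_checked, n_correct, z=1.96):
    """Point estimate and approximate 95% interval for pipeline accuracy."""
    p = n_correct / n_checked
    half_width = z * math.sqrt(p * (1 - p) / n_checked)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


# 1,000 final labels from both LLM and human output (toy ids).
final_ids = list(range(1000))
audit = draw_audit_sample(final_ids)

# Suppose experts confirm 45 of the sampled labels (hypothetical count).
p, low, high = estimate_accuracy(n_checked=len(audit), n_correct=45)
print(f"audited {len(audit)} items, accuracy {p:.2f} ({low:.2f} to {high:.2f})")
```

Tracking the estimate over time, rather than a single reading, is what makes a drop in quality visible.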

So how do we mitigate quality issues? If quality drops, the standard solution is to add more data and fine-tune the model again. Sometimes the data keeps getting more complex and we don’t see improvement in the model’s output. In this case, we can adjust the confidence threshold for the model so that a smaller percentage of data is labeled by the model, and more data is labeled by humans.
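Adjusting the threshold can be framed as a small search over recent expert-audited items: pick the lowest candidate threshold at which the labels the model keeps still meet a target accuracy. The function, candidate values, and target below are assumptions made for this sketch.

```python
def pick_threshold(audited, target_accuracy, candidates):
    """Return the lowest threshold whose auto-accepted LLM labels meet the target.

    audited: list of (confidence, llm_is_correct) from expert-checked items.
    Returns None if no candidate works, meaning all items should go to humans.
    """
    for t in sorted(candidates):
        kept = [ok for conf, ok in audited if conf >= t]
        if kept and sum(kept) / len(kept) >= target_accuracy:
            return t
    return None


# Toy audit results, invented for illustration.
audited = [
    (0.95, True), (0.90, True), (0.85, True), (0.80, False),
    (0.70, True), (0.60, False), (0.50, False),
]
candidates = [0.5, 0.6, 0.7, 0.8, 0.9]

relaxed = pick_threshold(audited, target_accuracy=0.75, candidates=candidates)
strict = pick_threshold(audited, target_accuracy=0.90, candidates=candidates)
print(relaxed, strict)
```

A stricter target pushes the threshold up, so the model labels a smaller share of the data and humans take on the rest.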

With the right expertise for fine-tuning and the perfect threshold to balance LLM and human labeling, hybrid pipelines can produce exceptional quality in many cases.

Preparing your own pipeline: paving the way to success

To run your own hybrid data labeling pipeline that leverages LLMs and human insight, you’ll need to lay the groundwork by putting four essentials in place:

A golden set: To improve the quality of LLM output on your specific task, you need an accurate expert-labeled dataset for fine-tuning. The more data you have and the higher the quality, the better your fine-tuned model will perform — and the more you’ll be able to automate data labeling. You can rely on qualified annotators from the Toloka crowd to collect high-quality training data.

Infrastructure for model training and inference, plus ML expertise for working with LLMs. Effective GPU utilization is crucial to make model labeling as cost-effective as possible. You will need:

Cloud instance with multiple GPUs

Code for model fine-tuning and inference

Storage and versioning of model weights

Tools for model validation and quality monitoring

An annotator workforce available on demand to handle labels with low confidence or complex tasks. Toloka’s global crowd is available 24/7 with annotators in every time zone.

Quality control strategy. Monitor the quality of output with ongoing human verification. Incorrect labels are sent for relabeling. At Toloka, we use advanced quality control techniques for human and LLM labeling, with continuous quality monitoring.

Toloka can help you at every stage of the AI development process. From finding the right LLM for your task to fine-tuning it and designing a hybrid pipeline tailored to your needs, our team is here to support you every step of the way. The decision to optimize for quality or cost is always up to you!