📌 EVENT: Join us at the LLMs in Production conference, the first of its kind

How can you actually use LLMs in production? There are still so many questions. Cost. Latency. Trust. What are the real use cases? What are the challenges in productionizing them? The MLOps Community decided to create the first free virtual conference to go deep into these unknowns. Come hear technical talks from over 30 speakers working at companies like You.com, Adept.ai, and Intercom.

We also created a report on how organizations are currently using LLMs. Over 100 participants gave candid feedback on whether they are using LLMs, their use cases, their main challenges and concerns, and the questions they still have. Register to receive this report!

A little more about the report:

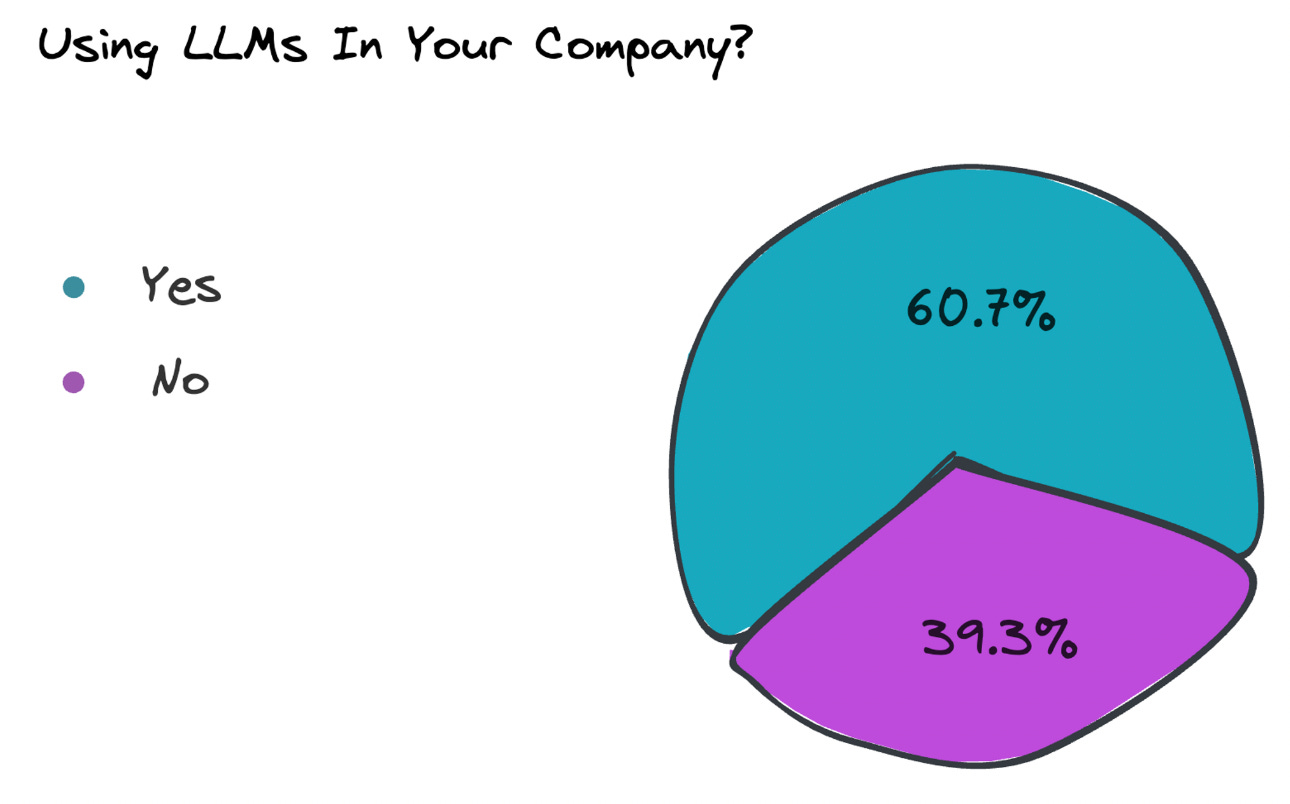

Recently, the MLOps Community held a roundtable on using Large Language Models in production and asked participants to fill out a survey on whether they were leveraging the technology in their organizations.

The success of this survey gave us the idea to host a one-day virtual conference with 35+ speakers, 3 tracks, and 2 workshops, all focused on how to take better advantage of this emerging technology.

LLMs in Production: Survey Highlights

Survey at a glance

The main takeaway from the LLMs in production survey is that most companies are in the early stages of figuring out how to use LLMs in their business. Challenges around cost, latency, output variability, and the effort of building sufficiently sophisticated infrastructure still keep teams from extracting the most value from their AI investments.

As a result, we are seeing a boom in the number of LLM infrastructure and developer tooling companies providing services to overcome these obstacles in the near term.

In this report, we focus on eight key survey findings and what they say about the LLMs in production landscape. They are as follows:

Common use cases like text generation and summarization are useful, but participants are going deeper, exploring ways to use LLMs for data enrichment, e.g. data labeling augmentation, synthetic data creation, and question generation for subject matter experts.

Whether to use LLMs within an organization is still an open question because of high costs and unknown ROI.

Slow LLM inference speeds make certain use cases impossible.

Current infrastructure is not designed for such massive models, so teams resort to workarounds and quick fixes.

Companies are building custom tooling to address pain points around output validation and prompt tracking.

Hallucinations present real ethical and business concerns. For some, it is a show-stopper.

Larger questions, such as which parts of current workflows will be replaced versus augmented, are still unanswered.

Prompting is a valuable skill. However, even the best prompts cannot force the model to give reliable output.

We will release the full report to all attendees of our LLMs in Production virtual conference on Thursday, April 13th. Join us to learn more about how organizations such as Intercom, You.com, and Adept AI are powering their LLM strategies.