📏 Edge#40: On the Measure of Intelligence

TheSequence is a convenient way to build and reinforce your knowledge about machine learning and AI.

In this issue:

we dive deep into a paper that challenges some of the best-established assumptions about how to measure intelligence in AI systems;

🧠 we announce the two winners of our last ten quizzes, chosen at random this time;

we start a new quiz cycle – participate to win a 6-month subscription.

Enjoy the learning!

Last Thursday we introduced a new format – What’s New in AI, a deep dive into one of the freshest research papers or technology frameworks that’s worth your attention. Our goal is to keep you up to date with new developments in AI in a way that complements the concepts we are debating in other editions of our newsletter.

💥 What’s New in AI: On the Measure of Intelligence Is One of the Most Groundbreaking Papers of Recent Years in Deep Learning

François Chollet is the creator of the Keras framework and one of the brightest minds in the artificial intelligence (AI) space. In this paper, he challenges some of the best-established assumptions about how to measure intelligence in AI systems. Let’s discuss further:

On the Measure of Intelligence

Every once in a while, you encounter a research paper that is so simple yet so profound and brilliant that you wish you had written it yourself. That’s how I felt when I read François Chollet’s On the Measure of Intelligence. The paper resonated with me not only because it confronts some of the key philosophical and technical challenges about artificial intelligence (AI) systems that I have spent time thinking about, but also because it does so in such an elegant way that it is hard to argue with. Mr. Chollet’s thesis is remarkably simple: for AI systems to reach their potential, we need quantitative and actionable methods that measure intelligence in a way that parallels human cognition.

Mr. Chollet’s thesis might seem counterintuitive given the recent achievements of AI systems. After all, we are unquestionably producing algorithms that achieve superhuman performance in games like Go, Poker or StarCraft, and that are capable of driving vehicles, boats and planes. However, how intelligent are those systems? Despite these tangible achievements, we continue to measure “intelligence” by how effectively a system accomplishes a single task. But is that a real measure of intelligence? Just because a system can play Go doesn’t mean that it can understand Shakespeare or reason through economic problems. As humans, we judge intelligence based on abilities such as analytical and abstract reasoning, memory, common sense and many others. Historically, two fundamental schools of thought have shaped how intelligence is defined.

The Inspiration: Darwin-Turing and Psychometrics

One of the fascinating things about Mr. Chollet’s paper is its deep scientific roots. Many of the ideas can be found in the historical theories of intelligence as well as in the relatively obscure field of psychometrics.

Throughout the history of science, there have been two dominant views of intelligence: the Darwinist view rooted in evolution and Turing’s view of machine intelligence. In the Darwinist view, human cognition is the result of special-purpose adaptations that arose to solve specific problems encountered by humans throughout their evolution. A contrasting, and in some ways complementary, perspective was pioneered by Alan Turing. Turing’s vision of intelligence is inspired by British philosopher John Locke’s Tabula Rasa theory, which sees the mind as a flexible, adaptable, highly general process that turns experience into behavior, knowledge, and skills.

A lot of Mr. Chollet’s theory of quantifiable intelligence is inspired by the field of psychometrics. Conceptually, psychometrics focuses on studying the development of skills and knowledge in humans. A fundamental notion in psychometrics is that intelligence tests evaluate broad cognitive abilities as opposed to task-specific skills. Importantly, an ability is an abstract construct (based on theory and statistical phenomena) as opposed to a directly measurable, objective property of an individual mind, such as a score on a specific test. Broad abilities in AI, which are also constructs, face exactly the same evaluation problems as cognitive abilities in psychometrics. Psychometrics approaches the quantification of abilities by using broad batteries of test tasks rather than any single task, and by analyzing test results via probabilistic models.
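To make “analyzing test results via probabilistic models” more concrete, here is a minimal sketch using the Rasch model (one-parameter item response theory), a standard psychometric model that we picked purely as an illustration; Chollet’s paper does not prescribe it. The sketch assumes each task in a battery has a known difficulty and estimates a single latent ability from pass/fail results by maximum likelihood. The function names are our own.

```python
import math
from typing import Sequence

def rasch_prob(theta: float, difficulty: float) -> float:
    """P(solve) for ability `theta` on an item of the given difficulty (Rasch / 1PL IRT model)."""
    return 1.0 / (1.0 + math.exp(-(theta - difficulty)))

def estimate_ability(responses: Sequence[int], difficulties: Sequence[float], iters: int = 50) -> float:
    """Maximum-likelihood estimate of one latent ability from pass/fail results on a test battery.

    responses: 1 if the task was solved, 0 otherwise (one entry per task)
    difficulties: assumed-known difficulty of each task, on the same scale as the ability
    """
    if len(responses) != len(difficulties):
        raise ValueError("one difficulty per response is required")
    if all(r == 1 for r in responses) or all(r == 0 for r in responses):
        # Perfect or zero scores push the maximum-likelihood estimate to +/- infinity
        raise ValueError("ability MLE is undefined for all-pass or all-fail patterns")
    theta = 0.0
    for _ in range(iters):
        probs = [rasch_prob(theta, b) for b in difficulties]
        grad = sum(r - p for r, p in zip(responses, probs))  # d(log-likelihood)/d(theta)
        hess = -sum(p * (1.0 - p) for p in probs)            # second derivative, always < 0
        theta -= grad / hess                                  # Newton-Raphson update
    return theta

battery = [-1.0, 0.0, 1.0]  # easy, medium and hard tasks
print(estimate_ability([1, 1, 0], battery) > estimate_ability([1, 0, 0], battery))  # True
```

The ability is never read off a single task: it is inferred from the whole response pattern across the battery, which is the psychometric idea the paper borrows.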

A Quantifiable Measure of Intelligence

Using some of the ideas from psychometrics, Chollet arrives at the following definition of intelligence:

The intelligence of a system is a measure of its skill-acquisition efficiency over a scope of tasks, with respect to priors, experience, and generalization difficulty.

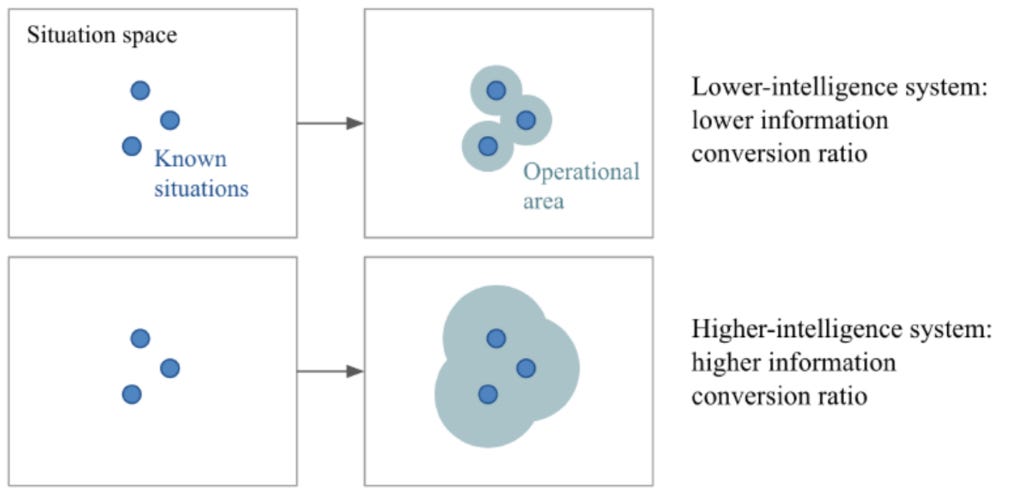

This definition of intelligence incorporates concepts such as meta-learning, priors, memory, and fluid intelligence. From an AI perspective, if we take two systems that start from a similar set of knowledge priors and go through a similar amount of experience (e.g. practice time) with respect to a set of tasks not known in advance, the system with higher intelligence is the one that ends up with greater skills. Another way to think about it is that “higher intelligence” systems “cover more ground” in future situation space using the same information.

Image credit: The original paper
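The comparison between two systems can be illustrated with a deliberately simplified toy score. This is not the paper’s formal definition, which is built on algorithmic information theory; it only mirrors the definition’s ingredients: skill reached on each task, divided by the priors and experience spent, weighted by a generalization-difficulty term and averaged over a scope of tasks. All field names, units and numbers below are hypothetical.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class TaskOutcome:
    skill: float           # skill reached on the task after training, in [0, 1]
    priors: float          # hypothetical cost of built-in knowledge, arbitrary units
    experience: float      # hypothetical amount of practice/data consumed, arbitrary units
    gen_difficulty: float  # hypothetical weight: how hard the task is to generalize to

def toy_intelligence(outcomes: List[TaskOutcome]) -> float:
    """Average over a scope of tasks of difficulty-weighted skill per unit of priors + experience.

    A toy stand-in for "skill-acquisition efficiency", not Chollet's formal measure.
    """
    if not outcomes:
        raise ValueError("need at least one task in scope")
    scores = [o.gen_difficulty * o.skill / (o.priors + o.experience) for o in outcomes]
    return sum(scores) / len(scores)

# Two systems with the same priors and the same experience on the same two tasks
system_a = [TaskOutcome(0.5, 1.0, 9.0, 1.0), TaskOutcome(0.4, 1.0, 9.0, 2.0)]
system_b = [TaskOutcome(0.9, 1.0, 9.0, 1.0), TaskOutcome(0.8, 1.0, 9.0, 2.0)]
print(toy_intelligence(system_a) < toy_intelligence(system_b))  # True
```

System B scores higher because it acquired more skill from identical inputs; by the same logic, a system that reaches the same skill with less experience would also score higher.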

The previous definition of intelligence looks compelling from a theoretical standpoint, but how does it map onto the architecture of AI systems?

In Chollet’s framework, an intelligent system is an AI program that generates a task-specific skill program, and it is that skill program that actually interacts with the task. For instance, a neural network generation and training algorithm for games would be an “intelligent system”, and the inference-mode, game-specific network it outputs at the end of a training run on one game would be a “skill program”. Similarly, a program synthesis engine capable of looking at a task and outputting a solution program would be an “intelligent system”, and the resulting solution program, capable of handling future input grids for this task (as in the ARC puzzles discussed below), would be a “skill program”.

Image credit: The original paper
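The split between the system that generates a skill and the skill itself can be sketched in a few lines. The example below is a toy, brute-force program synthesizer over four hypothetical grid transformations, assuming a task is given as input/output grid pairs: the search procedure stands in for the “intelligent system” and the function it returns stands in for the “skill program”. It only illustrates the distinction; real program synthesis engines search vastly larger program spaces.

```python
from typing import Callable, List, Optional, Tuple

Grid = List[List[int]]
SkillProgram = Callable[[Grid], Grid]

# A tiny, hypothetical library of grid transformations (not the DSL of any real ARC solver)
PRIMITIVES: List[SkillProgram] = [
    lambda g: [row[:] for row in g],           # identity
    lambda g: [row[::-1] for row in g],        # mirror each row left-right
    lambda g: [row[:] for row in g[::-1]],     # flip rows top-bottom
    lambda g: [list(col) for col in zip(*g)],  # transpose
]

def intelligent_system(demos: List[Tuple[Grid, Grid]]) -> Optional[SkillProgram]:
    """Brute-force program synthesis: return the first primitive consistent with every demo pair.

    This search procedure plays the "intelligent system" role; the function it returns
    is the "skill program" that then handles new inputs for the task on its own.
    """
    for program in PRIMITIVES:
        if all(program(inp) == out for inp, out in demos):
            return program
    return None  # no primitive explains the demonstrations

skill = intelligent_system([([[1, 2], [3, 4]], [[2, 1], [4, 3]])])
print(skill([[5, 6, 7]]))  # [[7, 6, 5]]
```

Once synthesized, the skill program no longer needs the search machinery: it is a fixed, task-specific artifact, which is why measuring its performance alone says little about the intelligence of the system that produced it.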

Now that we have a working definition of intelligence for AI systems, we need a way to measure it 😉

ARC

Mr. Chollet is not only a great AI researcher but also a stellar programmer. It was only natural that he complemented his theory of intelligence with a practical way to measure it.

The Abstraction and Reasoning Corpus (ARC) is a dataset proposed by Chollet as a benchmark for the kind of intelligence defined in the previous sections. Conceptually, ARC can be seen as a psychometric test for AI systems: it tries to evaluate a qualitative form of generalization rather than performance on a specific task. ARC comprises a training set and an evaluation set. The training set features 400 tasks, while the evaluation set features 600 tasks. The evaluation set is further split into a public evaluation set (400 tasks) and a private evaluation set (200 tasks). All tasks are unique, and the set of test tasks and the set of training tasks are disjoint. Given a specific task, the ARC test interface looks like the following figure.

Image credit: The original paper
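In the public ARC repository on GitHub, each task is a JSON file with a “train” list of demonstration pairs and a “test” list of pairs, where every pair holds an “input” and an “output” grid of integers from 0 to 9 (one integer per color). Assuming that layout, the sketch below parses a task and scores a solver by exact match on the test outputs. The function names and the toy task are hypothetical, and this single-attempt scoring may differ from the official evaluation protocol.

```python
import json
from typing import Callable, List

Grid = List[List[int]]
Solver = Callable[[List[dict], Grid], Grid]  # (demonstration pairs, test input) -> predicted output

def load_task(text: str) -> dict:
    """Parse an ARC-style task: JSON with "train" and "test" lists of {"input", "output"} pairs."""
    task = json.loads(text)
    for split in ("train", "test"):
        for pair in task[split]:
            for grid in (pair["input"], pair["output"]):
                # Each cell is a color code from 0 to 9
                if not all(isinstance(c, int) and 0 <= c <= 9 for row in grid for c in row):
                    raise ValueError(f"invalid cell value in {split} grid")
    return task

def score(task: dict, solver: Solver) -> float:
    """Fraction of test inputs whose predicted grid matches the expected output exactly."""
    tests = task["test"]
    hits = sum(solver(task["train"], pair["input"]) == pair["output"] for pair in tests)
    return hits / len(tests)

# A made-up task in the same JSON layout: the hidden rule mirrors each row
example = '''{
  "train": [{"input": [[1, 2], [3, 4]], "output": [[2, 1], [4, 3]]}],
  "test":  [{"input": [[5, 0, 7]], "output": [[7, 0, 5]]}]
}'''
task = load_task(example)
mirror_rows: Solver = lambda train, grid: [row[::-1] for row in grid]
print(score(task, mirror_rows))  # 1.0
```

Exact match is deliberately strict: a prediction that is off by a single cell counts as a failure, so partial pattern matching earns no credit.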

The initial release of ARC is available on GitHub.

The rapid growth of AI has caused an explosion in the volume of published research. Every day, dozens of machine learning papers are published in different outlets. In that ocean of complex information, it’s hard to find papers that jump off the page. On the Measure of Intelligence is unquestionably one of the most groundbreaking papers of recent years in AI research. The controversial yet clear and practical ideas expressed by Mr. Chollet are likely to play a role in the next decade of the machine learning field.

🧠 The Quiz

After ten quizzes, we are ready to announce two winners. This time we decided to let a machine make a random choice.

The readers with the emails daniel.m…c…@gmail.com and nikos……@gmail.com each receive a 6-month subscription. Congratulations!

Now, to our regular quiz. Participate to check your knowledge and become a winner. The question is the following:

The “On the Measure of Intelligence” paper proposes a new method to quantify intelligence in AI agents. What’s the fundamental unit of measure of the proposed methodology?

Thank you. See you on Sunday 😉

TheSequence is a summary of groundbreaking ML research papers, engaging explanations of ML concepts, and exploration of new ML frameworks and platforms. TheSequence keeps you up to date with the news, trends, and technology developments in the AI field.

5 minutes of your time, 3 times a week – you will steadily become knowledgeable about everything happening in the AI space.