📊 Edge#37: What is Model Drift?

TheSequence is a convenient way to build and reinforce your knowledge about machine learning and AI

In this issue:

we explain what model drift is;

we review the pillars of robust machine learning summarized by DeepMind;

we discuss Fiddler, an ML monitoring platform with built-in model drift detection.

Enjoy the learning!

💡 ML Concept of the Day: What is Model Drift?

“A model that predicts stupid things is worse than one that doesn’t predict at all.” A not-so-polite phrase, which was regularly used by one of my mentors in the machine learning space, expresses the importance of evaluating the accuracy of predictions in machine learning models over time. Sadly, every machine learning practitioner needs to confront the situation in which the performance of a production model degrades over time. One of the causes of that phenomenon is known as model drift and is one of the key aspects in the maintenance and versioning of machine learning solutions.

Conceptually, model drift describes a scenario in which the model target has changed relative to its baseline. This could be due to changes in the distribution of the data, in specific features, or in the internals of the prediction model. In practice, model drift can take different shapes and forms:

Concept Drift: The best known form of model drift, concept drift happens when there is a change in the intrinsic relationship between inputs and outputs in the machine learning model. This could be due to changes in the context of the predicted value over time.

Data Drift: Like concept drift, data drift degrades predictions over time. However, data drift refers to scenarios in which the degradation is a direct consequence of a change in the distribution of the input data, even when the underlying relationship between inputs and outputs stays the same.
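
To make the distinction concrete, here is a minimal sketch on synthetic data. Everything in it is an assumption made for illustration: the frozen `model`, the threshold rules, and the uniform input ranges are hypothetical, and the example follows the common convention that concept drift changes the input-output rule while data drift changes only the inputs.

```python
import random
from statistics import mean


def model(x):
    """Hypothetical frozen model, learned when the true rule was `x > 0.5`."""
    return x > 0.5


def accuracy(inputs, true_rule):
    """Fraction of inputs on which the frozen model agrees with the current true rule."""
    return sum(model(x) == true_rule(x) for x in inputs) / len(inputs)


def original_rule(x):
    return x > 0.5


def changed_rule(x):
    # Concept drift: the relationship between input and output has moved.
    return x > 0.7


rng = random.Random(0)
baseline_inputs = [rng.uniform(0.0, 1.0) for _ in range(5000)]
# Data drift: the inputs now come from a different range, the rule is unchanged.
shifted_inputs = [rng.uniform(0.4, 1.0) for _ in range(5000)]

print("baseline accuracy:     ", accuracy(baseline_inputs, original_rule))
print("under concept drift:   ", accuracy(baseline_inputs, changed_rule))
print("under data drift:      ", accuracy(shifted_inputs, original_rule))
print("input mean before/after:", mean(baseline_inputs), mean(shifted_inputs))
```

In this toy setup, concept drift shows up only once fresh labels are compared with predictions, whereas data drift is visible in the inputs alone. A real model is rarely perfect everywhere, so a shift in the inputs can still hurt accuracy in practice.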

There are several statistical techniques, such as the Kolmogorov-Smirnov test or Kullback–Leibler divergence, that are typically used for model drift analysis.
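
As a rough illustration of both techniques, the sketch below compares a reference sample of one feature (for example, from training time) with a newer sample. It is written in plain Python to stay self-contained; the function names, the bin count and the smoothing constant are assumptions made for this example, and in practice a library routine such as `scipy.stats.ks_2samp` would typically be used for the Kolmogorov-Smirnov test.

```python
import math
import random
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    gap = 0.0
    for x in ref + cur:
        cdf_ref = bisect_right(ref, x) / len(ref)
        cdf_cur = bisect_right(cur, x) / len(cur)
        gap = max(gap, abs(cdf_ref - cdf_cur))
    return gap


def kl_divergence(reference, current, bins=10, eps=1e-6):
    """KL(reference || current) over a shared histogram, smoothed so empty bins do not divide by zero."""
    lo = min(min(reference), min(current))
    hi = max(max(reference), max(current))
    width = (hi - lo) / bins or 1.0

    def histogram(values):
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        total = len(values)
        return [(c + eps) / (total + eps * bins) for c in counts]

    p, q = histogram(reference), histogram(current)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


rng = random.Random(0)
training = [rng.gauss(0.0, 1.0) for _ in range(2000)]
recent_same = [rng.gauss(0.0, 1.0) for _ in range(2000)]
recent_shifted = [rng.gauss(1.0, 1.0) for _ in range(2000)]

print("KS, no drift:", ks_statistic(training, recent_same))
print("KS, shifted: ", ks_statistic(training, recent_shifted))
print("KL, no drift:", kl_divergence(training, recent_same))
print("KL, shifted: ", kl_divergence(training, recent_shifted))
```

Both scores stay close to zero when the two samples come from the same distribution and grow as the distributions move apart. What counts as "too large" is a judgment call that depends on sample size and on the feature being monitored.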

Regardless of how it manifests, model drift is one of those undesirable but omnipresent stages in the lifecycle of machine learning models. Surrounding machine learning programs with robust monitoring and interpretability components is one of the best ways to address model drift.
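
One simple form of such monitoring is to track accuracy over a sliding window of recent labeled predictions and raise a flag when it falls below the level measured at deployment. The sketch below is a hypothetical illustration, not the API of any particular product; the class name, window size and tolerance are assumptions.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent labeled predictions and flags a drop below a baseline."""

    def __init__(self, baseline_accuracy, window_size=200, tolerance=0.05):
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window_size)  # old outcomes fall off automatically

    def record(self, prediction, label):
        """Store whether one prediction matched its (possibly delayed) ground-truth label."""
        self.outcomes.append(prediction == label)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def drift_suspected(self):
        # Only judge once the window is full, to avoid alerts from a handful of samples.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy() < self.baseline_accuracy - self.tolerance


monitor = RollingAccuracyMonitor(baseline_accuracy=0.9, window_size=10)
for _ in range(10):
    monitor.record(prediction=1, label=1)  # model is still right
print(monitor.accuracy(), monitor.drift_suspected())
for _ in range(5):
    monitor.record(prediction=1, label=0)  # model starts missing
print(monitor.accuracy(), monitor.drift_suspected())
```

This approach depends on ground-truth labels arriving eventually. When labels are delayed or unavailable, comparing input distributions is usually the fallback.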

🔎 ML Research You Should Know: The Pillars of Robust Machine Learning

In the insightful blog post “Identifying and eliminating bugs in learned predictive models”, researchers from DeepMind summarized ideas from several papers on methods for increasing the robustness of machine learning systems.

The objective: Outline three fundamental techniques that enable the implementation of robust machine learning solutions.

Why is it so important: Phenomena such as model drift are a reflection of the fragility of machine learning models. DeepMind’s research presents some key ideas that can address these challenges.

Diving deeper: The notion of robustness in machine learning systems is typically associated with the performance of a model. However, that definition is fairly simplistic. Robustness should go beyond performing well against training and testing datasets: machine learning models should also behave according to a predefined set of specifications that describe the desired behavior of the system.

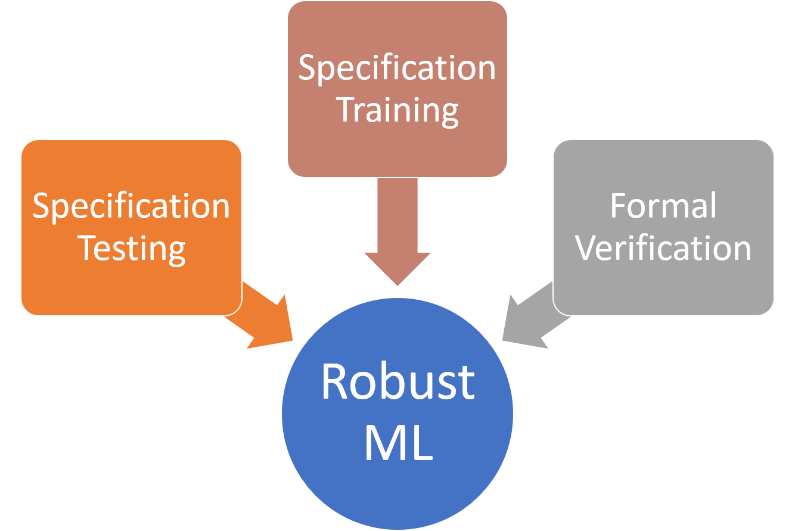

Writing robust machine learning programs is a combination of many aspects, ranging from accurate training datasets to efficient optimization techniques. However, most of these processes can be modeled as a variation of three main pillars that constitute the core focus of DeepMind’s research:

Testing Consistency with Specifications: Techniques to test that machine learning systems are consistent with properties (such as invariance or robustness) desired by the designer and users of the system.

Training Machine Learning models to be Specification-Consistent: Even with copious training data, standard machine learning algorithms can produce predictive models that make predictions inconsistent with desirable specifications like robustness or fairness — this requires us to reconsider training algorithms that produce models that not only fit training data well but also are consistent with a list of specifications.

Formally Proving that Machine Learning Models are Specification-Consistent: There is a need for algorithms that can verify that the model predictions are proven consistent with a specification of interest for all possible inputs. While the field of formal verification has studied such algorithms for several decades, these approaches do not easily scale to modern deep learning systems, despite impressive progress.

Testing, training and formal verification of specifications constitute three key pillars for the implementation of robust machine learning models. These ideas can also help sustain the performance of models over time, mitigating challenges such as model drift.
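
To give a flavor of the first pillar, here is a minimal sketch of testing a model against a robustness specification: "the prediction should not change when each input feature moves by at most epsilon". This is not DeepMind's method. The linear `predict` function is a hypothetical stand-in for a trained model, and random search is only one of several ways to look for violations.

```python
import random


def predict(features, weights=(0.8, -0.5), bias=0.1):
    """Hypothetical stand-in for a trained model: a linear classifier returning 0 or 1."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score >= 0 else 0


def find_robustness_violation(model, features, epsilon, trials=1000, seed=0):
    """Search for a perturbation within +/- epsilon per feature that changes the prediction.

    Returns the perturbed input that violates the specification, or None if none was found.
    Finding nothing is evidence, not proof: this is testing, not formal verification.
    """
    rng = random.Random(seed)
    expected = model(features)
    for _ in range(trials):
        perturbed = [x + rng.uniform(-epsilon, epsilon) for x in features]
        if model(perturbed) != expected:
            return perturbed
    return None


# An input far from the decision boundary and one very close to it.
print(find_robustness_violation(predict, [2.0, -2.0], epsilon=0.1))
print(find_robustness_violation(predict, [0.0, 0.21], epsilon=0.1))
```

The gap between "no violation found" and "no violation exists" is exactly what the third pillar, formal verification, aims to close.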

🤖 ML Technology to Follow: Fiddler is an ML Monitoring Platform with Built-In Model Drift Detection

Why should I know about this: Fiddler is one of the most complete ML monitoring and explainability platforms on the market.

What is it: Monitoring is one of the most important elements in the lifecycle of ML solutions. From the ML market standpoint, ML Ops Monitoring is an area sparking a new wave of startups and platforms trying to address the many difficult challenges of the space. Among that new generation of companies, Fiddler has distinguished itself with one of the most complete ML Ops Monitoring offerings in the market.

Fiddler’s approach to ML Ops Monitoring is based on addressing three fundamental challenges:

Model drift and outliers: The performance of ML models regularly drifts over time and is particularly impacted by outlier values that deviate from the distribution of the training set.

Uncertain feedback: Metrics such as prediction scores are often inaccurate indicators of the performance of an ML model.

Debugging: The process of debugging ML models remains incredibly tedious and challenging.

Fiddler tries to address these three challenges with an ML Ops Monitoring suite that includes capabilities such as drift detection, outlier analysis, data integrity analysis, alerting and many others. In the case of model drift, Fiddler includes an entire set of capabilities that analyzes the performance of the model over time and detects potential drift conditions.


Fiddler integrates with many machine learning frameworks such as TensorFlow, PyTorch and Scikit-Learn. The platform has also been adopted by several major industry players such as Uber, Nvidia, Facebook and many others.

How can I use it: Fiddler is commercially distributed at https://www.fiddler.ai/

🧠 The Quiz

Now, to our regular quiz. After ten quizzes, we will reward the winners. The questions are the following:

What’s the difference between model and data drift?

When should you consider using Fiddler AI?

That was fun! Thank you. See you on Thursday 😉


Can we expand in the future on research or practices for understanding, detecting and addressing model drift in depth?