〰️ Edge#198: Neptune.ai, Flexible and Expressive Tool for Experiment Tracking and Model Registry

On Thursdays, we do deep dives into one of the freshest research papers or technology frameworks that is worth your attention. Our goal is to keep you up to date with new developments in AI and introduce you to the platforms that tackle ML challenges.

💥 Deep Dive: Neptune.ai, Flexible and Expressive Tool for Experiment Tracking and Model Registry

Machine learning (ML) is a highly scientific discipline that requires rigorous experimentation. This can include trying new heuristics, changing hyperparameter values, adding new input features, testing different machine learning algorithms, and a host of other activities.

It is important to track these experiments so that results can be reproduced and different runs can be compared. It is equally important to keep track of the resulting machine learning models so that they can be reused and compared against each other.

This is where experiment tracking and management platforms come in. There are many different options available on the market, each with its own strengths and weaknesses.

In this deep dive, we will take a look at Neptune.ai, which offers impressive experiment tracking and machine learning model management capabilities.

Enter Neptune.ai

In simple terms, we can think about Neptune.ai as a metadata store for MLOps. In practice, this means it is the part of the MLOps stack that handles model building metadata management: logging, storing, querying, displaying, organizing, and comparing all machine learning model metadata in a single place.

Another way to think about Neptune is as a database plus a dashboard, built specifically for model building metadata.

Its collaboration tools allow teammates to run model training on a laptop, in a cloud environment, or on a computation cluster and log all model building metadata to a central metadata store.

The platform is designed to be highly flexible and expressive, making it easy to log any metadata in any structure. This makes it possible to combine and display different metadata in a custom dashboard.

Why do you need an ML metadata store?

Building robust, accurate, and scalable machine learning models is hard enough. Keeping track of all the different experiments and models can be even harder.

Basically, a metadata store is your data bookkeeping, and we all know how important that is. Struggling to track and share your results is highly annoying, and losing them is discouraging.

The key components of an ML metadata store are experiment tracking, model registry, and data versioning.

Experiment Tracking

There are three important aspects of experiment tracking: logging, organization, and visualization & comparison.

Logging: Neptune.ai makes it easy to log machine learning experiments. The tool supports any framework, any metadata type, and any pipeline. This includes popular frameworks like TensorFlow, PyTorch, and Keras; metadata like metrics, parameters, and dataset & model versions; and pipelines ranging from single scripts to multi-step pipelines and distributed training.

Organization: Neptune.ai provides a way to organize machine learning experiments using a nested metadata structure. This makes it easy to keep track of different experiments and compare them. The platform also offers custom dashboards so that users can quickly see the most important information. Finally, users can save table views for easy access to specific data.

Visualization & Comparison: Neptune.ai offers a number of ways to visualize and compare machine learning experiments. For example, users can compare learning curves for different metrics, view interactive tables of all metadata, or compare parallel coordinates for different parameters. Additionally, the platform offers image comparison and hardware metrics so that users can understand how different models are performing.
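To make the idea of logging into a nested metadata structure more concrete, here is a minimal sketch in plain Python. It is not the Neptune.ai client: the `RunMetadata` class, its `set`, `log`, and `as_tree` methods, and the slash-separated paths such as `train/loss` are all hypothetical names chosen for illustration. It only shows how single values and logged series could be grouped into namespaces that a dashboard might later display side by side.

```python
from collections import defaultdict


class RunMetadata:
    """Toy in-memory stand-in for one run's metadata (hypothetical, not Neptune's API)."""

    def __init__(self, name):
        self.name = name
        self.fields = {}                 # single values, keyed by slash-separated path
        self.series = defaultdict(list)  # values logged repeatedly, e.g. a loss per epoch

    def set(self, path, value):
        """Store a single value such as a hyperparameter or dataset version."""
        self.fields[path] = value

    def log(self, path, value):
        """Append one value to a series such as a learning curve."""
        self.series[path].append(value)

    def as_tree(self):
        """Expand slash-separated paths into a nested dictionary."""
        tree = {}
        for path, value in {**self.fields, **self.series}.items():
            *parents, leaf = path.split("/")
            node = tree
            for part in parents:
                node = node.setdefault(part, {})
            node[leaf] = value
        return tree


run = RunMetadata("baseline")
run.set("parameters/learning_rate", 0.01)
run.set("parameters/optimizer", "adam")
run.set("dataset/version", "v1")
for loss in (0.9, 0.5, 0.3):
    run.log("train/loss", loss)

tree = run.as_tree()
print(tree["parameters"])
print(tree["train"]["loss"])
```

Keeping parameters, dataset versions, and metrics under separate namespaces is what makes it straightforward to compare the same field across many runs.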

Model Registry

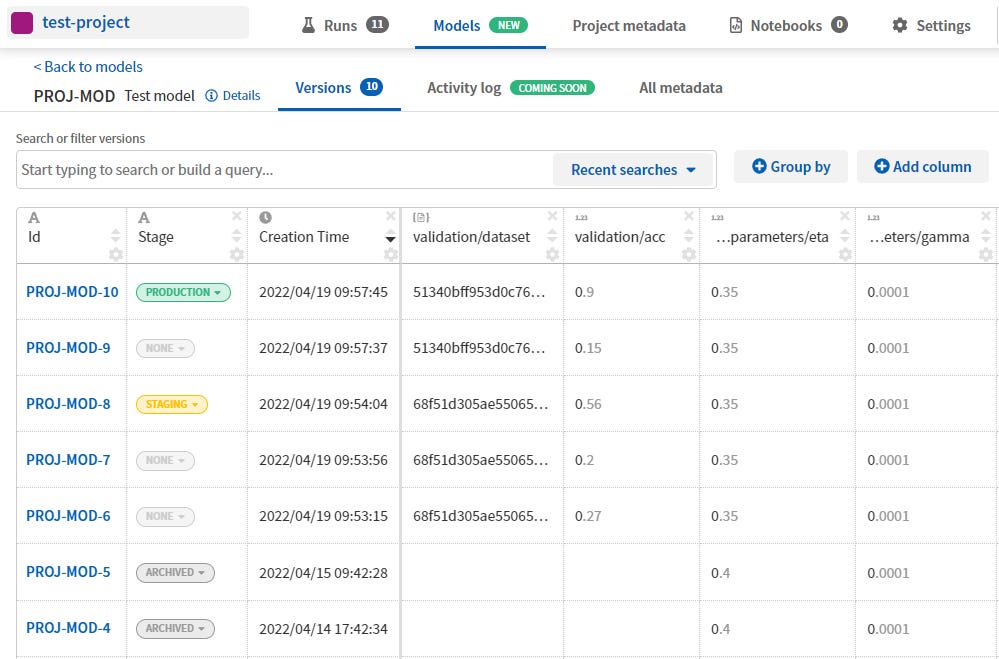

The Neptune.ai platform also offers a model registry so that users can save and manage production-ready models. The model registry makes it easy to version models, change stages, and save custom metadata. Users will soon be able to review and approve models.
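The three registry operations mentioned above (versioning models, changing stages, and saving custom metadata) can be sketched with a small in-memory example. This is an illustration under stated assumptions, not Neptune.ai's implementation: the `ModelRegistry` and `ModelVersion` classes, the method names, and the stage names in `STAGES` are all hypothetical.

```python
from dataclasses import dataclass, field

# Assumed stage names for illustration only.
STAGES = ("none", "staging", "production", "archived")


@dataclass
class ModelVersion:
    version: int
    stage: str = "none"
    metadata: dict = field(default_factory=dict)


class ModelRegistry:
    """Toy in-memory registry (hypothetical, not Neptune's API)."""

    def __init__(self):
        self._models = {}  # model name -> list of ModelVersion, oldest first

    def register(self, name, **metadata):
        """Create the next version of a model and attach custom metadata."""
        versions = self._models.setdefault(name, [])
        model_version = ModelVersion(version=len(versions) + 1, metadata=metadata)
        versions.append(model_version)
        return model_version

    def change_stage(self, name, version, stage):
        """Move one version of a model to a different stage."""
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage!r}")
        self._models[name][version - 1].stage = stage

    def in_stage(self, name, stage):
        """Return the version numbers of a model currently in the given stage."""
        return [mv.version for mv in self._models.get(name, []) if mv.stage == stage]


registry = ModelRegistry()
registry.register("churn-classifier", accuracy=0.81, dataset="v1")
registry.register("churn-classifier", accuracy=0.86, dataset="v2")
registry.change_stage("churn-classifier", version=1, stage="archived")
registry.change_stage("churn-classifier", version=2, stage="production")

production_versions = registry.in_stage("churn-classifier", "production")
print(production_versions)
```

Validating the stage name on every transition is a deliberate choice in this sketch: it keeps typos from silently creating a stage that nobody monitors.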

Data Versioning

As part of experiment tracking and model registry, Neptune.ai supports dataset and model versioning.

Collaboration

Being able to collaborate sometimes makes all the difference. Neptune.ai offers a number of features to facilitate collaboration between data scientists and machine learning engineers.

For example, the platform provides a shared UI so that everyone can see the same information. Users can also send persistent links to specific experiments, models or dashboards so that others can easily access them.

Moreover, Neptune.ai provides a Query API so that users can programmatically access experiment and model data.
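As a rough illustration of what programmatic access to experiment data makes possible, the sketch below filters and ranks a handful of runs in plain Python. The run records, field paths, and the `query_runs` helper are made up for this example and do not reflect the actual Neptune.ai Query API.

```python
# Hypothetical run records; in practice these would come from the metadata store.
runs = [
    {"id": "RUN-1", "parameters/learning_rate": 0.1, "eval/accuracy": 0.81},
    {"id": "RUN-2", "parameters/learning_rate": 0.01, "eval/accuracy": 0.74},
    {"id": "RUN-3", "parameters/learning_rate": 0.001, "eval/accuracy": 0.88},
]


def query_runs(runs, min_accuracy, metric="eval/accuracy"):
    """Return runs whose metric meets the threshold, best first."""
    selected = [run for run in runs if run.get(metric, 0.0) >= min_accuracy]
    return sorted(selected, key=lambda run: run[metric], reverse=True)


best_runs = query_runs(runs, min_accuracy=0.8)
best_ids = [run["id"] for run in best_runs]
print(best_ids)
```

A query like this is the kind of step that could feed a report or an automated check before a model is promoted.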

The platform also offers user management to help organizations keep track of who has access to what information.

Deeper, Not Wider

Broadly speaking, there are two kinds of product strategies: narrow and deep versus wide and flat. The former focuses on doing a few things really well, while the latter tries to cover as many use cases as possible.

Neptune.ai has taken the narrow and deep approach. The platform is focused on experiment tracking and machine learning model registry. This allows the team to focus on delivering best-in-class capabilities for these two important tasks.

Narrow and deep is often a preferred approach for a few reasons. First, it allows the team to focus on delivering great experiences for the user. Second, it allows the team to better integrate with other tools in the ecosystem. And third, it makes it possible to deliver a more robust platform overall.

In practice, this means Neptune.ai doesn't offer HPO (hyperparameter optimization), workflow orchestration, or model deployment. Instead, it integrates with or supports best-in-class tools for these tasks, such as Optuna and Kedro.

This is also known as the canonical stack approach. The idea is that each tool should focus on doing one thing really well and then let other tools handle the rest. This results in a more robust overall ecosystem and makes it easier for users to swap out different components as needed.

Conclusion

There are a bunch of platforms that tackle the challenges of ML experimentation. What we liked about Neptune is that it is designed to be highly flexible and expressive, making it easy to log any metadata in any structure.

Also important is that it has an intuitive and user-friendly interface built for teamwork. Research and production teams that run a lot of experiments will find Neptune.ai to be a great option for experiment tracking and collaboration.

Neptune.ai is available as a hosted SaaS, on-premises, or in a private cloud. The platform offers a freemium product with a transparent usage-based pricing model.