In this issue:

we start a new series about high scale ML training;

we explain SeedRL, an architecture for massively scaling the training of reinforcement learning agents;

we overview Horovod, an open-source framework created by Uber to streamline the parallelization of deep learning training workflows.

Enjoy the learning!

💡 ML Concept of the Day: A New Series About High Scale ML Training

Training is one of the most important aspects of the lifecycle of ML models. In an ecosystem dominated by supervised learning techniques, having proper architectures for training is paramount for building robust ML systems. Training is relatively simple to master at a small scale, but its complexity grows steeply with the size and complexity of the neural network. Over the last few years, the ML community has made significant advancements in both the research and implementation of high scale ML training methods. We will dedicate the next few weeks of TheSequence Edges to exploring the latest ML training methods and architectures powering some of the largest ML models in production.

The complexity of ML training architectures grows with the size and number of models. What does it take to train a large transformer network like GPT-3? How do you distribute the training process across many servers while ensuring the model can still generalize? Similarly, what types of training architectures are better suited to training a large number of ML models?

Not surprisingly, the vast majority of research in high scale ML training architectures has come from the AI labs in large technology companies like Microsoft, Google, Uber, and Facebook. These companies are building massively large ML models that require incredibly complex training infrastructures. As part of those efforts, tech incumbents have open-sourced many of the frameworks powering the training of those super large models. Systematizing the training of ML models requires as much ML research as engineering sophistication. In the next few weeks, we will dive deeper into some of the most advanced frameworks and research methods that can be used to train ML models at scale.

🔎 ML Research You Should Know: SeedRL is an Architecture for Massively Scaling the Training of Reinforcement Learning Agents

In a recent paper, “SEED RL: Scalable and Efficient Deep-RL with Accelerated Central Inference”, Google Research proposed a reinforcement learning architecture whose training can scale to thousands of machines.

The objective: Building an efficient and massively scalable reinforcement learning architecture.

Why is it so important: SEED RL is one of the first architectures that applies highly distributed training to reinforcement learning agents.

Diving deeper: The challenges of implementing reinforcement learning models in the real world are directly tied to their architecture. Intrinsically, deep reinforcement learning (DRL) comprises heterogeneous tasks such as running environments, model inference, model training, and managing replay buffers. Learning purely by exploration requires massive amounts of compute resources. Most modern DRL architectures fail to distribute compute resources efficiently across these tasks, making them unreasonably expensive to train. Among the recent generation of reinforcement learning architectures, IMPALA set a new standard for the space. Proposed by DeepMind in a 2018 research paper, IMPALA introduced a model that made use of accelerators specialized for numerical calculations, taking advantage of the speed and efficiency from which supervised learning has benefited for years.

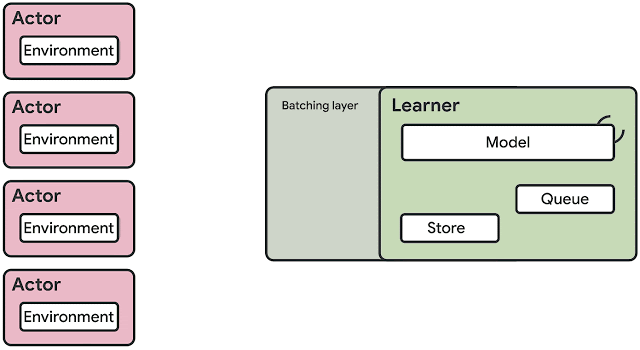

At a high level, Google’s SEED (Scalable, Efficient Deep-RL) architecture looks incredibly similar to IMPALA. But it introduces a few variations that address some of the main limitations of the DeepMind model. In SEED RL, neural network inference is done centrally by the learner on specialized hardware (GPUs or TPUs), enabling accelerated inference and avoiding the data transfer bottleneck by ensuring that the model parameters and state are kept local. For every single environment step, the observations are sent to the learner, which runs the inference and sends actions back to the actors. This clever solution addresses the inference limitations of models like IMPALA but might introduce latency challenges. To minimize the latency impact, SEED RL relies on gRPC for messaging and streaming. Specifically, SEED RL leverages streaming RPCs where the connection from actor to learner is kept open, and metadata is sent only once. Furthermore, the framework includes a batching module that efficiently batches multiple actor inference calls together.
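The central inference loop described above can be illustrated with a toy simulation. This is only a minimal sketch, not SEED RL's implementation: `ToyEnv`, `Actor`, `Learner`, and `run` are hypothetical names, the "policy" is a one-line rule rather than a neural network, and the gRPC streaming layer is replaced by plain in-process function calls. What it shows is the division of labor: actors only step environments, while the learner answers all of them with a single batched inference call per environment step.

```python
import random


class ToyEnv:
    """Hypothetical stand-in for an environment: the observation is one float."""

    def __init__(self, seed):
        self.rng = random.Random(seed)
        self.obs = self.rng.uniform(-1.0, 1.0)

    def step(self, action):
        # Reward 1.0 when the action matches the sign of the current observation.
        reward = 1.0 if (action == 1) == (self.obs >= 0) else 0.0
        self.obs = self.rng.uniform(-1.0, 1.0)
        return self.obs, reward


class Actor:
    """Runs an environment only; it holds no model parameters."""

    def __init__(self, actor_id):
        self.env = ToyEnv(seed=actor_id)
        self.total_reward = 0.0

    def observation(self):
        return self.env.obs

    def apply(self, action):
        _, reward = self.env.step(action)
        self.total_reward += reward


class Learner:
    """Owns the model and serves inference for every actor in one batch."""

    def __init__(self):
        self.inference_calls = 0

    def batched_inference(self, observations):
        self.inference_calls += 1
        # Toy policy; a real learner would run a network on a GPU or TPU here.
        return [1 if obs >= 0 else 0 for obs in observations]


def run(num_actors=4, num_steps=10):
    learner = Learner()
    actors = [Actor(i) for i in range(num_actors)]
    for _ in range(num_steps):
        # Actors send observations; the learner replies with one action each.
        batch = [actor.observation() for actor in actors]
        actions = learner.batched_inference(batch)
        for actor, action in zip(actors, actions):
            actor.apply(action)
    return learner, actors
```

Calling `run(num_actors=4, num_steps=10)` performs ten inference calls in total rather than forty, which is the effect the batching module is after: the accelerator sees fewer, larger batches regardless of how many actors are connected.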

The SEED RL architecture allows learners to be scaled to thousands of cores, and the number of actors can be scaled to thousands of machines to fully utilize the learner. Together, this makes it possible to train at millions of frames per second. SEED RL is based on the TensorFlow 2 API, and its performance is accelerated by TPUs.

Google evaluated SEED RL on common reinforcement learning benchmarks such as the Arcade Learning Environment, DeepMind Lab environments, and the recently released Google Research Football environment. The results showed SEED RL outperforming other large-scale reinforcement learning architectures across a variety of tests.

🤖 ML Technology to Follow: Horovod

Why Should I Know About This: Horovod is a popular open-source framework created by Uber to streamline the parallelization of deep learning training workflows.

What is it: Why Uber named this framework after a Russian folk dance remains a mystery 😉 After a few years of running deep learning models at scale, Uber started running into roadblocks keeping up with the training times required by some of those models. After evaluating different data parallelism approaches, Uber landed on a very innovative architecture that became the foundation of Horovod.

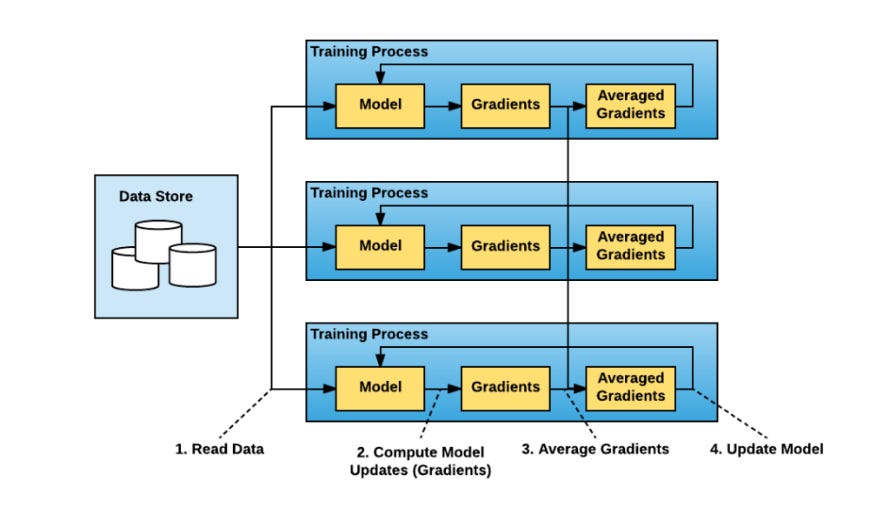

The Horovod framework takes a different approach to training data parallelism using a very innovative distributed computing technique. The traditional approach to data parallelism can be summarized in a few basic steps:

Read a training batch

Run the batch through the model

Compute the model gradients

Average the gradients across multiple nodes and update the model

Repeat the whole process again
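The steps above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not Horovod code: the "model" is a single weight `w` fitting `y = w * x`, the workers are simulated in one process, and the helper names (`gradient`, `data_parallel_step`, `train`) are hypothetical.

```python
def gradient(w, batch):
    """Gradient of the mean squared error of y = w * x over one batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_step(w, shards, lr):
    # Each simulated worker reads its own shard and computes a local gradient.
    local_grads = [gradient(w, shard) for shard in shards]
    # The local gradients are averaged across workers.
    avg_grad = sum(local_grads) / len(local_grads)
    # Every worker applies the same update, so all model replicas stay in sync.
    return w - lr * avg_grad


def train(num_workers=4, steps=200, lr=0.01):
    # Synthetic data generated from y = 3x, split into equal-size shards.
    data = [(float(x), 3.0 * x) for x in range(1, 9)]
    shards = [data[i::num_workers] for i in range(num_workers)]
    w = 0.0
    for _ in range(steps):
        w = data_parallel_step(w, shards, lr)
    return w
```

With equal-size shards, the averaged gradient matches the gradient a single machine would compute over the full batch, so `train()` converges to a weight close to 3.0. The averaging line is the one that turns into a communication bottleneck once the gradients are large and live on different machines.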

At the scale of Uber models, traditional data parallelism methods hit a roadblock as the nodes that needed to average the gradients became a performance bottleneck. To address that, Uber decided to adopt a very clever distributed computing algorithm known as ring-allreduce, which arranges the nodes in a ring so that each node communicates only with its two neighbors, exchanging the training gradients. Prior research on the algorithm indicates that it is bandwidth-optimal, so no single node ends up carrying a disproportionate share of the traffic.
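To make the ring topology concrete, here is a small single-process simulation of ring-allreduce for summing vectors. It is a sketch of the textbook algorithm, not Horovod's implementation: the function name `ring_allreduce` is hypothetical, messages are passed through Python lists rather than a network, and the vector length is assumed to divide evenly by the number of nodes.

```python
def ring_allreduce(vectors):
    """Simulate a sum ring-allreduce over equal-length lists, one per node."""
    n = len(vectors)
    length = len(vectors[0])
    assert length % n == 0, "sketch assumes the vector splits evenly into n chunks"
    size = length // n
    # data[i][c] is node i's current copy of chunk c.
    data = [[list(v[c * size:(c + 1) * size]) for c in range(n)] for v in vectors]
    sends = [0] * n  # number of chunks each node sends

    # Phase 1 (scatter-reduce): partial sums travel around the ring.
    for step in range(n - 1):
        messages = []
        for i in range(n):
            c = (i - step) % n
            messages.append(((i + 1) % n, c, list(data[i][c])))
            sends[i] += 1
        # Deliver after all sends are collected, as if they happened at once.
        for dst, c, chunk in messages:
            data[dst][c] = [a + b for a, b in zip(data[dst][c], chunk)]

    # Phase 2 (allgather): fully reduced chunks are copied around the ring.
    for step in range(n - 1):
        messages = []
        for i in range(n):
            c = (i + 1 - step) % n
            messages.append(((i + 1) % n, c, list(data[i][c])))
            sends[i] += 1
        for dst, c, chunk in messages:
            data[dst][c] = chunk

    results = [[x for chunk in node for x in chunk] for node in data]
    return results, sends
```

Each node only ever sends to its right-hand neighbor and receives from its left-hand one, and it sends 2 × (N − 1) chunks of size 1/N of the vector. The total data sent per node therefore stays below twice the vector size no matter how many nodes join the ring, which is why no central averaging node is needed. To average gradients rather than sum them, each node would divide its result by N afterward.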

The ring-allreduce method became the core idea behind Horovod. Uber based its initial implementation on TensorFlow and Open MPI, an implementation of the Message Passing Interface (MPI) standard, which is used for distributing the copies of the deep learning program across nodes. Since its initial launch, Horovod has become one of the go-to frameworks for distributed training and has received regular contributions from the deep learning community.

How Can I Use it: Horovod is open-sourced and hosted by the LF AI Foundation (LF AI). The project can be downloaded at https://github.com/horovod/horovod