🔷🟥 Edge#110: How The Pachyderm Platform Delivers the Data Foundation for Machine Learning

This is an example of TheSequence Edge, a Premium newsletter that our subscribers receive every Tuesday and Thursday. On Thursdays, we do deep dives into one of the freshest research papers or technology frameworks that is worth your attention. It helps you become smarter about ML and AI.

💥 What’s New in AI: The Pachyderm Platform Delivers the Data Foundation for Machine Learning

Managing the lifecycle of experiments is one of the overlooked aspects of data science pipelines. People typically associate data science experimentation with model design but, in reality, machine learning engineering plays an equally important role. Data engineers can spend as much as 60-80% of their time ingesting, cleaning and transforming data and, yet, the majority of the tools on the market seem to focus on the training-to-deployment process and skip data engineering altogether.

Imagine a large organization with hundreds of machine learning models that need training and regular evaluation. Any small change in training datasets can significantly affect the model output. Change bounding boxes slightly or crunch down file sizes and you could get very different inference results. Scale that scenario to hundreds of models, and it quickly becomes unmanageable. Being able to systematically reproduce those experiments requires rigorous data versioning, data lineage and model lifecycle management techniques.

But it goes beyond tracking just the data changes or taking snapshots randomly throughout the lifecycle. You want an immutable data lake that takes snapshots automatically along the way and tracks the lineage of what’s in those snapshots with a Git-like commit system. You also need the system to track the complex interplay between your models, your code, and your data as they all change at the same time. If you run 50 experiments on different variations of your data, with different code changes along the way, you want to be able to roll back to the exact snapshot and code base that led to your final result. The challenge of reproducing machine learning experiments is different from anything we have experienced in traditional software development. The closest analogy in traditional software is source control and continuous integration stacks, which maintain versions of code files and run test suites to validate functionality. Those systems don’t adapt perfectly to machine learning experiments because you need version control not just for the model’s code but also for the underlying datasets. Managing the lifecycle of experiments requires versioning, lineage and continuous integration capabilities for both models and data.

Enter The Pachyderm Platform

The easiest way to think about Pachyderm is as a version-controlled data pipeline for data science experiments. What does that mean exactly?

Well, Pachyderm supports data ingestion, cleaning, wrangling, processing and modeling, among other fundamental building blocks of data versioning and data science pipelines. From a functional standpoint, the Pachyderm platform delivers the following capabilities:

Version Control: Pachyderm takes automatic snapshots at every step in the data science pipeline, without the programmer needing to remember to call snapshots.

Data Lineage: Pachyderm accurately tracks the provenance of what is in the training snapshots, as well as the relationship between the data, the code and the model.

Containerization: Pachyderm runs on container infrastructure such as Docker and Kubernetes, removing dependencies on any particular data science framework.

Parallelization: Because it leverages Kubernetes, Pachyderm can automatically scale up and parallelize data processing with no changes to the code, slicing up data into small chunks that are processed in parallel.

Code Agnosticism: The platform lets you run models in any framework or programming language, such as Python, R, Java, Rust, C++, Bash or others.

If you take a detailed look at the aforementioned features, you can see that the first two deliver the data snapshotting and lineage capabilities while the last three are key for building scalable data science pipelines.
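To make the parallelization idea concrete, here is a minimal sketch in plain Python. It is not Pachyderm code: the `clean` function, the `run` helper and the chunk size are hypothetical names chosen for illustration, and a thread pool stands in for the Kubernetes workers. The point it tries to show is that the per-record code stays the same whether the chunks are processed by one worker or by several.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def clean(record: str) -> str:
    """User code: knows nothing about chunking or parallelism."""
    return record.strip().lower()


def run(records: List[str], workers: int = 1, chunk_size: int = 2) -> List[str]:
    """Slice the input into chunks and process each chunk on a worker."""
    chunks = [records[i:i + chunk_size] for i in range(0, len(records), chunk_size)]

    def process_chunk(chunk: List[str]) -> List[str]:
        return [clean(r) for r in chunk]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the input chunks
        results = list(pool.map(process_chunk, chunks))

    # Flatten the per-chunk results back into one list
    return [r for chunk in results for r in chunk]


raw = [" A", "b ", " C ", "D", "e"]
serial = run(raw, workers=1)
parallel = run(raw, workers=4)
```

Scaling from one worker to four only changes the `workers` argument; `clean` is untouched, which is the property the platform claims for pipeline code.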

Data Versioning & Lineage

You can think of the Pachyderm version-control system as a Git for datasets. Pachyderm needs its own version-control system precisely because Git doesn’t translate well to datasets. In particular, pushing and pulling multiple terabytes of data down to laptops all over the world just won’t work. Git was designed for smaller code files. That’s why Pachyderm uses a centralized data repository, which consists of a copy-on-write file system that fronts an object store like Amazon S3 or Azure Blob Storage, effectively giving you near-infinite storage.

Pachyderm’s versioning system architecture includes the following concepts:

Repository: This is the highest level data object for any given dataset.

Commit: This represents immutable snapshots in time of specific datasets.

Branch: This represents an alias of a specific commit.

Provenance: This describes the relationships between different repositories, commits and branches.
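The four concepts above can be sketched as a toy in-memory model. This is an illustration only, not Pachyderm's implementation or API: the `Repo` and `Commit` classes, their methods and the file names are all hypothetical, and real storage would sit on an object store rather than in Python dictionaries.

```python
import hashlib
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple


@dataclass(frozen=True)
class Commit:
    """An immutable snapshot of a dataset at a point in time."""
    id: str
    files: Tuple[Tuple[str, bytes], ...]  # (path, content) pairs, frozen
    parent: Optional[str] = None          # previous commit on the branch
    provenance: Tuple[str, ...] = ()      # upstream commits this one derives from


class Repo:
    """Top-level container for one dataset (toy model)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.commits: Dict[str, Commit] = {}
        self.branches: Dict[str, str] = {}  # branch name -> commit id (an alias)

    def commit(self, branch: str, changes: Dict[str, bytes],
               provenance: Iterable[str] = ()) -> Commit:
        parent_id = self.branches.get(branch)
        # Start from the parent's snapshot and apply the changes on top
        files = dict(self.commits[parent_id].files) if parent_id else {}
        files.update(changes)
        snapshot = tuple(sorted(files.items()))
        prov = tuple(provenance)
        digest = hashlib.sha256(repr((parent_id, snapshot, prov)).encode()).hexdigest()[:12]
        new_commit = Commit(digest, snapshot, parent_id, prov)
        self.commits[digest] = new_commit
        self.branches[branch] = digest  # the branch now points at the new commit
        return new_commit

    def read(self, ref: str, path: str) -> bytes:
        """Read a file from a branch name or a commit id."""
        commit_id = self.branches.get(ref, ref)
        return dict(self.commits[commit_id].files)[path]


images = Repo("images")
first = images.commit("master", {"cat.png": b"v1"})
second = images.commit("master", {"cat.png": b"v2", "dog.png": b"v1"})

model = Repo("model")
trained = model.commit("master", {"weights.bin": b"w"}, provenance=[second.id])
```

Reading `cat.png` through the branch returns the latest version, while reading it through `first.id` still returns the original bytes. The `provenance` field on `trained` records which data snapshot the model came from, which is the link that makes an experiment reproducible.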

Data-Driven Pipelines

Data-driven pipelines are pipelines where the data can drive the actions of the pipeline itself. Most machine learning systems today mirror the logic of hand-coded development patterns of the past, with data as a secondary resource. In traditional software design, if you write a login for a website, you hand-code the logic and only touch the data briefly to get the username and password. But with machine learning, data is central and the model learns from that data. New data flowing into the system can and should be able to automatically trigger actions in the system, kicking off a new pipeline run that updates the training of a model.

The data-driven aspects of the platform also give users the power of incrementality. That means that when new data flows into the system, a pipeline can process only the new data, keeping the results for the old data intact. That can save a massive amount of time if you’re talking about tens or hundreds of terabytes. You don’t want to be retraining on the entire dataset every time you run the pipeline again, just on the new data.
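One common way to get this kind of incrementality is to fingerprint each input and skip anything whose fingerprint has not changed since the last run. The sketch below assumes that approach; it is not taken from Pachyderm's source, and `run_incremental`, the cache layout and the file names are hypothetical.

```python
import hashlib
from typing import Callable, Dict, List, Tuple

# cache maps path -> (content hash, previously computed output)
Cache = Dict[str, Tuple[str, bytes]]


def run_incremental(inputs: Dict[str, bytes], cache: Cache,
                    transform: Callable[[bytes], bytes]) -> List[str]:
    """Process only new or changed inputs; return the paths that were processed."""
    processed = []
    for path in sorted(inputs):
        content = inputs[path]
        digest = hashlib.sha256(content).hexdigest()
        cached = cache.get(path)
        if cached is not None and cached[0] == digest:
            continue  # unchanged since last run: reuse the old output
        cache[path] = (digest, transform(content))
        processed.append(path)

    # Drop outputs whose input no longer exists
    for path in list(cache):
        if path not in inputs:
            del cache[path]
    return processed


cache: Cache = {}
data = {"a.csv": b"x,y", "b.csv": b"z,w"}

first_run = run_incremental(data, cache, bytes.upper)   # everything is new
data["c.csv"] = b"p,q"
second_run = run_incremental(data, cache, bytes.upper)  # only the new file
data["a.csv"] = b"changed"
third_run = run_incremental(data, cache, bytes.upper)   # only the modified file
```

The second and third runs touch a single file each, even though the dataset holds three. Whether a model can be updated incrementally depends on the training method; the sketch only covers the data-processing side.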

The Pachyderm Pipeline System (PPS) can be seen as a Hadoop for data science computations. PPS is specifically optimized for running transformations over datasets. The PPS architecture includes the following components:

Pipeline: This encapsulates computations that execute when new data arrives in the repository. Pipelines are defined by JSON or YAML and include a virtual input and output repo to the underlying Pachyderm filesystem. Each step in the pipeline executes code in a container to ingest, transform, clean, train or deploy a model.

Job: A job is an individual execution of a pipeline.

Datum: This represents individual units of work within a job that can be executed sequentially or in parallel. In short, it’s a smaller chunk or slice of your data that allows for granular control of that data.
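As a rough illustration of how a pipeline, its input repo and its datums relate, the sketch below builds a pipeline definition as a Python dictionary and serializes it to JSON. The top-level field names (`pipeline`, `transform`, `input.pfs`, `glob`, `parallelism_spec`) follow the publicly documented pipeline specification as best recalled here and should be checked against the documentation for your version. The repo name, image and script path are hypothetical. The `split_into_datums` helper is a simplified stand-in that handles only two glob patterns, not Pachyderm's actual matching logic.

```python
import json
from collections import defaultdict
from typing import Dict, List

# Pipeline definition; values are placeholders for illustration
pipeline_spec = {
    "pipeline": {"name": "clean-data"},
    "transform": {
        "image": "example/cleaner:1.0",     # hypothetical container image
        "cmd": ["python3", "/clean.py"],    # hypothetical script in the image
    },
    "input": {
        "pfs": {"repo": "raw-data", "glob": "/*"}  # hypothetical input repo
    },
    "parallelism_spec": {"constant": 4},
}

spec_json = json.dumps(pipeline_spec, indent=2)


def split_into_datums(paths: List[str], glob: str) -> List[List[str]]:
    """Simplified model of how a glob pattern carves a repo into datums.

    "/"  : the whole repo is a single datum.
    "/*" : each top-level file or directory is its own datum.
    """
    if glob == "/":
        return [sorted(paths)]
    if glob == "/*":
        groups: Dict[str, List[str]] = defaultdict(list)
        for path in paths:
            top_level = path.lstrip("/").split("/", 1)[0]
            groups[top_level].append(path)
        return [sorted(groups[key]) for key in sorted(groups)]
    raise ValueError(f"glob pattern not covered by this sketch: {glob!r}")


repo_paths = ["/cats/1.png", "/cats/2.png", "/dogs/1.png", "/readme.txt"]
per_entry = split_into_datums(repo_paths, pipeline_spec["input"]["pfs"]["glob"])
whole_repo = split_into_datums(repo_paths, "/")
```

With `/*`, the four files fall into three datums (`cats`, `dogs` and `readme.txt`), so a job could hand them to separate workers. With `/`, the job sees one datum and any change reprocesses everything.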

These capabilities represent the core of the Pachyderm architecture and they are included in its open-source community edition. Pachyderm also provides a different version optimized for enterprise use cases, whether on-premises or in the cloud.

Pachyderm Enterprise

Like a traditional enterprise open-source playbook, Pachyderm offers a commercial edition of the platform designed for large organizations looking to scale and version their model creation capabilities and data science pipelines. Pachyderm Enterprise complements the open-source edition with some interesting capabilities:

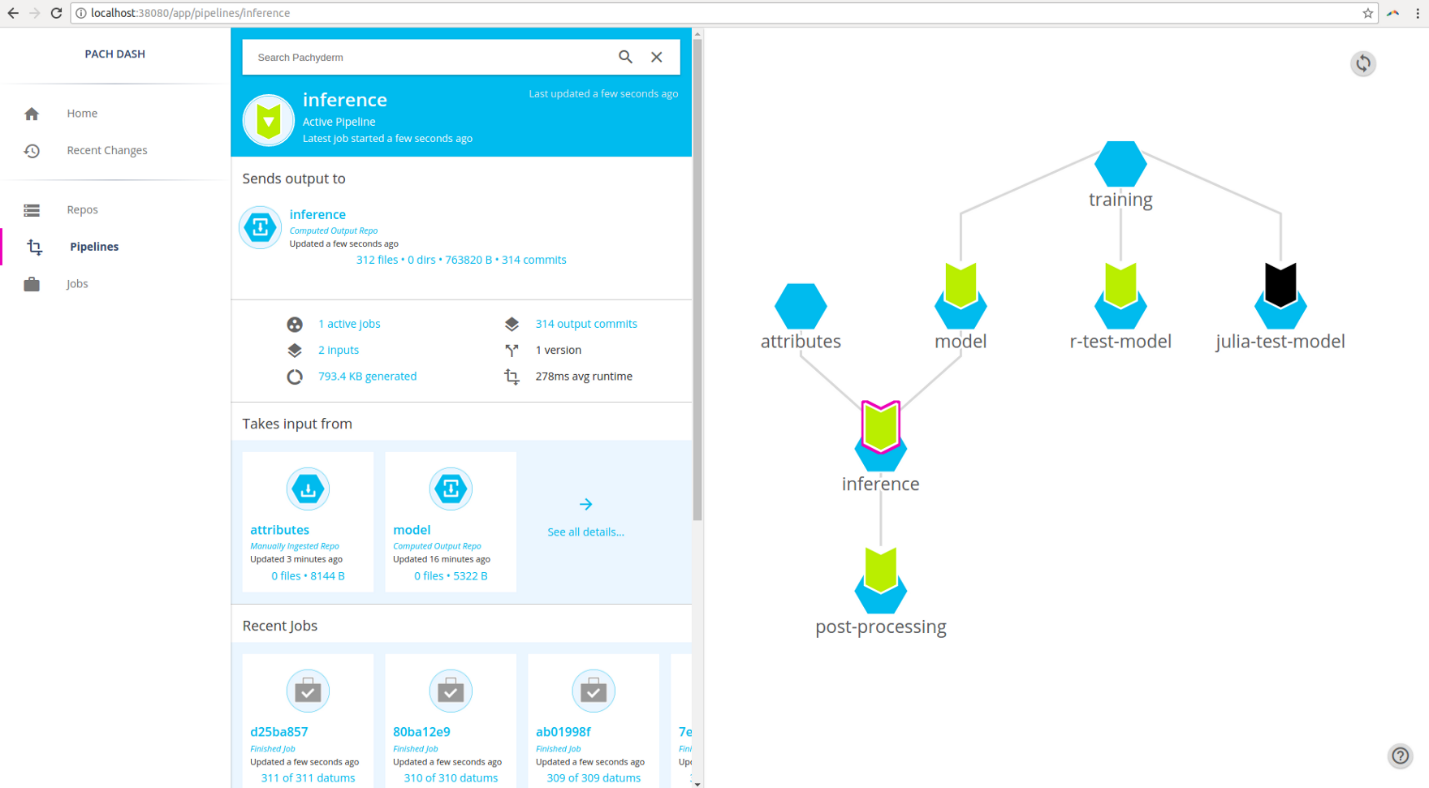

Pipeline Visualization and Data Exploration: Pachyderm Enterprise enables the visualization of data science pipelines using a graph structure. Additionally, the visual interface allows the interactive exploration of datasets used for training machine learning models.

Access Control: Security is a key aspect of enterprise machine learning solutions. Pachyderm Enterprise offers access control policies for constraining authorization to the different machine learning assets in enterprise infrastructure and integrates with existing RBAC systems in large organizations.

Model Execution Metrics: Pachyderm Enterprise enables advanced statistics and other metrics that quantify the execution of machine learning models.

Pachyderm Hub

Pachyderm can be deployed either on-premises or in cloud environments. However, those deployments require machine learning engineering teams to manage a Kubernetes cluster. To remove that friction, Pachyderm Hub provides a cloud-native, full-featured SaaS deployment that abstracts the underlying infrastructure from the data science team. That means your team doesn’t need to know how to run Kubernetes, but it can still leverage the automatic scaling Kubernetes delivers.

Pachyderm Hub combines the key capabilities of the Pachyderm platform, such as data versioning, lineage analysis and pipeline execution, with dynamic computational resource management, which makes it easy for data science teams to get started.

Conclusion

Managing the data engineering aspect of the machine learning lifecycle is one of the key capabilities of large-scale data science pipelines. Among the emerging group of MLOps platforms, Pachyderm has quickly turned into one of the most complete technology stacks to address this challenge. The key innovations of Pachyderm are its data versioning, lineage and a flexible pipelining system that lets one run any kind of code or framework. The platform provides open-source, enterprise and cloud editions which facilitate its adoption across different types of data science teams.

Further Reading: You can read more about the platform here