🤖 Edge#109: What are Transformers?

The technique behind a few major breakthroughs in deep learning

In this issue:

we start a new series about transformer architectures, the technique that made a few major breakthroughs in deep learning possible;

we take a deeper look at Google’s Attention paper, which started the transformer revolution;

we review Tensor2Tensor, a modular library for using transformer models in TensorFlow.

💡 ML Concept of the Day: What are Transformer Architectures?

As we go past the first hundred editions of TheSequence newsletter, I can’t think of a better topic to cover than transformer architectures, which many consider the most important development in deep learning in recent years. Interestingly enough, transformers were one of the first topics we covered in TheSequence, but that coverage was only introductory. Today, we start a new series that dives deep into this fascinating technique, which has been at the center of major milestones in the deep learning space.

Transformer architectures specialize in processing sequential data, which is relevant in domains such as natural language processing (NLP) or computer vision. Before the inception of transformers, that space was dominated by recurrent neural network (RNN) models, such as long short-term memory (LSTM) networks. Transformers challenged the conventional wisdom of RNN architectures by not processing the input in order, one position at a time. Instead, transformers rely on attention mechanisms that provide context for any position in the sequence, while word order is injected into the input through positional encodings. For instance, in NLP scenarios, this means that a transformer does not need to process the beginning of a sentence before its middle or end; it looks at all positions at once and learns which areas it “should pay attention to”. Because the computation for one position does not wait on the previous one, it can be parallelized, which makes training on large datasets far more practical than with sequential techniques.
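To make the contrast concrete, here is a toy sketch in Python with made-up scalar inputs and weights (none of these numbers come from a real model). The RNN-style loop must visit positions in order because each hidden state depends on the previous one, while the attention-style function computes each position from the inputs alone, so positions can be evaluated in any order or in parallel. Real transformers work with vectors, learned projections and positional encodings, all of which this sketch deliberately omits.

```python
import math

def rnn_states(xs, w=0.5, u=1.0):
    """Toy scalar RNN: h_t = tanh(w * h_{t-1} + u * x_t).
    Each state needs the previous one, so positions must be visited in order.
    The weights w and u are arbitrary illustrative values."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w * h + u * x)
        states.append(h)
    return states

def attention_output(xs, i):
    """Toy attention for position i: a softmax-weighted average of all inputs,
    scored by the product xs[i] * xs[j]. It reads only the inputs, never
    another position's output, so no position has to wait for another."""
    scores = [xs[i] * x for x in xs]
    m = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return sum(w * x for w, x in zip(weights, xs)) / total

xs = [0.2, -1.0, 0.7, 1.5]
states = rnn_states(xs)

# Attention positions can be computed in any order (here: last to first).
backward = {i: attention_output(xs, i) for i in reversed(range(len(xs)))}
forward = [attention_output(xs, i) for i in range(len(xs))]
assert all(backward[i] == forward[i] for i in range(len(xs)))
```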

At the core of transformer architectures, we have an encoder-decoder model complemented with a multi-head attention mechanism. The encoder-decoder model transforms an input sequence into an output sequence, for example a sentence in one language into its translation. The multi-head attention mechanism determines which parts of the input are more relevant than others. For example, in question-answering or machine translation scenarios, different words in a sentence have different levels of importance for the target task, and transformer models help focus the “attention” on these parts. Conceptually, the attention mechanism looks at an input sequence and decides, at each step, which other parts of the sequence are important.

Image credit: Jay Alammar's blog
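The “decide which parts are important” step can be sketched as a softmax over similarity scores followed by a weighted average. This is a minimal, single-head sketch assuming dot-product similarity; the word vectors and the query are hypothetical values chosen for illustration, not learned embeddings.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attend(query, keys, values):
    """Score every position against the query, normalize the scores into
    attention weights, and return the weighted average of the values."""
    weights = softmax([dot(query, k) for k in keys])
    dim = len(values[0])
    output = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, output

# Hypothetical 2-d vectors for a 3-word sentence; keys double as values here.
words = ["the", "cat", "sat"]
vectors = [[0.1, 0.0], [1.0, 2.0], [0.5, 1.5]]
query = [1.0, 1.0]  # hypothetical query vector
weights, output = attend(query, vectors, vectors)
for word, w in zip(words, weights):
    print(f"{word}: {w:.2f}")
```

The resulting weights sum to 1, and the word whose vector is most similar to the query (“cat” here) receives the largest share of the attention.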

Transformers have been the cornerstone of groundbreaking models such as Google’s BERT and OpenAI’s GPT-3, which set new milestones in NLP. In recent months, transformers have also been making important inroads in other areas, such as computer vision and time-series analysis.

🔎 ML Research You Should Know: Google’s Attention Paper that Started the Transformer Revolution

In the paper “Attention is All You Need”, Google Research introduced the architecture that started the transformer revolution.

The objective: Introduce a new architecture that uses attention mechanisms to enhance the processing of large sequential data inputs.

Why is it so important: This paper is considered one of the most important research milestones in the last decade of deep learning.

Diving deeper: Google’s Attention paper proposes an alternative architecture that tries to address some of the limitations of RNN models in sequence-to-sequence problems. Until transformers came onto the deep learning scene, the traditional way to tackle a sequence-to-sequence problem was to use complex recurrent or convolutional neural networks that include an encoder and a decoder. Although effective in some scenarios, these architectures struggle with long input sequences: recurrent models, in particular, tend to lose information about the first elements of a long sequence, and their step-by-step computation is hard to parallelize.

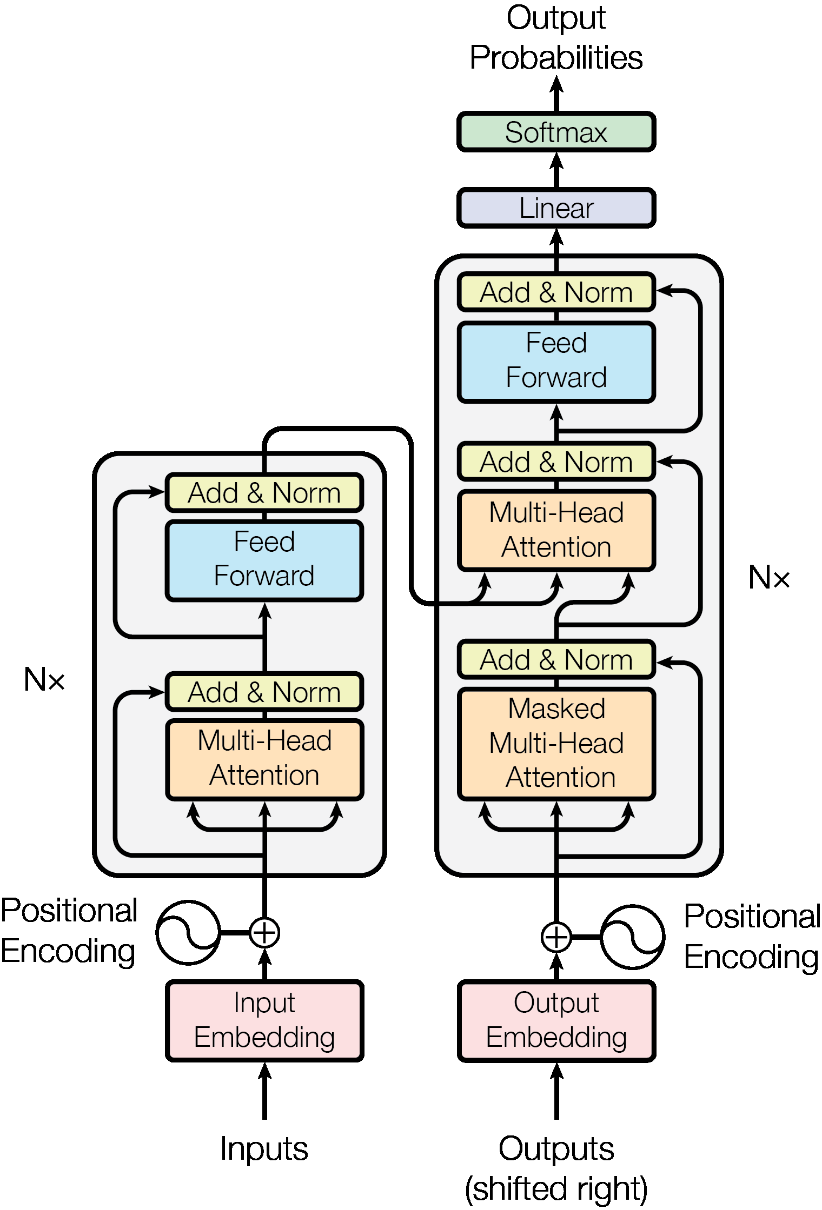

In their 2017 paper, Google Research proposed a new architecture to tackle this type of long-sequence transduction problem. The transformer architecture keeps the encoder-decoder structure but dispenses with recurrence and convolutions entirely, relying instead on attention mechanisms to draw global dependencies between the input and the output. The self-attention module is the main contribution of the transformer architecture. Conceptually, self-attention is a mechanism that relates different positions of a single sequence in order to compute a representation of that sequence. The transformer architecture incorporates self-attention into both the encoder and the decoder, as illustrated in the following figure:

Image credit: Google Research
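Self-attention, as described above, can be sketched in a few lines of plain Python. Following the paper's scaled dot-product formulation, queries, keys and values are all linear projections of the same sequence, and the scores are divided by the square root of the key dimension before the softmax. The projection matrices `w_q`, `w_k` and `w_v` below are hypothetical hand-picked values; in a trained model they are learned, and the paper runs several such heads in parallel (multi-head attention).

```python
import math

def matmul(a, b):
    """Multiply an n x m matrix by an m x p matrix (both as lists of rows)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over one sequence x.
    Queries, keys and values are all projections of the same sequence,
    so every position is related to every other position of that sequence."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    # scores[i][j]: how strongly position i attends to position j
    scores = [[sum(qi * kj for qi, kj in zip(q[i], k[j])) / math.sqrt(d_k)
               for j in range(len(k))] for i in range(len(q))]
    weights = [softmax(row) for row in scores]
    return matmul(weights, v), weights

# Hypothetical 3-token sequence with 2-d embeddings and hand-picked 2x2 weights.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_q = [[1.0, 0.0], [0.0, 1.0]]
w_k = [[1.0, 0.0], [0.0, 1.0]]
w_v = [[0.5, 0.0], [0.0, 2.0]]
out, weights = self_attention(x, w_q, w_k, w_v)
```

Each row of `weights` is one position's attention distribution over the whole sequence; with these values, the third token, whose embedding overlaps with both others, attends most strongly to itself.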

Google’s transformer architecture is based on three fundamental building blocks:

Encoder: The encoder is a stack of six identical layers with two sub-layers each. The first sub-layer is a multi-head self-attention mechanism, while the second is a simple, position-wise feed-forward network.

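Decoder: The decoder is also a stack of six identical layers, but each has three sub-layers. In addition to the two sub-layers found in each encoder layer, the decoder inserts a third sub-layer that performs multi-head attention over the output of the encoder stack. The decoder’s self-attention sub-layer is also masked, so that a position cannot attend to the positions that come after it.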

Attention: The attention module is a sequence-to-sequence operation that takes a series of vectors as input and produces a different sequence of vectors. Some attention calculations are as simple as taking a weighted average of all the input vectors.
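For reference, the attention computations described above are usually written as follows, using the notation of the 2017 paper: d_k is the key dimension and h the number of heads (the paper's base model uses h = 8 and d_k = d_v = 64). The paper describes the decoder's masking as setting illegal connections to minus infinity before the softmax; the additive mask M and the label "MaskedAttention" below are just one common way of writing that, not notation taken from the paper.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V

\mathrm{head}_i = \mathrm{Attention}(Q W_i^{Q}, K W_i^{K}, V W_i^{V}), \qquad
\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^{O}

\mathrm{MaskedAttention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}} + M\right) V,
\qquad M_{ij} = \begin{cases} 0 & j \le i \\ -\infty & j > i \end{cases}
```

The division by the square root of d_k keeps large dot products from pushing the softmax into regions with very small gradients.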

Since the publication of Google’s Attention paper, the transformer architecture has become the dominant model for tackling large-scale sequence-to-sequence problems. Transformers have completely revolutionized the NLP space and have been at the center of some of the most important deep learning milestones of the last decade.

🤖 ML Technology to Follow: Tensor2Tensor is a Modular Library for Using Transformer Models in TensorFlow

Why should I know about this: Tensor2Tensor was one of the first libraries to incorporate transformer architectures in TensorFlow.

What is it: Transformer architectures might be revolutionizing deep learning, but implementing them in real-world applications is far from trivial. Tensor2Tensor (T2T) is a framework that Google Research open-sourced a few years ago to accelerate ML research. Among its many capabilities, T2T provides access to state-of-the-art transformer architectures through a very simple programming model.

T2T offers several key benefits for ML developers looking to incorporate state-of-the-art architectures into their solutions:

Model Catalog: T2T includes a rich catalog of cutting-edge deep learning architectures, including transformer models.

Dataset Catalog: T2T includes datasets across different modalities such as text, audio, image and others.

Large Scale Models: T2T supports model training parallelization using multiple GPUs and workers.

Programming Interface: T2T provides a very simple programming model that allows data scientists to easily incorporate advanced deep learning models into their architectures.

In the case of transformer architectures, T2T incorporates many models for tasks such as mathematical understanding, question answering, image classification, language modeling, sentiment analysis, image generation and many others.

The biggest differentiator of the T2T library is that it incorporates best practices across the different components of a deep learning solution, such as datasets, model architectures, optimizers, learning rate decay schemes, hyperparameters and many others. From that perspective, a data science team can pick different combinations of dataset, model, optimizer and hyperparameters and see how they perform. The modular design also facilitates the incorporation of new state-of-the-art techniques while maintaining the same developer interface.
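The mix-and-match workflow described above rests on looking up each component by name. The sketch below is a hypothetical, self-contained illustration of that registry-style design in plain Python; the registries, component names and toy “model” are invented for this example and are NOT Tensor2Tensor's actual API.

```python
from typing import Callable, Dict

# Hypothetical registries: every component is looked up by a string name, so an
# experiment is just a choice of names. These are NOT Tensor2Tensor's actual APIs.
DATASETS: Dict[str, Callable[[], list]] = {}
MODELS: Dict[str, Callable[[dict], Callable[[float], float]]] = {}
HPARAMS: Dict[str, Callable[[], dict]] = {}

def register(table: dict, name: str):
    """Decorator that stores a component factory under `name`."""
    def wrap(fn):
        if name in table:
            raise ValueError(f"duplicate registration: {name}")
        table[name] = fn
        return fn
    return wrap

@register(DATASETS, "toy_pairs")
def toy_pairs():
    # (input, target) pairs for a toy regression problem
    return [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]

@register(MODELS, "linear")
def linear(hp):
    # A toy "model": multiply the input by a scale taken from the hyperparameters
    return lambda x: hp["scale"] * x

@register(HPARAMS, "small")
def small():
    return {"scale": 1.5}

@register(HPARAMS, "large")
def large():
    return {"scale": 2.0}

def run(dataset: str, model: str, hparams: str) -> float:
    """Build each component from its name and report the mean squared error."""
    data = DATASETS[dataset]()
    predict = MODELS[model](HPARAMS[hparams]())
    return sum((predict(x) - y) ** 2 for x, y in data) / len(data)

# Swapping one component means changing one string.
for hp_name in ("small", "large"):
    print(hp_name, run("toy_pairs", "linear", hp_name))
```

Because every experiment is just a (dataset, model, hparams) triple of names, adding a new technique means registering one more component rather than changing the code that runs experiments.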

How can I use it: The current release of Tensor2Tensor is open source at https://github.com/tensorflow/tensor2tensor

Why is there no discussion of Trax, in addition to or instead of T2T? According to the TensorFlow GitHub page, T2T is deprecated and users are encouraged to use Trax instead.