🗂 Edge#107: Crowdsourced vs. Automated vs. Hybrid Data Labeling

Three main data labeling schools explained

In this issue:

we explain three main approaches to data labeling;

we explore some best practices used for implementing crowdsourced data labeling at scale;

we overview three platforms that use crowdsourcing for data labeling.

💡 ML Concept of the Day: Crowdsourced vs. Automated vs. Hybrid Data Labeling

Data labeling is one of the essential elements of any machine learning workflow. Systematically building high quality labeled datasets for training and validation is one of the most expensive aspects of the lifecycle of machine learning solutions. Not surprisingly, the market has seen a new generation of data labeling platforms trying to streamline the creation of labeled datasets for different data types (video, text, audio, etc.) and even vertical domains. In its own right, data labeling has become one of the most important standalone markets in the machine learning space.

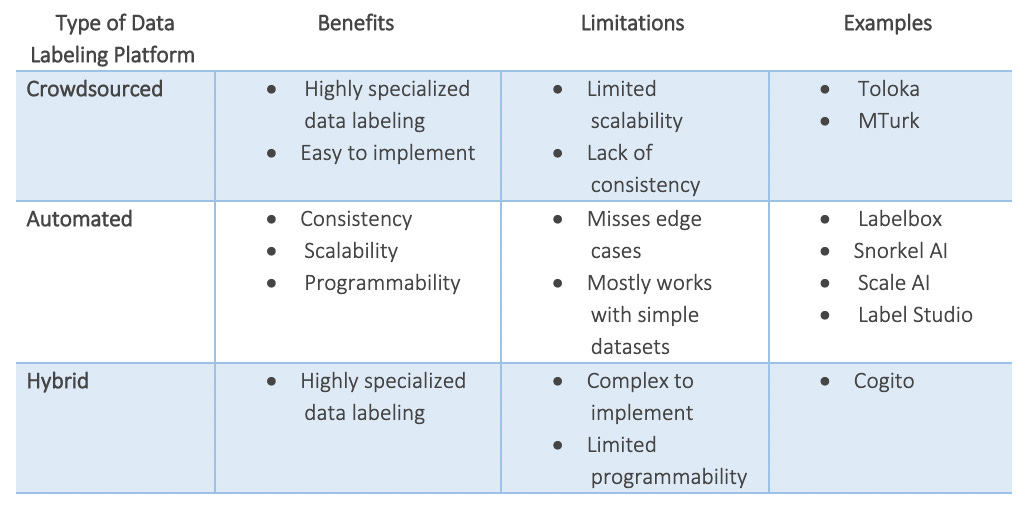

These days, evaluating and selecting a data labeling platform can be overwhelming. I have found one taxonomy relatively effective for bringing some clarity to the space of ML data labeling platforms. Despite the growing number of offerings in the market, most data labeling technologies can be classified into one of the following three groups:

Manual/Crowdsourced: This type of data labeling platform leverages knowledge workers for attaching labels to specific datasets. The crowdsourcing paradigm tends to work well in scenarios that require a certain level of reasoning to infer a label. Think about highly specialized medical domains or scenarios that derive semantic information from images, which will be relatively hard for automated models to achieve.

Automated: In contrast with the manual approach, automated data labeling platforms rely on programmatic rules or even machine learning models to detect relevant labels in an input dataset. This approach provides very consistent results in scenarios that don’t require complex labeling paradigms.

Hybrid: An emerging set of data science platforms tries to combine the benefits of the automated and manual methods to label training datasets. Some techniques, such as semi-supervised or transfer learning, are starting to play a relevant role in these new types of data labeling platforms.
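To make the three groups concrete, here is a minimal Python sketch of how rule-based automated labeling can hand off to human annotators in a hybrid setup. The sentiment task, the keyword rules, and the function names (`auto_label`, `hybrid_label`) are all hypothetical and chosen only for illustration; real platforms use far richer rules or trained models.

```python
import re

# Hypothetical keyword rules for a toy sentiment task.
# Each rule returns a label, or None when it does not apply.
def rule_positive(text):
    return "positive" if re.search(r"\b(great|excellent|love)\b", text.lower()) else None

def rule_negative(text):
    return "negative" if re.search(r"\b(terrible|awful|hate)\b", text.lower()) else None

RULES = [rule_positive, rule_negative]

def auto_label(text):
    """Automated step: return a label only when the firing rules agree, else abstain."""
    votes = {rule(text) for rule in RULES} - {None}
    return votes.pop() if len(votes) == 1 else None

def hybrid_label(texts):
    """Hybrid step: keep confident automatic labels, queue the rest for humans."""
    labeled, human_queue = {}, []
    for text in texts:
        label = auto_label(text)
        if label is None:
            human_queue.append(text)  # no rule fired, or rules conflicted
        else:
            labeled[text] = label
    return labeled, human_queue

labeled, human_queue = hybrid_label([
    "I love it",
    "Awful service",
    "I love it but the wait was terrible",
    "It arrived on Tuesday",
])
```

In this sketch the first two items are labeled automatically, while the conflicting and the neutral sentences end up in `human_queue`, which is where a crowdsourced workforce would take over.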

The following matrix provides high-level criteria that can help with the evaluation of data labeling platforms.

The previous categories capture the main data labeling schools in machine learning. There is no magic formula for selecting a data labeling platform; the choice of one approach over the others depends heavily on the underlying machine learning scenario. All three areas are seeing robust levels of innovation, with new startups capturing relevant market share by trying to simplify the data labeling experience for machine learning teams.

🔎 ML Research You Should Know: The Crowdsource Data Labeling Best Practices Powering the Toloka Platform*

We covered the hybrid approach in Edge#92; today I'd like to look at the crowdsourced approach in more detail. In the paper Practice of Efficient Data Collection via Crowdsourcing at Large-Scale, researchers from Toloka explore some of the best practices used for implementing crowdsourced data labeling at scale.

The objective: Explore best practices and techniques used in crowdsourced data labeling methods.

Why is it so important: Toloka is one of the largest crowdsourced data labeling platforms for machine learning projects.

Diving deeper: Crowdsourcing is one of the main mechanisms to streamline the creation of high-quality datasets. Using large pools of human data labelers becomes particularly relevant in scenarios in which the output labels require some subjective judgment or are particularly complicated for automatic labeling algorithms. For instance, when analyzing images from a basketball game, algorithmic data labeling techniques could easily identify the players or the referees, but it might be quite difficult for them to classify whether a player is attempting to shoot or pass the ball, or whether there is a defensive mismatch. This type of output requires the kind of knowledge inference that humans excel at.

While the concept of crowdsourced data labeling seems easy at first glance, it’s quite challenging to implement at scale. Introducing humans into the labeling process often results in inconsistencies that can translate into errors in machine learning models. Solving those problems at scale requires applying systematic best practices, which are far from trivial. This was the main subject of Toloka’s research. At a high level, the research paper identifies two main areas for building efficient crowdsourced data labeling pipelines:

Task decomposition

Task evaluation and compensation

Partitioning tasks is a key element of effective crowdsourced data labeling strategies. In general, the Toloka paper proposes a methodology for decomposing large data labeling tasks into smaller tasks that can be easily evaluated. The paper proposes complementing the task decomposition with quality control methods that evaluate the task before, during and after its execution. The following figure shows this task decomposition approach for a typical image classification data labeling task. The image on the right is more general, more ambiguous and more prone to inconsistencies among human data labelers.

Image credit: Toloka

The second aspect of Toloka’s best practices for crowdsourced data labeling focuses on strategies to evaluate the tasks performed by crowdsource workers and compensate them accordingly. In their paper, the researchers outline three fundamental steps for efficient task evaluation and compensation:

Aggregation Methods: These methods aggregate the output from multiple crowdsource workers to arrive at a final classification. Algorithms such as majority vote, minimax entropy and Dawid-Skene are relevant to implement this type of technique. In general, these methods allocate the same number of labels to certain and uncertain samples.

Incremental Relabeling: This type of technique allocates a larger number of labels to uncertain samples and refines the classification process from there.

Dynamic Pricing: The final step is to dynamically adjust the pricing mechanisms based on the performance of the labeling tasks.

Image credit: Toloka
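The first two steps can be sketched in a few lines of Python. This is an illustrative sketch, not code from the Toloka paper: it uses majority vote, the simplest of the aggregation algorithms named above, and the agreement threshold, the number of extra labels, and the `request_label` callback (a stand-in for sending an item back to the crowd) are all assumptions.

```python
from collections import Counter

def majority_vote(labels):
    """Return (winning_label, share_of_votes) for one item's worker labels."""
    label, top = Counter(labels).most_common(1)[0]
    return label, top / len(labels)

def aggregate(votes_by_item, min_agreement=0.7):
    """Aggregate every item and flag those whose agreement is below the threshold."""
    final, needs_more = {}, []
    for item, labels in votes_by_item.items():
        label, agreement = majority_vote(labels)
        final[item] = label
        if agreement < min_agreement:
            needs_more.append(item)
    return final, needs_more

def relabel(votes_by_item, needs_more, request_label, extra=2):
    """Incremental relabeling: collect extra labels only for uncertain items."""
    for item in needs_more:
        votes_by_item[item].extend(request_label(item) for _ in range(extra))
    return votes_by_item

# Three workers labeled each image; img2 is contested (2 of 3 agree).
votes = {"img1": ["cat", "cat", "cat"], "img2": ["cat", "dog", "dog"]}
final, needs_more = aggregate(votes)

# Hypothetical stand-in for asking two more workers, who both answer "dog".
votes = relabel(votes, needs_more, request_label=lambda item: "dog")
final, needs_more = aggregate(votes)
```

After the first pass only `img2` falls below the assumed 0.7 threshold, so it alone receives additional labels; `img1` costs nothing extra. Methods such as Dawid-Skene go further by also estimating how reliable each worker is, which this sketch does not attempt.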

Crowdsourced labeling is far from being an empirical science and is highly reliant on processes. The building blocks outlined in Toloka’s research provide robust guidance for designing crowdsourced data labeling pipelines in a scalable and highly efficient way.

*We’ve partnered with Toloka to bring you a free hands-on tutorial about Crowdsourcing Natural Language Data at Scale. Exclusively for the readers of TheSequence, leading researchers and engineers will share their industry experience in achieving efficient natural language annotation with crowdsourcing. They will introduce data labeling via public crowdsourcing marketplaces and present the key components of efficient label collection. In the practice session, participants will choose one real language resource production task, experiment with selecting settings for the labeling process, and launch their label collection project. All projects will be run on the real crowd!

🤖 ML Technology to Follow: Platforms That Use Crowdsourcing For Data Labeling

Why should I know about this: Crowdsourced data labeling platforms are not as well known as their automated alternatives. Here are a few platforms you should pay attention to.

What is it: Crowdsourcing might be the most straightforward data labeling practice and, yet, the platforms that enable these types of capabilities remain relatively unknown. While we have seen dozens of automated data labeling platforms go on to raise sizable rounds of venture capital, the crowdsourced data labeling space has remained relatively under the radar. As a result, evaluating crowdsourced data labeling stacks is far from an easy endeavor. Here are a few platforms that have achieved meaningful traction in the space.

Amazon SageMaker Ground Truth

Mechanical Turk (MTurk) is the most widely adopted crowdsourcing platform in the market. However, MTurk was designed as a generic crowdsourcing platform without any specific optimizations for data labeling workflows. To address that limitation, AWS introduced the Ground Truth data labeling service as part of the SageMaker platform. Ground Truth builds on top of MTurk to enable crowdsourced data labeling capabilities across a wide variety of datasets. In addition, the Ground Truth platform enables automated data labeling that can streamline training workflows in certain scenarios.

Benefits: Some of the key advantages of the Ground Truth service include its integration with SageMaker and the combination of crowdsourced and automated data labeling workflows in a single stack.

Limitations: The crowdsourced data labeling tasks enabled by Ground Truth remain relatively basic compared to competitive alternatives.

Further Information: Additional information about AWS Ground Truth can be found at: https://aws.amazon.com/sagemaker/groundtruth/

Toloka

Toloka is a crowdsourced data labeling platform that leverages state-of-the-art techniques to scale crowdsourced data labeling workflows across several data types such as images, video, language or audio. Although it treats human data labeling as a first-class citizen, the Toloka platform includes a developer kit that enables its integration with deep learning models implemented in frameworks such as TensorFlow or PyTorch. Toloka has built a strong customer base that includes companies like AliPay, Samsung and Yandex.

Benefits: Some of the advantages of Toloka include the implementation of streamlined, large-scale crowdsourced data labeling pipelines and the support for diverse datasets and use cases.

Limitations: The exclusive focus on crowdsourced scenarios limits the adoption of Toloka in the many data labeling use cases that are better suited to automated techniques.

Further Information: More information about Toloka can be found at: https://toloka.ai/

Cogito

Cogito is a hybrid data labeling platform that relies on an approach known as model assisted labeling (MAL). Conceptually, MAL leverages a human workforce to label a small but relevant portion of the training dataset, which is then used to train a specific model. From there, the Cogito solution uses the outputs of that model to train another model that can refine the labeling of a larger dataset without requiring major human intervention. The current version of the Cogito platform supports diverse datasets such as image, text, video, audio, 3D point clouds and several others. The platform has a stellar customer base that includes companies like AWS, Vimeo, National Geographic and Tumblr.

Benefits: Some of the benefits of the Cogito platform include a large specialized workforce across different verticals.

Limitations: Lack of programmability might be the top limitation of the Cogito platform.

Further Information: More information about Cogito can be found at: https://www.cogitotech.com/
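As a rough illustration of the model assisted labeling idea described for Cogito above, the Python sketch below trains a first model on a small human-labeled seed set, keeps only its confident predictions as pseudo-labels, and trains a second model on the combined data. This is not Cogito's implementation: the one-dimensional nearest-centroid "model", the `min_margin` confidence threshold, and all function names are assumptions made purely to keep the example self-contained.

```python
from statistics import mean

def fit_centroids(points, labels):
    """Train a toy nearest-centroid model: one mean value per label."""
    return {lab: mean(p for p, l in zip(points, labels) if l == lab)
            for lab in set(labels)}

def predict(centroids, x):
    """Return (label, margin); margin is the distance gap between the two closest centroids."""
    ranked = sorted(centroids, key=lambda lab: abs(x - centroids[lab]))
    best, runner_up = ranked[0], ranked[1]
    margin = abs(x - centroids[runner_up]) - abs(x - centroids[best])
    return best, margin

def model_assisted_labeling(seed_x, seed_y, pool, min_margin=2.0):
    # Step 1: train a first model on the small human-labeled seed set.
    first = fit_centroids(seed_x, seed_y)
    # Step 2: keep only the first model's confident predictions as pseudo-labels.
    pseudo = []
    for x in pool:
        label, margin = predict(first, x)
        if margin >= min_margin:
            pseudo.append((x, label))
    # Step 3: train a second model on seed labels plus pseudo-labels.
    xs = seed_x + [x for x, _ in pseudo]
    ys = seed_y + [label for _, label in pseudo]
    second = fit_centroids(xs, ys)
    # Step 4: label the whole pool with the second model.
    return {x: predict(second, x)[0] for x in pool}

# Four human-labeled seed points, five unlabeled points in the pool.
labels = model_assisted_labeling(
    seed_x=[1.0, 2.0, 9.0, 10.0],
    seed_y=["small", "small", "large", "large"],
    pool=[0.5, 3.0, 5.0, 8.0, 11.0],
)
```

In this toy run the ambiguous point 5.0 is excluded from the pseudo-labels because the first model's margin is too small, and it only receives its label from the second model. A production system would typically route such low-confidence items back to human reviewers instead.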