💥 The “What’s New in AI” recap #1️⃣

TheSequence is the best way to build and reinforce your knowledge about machine learning and AI

Every six months we provide a summary of what we’ve recently covered in TheSequence. Catch up with what you missed and prepare for the next half of the year. This issue is the first part of the “What’s New in AI” recap. What’s New in AI is a deep dive into one of the freshest research papers or technology frameworks that is worth your attention.

Please consider sharing this recap with those who can benefit from reading TheSequence.

(publicly open article, no subscription needed) Edge#52: Google Meena is a Language Model That Can Chat About Anything: Meena unquestionably represents a major milestone in the implementation of conversational interfaces ->read how Google addressed the challenge of measuring the human-likeness of a dialogue

Edge#54: Facebook ReBeL That Can Master Poker: Recently, Facebook used poker as the inspiration for a new reinforcement learning model that is able to master several imperfect-information games ->learn about the challenges that Facebook tries to address with ReBeL

Edge#56: DeepMind’s MuZero that Mastered Go, Chess, Shogi and Atari Without Knowing the Rules: MuZero represents a major evolution in the use of RL algorithms for long-term planning ->read why MuZero might become a foundational block of a new set of deep learning models with short and long-term planning capabilities

Edge#58: OpenAI’s CLIP and DALL·E Draw Inspiration from GPT-3 to Connect Language and Computer Vision: OpenAI released two new transformer architectures that combine image and language tasks in a fun and almost magical way. Wait, did I just say that transformers are being used in computer vision tasks? ->you definitely want to know more about this, it’s outstanding

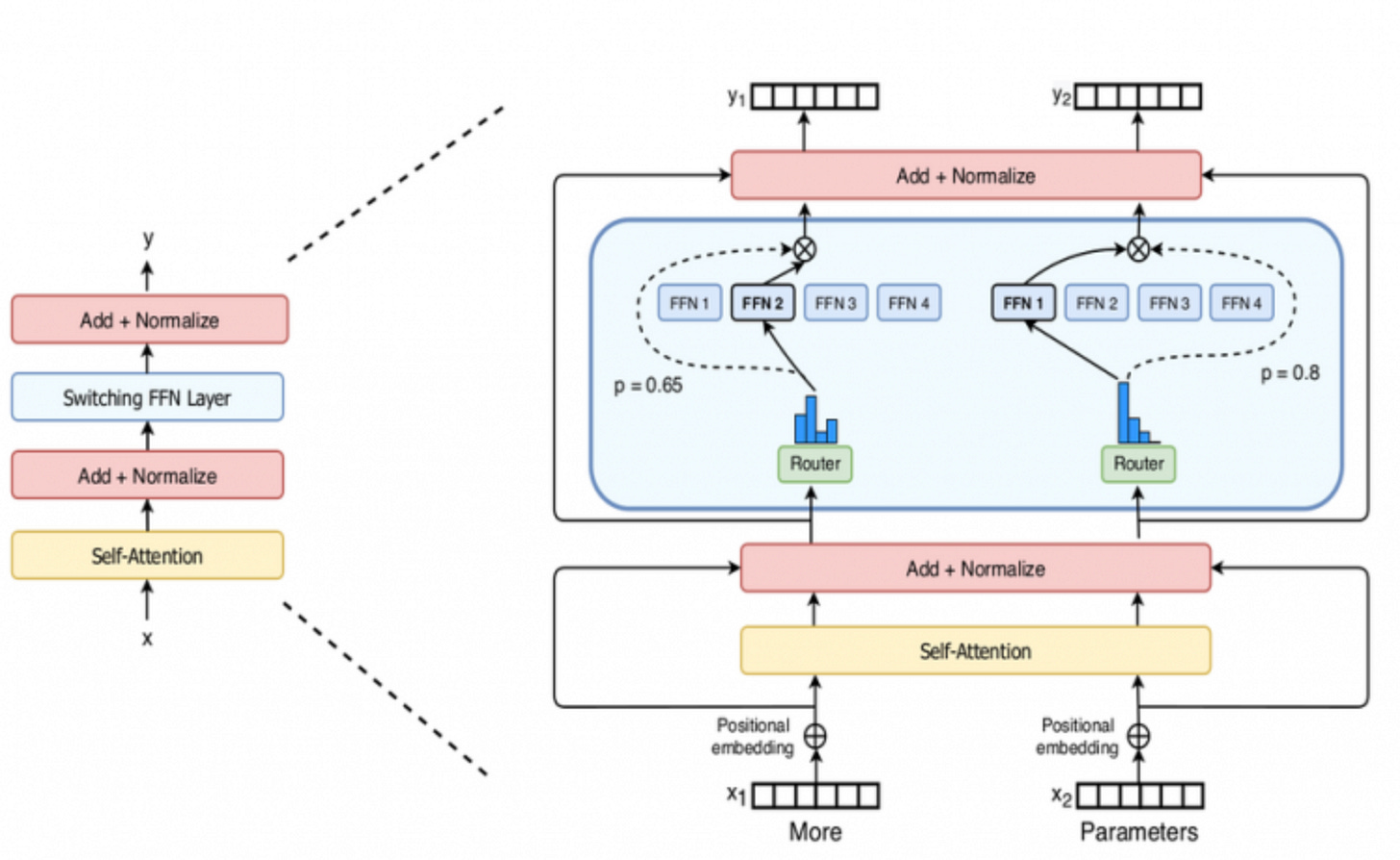

Edge#60: Google’s Switch Transformer is the Biggest Language Model in History with Over One Trillion Parameters: how would you feel about a model that is 6 times larger than GPT-3? ->read to better understand the Switch Transformer architecture

Edge#62: A View Into the Data Discovery and Management Architectures Implemented at LinkedIn, Uber, Lyft, Airbnb and Netflix ->this long-read is really worth it 😉

Edge#64: The Architectures Powering ML at Google, Facebook, Uber, LinkedIn: What are the key architecture building blocks that organizations should put in place when adopting machine learning solutions? ->read our overview of how some powerhouses are laying down the path for what can become reference architectures

Edge#66: Pluribus – superhuman AI for multiplayer poker: we discuss some insights about the deep learning techniques that powered this fascinating milestone in modern deep learning ->read how Facebook mastered the most difficult game in the world

Edge#70: LinkedIn Uses Typed Features to Accelerate Machine Learning Experimentation at Scale: recently, LinkedIn unveiled some details about its approach to feature engineering for rapid experimentation, which includes some unique innovations ->read further