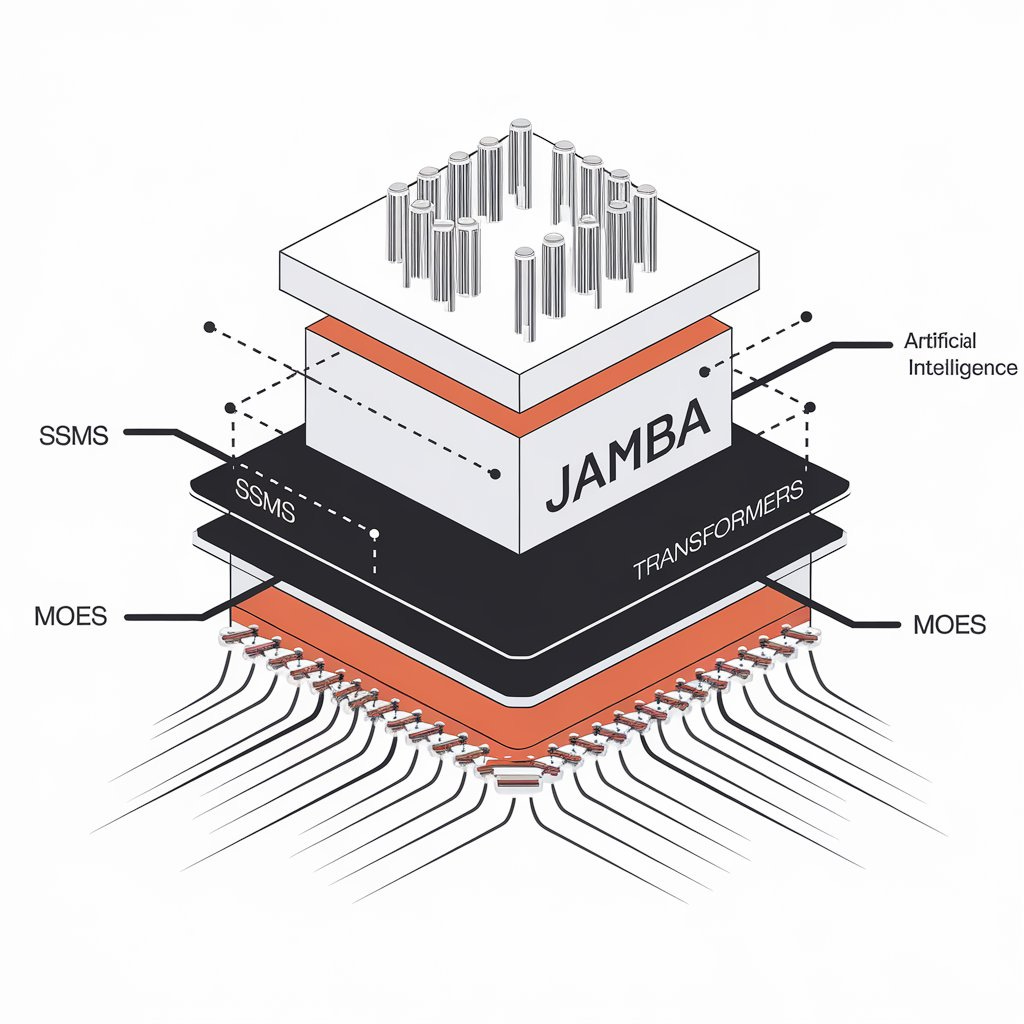

Edge 427: Jamba Combines SSMs, Transformers and MoEs in a Single Model

Can a hybrid design outperform each of its baseline architectures?

In this issue:

An overview of the concepts behind Jamba, a model that combines transformers and SSMs.

A review of the original Jamba paper by AI21 Labs.

A walkthrough of DeepEval, a framework for LLM evaluation.

💡 ML Concept of the Day: Understanding Jamba

State Space Models (SSMs) are regularly positioned as an alternative to transformer models, but what if that doesn't have to be the case? That was the thesis behind Jamba, a new model released by the ambitious team at AI21 Labs. Jamba combines transformers and SSMs in a single architecture that could open new avenues for the future of LLMs. In doing so, it tries to address some of the limitations of SSMs, whose performance lags behind transformers in many traditional scenarios.
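To make the idea of a single hybrid architecture more concrete, here is a minimal sketch, under our own assumptions, of how a layer stack might interleave attention and SSM sequence mixers while swapping some dense feed-forward blocks for mixture-of-experts (MoE) blocks. The function `hybrid_schedule` and its parameters `attn_every` and `moe_every` are hypothetical names chosen for illustration. The sketch only plans which component goes in which layer; it does not implement the layers themselves and is not meant to reproduce Jamba's exact configuration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class LayerSpec:
    mixer: str  # sequence-mixing component: "attention" or "ssm"
    ffn: str    # feed-forward component: "dense" or "moe"


def hybrid_schedule(num_layers: int, attn_every: int, moe_every: int) -> List[LayerSpec]:
    """Plan a hypothetical hybrid stack (illustrative only, not Jamba's code).

    attn_every: place one attention layer in every `attn_every` layers;
                all other layers use an SSM mixer.
    moe_every:  replace the dense MLP with an MoE block in every
                `moe_every`-th layer; the rest keep a dense MLP.
    """
    if num_layers < 0 or attn_every < 1 or moe_every < 1:
        raise ValueError("num_layers must be >= 0; attn_every and moe_every must be >= 1")

    plan: List[LayerSpec] = []
    for i in range(num_layers):
        # Attention at the first position of each group of `attn_every` layers.
        mixer = "attention" if i % attn_every == 0 else "ssm"
        # MoE at the last position of each group of `moe_every` layers.
        ffn = "moe" if i % moe_every == moe_every - 1 else "dense"
        plan.append(LayerSpec(mixer=mixer, ffn=ffn))
    return plan


if __name__ == "__main__":
    # Example: 8 layers, one attention layer per 8, MoE every other layer.
    for idx, layer in enumerate(hybrid_schedule(num_layers=8, attn_every=8, moe_every=2)):
        print(idx, layer.mixer, layer.ffn)
```

Keeping the mixer choice and the feed-forward choice as two independent knobs reflects the intuition behind such hybrids: attention layers can be kept sparse in the stack while SSM layers handle most of the sequence mixing, and MoE blocks add capacity without activating every parameter for every token.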