Edge 390: Diving Into Databricks' DBRX: One of the Most Impressive Open Source LLMs Released Recently

The model uses an MoE architecture that delivers remarkable performance on a relatively small compute budget.

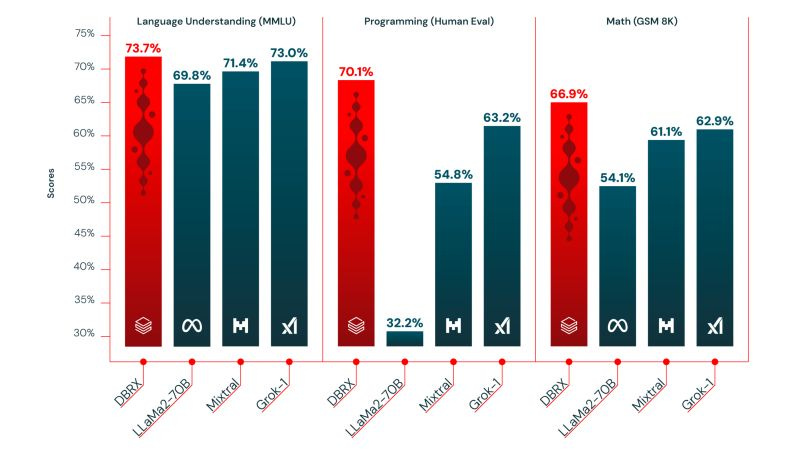

The open-source generative AI landscape is experiencing tremendous momentum. Innovation comes not only from startups like Hugging Face, Mistral, or AI21 but also from large AI labs such as Meta. Databricks has been one of the tech incumbents exploring different angles in open-source generative AI, particularly since its acquisition of MosaicML. A few days ago, Databricks open sourced DBRX, a massive general-purpose LLM that shows impressive performance across different benchmarks.

DBRX builds on the mixture-of-experts (MoE) approach used by Mixtral, which increasingly looks like the standard to follow in transformer-based architectures. Databricks released both the baseline model, DBRX Base, and the instruction fine-tuned version, DBRX Instruct. From the initial reports, it seems that Databricks’ edge was the quality of the dataset and the training process, although few details about either have been shared.
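To make the MoE idea concrete, here is a minimal sketch of top-k expert routing in plain Python. It is a toy illustration of the general technique, not DBRX's or Mixtral's actual implementation: the function names (`top_k_route`, `moe_layer`), the four scaling "experts", the fixed router scores, and the choice of `k=2` are all assumptions made for the example. The point it shows is that only `k` experts run per token, which is why an MoE model can hold many parameters while spending compute on only a fraction of them.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and normalize their weights.

    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_layer(token, experts, router, k):
    """Run only the k selected experts and mix their outputs by router weight."""
    routes = top_k_route(router(token), k)
    output = [0.0] * len(token)
    for index, weight in routes:
        expert_out = experts[index](token)  # the other experts are never evaluated
        output = [o + weight * y for o, y in zip(output, expert_out)]
    return output, routes

# Hypothetical toy setup: expert i multiplies its input by (i + 1).
experts = [lambda t, s=i + 1: [s * x for x in t] for i in range(4)]

# Hypothetical router: returns fixed scores instead of a learned projection.
router = lambda token: [0.1, 2.0, -1.0, 2.0]

output, routes = moe_layer([1.0, 2.0], experts, router, k=2)
print(routes)
print(output)
```

In a real model the router is a learned linear layer and each expert is a feed-forward network, but the control flow is the same: score, select the top k, and combine.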