📝 Guest post: Right-Sizing Training Workloads with NVIDIA A100 and A40 GPUs

In this article, CoreWeave’s team explains how it is helping companies deploy more timely and efficient AI applications by right-sizing projects to optimize training on both NVIDIA A100 and A40 GPU configurations.

Manage the Right Portfolio of NVIDIA Compute

As model training and serving explode around the globe, the NVIDIA A100 Tensor Core GPU has become the industry standard, but demand for NVIDIA A100 instance time frequently exceeds available capacity.

NVIDIA A100 GPUs are the flagship offering of NVIDIA's data center platform, suited for any AI training or inference workload. In addition to NVIDIA A100s, CoreWeave invests heavily in NVIDIA A40 GPUs to meet the needs of smaller AI projects with more flexibility at a lower on-demand cost. See how Bit192, Inc. recently employed our A40s to help bring a new Japanese GPT-NeoX-20B model to Japan.

Because of NVIDIA’s unified architecture and software platform stack, AI workloads can be run on either A100 or A40 GPU instances with high performance and fast time to solution.

Performance Benchmarks

When training NLP models with our recent clients, we’ve seen a 20B parameter model take about two months to train on a CoreWeave cluster of 96 NVIDIA A100 GPUs. We have been able to achieve a similar performance-adjusted cost to train with a cluster of ~200 A40 GPUs, which offers companies added flexibility given the high on-demand availability of A40 GPUs.

This translates to an estimated 30% overall cost savings versus other major cloud providers, with savings that continue to scale linearly.
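The performance-adjusted comparison above comes down to simple arithmetic: GPU count times wall-clock hours times hourly price. The sketch below is illustrative only. The function names and the hourly prices are hypothetical, not CoreWeave pricing, and it assumes the A40 run takes the same wall-clock time as the A100 run, which this article does not state.

```python
# Sketch of a performance-adjusted cost comparison between two GPU clusters.
# Hourly prices below are HYPOTHETICAL placeholders, not CoreWeave pricing.

HOURS_PER_MONTH = 730  # average hours in a month (8760 / 12)


def training_cost(num_gpus: int, hours: float, price_per_gpu_hour: float) -> float:
    """Total cost of a run: GPUs x wall-clock hours x hourly price per GPU."""
    return num_gpus * hours * price_per_gpu_hour


def break_even_price_ratio(gpus_a: int, hours_a: float,
                           gpus_b: int, hours_b: float) -> float:
    """Price of GPU B, as a fraction of GPU A's price, at which both runs cost the same."""
    return (gpus_a * hours_a) / (gpus_b * hours_b)


# Figures from the article: 96 A100s for about two months, versus ~200 A40s.
# ASSUMPTION: the A40 run takes the same wall-clock time; the article does not say.
run_hours = 2 * HOURS_PER_MONTH
ratio = break_even_price_ratio(96, run_hours, 200, run_hours)
print(f"A40 break-even price: {ratio:.2f}x the A100 hourly price")

# Hypothetical prices, used only to exercise the functions.
a100_cost = training_cost(96, run_hours, price_per_gpu_hour=2.00)
a40_cost = training_cost(200, run_hours, price_per_gpu_hour=2.00 * ratio)
print(f"A100 run: ${a100_cost:,.0f}  A40 run: ${a40_cost:,.0f}")
```

Under these assumptions the two runs cost the same when an A40 hour is priced at a little under half of an A100 hour. If the A40 run finishes faster or slower than the A100 run, that break-even point shifts accordingly.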

What We Like About the NVIDIA A40 GPU

Released in October of 2020, the NVIDIA A40 GPU features 37.4 teraflops of FP32 performance, 10,752 CUDA cores, 336 Tensor Cores, 48GB of graphics memory and 696GB/s of graphics memory bandwidth.

Built on the NVIDIA Ampere architecture, the A40 GPU gives data scientists and engineering teams the ability to render, process and analyze at blazing speed.

The NVIDIA A40 is a leap forward in performance and multi-workload capabilities from the data center, combining best-in-class professional graphics with powerful compute and AI acceleration to meet today’s design, creative and scientific challenges.

CoreWeave deploys the largest inventory of NVIDIA A40 GPUs in North America, with ultra-fast GDDR6 memory, scalable up to 96GB with NVIDIA NVLink. This feature allows users to connect two A40 GPUs to increase GPU-to-GPU interconnect bandwidth and provide a single scalable memory space to accelerate graphics and compute workloads for tackling large datasets.

"NVIDIA A100 GPUs have a different usage profile than the other SKUs we offer on-demand, due to the characteristics of large scale training workloads. With a limited selection of GPUs available on other cloud providers, many businesses struggle to access the scale of compute needed to accelerate training workloads. As an Elite CSP within the NVIDIA Partner Network, we care deeply about offering our clients the broadest range of GPUs at high-availability. We invested heavily in scaling our fleet of NVIDIA A40 GPUs to ensure our clients can access the scale and computing power their workloads require." - Brian Venturo, CTO, CoreWeave

You can find additional details about the NVIDIA A40 GPU and full performance specs here.

What We Like About the NVIDIA A100 Tensor Core GPU

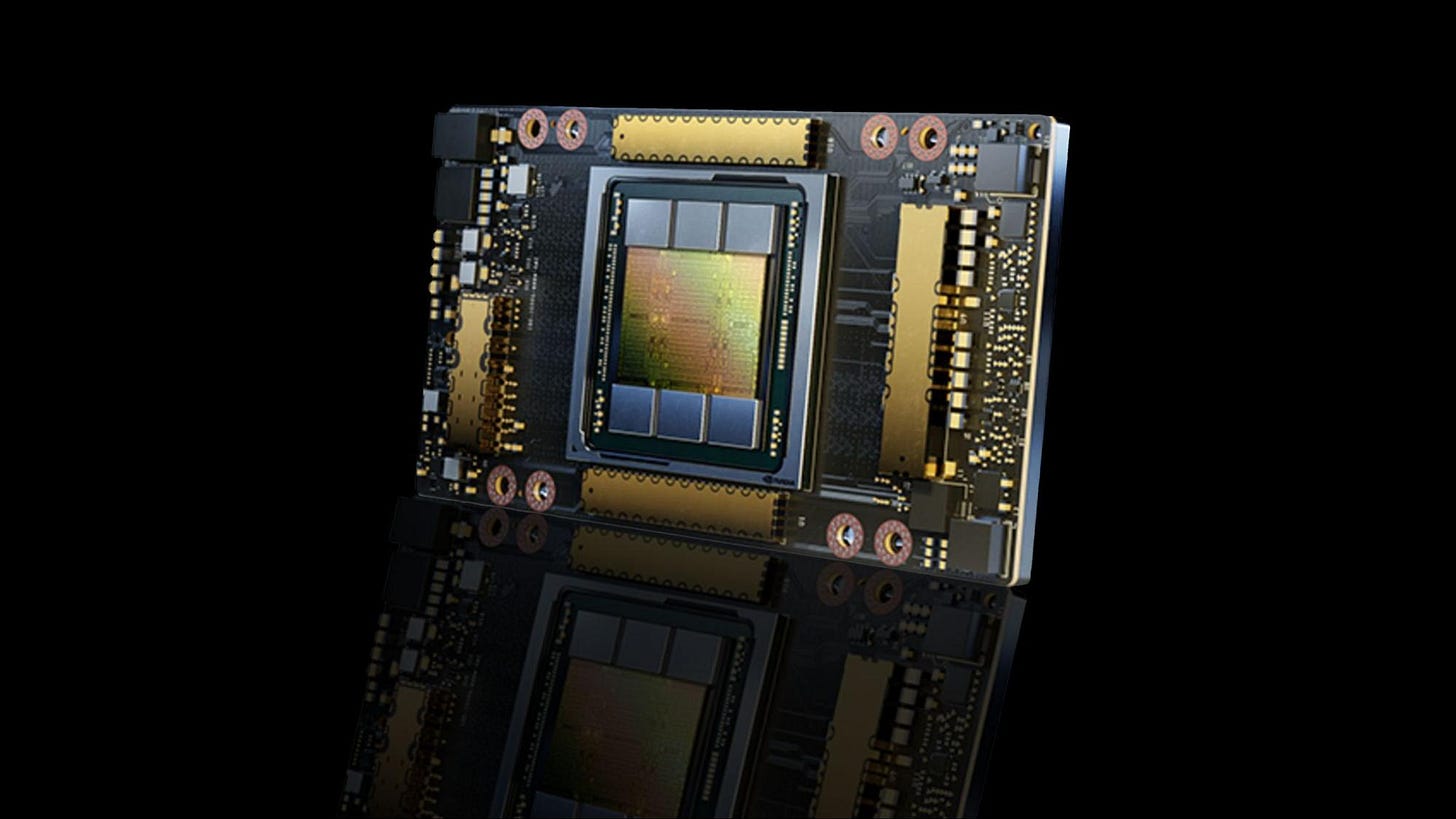

Released in May of 2020, the NVIDIA A100 Tensor Core GPU features 19.5 teraflops of FP32 performance, up to 312 teraflops of TF32 performance with structured sparsity, 6,912 CUDA cores, 432 Tensor Cores, up to 80GB of graphics memory and 1.6TB/s of graphics memory bandwidth on the 40GB version.

The A100 is the supercharged engine of NVIDIA’s data center platform, delivering unprecedented acceleration at every scale to power the world’s highest-performing elastic data centers for AI, data analytics and high-performance computing.

Powered by the NVIDIA Ampere architecture, the NVIDIA A100 Tensor Core GPU provides up to 20X higher performance than the prior GPU generation and can be partitioned into as many as seven GPU instances to dynamically adjust to shifting workload demands.

The A100 is available in 40GB and 80GB memory versions in CoreWeave’s cloud instances. The A100 80GB includes the world’s fastest memory bandwidth at over 2 terabytes per second (TB/s) to run the largest models and datasets.

For the largest models with massive data tables, like deep learning recommendation models (DLRM), CoreWeave’s 8-way 80GB A100 HGX systems reach up to 640GB of unified memory with NVIDIA NVLink.
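The NVLink memory figures quoted in this article (96GB for a pair of A40s, 640GB for an 8-way A100 80GB system) are straightforward sums of per-GPU memory. The sketch below reproduces them and, as a rough sanity check, estimates the size of the weights of a 20B-parameter model. The helper names are hypothetical, and the 2-bytes-per-parameter figure assumes FP16 or BF16 weights; training needs considerably more memory than the weights alone, for gradients, optimizer state and activations.

```python
# Pooled GPU memory for NVLink-connected GPUs, using figures from the article.


def pooled_memory_gb(num_gpus: int, memory_per_gpu_gb: int) -> int:
    """Aggregate memory across NVLink-connected GPUs (simple sum, no overhead)."""
    return num_gpus * memory_per_gpu_gb


def weights_size_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Size of the model weights alone, in decimal GB.

    ASSUMPTION: 2 bytes per parameter (FP16/BF16) unless told otherwise.
    """
    return num_params * bytes_per_param / 1e9


a40_pair = pooled_memory_gb(2, 48)    # two A40s connected over NVLink
a100_hgx = pooled_memory_gb(8, 80)    # 8-way A100 80GB HGX system
weights_20b = weights_size_gb(20e9)   # weights of a 20B-parameter model

print(f"A40 pair: {a40_pair} GB, A100 HGX: {a100_hgx} GB")
print(f"20B-parameter weights (2 bytes each): {weights_20b:.0f} GB")
```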

“Our clients often find that other cloud providers build their distributed training clusters with legacy networking, leading to substantially degraded performance as distributed training scales. We build our A100 distributed training clusters with a rail-optimized design using NVIDIA Quantum InfiniBand networking and in-network collectives using NVIDIA SHARP to deliver the highest distributed training performance possible.” - Peter Salanki, Director of Engineering, CoreWeave

You can find additional details about the NVIDIA A100 GPU and full performance specs here.

Different Training Courses for Different Horses

The NVIDIA A100 GPU has more than twice the graphics memory bandwidth of the NVIDIA A40 (1.6TB/s versus 696GB/s) and almost 100 more Tensor Cores (432 versus 336), giving the A100 a clear lead on bandwidth-bound and Tensor Core-heavy training workloads.
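The spec figures quoted earlier in this article can be compared directly. The sketch below is only a convenience for reading those numbers side by side: the `GpuSpec` class is a hypothetical container, 1.6TB/s is taken as 1600GB/s, and none of these ratios is a benchmark result.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    """Headline specs as quoted in this article (hypothetical container)."""
    name: str
    fp32_tflops: float
    cuda_cores: int
    tensor_cores: int
    memory_gb: int
    bandwidth_gb_s: float


A40 = GpuSpec("A40", 37.4, 10_752, 336, 48, 696.0)
A100 = GpuSpec("A100", 19.5, 6_912, 432, 80, 1600.0)  # 1.6 TB/s taken as 1600 GB/s

# A100 advantage in memory bandwidth and Tensor Core count.
bandwidth_ratio = A100.bandwidth_gb_s / A40.bandwidth_gb_s
tensor_core_gap = A100.tensor_cores - A40.tensor_cores

# The A40's listed FP32 figure is the higher of the two.
fp32_ratio = A40.fp32_tflops / A100.fp32_tflops

print(f"Memory bandwidth, A100 vs A40: {bandwidth_ratio:.2f}x")
print(f"Extra Tensor Cores on the A100: {tensor_core_gap}")
print(f"FP32 teraflops, A40 vs A100: {fp32_ratio:.2f}x")
```

The bandwidth ratio comes out at roughly 2.3x and the Tensor Core gap at 96, matching the "more than twice" and "almost 100" wording above. Spec-sheet ratios like these are a starting point for right-sizing, not a substitute for measuring your own workload.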

However, with significantly higher on-demand availability on CoreWeave Cloud, A40 GPUs may actually be preferable to A100 GPUs when performance-adjusted cost is taken into consideration.

CoreWeave clients training AI models of any size have the freedom and flexibility to choose the best NVIDIA GPU in our fleet based on the compute and usage requirements they need to be successful.

“The NVIDIA A40 and A100 GPUs offer powerful, world-class results for compute-intensive use cases. Being able to scale into CoreWeave’s offering of either GPU on-demand gives customers the flexibility to right-size their acceleration for the task at hand.” - Paresh Kharya, Senior Director, Product Management, Accelerated Computing Group, NVIDIA

If you are searching for the perfect portfolio of NVIDIA A40 and A100 GPUs, CoreWeave can help you optimize the right mix of cost and on-demand availability! Contact a CoreWeave engineer today to chat through how we can fine-tune your upcoming projects.