📝 Guest post: Prevent AI failure with data logging and ML monitoring

Monitoring and observability for AI applications are on every organization’s roadmap right now. In this guest post, our partner WhyLabs highlights the need for data and machine learning-specific logging. They describe whylogs, the open-source standard for data logging, which enables monitoring and more in AI and data applications. You can dive directly into whylogs with the getting started example or read on to learn more.

First, what is data logging?

Logs are an essential part of monitoring and observability for classic software applications. Logs enable you to diagnose what happened to an application, track changes over time, and debug issues that arise.

When it comes to ML applications, however, it’s not enough to log the traditional software metrics such as uptime, bounce rate, and load time. Data behaves differently than code and necessitates collecting different signals from the application. That’s where data logging comes in. Data logs capture data quality, data drift, model performance, and other data-specific health signals.

With data logs, you can monitor model performance and data drift, validate data quality, track data for ML experiments, and institute data auditing and governance best practices.

But how can you implement data logs in practice?

That’s where whylogs comes in

The team at WhyLabs built whylogs as the open-source standard for data logging to address all the logging needs of AI builders. With whylogs, the data flowing through AI and data applications gets continuously logged.

With whylogs, you can generate statistical summaries, called whylogs profiles, from data as it flows through your data pipelines and into your machine learning models. With these profiles, you can track changes in the data and model over time, picking up on issues like data drift or data quality degradation.
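To make the idea of a statistical summary concrete, here is a minimal pure-Python sketch of a running summary for one numeric column. The `ColumnSummary` class and its fields are hypothetical and much simpler than a real whylogs profile; the point is only that a summary can be updated row by row and merged across batches without keeping the raw data.

```python
from dataclasses import dataclass
import math


@dataclass
class ColumnSummary:
    """Hypothetical running summary of one numeric column (not the whylogs format)."""
    count: int = 0
    nulls: int = 0
    total: float = 0.0
    minimum: float = math.inf
    maximum: float = -math.inf

    def track(self, value):
        """Update the summary with one value; None counts as missing."""
        self.count += 1
        if value is None:
            self.nulls += 1
            return
        self.total += value
        self.minimum = min(self.minimum, value)
        self.maximum = max(self.maximum, value)

    def merge(self, other):
        """Combine two summaries without revisiting the raw rows."""
        return ColumnSummary(
            count=self.count + other.count,
            nulls=self.nulls + other.nulls,
            total=self.total + other.total,
            minimum=min(self.minimum, other.minimum),
            maximum=max(self.maximum, other.maximum),
        )

    @property
    def mean(self):
        """Mean of the non-missing values, or NaN if there are none."""
        non_null = self.count - self.nulls
        return self.total / non_null if non_null else float("nan")


def summarize(values):
    """Build a summary from an iterable of values."""
    summary = ColumnSummary()
    for value in values:
        summary.track(value)
    return summary


# Two daily batches of a made-up "credit score" column
monday = summarize([620, 700, None, 810])
tuesday = summarize([590, 640])
week = monday.merge(tuesday)
print(week.count, week.nulls, week.minimum, week.maximum, week.mean)
```

Because the merged summary is built only from the two daily summaries, the same approach works whether data arrives as a stream or in batches.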

Who uses whylogs?

Today, whylogs is used by thousands of AI builders, from startups to Fortune 100 companies. Users apply it across a wide variety of use cases, data types, and model types.

We designed whylogs in collaboration with data scientists, data engineers, and machine learning engineers across the ML community. The mission of whylogs is simple: create a platform-agnostic library that captures all key statistical properties of a dataset.

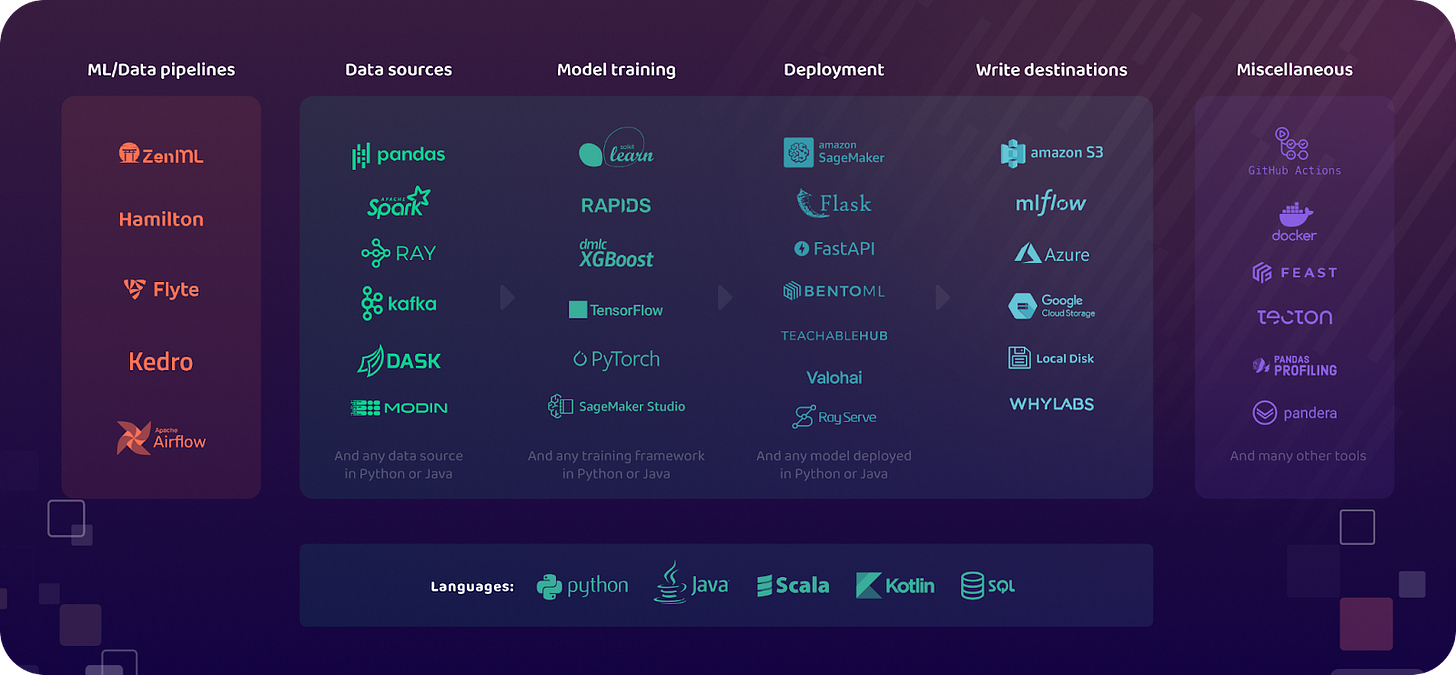

By design, whylogs works natively with both streaming and batch data. It works out of the box on tabular, image, and text data, and can be extended to handle arbitrary data types such as embeddings, audio, and video. The library runs natively in Python or Java environments, so it can be used with Pandas, Dask, Modin, Ray, Apache Spark, and many other data storage and processing tools. In fact, with over 35 integrations available today and more in progress, it’s safe to say that whylogs can work with whatever tool stack you’re using.

What can I use whylogs for?

Setting up whylogs takes less time than brewing a cup of coffee. Simply pip install whylogs and then, in your Python environment, run:

```python
import whylogs as why

results = why.log(pandas_df)  # profile a pandas DataFrame you already have
profile = results.profile()   # the whylogs profile itself
```

After generating a whylogs profile, you can:

Track changes in your dataset

Generate data constraints to know whether your data looks the way it should

Quickly visualize key summary statistics about your datasets

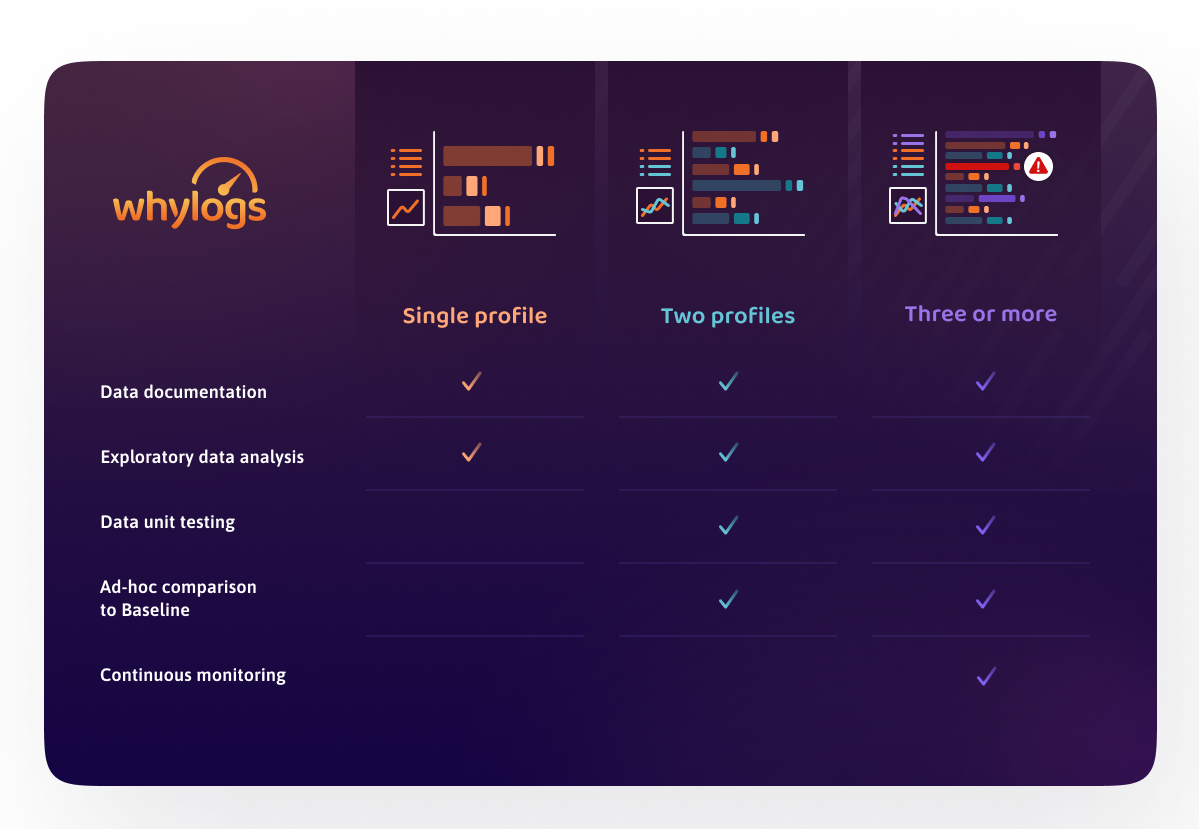

These three functionalities unlock a variety of use cases for data scientists, data engineers, and machine learning engineers:

Detect data drift in model input features

Detect training-serving skew, concept drift, and model performance degradation

Validate data quality in model inputs or in a data pipeline

Perform exploratory data analysis of massive datasets

Track data distributions & data quality for ML experiments

Enable data auditing and governance across the organization

Standardize data documentation practices across the organization

And more
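As a rough illustration of the first use case, drift detection comes down to comparing the distribution of a feature at training time with its distribution in production. The sketch below computes a two-sample Kolmogorov-Smirnov statistic in pure Python on raw values. It is an assumption-laden toy: whylogs compares profiles rather than raw data, and the function name, sample data, and threshold here are all made up for the example.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Largest gap between the two empirical CDFs (0 = identical, 1 = disjoint)."""
    ref, cur = sorted(reference), sorted(current)
    gap = 0.0
    for x in set(ref) | set(cur):
        ref_cdf = bisect_right(ref, x) / len(ref)
        cur_cdf = bisect_right(cur, x) / len(cur)
        gap = max(gap, abs(ref_cdf - cur_cdf))
    return gap


# Hypothetical "age" feature at training time and in two serving batches
training_ages = [23, 31, 35, 40, 44, 52, 58, 61]
serving_same = [25, 30, 36, 41, 45, 50, 57, 63]
serving_shifted = [55, 58, 60, 62, 64, 67, 70, 72]

DRIFT_THRESHOLD = 0.5  # illustrative cutoff, not a recommended default

for name, batch in [("same", serving_same), ("shifted", serving_shifted)]:
    score = ks_statistic(training_ages, batch)
    print(name, score, "drift" if score > DRIFT_THRESHOLD else "ok")
```

In practice the threshold depends on sample size and on how sensitive the downstream model is to the feature, so treat the cutoff as something to tune rather than a constant.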

What’s new with whylogs v1?

With the launch of whylogs v1, WhyLabs released a host of new features and functionalities, improving the library in four key development areas:

API simplification: profile data with just one line of code

Profile constraints: automatically generate constraints for data validation

Profile visualizer: visualize all key statistics of a dataset

Performance improvements: profile 1M rows/second

API Simplification

Generating whylogs profiles takes a single line of code. Logging your data is as easy as running results = why.log(pandas_df) in your Python environment.

Data scientists and ML engineers will find it even easier to log data with the simplified API. This means higher data quality, more ML models reliably deployed in production, and more best practices followed.

Profile Constraints

With profile constraints, you can save hours of extra work by preventing data bugs before they have an opportunity to propagate throughout the entire data pipeline. Simply define tests for your data and get alerted if data doesn’t look the way you expect it to. This enables data unit testing and data quality validation.

Constraints might look like:

I want to get notified if a value in my “credit score” column < 300 or > 850

I want to get notified if my “name” column has integers in it

I want to get notified if my “user_id” column has duplicate values
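The three rules above can be sketched as plain Python checks over a list of rows. This is not the whylogs constraints API; `check_constraints` and the sample rows are hypothetical and only show what each rule tests for.

```python
def check_constraints(rows):
    """Return human-readable violations of the three example rules."""
    violations = []
    seen_ids = set()
    for i, row in enumerate(rows):
        # Rule 1: credit score must fall within 300-850
        score = row["credit score"]
        if score < 300 or score > 850:
            violations.append(f"row {i}: credit score {score} outside 300-850")

        # Rule 2: the name column must not contain integers
        if isinstance(row["name"], int):
            violations.append(f"row {i}: name {row['name']!r} is an integer")

        # Rule 3: user_id values must be unique
        if row["user_id"] in seen_ids:
            violations.append(f"row {i}: duplicate user_id {row['user_id']}")
        seen_ids.add(row["user_id"])
    return violations


rows = [
    {"user_id": 1, "name": "Ada", "credit score": 720},
    {"user_id": 2, "name": 42, "credit score": 910},
    {"user_id": 1, "name": "Grace", "credit score": 640},
]

for violation in check_constraints(rows):
    print(violation)
```

Running checks like these at the start of a pipeline stage is what turns them into data unit tests: a bad batch is flagged before anything downstream consumes it.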

Better yet, setting up constraints is even easier with the generate_constraints() function. By calling this function on a whylogs profile, users can automatically generate a suite of constraints based on the data in that profile, and then check every future profile against that suite of constraints.
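To show the idea behind generating constraints automatically, the sketch below derives a min/max range rule for each numeric column from reference data and then checks a new batch against those rules. It is a conceptual stand-in, not how generate_constraints() is implemented; both helper functions and the data are hypothetical.

```python
def suggest_constraints(reference):
    """Derive a (min, max) range rule per numeric column from reference data."""
    return {col: (min(vals), max(vals)) for col, vals in reference.items()}


def failed_constraints(constraints, batch):
    """List the columns with at least one value outside the suggested range."""
    failures = []
    for col, (low, high) in constraints.items():
        values = batch.get(col, [])
        if any(v < low or v > high for v in values):
            failures.append(col)
    return failures


# Reference data, e.g. the dataset the model was trained on
reference = {"credit_score": [310, 580, 720, 845], "age": [21, 34, 67]}
constraints = suggest_constraints(reference)

# A later batch with one out-of-range credit score
new_batch = {"credit_score": [400, 900], "age": [25, 40]}
print(failed_constraints(constraints, new_batch))
```

Rules suggested this way are only as good as the reference data, so it is worth reviewing them before relying on them for alerts.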

To get started with constraints, check out this example notebook.

Profile Visualizer

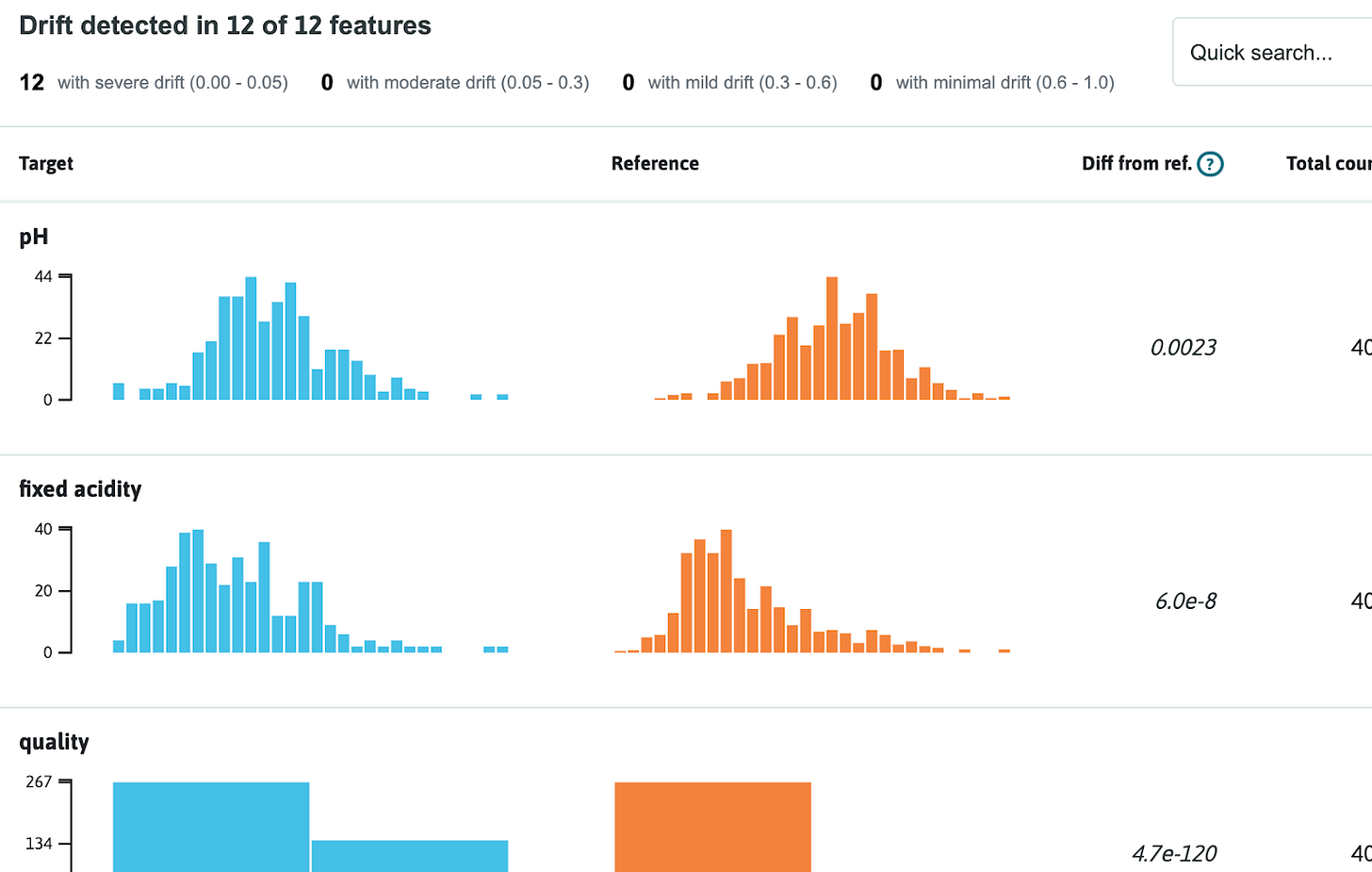

In addition to being able to automatically get notified about potential issues in data, it’s also useful to be able to inspect your data visually. With the profile visualizer, you can generate interactive reports about your profiles (either a single profile or comparing two profiles) directly in your Jupyter notebook environment. This enables exploratory data analysis, data drift detection, and data observability.

The profile visualizer lets you create some useful visualizations of your data:

Summary drift report: an interactive feature-by-feature breakdown of two profiles with matching schemas

Feature statistics report: a detailed summary of a single feature from a single profile

Distribution drift histogram: high fidelity visualization overlaying the distributions of a single feature from two profiles
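The core of a distribution drift histogram is binning both datasets on the same edges so the bars can be overlaid. Here is a minimal text-only sketch of that step in pure Python. The profile visualizer produces interactive reports from profiles, whereas this toy works on raw values, and the `shared_histogram` helper is hypothetical.

```python
def shared_histogram(reference, current, bins=5):
    """Bin both samples on the same edges so their counts are comparable."""
    low = min(min(reference), min(current))
    high = max(max(reference), max(current))
    width = (high - low) / bins or 1.0  # avoid zero width if all values are equal

    def counts(values):
        out = [0] * bins
        for v in values:
            # Clamp so the maximum value lands in the last bin
            idx = min(int((v - low) / width), bins - 1)
            out[idx] += 1
        return out

    edges = [low + i * width for i in range(bins + 1)]
    return edges, counts(reference), counts(current)


reference_values = [1, 2, 2, 3, 3, 3, 4]
current_values = [3, 4, 4, 5, 5, 5, 6]

edges, ref_counts, cur_counts = shared_histogram(reference_values, current_values)

# Side-by-side text bars: '#' for the reference data, '*' for the current data
for i in range(len(ref_counts)):
    print(f"{edges[i]:>4.1f} | {'#' * ref_counts[i]:<5} | {'*' * cur_counts[i]}")
```

When the two sets of bars pile up in different bins, as they do here, the feature's distribution has shifted between the two datasets.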

To learn more about the profile visualizer, check out this example notebook.

Performance Improvements

With the latest performance improvements in v1, you can now profile 1M rows per second. Across all benchmarks, whylogs v1 showed a more than 500x improvement over previous versions when profiling large datasets. Wow!

The secret to this performance improvement is vectorization; the library now utilizes lightning-fast C performance for data summarization. The time it takes to profile a dataset grows sub-linearly, so profiling larger datasets takes less time per row than profiling smaller ones, while smaller datasets are still easily profiled in under a second. With such performance, whylogs will serve teams who work with data of any size, from a few million rows per week to billions of transactions per minute.

Conclusion

The whylogs project is the open-source standard for data logging, enabling applications that range from data quality validation to ML model monitoring. With whylogs v1, users get more value from the library than ever before.

You can get started with the library or check out the whylogs GitHub to learn more.