📝 Guest post: How to Measure Your GPU Cluster Utilization, and Why That Matters

In this article, Run:AI’s team introduces rntop, a new open-source tool that measures GPU cluster utilization. Learn why that metric matters to data scientists as well as to IT leaders who control hardware budgets.

Why measure GPU cluster utilization?

Ask any data scientist what they think their GPU cluster’s utilization is, and they’ll probably say at least 60%. When AI teams and models begin to scale, it can certainly feel like compute resources are always in use, which is extremely frustrating for data scientists who are eager to train, validate and test their models. As one customer recently put it, “We need a scheduler…otherwise, we might have blood on the floor as users will fight for the GPUs.” Yikes. But the truth is, most GPU clusters are at less than 20% utilization.

Why the disconnect between guesstimate and reality? It comes down to a mismatch between allocation (the amount or portion of a resource assigned to a particular user) and utilization (the amount actually and effectively in use). Without a tool, it’s almost impossible to measure GPU cluster utilization accurately, even for the most advanced teams running AI in production. For example, when we met autonomous-vehicle company Wayve, they had 100% of their resources allocated but less than 45% utilized at any given time. Because GPUs were statically assigned to researchers, nobody else could use a researcher’s GPUs while they sat idle, which created the illusion that the GPUs available for model training were at capacity. Meanwhile, if IT has no visibility into utilization, they might misdiagnose the problem and assume it’s time to buy more hardware. Over time, you can end up with an ever-growing cluster of expensive GPUs and very little ROI to show for it.

We open-sourced rntop so that IT and DL teams can know for sure whether they have a shortage of compute resources or just inefficient scheduling. If you’ve ever wondered whether you’re getting all you can from your GPUs, follow this tutorial: it takes just two minutes to set up and start measuring your cluster.

What is rntop?

rntop (pronounced “run top”), available on GitHub, monitors GPU utilization anywhere, anytime. It uses NVIDIA’s software (nvidia-smi in particular) to monitor the GPUs on each node, and does so on multiple nodes across a cluster. It then collects the monitoring information from every node and calculates metrics for the entire cluster. For example, available and used GPU memory are calculated by summing the results from all the nodes, while GPU utilization is calculated by averaging the utilization of all the GPUs in the cluster. The current version requires only SSH access to the nodes and can be launched with Docker, without installing anything.
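To make that aggregation concrete, here is a minimal Bash sketch of the same idea: query each node with nvidia-smi over SSH, then sum memory and average utilization across every GPU. It is an illustration under our own assumptions, not rntop’s actual code. The function names query_host and cluster_summary are hypothetical; the --query-gpu and --format options are standard nvidia-smi flags.

```shell
#!/usr/bin/env bash
# Illustrative sketch only; NOT rntop's implementation.

# Query every GPU on one host over SSH (hypothetical helper).
# Each output line has the form "<util %>, <mem used MiB>, <mem total MiB>".
query_host() {
  ssh -o BatchMode=yes "$1" \
    nvidia-smi --query-gpu=utilization.gpu,memory.used,memory.total \
               --format=csv,noheader,nounits
}

# Read per-GPU lines on stdin (from any number of hosts), sum memory
# across all GPUs and average utilization across all GPUs.
cluster_summary() {
  awk -F', *' '
    NF >= 3 { gpus++; util += $1; used += $2; total += $3 }
    END {
      if (gpus == 0) { print "no GPUs reported"; exit 1 }
      printf "gpus=%d util_avg=%.1f%% mem_used=%dMiB mem_total=%dMiB\n",
             gpus, util / gpus, used, total
    }'
}

# Usage (placeholder hostnames):
#   for h in gpu-node-1 gpu-node-2; do query_host "$h"; done | cluster_summary
```

Because the average is taken per GPU rather than per node, a node with eight GPUs weighs eight times as much as a node with one.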

rntop Tutorial

Preconfiguration

rntop uses SSH connections for monitoring the remote GPU machines, so you must have SSH access to all the machines you want to monitor.

Password authentication is not supported at the moment, so set up key-based SSH authentication if you haven’t already.

You can verify the SSH connection to a GPU machine by running:

ssh user@server nvidia-smi
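If you plan to monitor many machines, you can run a variant of that check across all of them at once. The Bash function below, check_hosts, is a hypothetical helper and not part of rntop. It passes ssh’s BatchMode=yes option so that a host that would prompt for a password fails immediately instead of hanging, which is a quick way to spot machines where key-based login is not set up yet. The usage comments also show the standard OpenSSH commands for creating and installing a key.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helper (not part of rntop): confirm that each
# host accepts key-based SSH and can run nvidia-smi.
# BatchMode=yes makes ssh fail instead of prompting for a password,
# mirroring rntop's lack of password support.
check_hosts() {
  local host failed=0
  for host in "$@"; do
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" nvidia-smi -L >/dev/null 2>&1; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  # Non-zero if any host failed.
  return "$failed"
}

# Usage (placeholder hosts):
#   check_hosts john@gpu-node-1 gpu-node-2
# If a host fails, key-based login can typically be set up with:
#   ssh-keygen -t ed25519        # create a key pair if you have none
#   ssh-copy-id john@gpu-node-1  # install the public key on the host
```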

Execution - interactive mode

Run rntop with the following command, replacing the … placeholder with the hostnames or IP addresses of your machines:

docker run -it --rm -v $HOME/.ssh:/root/.ssh runai/rntop …

Passing a username

You can specify the username used for the SSH connections.

If you use the same username on all machines, pass it with the --username argument. If you use different usernames on different machines, include each one in the hostname (e.g. john@server).

Note that the command mounts your SSH directory from the host into the container so that rntop can use your SSH configuration file and keys to establish the SSH connections.

Output file

rntop can save metrics to a file, with outputs looking like the below:

You can then load this file into Board for rntop to visualize the exported information, such as cluster utilization and memory usage (as shown in the last section of this tutorial).

To save metrics to a file, pass a path with the --output argument. rntop creates the file if it does not exist, or appends to it if it already exists from previous runs.

Make sure the file is accessible from the host so that you can easily upload it to Board for rntop.

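For example, use the following command, replacing the … placeholder with the hostnames or IP addresses of your machines. It mounts $HOME/.rntop on the host at /host inside the container, so the log file ends up at $HOME/.rntop/rntop.log on the host: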

docker run -it --rm -v $HOME/.ssh:/root/.ssh -v $HOME/.rntop:/host runai/rntop --output /host/rntop.log …

Running as a daemon

When saving information to an output file, you may want to run rntop for long periods, such as days or even weeks, to see how utilization changes over time. To do so, rntop must not be tied to a specific terminal session, so that it keeps running after the session exits.

We recommend using tmux for this.

Make sure you are running on a machine that will not shut down, restart or hibernate. A personal laptop is not a good choice.

Here is an example of how to use tmux for running rntop in the background:

Create a new tmux session named rntop with the command:

tmux new -s rntop

Inside the session, run rntop with the --output argument as described above. Make sure you save the output file to a directory that is accessible from the host.

Detach from the session by pressing Ctrl-b, then d. rntop keeps running in the background.

To check on it later, reattach with the command:

tmux attach-session -t rntop
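If you prefer to start everything in one step, tmux can also create a session that is detached from the start and runs a given command. The function below, start_rntop_session, is a hypothetical convenience wrapper around the output-file command from the previous section, not part of rntop. It also creates $HOME/.rntop in advance so the directory is owned by you; on a typical (rootful) Docker setup, a missing bind-mount source directory is otherwise created as root. The hostnames in the usage comment are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of rntop): start rntop inside a detached
# tmux session named "rntop", writing metrics to $HOME/.rntop/rntop.log.
start_rntop_session() {
  if [ "$#" -eq 0 ]; then
    echo "usage: start_rntop_session HOST [HOST...]" >&2
    return 2
  fi

  # Create the host-side output directory ourselves; otherwise Docker
  # creates the missing bind-mount source as root.
  mkdir -p "$HOME/.rntop"

  # Build the same docker command used in the output-file example,
  # shell-quoting every argument so tmux can run it as one string.
  local cmd
  printf -v cmd '%q ' docker run -it --rm \
    -v "$HOME/.ssh:/root/.ssh" \
    -v "$HOME/.rntop:/host" \
    runai/rntop --output /host/rntop.log "$@"

  # -d: create the session without attaching; -s: session name.
  tmux new-session -d -s rntop "$cmd"
}

# Usage (placeholder hostnames):
#   start_rntop_session gpu-node-1 gpu-node-2
#   tmux attach-session -t rntop   # check on it later; Ctrl-b, then d, to detach
```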

Board for rntop

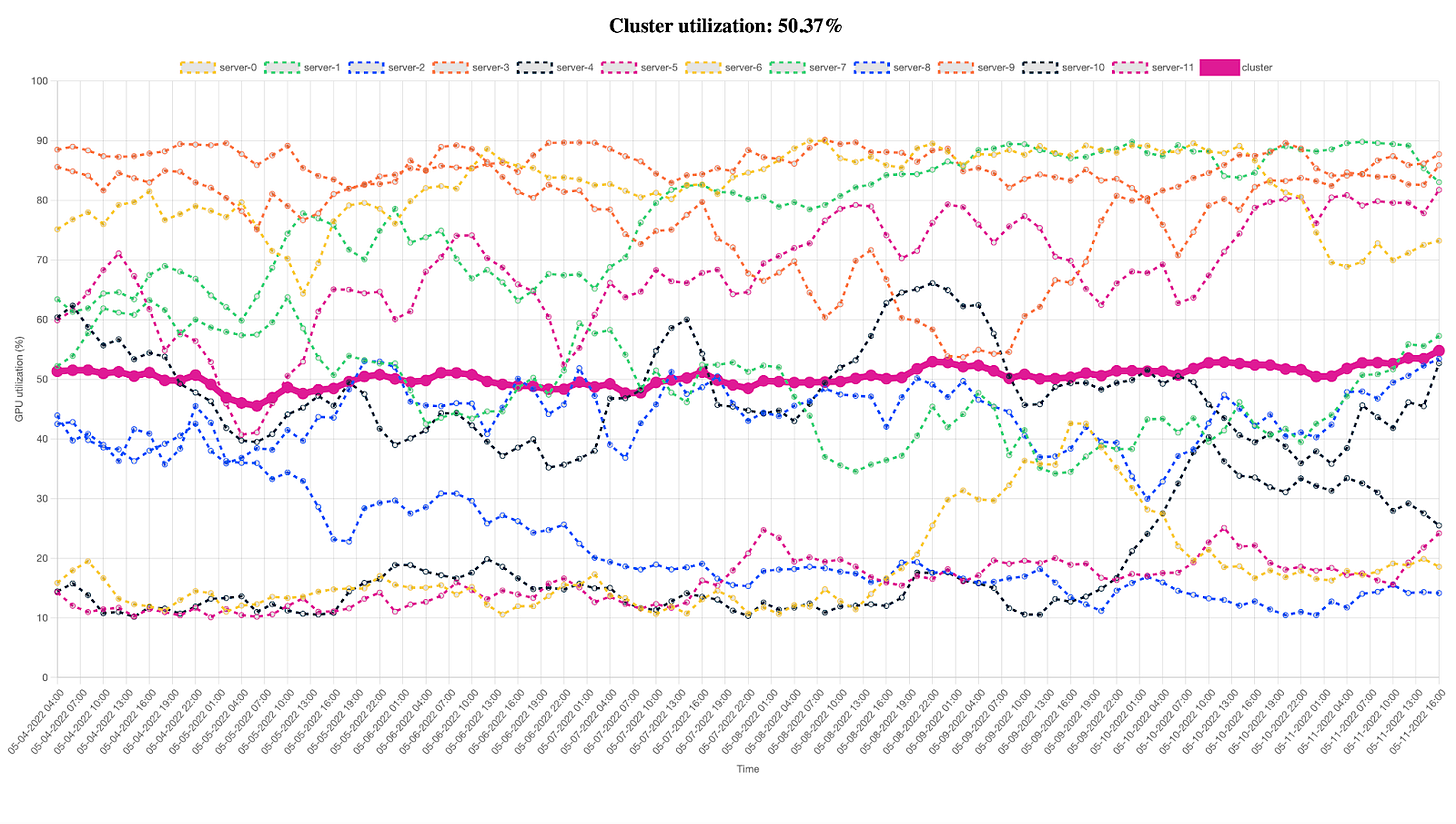

Once rntop has saved information to an output file, you can go to Board for rntop, load the file and see an analysis of what rntop recorded over time, such as GPU utilization across the cluster.

In the example above, Board for rntop shows that this cluster’s utilization is currently just over 50%. It also shows how utilization varies across the individual servers as well as for the cluster as a whole.

Did you find rntop useful? Please give us a star on GitHub!