📝 Guest post: Getting ML data labeling right*

What’s the best way to gather and label your data? There’s no simple answer, as every project is unique. But you can get ideas from a case similar to yours. In this article, Toloka’s team shares its data labeling experience through three case studies.

Introduction

The number of AI products is skyrocketing year after year. Just a few years ago, AI and ML were accessible mostly to the industry’s biggest players – smaller companies didn’t have the resources to build and release quality AI products. That landscape has changed, and even small enterprises have entered the game.

While building production-ready AI solutions is a long process, it starts with gathering and labeling the data used to train ML models.

What’s the best way to make that happen? There’s no universal answer, since every project is unique, so this article walks through three case studies we’ve worked on instead.

1. Audio transcription

For this project, we needed to transcribe a large volume of voice recordings to train an Automatic Speech Recognition (ASR) model. We split the recordings into short audio clips and asked the Toloka crowd to transcribe what they heard. The interface was similar to the one shown below.

Annotators could easily pause the audio as needed. To ensure quality, we sent each clip to multiple annotators from the crowd and accepted a transcription only when a certain number of their answers matched. The pipeline below visualizes that process.
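The matching rule can be sketched in a few lines of Python. This is a minimal illustration, not Toloka's actual pipeline: `normalize` and `accept_transcription` are hypothetical helpers, and we assume that two transcriptions "match" when they are identical after lowercasing, stripping punctuation, and collapsing whitespace. The `min_matches` parameter stands in for the "certain number" of matches, which the project doesn't specify.

```python
import string
from collections import Counter
from typing import Optional

# Hypothetical helpers; "match = identical after normalization" is an assumption.


def normalize(text: str) -> str:
    """Lowercase, drop ASCII punctuation, and collapse runs of whitespace."""
    text = text.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(text.split())


def accept_transcription(transcripts: list[str], min_matches: int = 3) -> Optional[str]:
    """Return the most common normalized transcription of one clip if at
    least `min_matches` annotators agree on it, otherwise None."""
    if not transcripts:
        return None
    counts = Counter(normalize(t) for t in transcripts)
    best, count = counts.most_common(1)[0]
    return best if count >= min_matches else None


answers = ["Turn on the lights.", "turn on the lights", "Turn on the light", "turn  on the lights!"]
print(accept_transcription(answers, min_matches=3))  # turn on the lights
print(accept_transcription(answers, min_matches=4))  # None
```

A clip that returns `None` could be sent to more annotators or reviewed by hand. Exact matching is strict, so a real pipeline might instead tolerate small differences, for example by comparing word error rate between answers.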

The annotators who took part in the project also went through training. We recommend including this step in almost any project. Training can consist of tasks similar to the real ones, with a careful explanation whenever an annotator makes a mistake. That teaches annotators exactly what they need to do.
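A training task with feedback might look like the following sketch. The `TrainingTask` structure, its field names, and the case- and whitespace-insensitive comparison are all hypothetical, as is the example rule about writing numbers as words; the point is only that each training task carries a known answer plus an explanation that is shown when the annotator gets it wrong.

```python
from dataclasses import dataclass

# Hypothetical training-task structure; field names and matching rule are assumptions.


@dataclass
class TrainingTask:
    clip_id: str
    reference: str    # the correct transcription
    explanation: str  # shown to the annotator when their answer is wrong


def normalize(text: str) -> str:
    """Comparison key that ignores case and extra whitespace."""
    return " ".join(text.lower().split())


def check_training_answer(task: TrainingTask, answer: str) -> tuple[bool, str]:
    """Return (passed, feedback) for one training answer."""
    if normalize(answer) == normalize(task.reference):
        return True, "Correct."
    return False, f"Expected {task.reference!r}. {task.explanation}"


task = TrainingTask(
    clip_id="clip-001",
    reference="set an alarm for seven",
    explanation="Write numbers out as words, as the (hypothetical) instructions require.",
)
print(check_training_answer(task, "Set an alarm for 7"))
```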

2. Evaluating translations

Another interesting project we worked on was evaluating translation systems for a major machine translation conference. Each annotator was given a source text and a couple of candidate translations and asked how well each translation conveyed the meaning of the original text, using the interface shown below.

On this project, we were very careful about crowd selection, starting with a mandatory language test and exam.

During the evaluation, we constantly monitored participant performance using control tasks with known answers. These tasks were mixed in with regular tasks so annotators couldn’t tell them apart. If an annotator’s accuracy on control tasks fell below 80%, they had to pass the initial test again.
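The monitoring loop can be sketched as below. Only the 80% threshold comes from the project; everything else is an assumption for illustration: the hypothetical `build_task_page` and `ControlMonitor` names, exact-match scoring of control answers, measuring quality as plain accuracy, and a `min_control_tasks` minimum so that nobody is sent back to the test after one or two unlucky answers.

```python
import random
from dataclasses import dataclass

QUALITY_THRESHOLD = 0.8  # the 80% bar from the project


@dataclass
class AnnotatorStats:
    correct: int = 0
    total: int = 0

    @property
    def accuracy(self) -> float:
        return self.correct / self.total if self.total else 1.0


def build_task_page(regular: list[str], control: list[str], n_control: int,
                    rng: random.Random) -> list[str]:
    """Mix n_control randomly chosen control tasks into the regular tasks and
    shuffle, so annotators can't tell which tasks are being checked."""
    page = list(regular) + rng.sample(control, n_control)
    rng.shuffle(page)
    return page


class ControlMonitor:
    def __init__(self, control_answers: dict[str, str], min_control_tasks: int = 10):
        self.control_answers = control_answers      # task_id -> known answer
        self.min_control_tasks = min_control_tasks  # assumed minimum before judging anyone
        self.stats: dict[str, AnnotatorStats] = {}

    def record(self, annotator: str, task_id: str, answer: str) -> None:
        """Score a control answer; regular (non-control) tasks are ignored."""
        if task_id not in self.control_answers:
            return
        s = self.stats.setdefault(annotator, AnnotatorStats())
        s.total += 1
        s.correct += int(answer == self.control_answers[task_id])

    def needs_retest(self, annotator: str) -> bool:
        """True once the annotator has enough control answers and their
        accuracy is below the threshold."""
        s = self.stats.get(annotator)
        if s is None or s.total < self.min_control_tasks:
            return False
        return s.accuracy < QUALITY_THRESHOLD
```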

As a result of this project, we annotated more than one hundred language pairs, including common ones as well as more exotic pairs such as Xhosa – Zulu or Bengali – Hindi. These are especially challenging because of the limited number of available annotators.

3. Search relevance

One of the most popular use cases for Toloka is offline metrics evaluation of search relevance. Offline evaluation is needed because online feedback, such as dwell time and clicks, is implicit, accumulates over the long term, and is hard to interpret. Offline metrics evaluation lets us focus on individual search characteristics and receive explicit signals about search relevance.

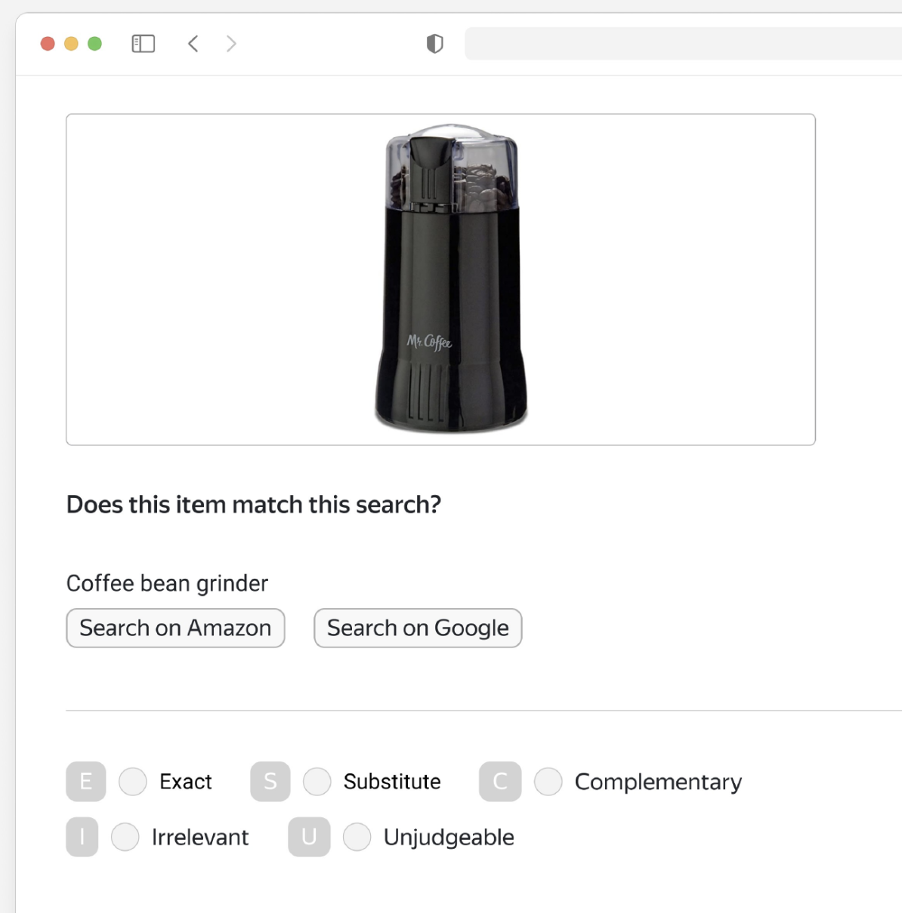

To do that, we show annotators a search query and a picture of a potentially matching item and ask them to rate relevance. The interface we use for this type of task is shown below.

We also add buttons that run the query on Google or Amazon. They help when a query is obscure or hard to understand, because the annotator can follow the links and see which products those search engines return for it.
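Generating the links behind those buttons is a small URL-building step. This sketch assumes the public search URL patterns `https://www.google.com/search?q=…` and `https://www.amazon.com/s?k=…` (regional Amazon domains differ); `search_links` is a hypothetical helper, and `urllib.parse.quote_plus` from the standard library escapes the query so spaces and symbols survive.

```python
from urllib.parse import quote_plus

# Assumed public search URL patterns; regional sites may use other domains.
SEARCH_URLS = {
    "Google": "https://www.google.com/search?q={}",
    "Amazon": "https://www.amazon.com/s?k={}",
}


def search_links(query: str) -> dict[str, str]:
    """Build one search link per engine for the query shown to the annotator."""
    q = quote_plus(query)  # spaces become '+', '&' becomes '%26', etc.
    return {name: pattern.format(q) for name, pattern in SEARCH_URLS.items()}


print(search_links("usb c hub"))
```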

Annotators often pick surprising answers. Intuitively, many clients use a rating scale like the one in the red box below when designing tasks.

However, our experiments have shown that it is better to use the categories in the green box: exact match, possible replace, accessory, and irrelevant. The catch is that we then have to define these categories clearly in the instructions and during training.

In this project, we had to provide detailed descriptions of the accessory and possible replace classes. Additionally, each task was sent to multiple annotators whose quality was constantly checked with hidden control tasks. Our experience shows that quality control checks are a crucial part of every annotation project, and we always include them in the projects we design.
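One common way to combine overlapping labels is a weighted majority vote, sketched below. It is a swapped-in technique for illustration, not necessarily the aggregation Toloka uses. The `Relevance` values mirror the four categories above; the `aggregate` function and the choice to weight each vote by the annotator's accuracy on hidden control tasks are assumptions.

```python
from collections import defaultdict
from enum import Enum


class Relevance(Enum):
    EXACT_MATCH = "exact match"
    POSSIBLE_REPLACE = "possible replace"
    ACCESSORY = "accessory"
    IRRELEVANT = "irrelevant"


def aggregate(votes: list[tuple[str, Relevance]], quality: dict[str, float]) -> Relevance:
    """Weighted majority vote over (annotator, label) pairs for one task.

    Each vote counts with a weight equal to the annotator's control-task
    accuracy; annotators without a score get weight 0. On a tie, the label
    that received its first vote earliest wins (dict insertion order)."""
    scores: dict[Relevance, float] = defaultdict(float)
    for annotator, label in votes:
        scores[label] += quality.get(annotator, 0.0)
    if not scores or max(scores.values()) == 0.0:
        raise ValueError("no weighted votes to aggregate")
    return max(scores, key=lambda label: scores[label])


votes = [("a", Relevance.EXACT_MATCH), ("b", Relevance.ACCESSORY), ("c", Relevance.ACCESSORY)]
print(aggregate(votes, {"a": 0.95, "b": 0.6, "c": 0.5}))
```

With these weights the two weaker annotators together (0.6 + 0.5) outweigh the single strong one (0.95); lowering annotator `b` to 0.4 would flip the result to exact match.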

Summary

This article has covered three case studies from our data labeling experience. We have shown you examples of data annotation projects for audio transcription, MT evaluation, and search engine evaluation. We hope they’ve given you a taste of how we approach this problem and an idea of how you can prepare data for your own ML project. But since every project is different, you’re welcome to join our Slack channel if you have a challenge you’d like to discuss.