📝 Guest post: Fast Access to Feature Data for AI Applications with Hopsworks

In this article, Hopsworks’s team dives into the details of the requirements of AI-powered online applications and how the Hopsworks Feature Store abstracts away the complexity of a dual storage system.

Enterprise Machine Learning models are most valuable when they power part of a product by guiding user interaction. Often these ML models are applied to an entire database of entities, for example users identified by a unique primary key. An example of such an offline application is predictive Customer Lifetime Value, where predictions can be precomputed in batches at regular intervals (nightly, weekly) and then used to select target audiences for marketing campaigns. More advanced AI-powered applications, such as recommender systems, guide user interaction in real time. For these online applications, some part of the model input (the feature vector) is available in the application itself, such as the last button clicked, while other parts rely on historical or contextual data and have to be retrieved from backend storage, such as the number of times the user clicked the button in the last hour or whether the button is a popular one.

Machine Learning Models in Production

Batch applications with (analytical) models are largely similar to model training itself: they require efficient access to the large volumes of data that will be scored. Online applications, in contrast, require low-latency access to the latest feature values for a given primary key (potentially multi-part), which are then sent as a feature vector to the model serving instance for inference.
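The online case can be sketched in a few lines of plain Python. This is a minimal illustration, not the Hopsworks API: the in-memory `ONLINE_STORE`, the feature names, and `build_feature_vector` are all hypothetical stand-ins for a real key-value lookup.

```python
from typing import Dict, List

# Hypothetical online store: primary key -> latest precomputed feature values.
ONLINE_STORE: Dict[int, Dict[str, float]] = {
    42: {"clicks_last_hour": 7.0, "button_popularity": 0.83},
}

# The order in which the (hypothetical) model expects its inputs.
FEATURE_ORDER: List[str] = ["last_button_id", "clicks_last_hour", "button_popularity"]


def build_feature_vector(user_id: int, request_features: Dict[str, float]) -> List[float]:
    """Merge features known to the application with features looked up by primary key."""
    stored = ONLINE_STORE.get(user_id)
    if stored is None:
        raise KeyError(f"no online features for primary key {user_id}")
    merged = {**stored, **request_features}
    # Emit values in the fixed order the model was trained with.
    return [merged[name] for name in FEATURE_ORDER]


# The application only knows the last button clicked; the rest is retrieved.
vector = build_feature_vector(42, {"last_button_id": 3.0})
```

The resulting list is what would be sent to the model serving instance for inference.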

To the best of our knowledge, there is no single database accommodating both of these requirements at high performance. Therefore, data teams have tended to keep the data for training and batch inference in data lakes, while ML engineers built microservices that replicate the feature engineering logic for online applications.

This, however, introduces unnecessary obstacles that keep both Data Scientists and ML engineers from iterating quickly, and it significantly increases the time to production for ML models:

Data science perspective: Tight coupling of data and infrastructure through microservices, resulting in Data Scientists not being able to move from development to production and not being able to reuse features.

ML engineering perspective: Large engineering effort to guarantee consistent access to data in production as it was seen by the ML model during the training process.

Hopsworks Feature Store: A Transparent Dual Storage System

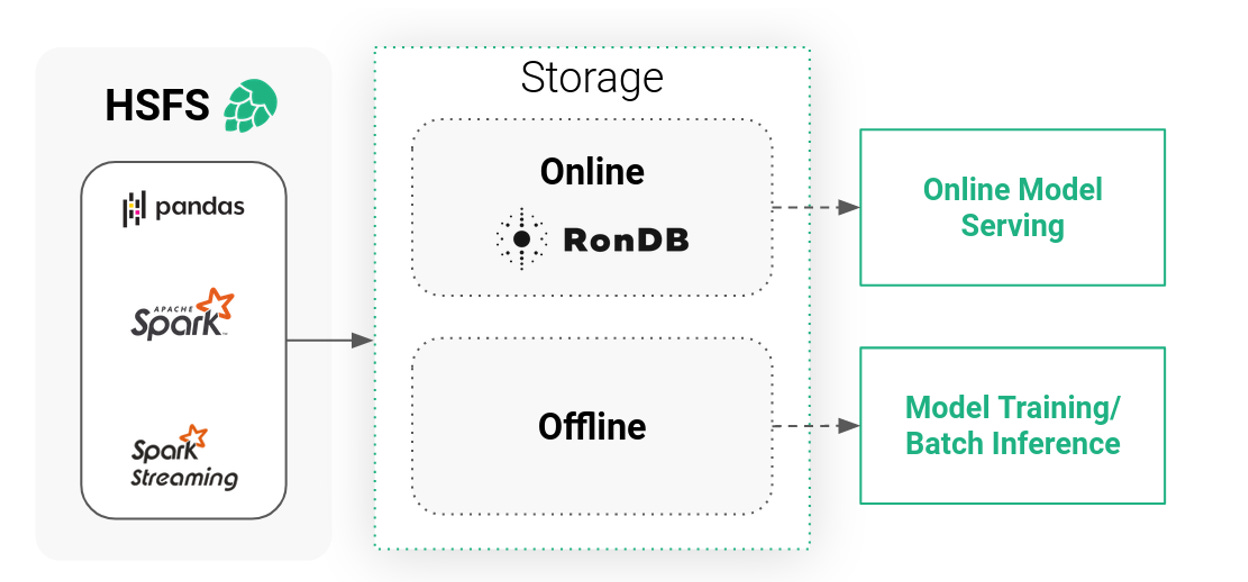

The Hopsworks Feature Store is a dual storage system consisting of a high-bandwidth (low-cost) offline store and a low-latency online store. The offline store is a mix of Apache Hudi tables on our HopsFS file system (backed by S3 or Azure Blob Storage) and external tables (such as Snowflake, Redshift, etc.), which together provide access to large volumes of feature data for training or batch scoring. In contrast, the online store is a low-latency key-value database that stores only the latest value of each feature along with its primary key. The online feature store thereby acts as a low-latency cache for these feature values.
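To make the split concrete, here is a toy in-memory model of a dual store. It is only a sketch of the idea: the class `DualFeatureStore` and its methods are hypothetical names, and a list and a dictionary stand in for the real offline tables and key-value database.

```python
from typing import Any, Dict, List


class DualFeatureStore:
    """Toy dual store: full history offline, latest value per key online."""

    def __init__(self, primary_key: str) -> None:
        self.primary_key = primary_key
        self.offline: List[Dict[str, Any]] = []        # append-only history
        self.online: Dict[Any, Dict[str, Any]] = {}    # latest row per primary key

    def insert(self, rows: List[Dict[str, Any]]) -> None:
        for row in rows:
            self.offline.append(dict(row))                   # keep every version
            self.online[row[self.primary_key]] = dict(row)   # overwrite with latest

    def read_offline(self) -> List[Dict[str, Any]]:
        """Bulk read for training or batch scoring."""
        return list(self.offline)

    def get_online(self, key: Any) -> Dict[str, Any]:
        """Single-key lookup for online inference."""
        return self.online[key]


store = DualFeatureStore(primary_key="user_id")
store.insert([
    {"user_id": 1, "clicks": 3},
    {"user_id": 2, "clicks": 1},
    {"user_id": 1, "clicks": 5},  # newer value for user 1
])
```

After these inserts the offline side holds all three rows, while the online side holds one row per user, with user 1 mapped to the newer value.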

For this system to be valuable for data scientists, to improve the time to production, and to provide a good experience for the end user, it needs to meet some requirements:

Consistent Features for Training and Serving: In ML it’s of paramount importance to replicate the exact feature engineering logic for features in production as was used to generate the features for model training.

Feature Freshness: A low latency, high throughput online feature store is only beneficial if the data stored in it is kept up-to-date. Feature freshness is defined as the end-to-end latency between an event arriving that triggers a feature recomputation and the recomputed feature being published in the online feature store.
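The freshness definition above reduces to a difference of two timestamps. The sketch below assumes both timestamps are recorded somewhere in the pipeline; the function name, the example timestamps, and the one-second budget are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def feature_freshness(event_arrived_at: datetime, published_at: datetime) -> timedelta:
    """End-to-end latency from event arrival to the feature being served online."""
    if published_at < event_arrived_at:
        raise ValueError("feature cannot be published before its triggering event")
    return published_at - event_arrived_at


# Hypothetical timestamps for one event and the recomputed feature it triggered.
arrived = datetime(2021, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
published = arrived + timedelta(milliseconds=850)

freshness = feature_freshness(arrived, published)
within_budget = freshness <= timedelta(seconds=1)  # assumed freshness budget
```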

Latency: The online feature store must provide near real-time latency and high throughput so that applications can use as many features as possible within their available SLA.

Accessibility: The data needs to be accessible through intuitive APIs, as easily as data is extracted from the offline feature store for training.

The Hopsworks Online Feature Store is built around four pillars in order to satisfy the requirements while scaling to manage large amounts of data:

HSFS API: The Hopsworks Feature Store library is the main entry point to the feature store for developers, and is available for both Python and Scala/Java. HSFS abstracts away the two storage systems, providing a transparent Dataframe API (Spark, Spark Structured Streaming, Pandas) for writing and reading from online and offline storage.

Metadata: Hopsworks can store large amounts of custom metadata in order for Data Scientists to discover, govern, and reuse features, but also to be able to rely on schemas and data quality when moving models to production.

Engine: The online feature store comes with a scalable and stateless service that makes sure your data is written to the online feature store as fast as possible, without write amplification, from either a data stream (Spark Structured Streaming) or static Spark or Pandas Dataframes. By avoiding write amplification, we mean that you do not have to first materialize your features to storage before ingesting them; you can write directly to the feature store.

RonDB: The database behind the online store is the world’s fastest key-value store with SQL capabilities. It not only forms the base for the online feature data but also handles all metadata generated within Hopsworks.

RonDB: The Online Feature Store, Foundation of the File System and Metadata

Hopsworks is built from the ground up around distributed scale-out metadata. This helps to ensure consistency and scalability of the services within Hopsworks as well as the annotation and discoverability of data and ML artifacts.

Since the first release, Hopsworks has been using NDB Cluster (a precursor to RonDB) as the online feature store. In 2020, we created RonDB as a managed version of NDB Cluster, optimized for use as an online feature store.

However, in Hopsworks, we use RonDB for more than just the Online Feature Store. RonDB also stores metadata for the whole Feature Store, including schemas, statistics, and commits. RonDB also stores the metadata of the file system, HopsFS, in which offline Hudi tables are stored. Using RonDB as a single metadata database, we use transactions and foreign keys to keep the Feature Store and Hudi metadata consistent with the target files and directories (inodes). Hopsworks is accessible either through a REST API or an intuitive UI (that includes a Feature Catalog), or programmatically through the Hopsworks Feature Store API (HSFS).
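The role of transactions and foreign keys in keeping metadata consistent with files can be illustrated with a small relational example. This sketch uses SQLite from the Python standard library as a stand-in for RonDB, and the table and column names are hypothetical, not the actual Hopsworks schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

# Hypothetical schema: file system entries and the commits that point at them.
conn.execute("CREATE TABLE inodes (id INTEGER PRIMARY KEY, path TEXT NOT NULL)")
conn.execute(
    """CREATE TABLE feature_group_commits (
           id INTEGER PRIMARY KEY,
           inode_id INTEGER NOT NULL REFERENCES inodes(id) ON DELETE CASCADE,
           committed_on TEXT NOT NULL)"""
)

# One transaction creates the directory entry and its commit metadata together.
with conn:
    conn.execute("INSERT INTO inodes (id, path) VALUES (1, '/fg/clicks_1')")
    conn.execute(
        "INSERT INTO feature_group_commits (inode_id, committed_on) VALUES (1, '2021-01-01')"
    )

# Metadata that points at a non-existent inode is rejected.
try:
    with conn:
        conn.execute(
            "INSERT INTO feature_group_commits (inode_id, committed_on) VALUES (99, '2021-01-02')"
        )
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

# Deleting the inode removes the dependent metadata in the same transaction.
with conn:
    conn.execute("DELETE FROM inodes WHERE id = 1")

remaining = conn.execute("SELECT COUNT(*) FROM feature_group_commits").fetchone()[0]
```

With this layout, metadata can neither reference a missing file nor outlive a deleted one, which is the property the single metadata database is used for here.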

With the underlying RonDB and the needed metadata in place, we were able to build a scale-out, high throughput materialization service to perform the updates, deletes, and writes on the online feature store - we simply named it OnlineFS.

OnlineFS: The Engine for Scalable Online Feature Materialization

OnlineFS is a stateless service using ClusterJ for direct access to the RonDB data nodes. ClusterJ is implemented as a high-performance JNI layer on top of the native C++ NDB API, providing low latency and high throughput. We were able to make OnlineFS stateless because the metadata it needs, such as Avro schemas and feature types, is available in RonDB. Making the service stateless allows us to scale writes to the online feature store up and down by simply adding or removing instances of the service, thereby increasing or decreasing throughput linearly with the number of instances.

The steps that are needed to write data to the online feature store:

Features are written to the feature store as Pandas or Spark Dataframes.

Encode and produce

Consume and decode

Primary-key based upsert
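The steps above can be sketched end to end in plain Python. This is a simplified illustration rather than the OnlineFS implementation: JSON stands in for the Avro encoding mentioned earlier, a `queue.Queue` stands in for the message transport, a dictionary stands in for the online table, and all function names are hypothetical.

```python
import json
import queue
from typing import Any, Dict, List


def encode_and_produce(rows: List[Dict[str, Any]], topic: "queue.Queue[bytes]") -> None:
    """Serialize each feature row and hand it to the transport."""
    for row in rows:
        topic.put(json.dumps(row).encode("utf-8"))


def consume_decode_upsert(
    topic: "queue.Queue[bytes]", table: Dict[Any, Dict[str, Any]], primary_key: str
) -> int:
    """Drain the transport, decode each message, and upsert it by primary key."""
    written = 0
    while True:
        try:
            message = topic.get_nowait()
        except queue.Empty:
            return written
        row = json.loads(message.decode("utf-8"))
        table[row[primary_key]] = row  # insert if new, overwrite if the key exists
        written += 1


topic: "queue.Queue[bytes]" = queue.Queue()
online_table: Dict[Any, Dict[str, Any]] = {}

# Step 1: features arrive as rows (a Dataframe in the real system).
rows = [
    {"user_id": 1, "clicks": 3},
    {"user_id": 2, "clicks": 1},
    {"user_id": 1, "clicks": 4},  # later value for the same key
]
encode_and_produce(rows, topic)                                      # step 2
written = consume_decode_upsert(topic, online_table, "user_id")     # steps 3 and 4
```

Because the consumer keeps no state of its own beyond what it reads from the transport and writes to the table, several copies of it could run side by side, which mirrors the scaling argument made for the stateless service.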

To learn more about each step and about transparency in distributed systems, please continue reading on our blog, where we also provide some benchmarks for quantitative comparison.