🔐 Edge#30: Privacy-preserving machine learning

TheSequence is a convenient way to build and reinforce your knowledge about machine learning and AI.

In this issue:

🧠 we reward the most active participant of our quizzes, and start a new cycle!

we explore privacy-preserving machine learning;

we overview Google’s PATE method for scalable private machine learning;

we deep dive into the PySyft open-source framework for private deep learning.

Enjoy the learning!

💡 ML Concept of the Day: What is privacy-preserving machine learning?

Privacy remains one of the biggest challenges of modern machine learning applications. From social networks to healthcare applications, machine learning models are becoming ubiquitous in scenarios that involve user-sensitive datasets. In traditional supervised learning, these datasets are used in their original form to train a machine learning model that gathers insights from users’ sensitive data. Additionally, models that rely on cleartext training datasets can be vulnerable to a range of data manipulation attacks. As a result, many machine learning techniques are forced to balance the friction between intelligence and privacy. What if there were a middle-ground solution?

Privacy-preserving machine learning (PPML) is a set of techniques that enable the training of machine learning models without relying on private data in its original form. More specifically, PPML methods use a series of data manipulations to protect the sensitive information in training datasets. Most PPML techniques can be classified into two main groups:

Cryptographic Methods: PPML cryptographic methods rely on modern cryptographic protocols, such as secure multi-party computation or homomorphic encryption, to train machine learning models using encrypted datasets. Essentially, a machine learning model is able to perform mathematical operations on a dataset without having access to its cleartext version.

Perturbation Methods: PPML perturbation methods focus on adding random noise to training datasets, or to the results computed from them, in order to prevent attacks such as membership inference. Differential privacy has become one of the most important approaches in this area.
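To make the perturbation idea more concrete, here is a minimal sketch of the Laplace mechanism, a standard building block of differential privacy. It is an illustration, not code from any particular library: the function names `laplace_noise` and `private_count`, the sample ages and the value of epsilon are all assumptions made for this example. Note that the noise here is added to an aggregate query result rather than to the raw records.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a noisy count of the records that match `predicate`.

    Adding or removing one record changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1 / epsilon is used.
    """
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Made-up data, used only for illustration.
rng = random.Random(0)
ages = [23, 35, 41, 52, 67, 29, 38]
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 40 or over: {noisy:.2f}")
```

A smaller epsilon means more noise and stronger privacy, at the cost of a less accurate answer. The true count in this toy dataset is 3, and repeated releases scatter around that value.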

With massive efforts surrounding the protection of private information, PPML methods are likely to become increasingly relevant for the machine learning industry in the next few years. Not surprisingly, we are already seeing an acceleration in the research and development of technologies in the PPML space.

🔎 ML Research You Should Know: Google’s PATE and Scalable Private Learning

In the paper Scalable Private Learning with PATE, researchers from Google, Pennsylvania State University and University of California San Diego presented a method known as Private Aggregation of Teacher Ensembles (PATE) to ensure privacy in training datasets.

The objective: Present a method that can ensure privacy while scaling to large machine learning scenarios.

Why is it so important: PATE has been one of the most influential methods in private machine learning, having been adopted by several frameworks and tools.

Diving deeper: The PATE method is built on an intuitive idea. Imagine that two different classifiers, trained on two different datasets with no training examples in common, agree on how to classify a new input. In that case, we can conclude that their decision does not reveal information about any single training example, which is another way of saying that it preserves the privacy of the training data.

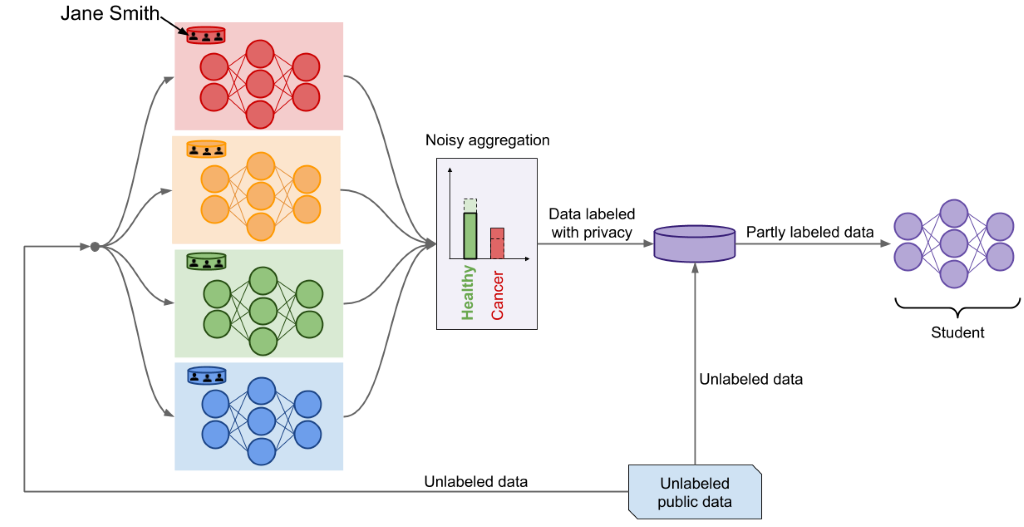

Building on that idea, PATE uses a perturbation technique that structures the learning process using an ensemble of teacher models, which are trained on different partitions of the data, communicating their knowledge to a student model. The PATE framework is based on three fundamental components:

Teacher Models: These models are trained on disjoint subsets of the sensitive data, so no two teachers share a training example.

Aggregation Mechanisms: These components evaluate whether the teacher models reach consensus on an output, and only then transfer that knowledge to the student model.

Student Models: The student model is trained on other, unlabeled data that has been labeled with the aggregated output of the teacher models.
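The interaction between the three components can be sketched in a few lines. The code below is a simplified illustration of the aggregation step, loosely following the noisy-vote idea behind PATE: teachers vote on a label, a noisy consensus check decides whether to answer at all, and the answer is the class with the highest noisy vote count. The function names, the threshold and the noise scales are assumptions chosen for this example, and the sketch performs no privacy accounting.

```python
import random
from collections import Counter


def noisy_counts(votes, num_classes, sigma, rng):
    """Per-class vote counts with independent Gaussian noise added."""
    counts = Counter(votes)
    return [counts.get(c, 0) + rng.gauss(0.0, sigma) for c in range(num_classes)]


def aggregate(votes, num_classes, threshold, sigma_check, sigma_vote, rng):
    """Return a label for the student, or None when teachers lack consensus."""
    top_count = max(Counter(votes).values())
    # Consensus check: only answer if the (noisy) top vote count is high enough.
    if top_count + rng.gauss(0.0, sigma_check) < threshold:
        return None
    # Noisy argmax over the teacher votes.
    noisy = noisy_counts(votes, num_classes, sigma_vote, rng)
    return max(range(num_classes), key=lambda c: noisy[c])


rng = random.Random(0)
# Hypothetical votes from 100 teachers over 3 classes for two unlabeled inputs.
agreeing = [1] * 95 + [0] * 5
split = [0] * 34 + [1] * 33 + [2] * 33

label_agree = aggregate(agreeing, 3, threshold=60, sigma_check=4.0, sigma_vote=2.0, rng=rng)
label_split = aggregate(split, 3, threshold=60, sigma_check=4.0, sigma_vote=2.0, rng=rng)
print(label_agree, label_split)
```

With these made-up numbers, the input on which the teachers strongly agree receives a label, while the input with a three-way split is left unanswered. The student would then be trained only on the inputs that received a label.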

Image credit: Cleverhans

PATE research has gone through several iterations and remains one of the most influential lines of research in private machine learning. Implementations of PATE have been incorporated into different privacy frameworks.

🤖 ML Technology to Follow: PySyft, an open-source framework for private deep learning

Why should I know about this: PySyft is one of the most advanced frameworks available for building private deep learning models.

What is it: PySyft is a framework that enables secure, private computations in deep learning models. PySyft combines several privacy techniques, such as federated learning, secure multi-party computation and differential privacy, into a single programming model integrated into different deep learning frameworks such as PyTorch, Keras and TensorFlow. The principles of PySyft were originally outlined in a research paper and were then implemented in its open-source release.
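For readers new to secure multi-party computation, the following sketch shows additive secret sharing, one of the simplest ideas behind that family of techniques. It is plain Python and does not use or reproduce the PySyft API: the modulus `Q` and the helper names `share`, `reconstruct` and `add_shared` are assumptions made for this illustration.

```python
import secrets

# Assumed modulus for the example; all arithmetic is done modulo Q.
Q = 2**61 - 1


def share(secret: int, n_parties: int) -> list[int]:
    """Split `secret` into n random-looking shares that sum to it modulo Q."""
    shares = [secrets.randbelow(Q) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % Q)
    return shares


def reconstruct(shares: list[int]) -> int:
    """Recover the secret by summing every share modulo Q."""
    return sum(shares) % Q


def add_shared(a: list[int], b: list[int]) -> list[int]:
    """Each party adds its own two shares locally; no secret is revealed."""
    return [(x + y) % Q for x, y in zip(a, b)]


# Two private values are split among three parties.
shares_a = share(25, 3)
shares_b = share(17, 3)
total = reconstruct(add_shared(shares_a, shares_b))
print(total)  # 42
```

No single party learns either input from its own shares, yet the parties can jointly compute the sum. Frameworks build more complex operations, such as multiplication, on top of this kind of primitive.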

The core component of PySyft is an abstraction called the SyftTensor. SyftTensors are meant to represent a state or transformation of the data and can be chained together. The chain structure always has the PyTorch tensor at its head. The transformations or states embodied by the SyftTensors are accessed downward using the child attribute and upward using the parent attribute.
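The chain structure described above can be pictured with a small toy model. The classes and names below are hypothetical stand-ins written for this illustration and are not PySyft classes. They only show how a head element links downward through `child` and back upward through `parent`.

```python
class ChainNode:
    """Hypothetical stand-in for one element of a tensor chain (not a PySyft class)."""

    def __init__(self, name: str):
        self.name = name
        self.child = None   # next element down the chain
        self.parent = None  # previous element up the chain


def build_chain(names):
    """Link nodes in order and return the head of the chain."""
    nodes = [ChainNode(name) for name in names]
    for upper, lower in zip(nodes, nodes[1:]):
        upper.child = lower
        lower.parent = upper
    return nodes[0]


def walk_down(head):
    """Collect names from the head to the tail by following `child`."""
    names, node = [], head
    while node is not None:
        names.append(node.name)
        node = node.child
    return names


def walk_up(tail):
    """Collect names from the tail to the head by following `parent`."""
    names, node = [], tail
    while node is not None:
        names.append(node.name)
        node = node.parent
    return names


# The head plays the role of the PyTorch tensor; the other names are made up.
head = build_chain(["torch_tensor", "state_a", "state_b"])
tail = head.child.child
print(walk_down(head))
print(walk_up(tail))
```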

Image credit: "A generic framework for privacy-preserving deep learning" research paper

PySyft’s rich collection of privacy techniques represents a unique differentiator. But even more impressive is its simple programming model. Incorporating PySyft into models in Keras or PyTorch is relatively seamless and it doesn’t require deviating from the core structure of the program. It’s not a surprise that PySyft has been widely adopted within the deep learning community and integrated into many frameworks and platforms.

How can I use it: PySyft is open source and available at https://github.com/OpenMined/PySyft

🧠 The Quiz

After ten consecutive quizzes, we are ready to announce two winners.

Our reader with the email yas..…..@outlook.de receives an annual gift subscription for scoring 52 points across all ten quizzes. Our reader with the email gmaria….@, who scored a total of 35 points across eight quizzes, gets a 6-month subscription. Congratulations!

Now, the new quiz after reading TheSequence Edge#30. The questions are the following:

What is the essence of cryptographic private machine learning methods?

What type of private machine learning classification better describes Google’s PATE?

That was fun! Thank you. See you on Sunday 😉

TheSequence is a summary of groundbreaking ML research papers, engaging explanations of ML concepts, and exploration of new ML frameworks and platforms. TheSequence keeps you up to date with the news, trends, and technology developments in the AI field.

5 minutes of your time, 3 times a week – you will steadily become knowledgeable about everything happening in the AI space. Please contact us if you are interested in a group subscription.