🌐 Edge#197: Types of Graph Learning Tasks

In this issue:

we overview the types of graph learning tasks;

we dive into the original GNN paper;

we explore Deep Graph Library, a framework for implementing GNNs.

Enjoy the learning!

💡 ML Concept of the Day: Types of Graph Learning Tasks

Continuing with our series about graph neural networks (GNNs), we would like to discuss the different learning tasks that can be performed with this neural network structure. As discussed in Edge#195, GNNs represent a learning problem as a graph structure that describes the relationships between the different constructs in a dataset. The specific type of graph in a GNN is highly optimized for a learning task. There are three fundamental learning tasks that are tackled by modern GNNs:

Graph-Level Tasks

This type of task predicts a property of the entire graph. This is analogous to image classification, in which a label is associated with the entire input image. Examples of graph-level tasks in GNNs include graph classification, graph regression, and graph matching.

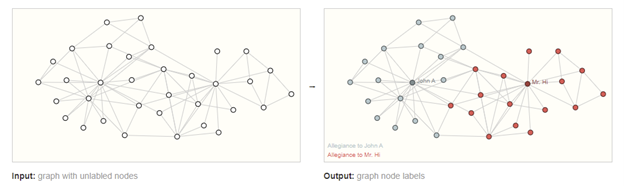

Node-Level Tasks

This type of task predicts a property of each node within a graph. For instance, node classification assigns nodes to one of several classes, node regression predicts a continuous value for each node, and node clustering partitions the nodes into several disjoint groups.

Image Credit: Google Research

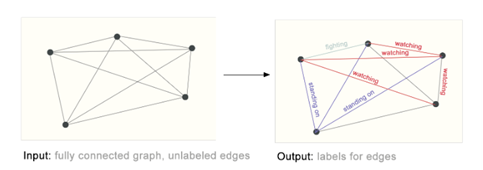

Edge-Level Tasks

This type of task focuses on the edges of a graph: classifying existing edges or predicting whether an edge exists between two nodes. This is analogous to image understanding tasks in computer vision that attempt to detect the relationships between different objects in an image.

Most GNN architectures are built around one of these types of tasks. Understanding them is therefore essential to applying GNNs effectively.
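To make the distinction concrete, here is a minimal sketch of how the three task types can read from the same set of node embeddings. Everything in it is an assumption made for illustration: the embeddings and weights are made-up numbers rather than learned values, and the helper names (`node_level`, `edge_level`, `graph_level`) are hypothetical.

```python
# Toy illustration only: embeddings and weights are made-up numbers, not learned.
import math

# Hypothetical 2-dimensional embeddings for a 4-node graph
node_embeddings = {
    0: [1.0, 0.0],
    1: [0.8, 0.2],
    2: [0.0, 1.0],
    3: [0.1, 0.9],
}


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def node_level(embeddings, weights):
    """Node-level task: one prediction per node (here, a binary class)."""
    return {n: int(dot(h, weights) > 0) for n, h in embeddings.items()}


def edge_level(embeddings, u, v):
    """Edge-level task: score a candidate edge from its two endpoint embeddings."""
    return sigmoid(dot(embeddings[u], embeddings[v]))


def graph_level(embeddings, weights):
    """Graph-level task: mean-pool all nodes into one vector, then predict once."""
    dim = len(weights)
    count = len(embeddings)
    pooled = [sum(h[i] for h in embeddings.values()) / count for i in range(dim)]
    return sigmoid(dot(pooled, weights))


weights = [1.0, -1.0]  # hypothetical classifier weights

node_labels = node_level(node_embeddings, weights)   # one label per node
link_01 = edge_level(node_embeddings, 0, 1)          # score for candidate edge (0, 1)
link_02 = edge_level(node_embeddings, 0, 2)          # score for candidate edge (0, 2)
graph_score = graph_level(node_embeddings, weights)  # one score for the whole graph
```

The only thing that changes between the three tasks is what gets scored: a single node, a pair of nodes, or a pooled summary of all nodes.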

🔎 ML Research You Should Know: The Original GNN Paper

In the paper The Graph Neural Network Model, researchers from the University of Siena introduced the concept of GNNs.

The objective: to introduce a new type of neural network that works efficiently on graph data structures.

Why is it so important: The paper marked the beginning of the GNN movement in deep learning.

Diving deeper: The original idea behind GNNs was to design neural network structures able to learn from graph structures. The intuition is that we can represent a problem using a series of nodes and connections. This is an area where traditional neural networks fail to execute well. The inspiration for GNNs comes from methods such as recursive neural networks and Markov chains. Recursive neural networks work well with directed acyclic graph data structures and learn functions that map those structures to vectors. Markov chains excel at modeling causal relationships between events represented by nodes in a graph.

The researchers presented GNNs as an extension of both the recursive neural networks and Markov chain models, retaining some of their characteristics. In their work, GNNs are characterized as supervised learning methods that extend recursive models by supporting different graph types while also extending Markov chains by introducing a learning algorithm that can learn complex relationships between objects in a graph. More specifically, the paper describes GNNs as two fundamental components:

GNN Model: The graph structure that describes the model in the form of nodes and edges. Each node in the graph is enriched with attributes in the form of vectors that encode features relevant to the node.

Learning Algorithm: The algorithm that learns the parameters of the graph based on the training dataset. Part of the algorithm is to propagate the learned information across the nodes.
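The propagation step can be sketched in a few lines. This is a simplified illustration, not the paper's algorithm: we assume scalar node states and a hand-picked update rule with a fixed `weight`, whereas the paper learns the parameters of its update function from data. The function name `propagate` and all constants are hypothetical.

```python
# Sketch of state propagation; the update rule and constants are illustrative assumptions.
def propagate(neighbors, labels, weight=0.5, tol=1e-6, max_iters=100):
    """Iterate node states until they stop changing (a fixed point).

    Each new state is the node's own label plus a damped average of its
    neighbors' current states. With |weight| < 1 the update is a
    contraction, so repeated application converges.
    """
    states = {n: 0.0 for n in neighbors}
    iteration = 0
    for iteration in range(1, max_iters + 1):
        new_states = {}
        for n, nbrs in neighbors.items():
            nbr_avg = sum(states[m] for m in nbrs) / len(nbrs) if nbrs else 0.0
            new_states[n] = labels[n] + weight * nbr_avg
        # Largest change across all nodes in this sweep
        change = max(abs(new_states[n] - states[n]) for n in neighbors)
        states = new_states
        if change < tol:
            break
    return states, iteration


# Path graph 0 - 1 - 2 where only node 0 carries a nonzero label
neighbors = {0: [1], 1: [0, 2], 2: [1]}
labels = {0: 1.0, 1: 0.0, 2: 0.0}

states, iterations = propagate(neighbors, labels)
```

Information from node 0 reaches node 2 only through node 1, so the converged states shrink with distance from node 0.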

The research paper covers both components in considerable detail. Since its publication, the paper has sparked a new area of research based on graph learning models.

🤖 ML Technology to Follow: Deep Graph Library is a Framework for Implementing Graph Neural Networks You Need to Know About

Why should I know about this: There are not many robust frameworks for implementing graph neural networks. Deep Graph Library (DGL) is one of the most complete open-source offerings in the current market.

What is it: DGL enables the creation of deep learning models that operate on graph structures. The project is maintained as an open-source release with contributors from academic institutions like New York University and NYU Shanghai and top technology companies like AWS.

DGL enables data scientists to quickly build and train graph neural networks on a specific dataset. Functionally, DGL is a Python package built on top of existing deep learning frameworks such as TensorFlow, PyTorch, and MXNet. On top of those frameworks, DGL provides a series of building blocks such as graph authoring packages, a message passing interface that lets the nodes in a graph communicate with one another, and a collection of modules for modeling graph neural networks. These building blocks are abstracted by the DGL NN module, the fundamental component used to build a model in DGL. The DGL NN module inherits from native modules such as PyTorch’s nn.Module, MXNet Gluon’s nn.Block, and TensorFlow’s Keras Layer, which facilitates integration with the underlying deep learning framework.
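To give a feel for what a message passing interface does, here is a plain-Python sketch of a single round. It is not DGL's API: the function `message_passing_round` and its parameters `message_fn` and `reduce_fn` are hypothetical names we chose to illustrate the general pattern of sending messages along edges and then aggregating them at each receiving node.

```python
# Plain-Python sketch of one message passing round; not DGL's API.
def message_passing_round(edges, features, message_fn, reduce_fn):
    """Send a message along every directed edge, then reduce each node's inbox."""
    inbox = {n: [] for n in features}
    for src, dst in edges:
        # The message is computed from the source node's current feature
        inbox[dst].append(message_fn(features[src]))
    # Nodes that receive no messages keep their current feature
    return {
        n: reduce_fn(msgs) if msgs else features[n]
        for n, msgs in inbox.items()
    }


# Directed edges as (source, destination) pairs
edges = [(0, 1), (1, 2), (0, 2)]
features = {0: 1.0, 1: 2.0, 2: 3.0}

updated = message_passing_round(
    edges,
    features,
    message_fn=lambda h: h,  # copy the source node's feature as the message
    reduce_fn=sum,           # add up all incoming messages
)
```

Swapping `message_fn` or `reduce_fn` (for example, averaging instead of summing) changes the model's behavior without touching the graph traversal, which is the appeal of separating the two.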

DGL has quickly gained popularity within the deep learning community. Recent releases have been integrated into mainstream deep learning platforms such as Amazon SageMaker.

How can I use it: The Deep Graph Library is open source and available at https://github.com/dmlc/dgl.