💠 Edge#195: A New Series About Graph Neural Networks

In this issue:

we start a new series about graph neural networks (GNN);

we observe how DeepMind showcases the potential of GNN;

we discuss Deep Graph Library, a framework for implementing GNNs.

Enjoy the learning!

💡 ML Concept of the Day: A New Series About Graph Neural Networks

After completing a series about heavy ML engineering concepts like distributed training, we plan to spend a few weeks back in deep learning theory. Specifically, we would like to focus the next few editions of TheSequence on graph neural networks (GNNs). Graphs are one of the most common data structures for describing complex relationships between entities. Social networks and search engines are among the key applications that have fast-tracked the adoption of graph data structures. Not surprisingly, there has been huge demand for deep learning models that can natively learn from graph structures.

Most modern deep learning models, such as recurrent neural networks (RNNs) or convolutional neural networks (CNNs), are fundamentally designed to work on regular, vector-based structures such as sequences and grids, and they struggle when presented with graph datasets. GNNs are an area of deep learning focused on tackling this problem. Initially proposed in 2009, GNNs have become incredibly prominent in the last few years. Conceptually, a GNN models the relationships between the nodes in a graph and produces a numeric representation of them. In a graph, each node is naturally defined by its own features and by the nodes it is connected to. The goal of a GNN is to learn, for each node, a state embedding that captures the information of its neighborhood.

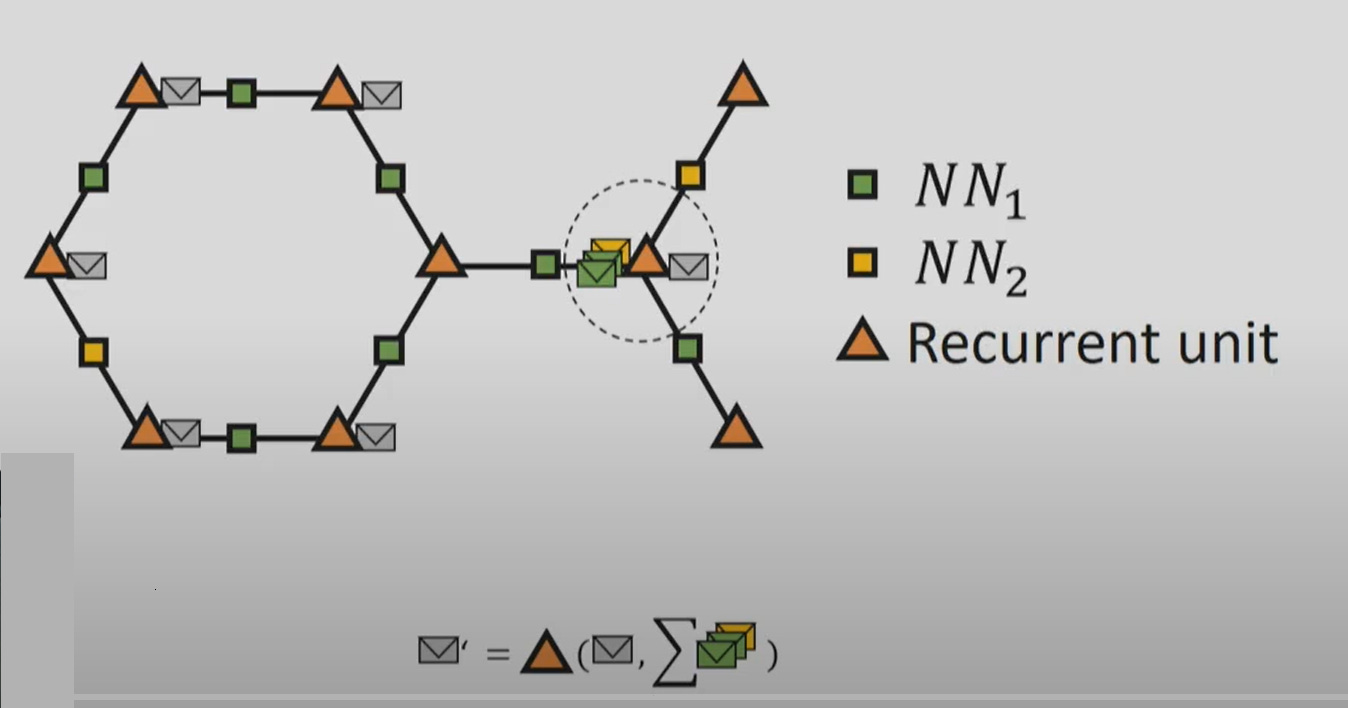

The figure above illustrates the core concepts behind a GNN. Each orange triangle represents a recurrent unit. The envelopes represent the embeddings of the nodes that will travel through the graph. Each graph edge is also replaced by a neural network to capture the information of the edge. At every timestep, each node pulls the embeddings from all its neighbors, calculates their sum, and passes that sum along with its own embedding to the recurrent unit, producing a new embedding. After the first pass, each embedding contains information about the node and its first-order neighbors. After the second pass, it also contains information about the second-order neighbors, and the process continues until each node has received information from all the other nodes it can reach in the graph.
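The propagation described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the architecture from the figure: the recurrent unit is stood in for by a single `tanh` update, the per-edge neural networks are omitted, and the names `message_passing_step`, `w_self`, and `w_neigh` are hypothetical. The weights are set to the identity and the initial embeddings are one-hot, so a nonzero entry in a node's embedding simply marks which nodes' information has reached it.

```python
import numpy as np


def message_passing_step(adjacency, embeddings, w_self, w_neigh):
    """One timestep: every node sums its neighbors' embeddings and
    combines that sum with its own embedding to get a new embedding."""
    # Row i of neighbor_sum is the sum of the embeddings of node i's neighbors.
    neighbor_sum = adjacency @ embeddings
    # Simplified stand-in for the recurrent unit (assumption, not the figure's unit).
    return np.tanh(embeddings @ w_self + neighbor_sum @ w_neigh)


# Toy path graph: 0 - 1 - 2 - 3
adjacency = np.array(
    [
        [0, 1, 0, 0],
        [1, 0, 1, 0],
        [0, 1, 0, 1],
        [0, 0, 1, 0],
    ],
    dtype=float,
)

# One-hot initial embeddings: node i starts out knowing only about itself.
h0 = np.eye(4)

# Identity weights (illustrative; a trained GNN would learn these).
w_self = np.eye(4)
w_neigh = np.eye(4)

h1 = message_passing_step(adjacency, h0, w_self, w_neigh)
h2 = message_passing_step(adjacency, h1, w_self, w_neigh)
h3 = message_passing_step(adjacency, h2, w_self, w_neigh)

# Which nodes have influenced node 0 after each pass?
for step, h in enumerate([h0, h1, h2, h3]):
    print(step, np.flatnonzero(h[0] > 0).tolist())
```

In this toy setup, node 0's embedding picks up node 1 after the first pass, node 2 after the second, and node 3 after the third, which mirrors the first-order and second-order neighborhood growth described in the text. With learned weights the embeddings would no longer be this easy to read, but the flow of information is the same.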

Over the next few weeks, we will dive deeper into some of the concepts behind GNNs.