👏 Edge#114: AI2’s Longformer is a Transformer Model for Long

What’s New in AI is a deep dive into one of the freshest research papers or technology frameworks worth your attention. Our goal is to keep you up to date with new developments in AI in a way that complements the concepts we are debating in other editions of our newsletter.

💥 What’s New in AI: AI2’s Longformer is a Transformer Model for Long

Transformer architectures have revolutionized many areas of natural language processing (NLP). Question answering, text summarization, classification, and machine translation are some of the NLP tasks that have reached new milestones by relying on transformer architectures. The self-attention mechanism at the core of transformer models has proven remarkably effective at processing sequences of text and capturing contextual information. However, transformers are not without limitations. Despite their powerful capabilities, most transformer models struggle with long text sequences, largely because of the memory and computational cost of the self-attention modules. In 2020, researchers from the Allen Institute for AI (AI2) published a paper unveiling Longformer, a transformer architecture optimized for processing long-form text.

The challenge of using self-attention for long-form text becomes evident once we understand its internal mechanics. Conceptually, self-attention relates each word in the input to the other words: every word (token) in the input attends to every other word in the input. For a sequence of n tokens, this means computing n × n attention scores, so the memory and computational cost of self-attention grow quadratically with the length of the input sequence, which makes it impractical for long text sequences. The default way transformer models handle such inputs is by partitioning long sequences into smaller ones, but that creates its own set of problems.
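To make the quadratic cost concrete, here is a minimal Python sketch of naive self-attention. It is a simplification rather than a real transformer layer: it assumes a single head and uses the raw input vectors as queries, keys, and values (no learned projections). The function name `full_self_attention` is hypothetical. The sketch counts how many query-key scores it computes, which is where the n × n cost comes from.

```python
import math


def full_self_attention(x):
    """Naive single-head scaled dot-product self-attention.

    Simplifying assumption: queries, keys, and values are the raw input
    vectors (no learned projections). Returns the output vectors and the
    number of query-key scores computed.
    """
    d = len(x[0])
    scale = 1.0 / math.sqrt(d)
    outputs, num_scores = [], 0
    for q in x:  # every token acts as a query...
        # ...and is scored against every token in the sequence (n scores per query)
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in x]
        num_scores += len(scores)
        # Numerically stable softmax over all n positions
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Output is the attention-weighted average of the value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return outputs, num_scores


for n in (8, 16, 32):
    tokens = [[float(i % 3), float(i % 5)] for i in range(n)]
    _, count = full_self_attention(tokens)
    print(n, count)  # prints "8 64", then "16 256", then "32 1024"
```

Doubling the sequence length quadruples the number of scores, and the same n × n score matrix is what a standard implementation has to hold in memory.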

To work efficiently with long-form text, memory and computational cost should grow close to linearly with the length of the text.

Enter the Longformer Architecture

The simplest way to think about the Longformer architecture is as a transformer-based model optimized for long sequence processing. What does that mean exactly? Longformer uses a different type of attention mechanism whose cost scales linearly with the length of the input sequence. More specifically, the Longformer architecture replaces the full self-attention found in traditional transformer architectures with a sparser attention pattern to facilitate scalability.

The idea of modifying the dynamics of the self-attention mechanism comes as an alternative to traditional methods that work by truncating the input in different ways. For instance, traditional transformer models like BERT have a limit of 512 tokens per input sequence (roughly 10 to 15 sentences). This limitation motivated the researchers: they wanted models that can process longer chunks of text, such as full articles, Wikipedia pages, or even an entire book.

The simplest way to address this limitation is to truncate the document. Other approaches partition the document into chunks, sometimes overlapping, that can be processed in parallel and whose outputs are then combined into a final result. These techniques are often used in classification tasks. Another approach, adopted in question-answering models, is a two-stage model in which the first stage retrieves relevant documents or passages and passes them to the second stage for answer extraction. Despite their effectiveness, these techniques suffer from information loss: truncating or splitting the input discards context that falls outside the kept text or spans chunk boundaries.
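The two input-shortening strategies can be sketched in a few lines of Python. This is an illustration under assumptions: `truncate` and `overlapping_chunks` are hypothetical helpers operating on a list of token ids, and the 512-token window and 384-token stride are example values, not settings from the paper. How per-chunk outputs are combined (averaging, voting, and so on) is task-specific and left out.

```python
def truncate(tokens, max_len=512):
    """Keep only the first max_len tokens; everything after is discarded."""
    return tokens[:max_len]


def overlapping_chunks(tokens, max_len=512, stride=384):
    """Split tokens into windows of at most max_len tokens.

    Consecutive chunks start `stride` tokens apart, so they overlap by
    max_len - stride tokens. Each chunk can be encoded independently by a
    fixed-length model and the results combined afterwards.
    """
    if not 0 < stride <= max_len:
        raise ValueError("stride must be in (0, max_len]")
    chunks, start = [], 0
    while True:
        chunks.append(tokens[start:start + max_len])
        if start + max_len >= len(tokens):  # last chunk reaches the end
            break
        start += stride
    return chunks


doc = list(range(1200))  # stand-in for 1,200 token ids
print(len(truncate(doc)))  # 512
print([len(c) for c in overlapping_chunks(doc)])  # [512, 512, 432]
```

Truncation drops 688 of the 1,200 tokens, while chunking keeps every token but no single chunk sees the whole document, which is exactly the kind of information loss Longformer aims to avoid.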

An intriguing alternative to truncation is to modify the dynamics of the self-attention mechanism itself. This is the path followed by the Longformer model. Instead of the full attention mechanism used in most transformer architectures, Longformer combines three complementary attention patterns that provide both local and global attention:

Sliding Window

Dilated Sliding Window

Global Attention (full self-attention)

Sliding Window Attention

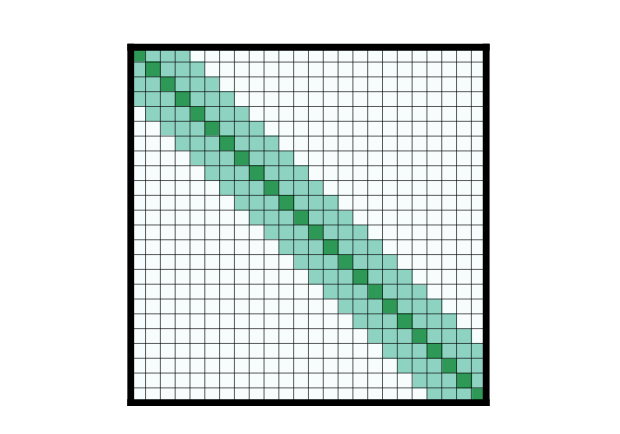

The sliding window pattern gives each token a fixed-size attention window centered on it: with a window size w, each token attends to w/2 tokens on each side. This limits each token's attention to a small set of neighboring tokens, so the cost grows as n × w rather than n × n. This attention pattern might seem limiting, but stacking layers gives a multi-layer transformer a large receptive field: with l layers, the receptive field at the top layer spans l × w tokens, so the top layers can build representations that incorporate information from across a wide span of the input. This is very similar to how stacked layers in Convolutional Neural Networks (CNNs) work.
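Here is a minimal sketch of the sliding window pattern as a boolean attention mask, assuming the convention of w/2 tokens on each side of a token plus the token itself. The helper names are hypothetical. A real implementation would not materialize an n × n mask, since that would reintroduce the quadratic memory cost; the mask here is only for visualization.

```python
def sliding_window_neighbors(i, n, w):
    """Positions token i attends to: w // 2 on each side plus itself, clipped to [0, n)."""
    half = w // 2
    return list(range(max(0, i - half), min(n, i + half + 1)))


def sliding_window_mask(n, w):
    """n x n boolean mask: mask[i][j] is True if token i may attend to token j."""
    mask = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in sliding_window_neighbors(i, n, w):
            mask[i][j] = True
    return mask


def receptive_field(num_layers, w):
    """Span seen by the top layer of a stack of sliding-window layers: l * w."""
    return num_layers * w


for row in sliding_window_mask(8, 2):
    print("".join("x" if allowed else "." for allowed in row))
# xx......
# xxx.....
# .xxx....
# ..xxx...
# ...xxx..
# ....xxx.
# .....xxx
# ......xx

print(receptive_field(num_layers=12, w=512))  # 6144 (example values)
```

Each row has at most w + 1 allowed entries, so the number of allowed pairs grows linearly with n for a fixed window.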

Dilated Sliding Window Attention

To extend each token's reach further, the Longformer architecture dilates the sliding window. In this approach, the window keeps its size w, but the attended positions are spaced d tokens apart (the dilation) instead of being contiguous. The gaps force the window to span wider, capturing more distant parts of the sequence within a single layer. With l layers, the receptive field grows to l × d × w tokens, so the dilated sliding window pattern increases the reach of attention without increasing computation. This approach is analogous to dilated CNNs.
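The dilated variant changes only which neighbors are selected. This sketch assumes the dilation d is the step between attended positions, so d = 1 recovers the plain sliding window and d = 2 leaves one-token gaps. Each token still attends to w + 1 positions, but they are spread over d times the distance. The function names are hypothetical.

```python
def dilated_window_neighbors(i, n, w, d):
    """Positions token i attends to under a dilated sliding window.

    Assumed convention: w // 2 positions on each side, spaced d apart
    (d = 1 is the ordinary sliding window). Out-of-range positions are dropped.
    """
    half = w // 2
    # Offsets -half*d, ..., -d, 0, d, ..., half*d: same count, wider span
    candidates = (i + k * d for k in range(-half, half + 1))
    return [j for j in candidates if 0 <= j < n]


def dilated_receptive_field(num_layers, w, d):
    """Top-layer span of stacked dilated sliding-window layers: l * d * w."""
    return num_layers * d * w


for d in (1, 2, 3):
    print(d, dilated_window_neighbors(10, 32, w=4, d=d))
# 1 [8, 9, 10, 11, 12]
# 2 [6, 8, 10, 12, 14]
# 3 [4, 7, 10, 13, 16]
```

The number of attended positions, and therefore the computation, stays at five for every dilation; only the span they cover grows.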

Global Attention + Dilated Sliding Window Attention

The sliding window patterns are well suited to capturing local context, but they lack the flexibility to learn task-specific representations. To address this, the Longformer architecture adds full (global) attention, but only at a few preselected input locations, such as the [CLS] token in classification or the question tokens in question answering. This global attention is symmetric: a token with global attention attends to all tokens across the sequence, and all tokens in the sequence attend to it.
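Adding global attention to the sliding window can be sketched as a mask in which the rows and columns of the selected positions are fully enabled. Assumptions: `longformer_style_mask` is a hypothetical helper, position 0 plays the role of a [CLS]-like token purely for illustration, and only the attention pattern is modeled (the paper also uses separate linear projections for the global attention scores, which this sketch omits).

```python
def longformer_style_mask(n, w, global_positions):
    """Boolean n x n mask combining sliding-window and global attention.

    mask[i][j] is True when token i may attend to token j.
    Local rule: |i - j| <= w // 2.
    Global rule (symmetric): a global token attends to every token,
    and every token attends to each global token.
    """
    half = w // 2
    g = set(global_positions)
    return [[abs(i - j) <= half or i in g or j in g for j in range(n)]
            for i in range(n)]


for row in longformer_style_mask(8, 2, global_positions=[0]):
    print("".join("x" if allowed else "." for allowed in row))
# xxxxxxxx
# xxx.....
# xxxx....
# x.xxx...
# x..xxx..
# x...xxx.
# x....xxx
# x.....xx
```

The first row and first column are full, while the rest of the mask keeps the narrow diagonal band, so a handful of global tokens adds only O(n) extra pairs.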

Gracefully Scaling for Long Sequences

Combining the windowed and global attention patterns allows the Longformer architecture to process long input sequences without drastically increasing memory or computation. As you can see in the following figure, Longformer's memory usage scales linearly with the input length, in contrast to the quadratic scaling of full self-attention in traditional transformer architectures.
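The scaling difference can be checked by counting the (query, key) pairs each pattern allows, a rough proxy for attention memory. This sketch assumes a window of w = 256 and a single global token at position 0, both example values, and the helper names are hypothetical. The counts ignore heads, layers, and implementation details, so they illustrate the trend rather than reproduce the measured memory in the figure.

```python
def full_attention_pairs(n):
    """Full self-attention: every token attends to every token."""
    return n * n


def longformer_pairs(n, w, global_positions):
    """Count allowed (query, key) pairs for sliding-window + global attention
    without materializing the n x n mask."""
    half = w // 2
    global_set = set(global_positions)
    total = 0
    for i in range(n):
        if i in global_set:
            total += n  # a global token attends to every position
            continue
        lo, hi = max(0, i - half), min(n - 1, i + half)
        local = hi - lo + 1
        # Global tokens outside the local window are still attended to
        extra = sum(1 for j in global_set if j < lo or j > hi)
        total += local + extra
    return total


previous = None
for n in (1024, 2048, 4096, 8192):
    full = full_attention_pairs(n)
    sparse = longformer_pairs(n, w=256, global_positions=[0])
    growth = "" if previous is None else f"  (x{sparse / previous:.2f} vs. previous n)"
    print(f"n={n:5d}  full={full:>12,}  window+global={sparse:>12,}{growth}")
    previous = sparse
```

Each doubling of n multiplies the full-attention count by exactly 4, while the windowed count grows by roughly 2, which is the linear behavior described above.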

Conclusion

The Longformer model is a significant contribution to applying transformer architectures to long-document processing, consistently outperforming other approaches on a wide range of document-level NLP tasks without shortening the long input. Fields such as healthcare, law, and government, which are full of scenarios that require applying NLP to large collections of long documents, can be immediate beneficiaries of the Longformer techniques. Given its importance, Longformer has been implemented in libraries such as Hugging Face Transformers. The code for the original implementation is also available at https://github.com/allenai/longformer