☝️ CoreWeave is allocating $50 million to scale the best compute infrastructure for ML

How are they going to do it? Read along.

More Machine Learning in More Ways

Now more than ever, ML shops of all sizes are optimizing their cloud technology stack and seeing both top-line and bottom-line benefits. Founders, data scientists, engineers, and DevOps teams are able to reduce inference latency by 50%, serve requests 300% faster, and lower their costs by 75%.

With our latest round of funding, we're scaling up to help the world's most innovative companies train and serve large language models faster, develop innovations in early-stage drug discovery, and so much more.

I hope you are just as excited about these developments as I am. If there's any way that CoreWeave can help your company solve compute-intensive challenges, please don't hesitate to ask us!

Warmest Regards,

Brian Venturo

Co-Founder and CTO, CoreWeave

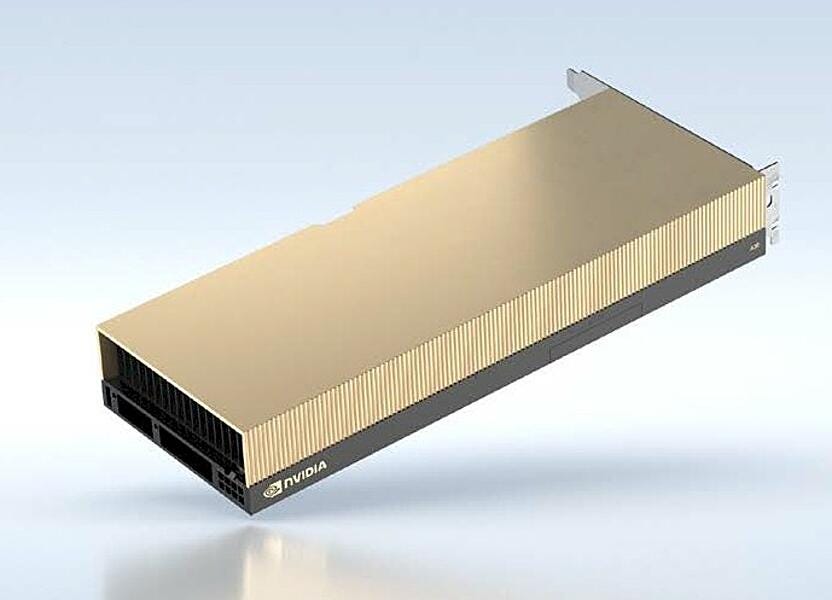

The Largest Fleet of A40 GPUs in North America

NVIDIA's latest A40 hardware is the world's most powerful data center GPU. As NVIDIA's first Elite partner, we're adding thousands of these (alongside our current fleet of A100s, V100s, and Quadro RTXs) to our own five data centers across the United States. AI training performance is accelerated by up to 3x without any code changes, so data scientists and engineers can now get access to virtually unlimited on-demand compute resources while improving the quality of their training and inference models within our specialized cloud.

How AI Dungeon Improved 3 Critical KPIs

With a seamless migration to CoreWeave, AI Dungeon was able to cut spin-up times by 4X, reduce inference latency by 50%, and lower computing costs by 76%.

Powering a World of Possibility with GPT-J

Our latest API Inference Service offers one-click GPT-J deployment, eliminating infrastructure overhead and giving clients access to the full range of CoreWeave GPUs, including NVIDIA A5000s, which deliver unmatched performance-adjusted cost.

About CoreWeave

CoreWeave is a specialized cloud provider delivering access to a massive scale of accelerated compute on top of the industry's fastest and most flexible infrastructure for HPC workloads. We are NVIDIA's first Elite CSP Partner for compute, and ML clients find that we're up to 50-80% less expensive and 35X faster than competing clouds. To learn more about our full capabilities and services, click here or get in touch with us.